Open Agile Architecture

A Standard of The Open Group

Copyright © 2019-2020, The Open Group

All rights reserved.

No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior permission of the copyright owners.

Any use of this publication for commercial purposes is subject to the terms of the Annual Commercial License relating to it. For further information, see www.opengroup.org/legal/licensing.

ISBN: 1-947754-62-1

Document Number: C208

Published by The Open Group, September 2020.

This document is updated to apply Technical Corrigendum U221, and re-issued October 2022.

Comments relating to the material contained in this document may be submitted to:

The Open Group, Apex Plaza, Forbury Road, Reading, Berkshire, RG1 1AX, United Kingdom

or by electronic mail to:

ogspecs@opengroup.org

Preface

The Open Group

The Open Group is a global consortium that enables the achievement of business objectives through technology standards. Our diverse membership of more than 800 organizations includes customers, systems and solutions suppliers, tools vendors, integrators, academics, and consultants across multiple industries.

The mission of The Open Group is to drive the creation of Boundaryless Information Flow™ achieved by:

- Working with customers to capture, understand, and address current and emerging requirements, establish policies, and share best practices
- Working with suppliers, consortia, and standards bodies to develop consensus and facilitate interoperability, to evolve and integrate specifications and open source technologies
- Offering a comprehensive set of services to enhance the operational efficiency of consortia
- Developing and operating the industry’s premier certification service and encouraging procurement of certified products

Further information on The Open Group is available at www.opengroup.org.

The Open Group publishes a wide range of technical documentation, most of which is focused on development of Standards and Guides, but which also includes white papers, technical studies, certification and testing documentation, and business titles. Full details and a catalog are available at www.opengroup.org/library.

This Document

This document is The Open Group Open Agile Architecture™ Standard, also known as The Open Group O-AA™ Standard. It has been developed and approved by The Open Group.

This document follows a modular structure and is organized in the following parts:

- Part 1: The O-AA Core describes the fundamental concepts of Agile Architecture
- Part 2: The O-AA Building Blocks describes the O-AA building blocks

Examples and case studies are provided as illustrations to foster understanding of the standard. Examples and case studies are not a normative part of the standard and therefore do not include requirements.

The target audience for this document includes:

- Agilists who need to understand the importance of architecture when shifting toward an Agile at scale model, and who want to learn architecture skills
- Enterprise Architects, solution architects, security architects, and software architects who want to stay relevant in an Agile at scale world and who need to learn new architecture skills for the digital age
- Business managers and executives who need to learn the importance of the architecture discipline, and who need to influence architecture decisions

Trademarks

ArchiMate, DirecNet, Making Standards Work, Open O logo, Open O and Check Certification logo, Platform 3.0, The Open Group, TOGAF, UNIX, UNIXWARE, and the Open Brand X logo are registered trademarks and Boundaryless Information Flow, Build with Integrity Buy with Confidence, Commercial Aviation Reference Architecture, Dependability Through Assuredness, Digital Practitioner Body of Knowledge, DPBoK, EMMM, FACE, the FACE logo, FHIM Profile Builder, the FHIM logo, FPB, Future Airborne Capability Environment, IT4IT, the IT4IT logo, O-AA, O-DEF, O-HERA, O-PAS, Open Agile Architecture, Open FAIR, Open Footprint, Open Process Automation, Open Subsurface Data Universe, Open Trusted Technology Provider, OSDU, Sensor Integration Simplified, SOSA, and the SOSA logo are trademarks of The Open Group.

Agile Alliance is a registered trademark of Agile Alliance.

Amazon, Amazon Web Services, and AWS are trademarks of Amazon.com.

Apache Spark is a trademark of the Apache Software Foundation.

Azure is a registered trademark of Microsoft Corporation.

Capital One and Auto Navigator are registered trademarks of Capital One.

Compaq is a trademark of Hewlett-Packard Development Company, LP.

DEC is a trademark of Digital Equipment Corporation.

eBay is a registered trademark of eBay, Inc.

Facebook is a registered trademark of Facebook, Inc.

Fannie Mae is a registered trademark of Fannie Mae.

Flink is a registered trademark of the Apache Software Foundation.

Ford is a trademark of the Ford Motor Company.

Forrester is a registered trademark of Forrester Research, Inc.

Freddie Mac is a registered trademark of Freddie Mac.

Gartner is a registered trademark of Gartner, Inc.

General Electric is a registered trademark of General Electric Company.

Google is a registered trademark of Google LLC.

Grafana is a trademark of Coding Instinct AB.

IETF is a registered trademark of the IETF Trust.

Java is a registered trademark of Oracle and/or its affiliates.

JPMorgan Chase is a trademark of JPMorgan Chase & Co.

Kafka is a trademark of the Apache Software Foundation.

Li & Fung is a trademark of Li & Fung (BVI) Limited.

loanDepot is a registered trademark of loanDepot.com, LLC.

Microsoft and Microsoft Teams are registered trademarks of Microsoft Corporation.

MQSeries is a registered trademark of International Business Machines (IBM) Corporation.

Netflix is a registered trademark of Netflix, Inc.

Poiray is a registered trademark of Poiray International.

Pomodoro Technique is a registered trademark of Cirillo Consulting GmbH.

Prometheus is a trademark of the Linux Foundation.

Python is a registered trademark of the Python Software Foundation.

Quicken Loans is a registered service mark of Intuit, Inc.

R is a registered trademark of the R Foundation.

SAS software is a registered trademark of the SAS Institute, Inc.

Scaled Agile Framework and SAFe are registered trademarks of Scaled Agile, Inc.

Scrum Alliance is a registered trademark of Scrum Alliance, Inc.

Slack is a trademark of Slack Technologies, Inc.

Spotify is a trademark of Spotify AB.

Swatch is a registered trademark of the Swatch Group.

Tableau is a registered trademark of Tableau Software, LLC.

Teradata is a trademark of Teradata Corporation.

Toyota is a registered trademark of Toyota Motor Corporation.

Walmart is a registered trademark of Walmart.

Wells Fargo is a trademark of Wells Fargo & Company.

Wikipedia is a registered trademark of the Wikimedia Foundation, Inc.

Zara is a trademark of Industria de Diseno Textil, SA (Inditex, SA).

All other brands, company, and product names are used for identification purposes only and may be trademarks that are the sole property of their respective owners.

Acknowledgements

The Open Group gratefully acknowledges the contribution of the following people in the development of this document:

- Miguel de Andrade
- Jean-Marc Bunouf
- Paddy Fagan
- Jérémie Grodziski
- Peter Haviland
- Frédéric Le
- Jean-Pierre Le Cam
- Antoine Lonjon
- Eamonn Moriarty
- Jérôme Régnier
- Steve Nicholls
- Andrew Missen

The Open Group gratefully acknowledges the following reviewers who participated in the Company Review of this document:

- Remy Alexander
- Fernando Bucci
- James Doss
- Maurice Driessen
- Chris Forde
- Christopher Frost
- Mats Gejnevall
- Sonia Gonzalez
- Angela Graves
- Andrew Josey
- Marinus F. Kok
- Ben Kooistra
- David Lounsbury
- Chalon Mullins
- Oliver F. Nandico
- Miroslaw Prywata
- James Rhyne
- Sriram Sabesan
- Kalpesh Sharma
- Robert Weisman

Referenced Documents

The following documents are referenced in this standard.

(Please note that the links below are good at the time of writing but cannot be guaranteed for the future.)

Normative References

This document does not contain any normative references at the time of publication. These may be added in a future release.

Informative References

-

[Adkins 2020] Building Secure and Reliable Systems, Best Practices for Designing, Implementing, and Maintaining Systems, by Heather Adkins, Betsy Beyer, Paul Blankinship, Ana Oprea, Piotr Lewandowski, and Adam Stubblefield, published by O’Reilly Media, March 2020; refer to: https://landing.google.com/sre/static/pdf/Building_Secure_and_Reliable_Systems.pdf

-

[Adler 2020] Bending the Law of Unintended Consequences: A Test-Drive Method for Critical Decision-Making in Organizations, by Richard M. Adler, 2020, published by Springer International Publishing

-

[Agile Alliance] Agile Glossary, published by the Agile Alliance®; refer to: www.agilealliance.org/agile101/agile-glossary/

-

[Agile Manifesto] Manifesto for Agile Software Development, 2001; refer to: www.agilemanifesto.org/

-

[Andreessen 2011] Why Software Is Eating The World, by Marc Andreessen, August 2011, published in the Wall Street Journal; refer to: www.wsj.com/articles/SB10001424053111903480904576512250915629460, retrieved April 25, 2020

-

[ANSI/IEEE] Standard Glossary of Software Engineering Terminology, STD-729-1991, published by ANSI/IEEE

-

[Atwood 2006] The Last Responsible Moment, by Jeff Atwood, October 2006; refer to: www.blog.codinghorror.com/the-last-responsible-moment/

-

[Auto Navigator] Capital One® Auto Navigator® App; refer to: www.capitalone.com/cars/

-

[Beaujean 2006] The “Moment of Truth” in Customer Service, by Marc Beaujean, Jonathan Davidson, and Stacey Madge, February 2006, published by McKinsey & Company; refer to: www.mckinsey.com/business-functions/organization/our-insights/the-moment-of-truth-in-customer-service

-

[Berdjag 2019] Automation Challenges of Socio-Technical Systems, by Denis Berdjag, Choubeila Maaoui, Mohamed Sallak, and Frederic Vanderhaegen, July 2019, published by Wiley-ISTE

-

[Beyer 2016] Site Reliability Engineering: How Google Runs Production Systems, by Betsy Beyer, Chris Jones, Jennifer Petoff, and Niall Richard Murphy, published by O’Reilly Media; refer to: https://landing.google.com/sre/sre-book/toc/index.html

-

[Beyer 2018] The Site Reliability Workbook: Practical Ways to Implement SRE, by Betsy Beyer, Niall Richard Murphy, David K. Rensin, Kent Kawahara, and Stephen Thorne, published by O’Reilly Media, July 2018; refer to: https://landing.google.com/sre/workbook/toc/

-

[Blumberg 2018] Five Enterprise-Architecture Practices that Add Value to Digital Transformations, by Sven Blumberg, Olivier Bossert, and Jan Sokalski, November 2018, published by McKinsey & Company; refer to: www.mckinsey.com/business-functions/mckinsey-digital/our-insights/five-enterprise-architecture-practices-that-add-value-to-digital-transformations

-

[Bradley 2018] Strategy Beyond the Hockey Stick: People, Probabilities, and Big Moves to Beat the Odds, by Chris Bradley, Martin Hirt, and Sven Smit, March 2018, published by John Wiley & Sons

-

[Brandenburger 2019] Strategy Needs Creativity, by Adam Brandenburger, March-April 2019, published in the Harvard Business Review

-

[Brandolini 2019] Introducing EventStorming, by Alberto Brandolini, last updated on August 23, 2019, published as an ebook on Leanpub; refer to: www.leanpub.com/introducing_eventstorming

-

[Brosseau 2019] The Journey to an Agile Organization, by Daniel Brosseau, Sherina Ebrahim, Christopher Handscomb, and Shail Thaker, May 2019, published by McKinsey & Company

-

[Burton 2014] Leverage Business Capability Modeling to Integrate Strategy With Execution, by Betsy Burton, Gartner Vice President & Distinguished Analyst, 2014

-

[C4 Model] The C4 Model for Visualising Software Architecture, Context, Containers, Components and Code; refer to: https://c4model.com

-

[Cagan 2018] Inspired: How to Create Tech Products Customers Love, by Marty Cagan, January 2018, published by John Wiley & Sons

-

[Campbell 2017] Operating Model Canvas: Aligning Operations and Organization with Strategy, by Andrew Campbell, Mikel Gutierrez, and Mark Lancelott, April 2017, published by Van Haren Publishing

-

[Charan 2019] The Amazon Management System: The Ultimate Digital Business Engine That Creates Extraordinary Value for Both Customers and Shareholders, by Ram Charan and Julia Yang, December 2019, published by Ideapress Publishing

-

[Christensen 2013] The Innovator’s Solution: Creating and Sustaining Successful Growth, by Clayton M. Christensen and Michael E. Raynor, November 2013, published by Harvard Business Review Press

-

[Christensen 2016] Know Your Customers’ “Jobs-To-Be-Done”, by Clayton M. Christensen, Karen Dillon, David S. Duncan, and Taddy Hall, September 2016, published in the Harvard Business Review

-

[CJCSM 2005] Operation of the Joint Capabilities Integration and Development System, May 2005, published in the Chairman of the Joint Chiefs of Staff Manual; refer to: www.dau.edu/cop/e3/DAU%20Sponsored%20Documents/CJCSM%203170.01B.pdf

-

[CloudEvents] CloudEvents; refer to: www.cloudevents.io/

-

[Cockburn 2005] Hexagonal Architecture, by Alistair Cockburn; refer to: www.alistair.cockburn.us/hexagonal-architecture/

-

[Colyer 2020] Meaningful Availability, a blog post by Adrian Colyer on a paper by Hauer et al., NSDI, February 2020; refer to: https://www.blog.acolyer.org/2020/02/26/meaningful-availability/

-

[Coplien 2010] Lean Architecture, by James Coplien and Gertrud Bjørnvig, July 2010, published by Wiley

-

[Crawley 2016] Systems Architecture: Strategy and Product Development for Complex Systems, by Edward Crawley, Bruce Cameron, and Daniel Selva, 2016, published by Pearson Education Limited

-

[Cusumano 1998] Thinking Beyond Lean: How Multi Project Management is Transforming Product Development at Toyota and Other Companies, by Michael A. Cusumano and Kentaro Nobeoka, September 1998, published by Free Press

-

[Cusumano 2020] The Future of Platforms, by Michael A. Cusumano, David B. Yoffie, and Annabelle Gawer, February 2020, published by The MIT Press

-

[Day 2019] See Sooner, Act Faster: How Vigilant Leaders Thrive in an Era of Digital Turbulence, by George S. Day and Paul J. H. Schoemaker, October 2019, published by The MIT Press

-

[Dehghani 2019] How to Move Beyond a Monolithic Data Lake to a Distributed Data Mesh, by Zhamak Dehghani, May 2019; refer to: www.martinfowler.com/articles/data-monolith-to-mesh.html, retrieved January 20, 2020

-

[Dennis 2006] Getting the Right Things Done: A Leader’s Guide to Planning and Execution, by Pascal Dennis, December 2006, published by Lean Enterprise Institute

-

[DevOps 2015 & 2017] 2015 State of DevOps Report; refer to: www.researchgate.net/publication/302566896_2015_State_of_DevOps_Report and 2017 State of DevOps Report; refer to: www.services.google/fh/files/misc/state-of-devops-2017.pdf, retrieved January 20, 2020

-

[De Weck 2016] Engineering Systems – Meeting Human Needs in a Complex Technological World, by Olivier L. de Weck, Daniel Roos, and Christopher L. Magee, September 2016, published by MIT Press

-

[DORA State of DevOps Report 2019] DevOps Research & Assessment (DORA) 2019 State of DevOps Report; refer to: https://services.google.com/fh/files/misc/state-of-devops-2019.pdf, retrieved May 3, 2020

-

[DPBoK 2020] The Digital Practitioner Body of Knowledge™ Standard (the DPBoK™ Standard), a standard of The Open Group (C196), January 2020, published by The Open Group; refer to: www.opengroup.org/library/c196

-

[Erder 2016] Continuous Architecture: Sustainable Architecture in an Agile and Cloud-Centric World, by Murat Erder and Pierre Pureur, Elsevier, November 2015, published by Morgan Kaufmann

-

[Evans 2003] Domain-Driven Design: Tackling Complexity in the Heart of Software, by Eric Evans, August 2003, published by Addison-Wesley Professional

-

[Event Storming] Event Storming Workshop Format; refer to: www.eventstorming.com/

-

[Fielding 2000] Architectural Styles and the Design of Network-Based Software Architectures, by Roy Fielding; published by the University of California; refer to: www.ics.uci.edu/fielding/pubs/dissertation/fielding_dissertation.pdf

-

[Ford 2017] Building Evolutionary Architectures, by Neal Ford, Patrick Kua, and Rebecca Parsons, September 2017, published by O’Reilly

-

[Forrester] Forrester® Research; refer to: www.forrester.com/Customer-Journey

-

[Forsgren 2018] Accelerate: The Science of Lean Software and DevOps: Building and Scaling High Performing Technology Organizations, by Nicole Forsgren, Jez Humble, and Gene Kim, April 2018, published by Trade Select

-

[Fountaine 2019] Building the AI-Powered Organization: the Main Challenge isn’t Technology, it’s Culture, by Tim Fountaine, Brian McCarthy, and Tamim Saleh, July-August 2019, published in the Harvard Business Review

-

[Fowler] ExpositionalArchitecture, by Martin Fowler; refer to: www.martinfowler.com/bliki/ExpositionalArchitecture.html

-

[Fowler 2004] StranglerFigApplication, by Martin Fowler, June 2004; refer to: www.martinfowler.com/bliki/StranglerApplication.html

-

[Fowler 2013] Continuous Delivery, by Martin Fowler, May 2013; refer to: www.martinfowler.com/bliki/ContinuousDelivery.html

-

[Fowler 2014] Sacrificial Architecture, by Martin Fowler, October 2014; refer to: www.martinfowler.com/bliki/SacrificialArchitecture.html

-

[Fowler 2015] Making Architecture Matter – Martin Fowler Keynote, by Martin Fowler, July 2015, uploaded by O’Reilly Media; refer to: www.youtube.com/watch?v=DngAZyWMGR0

-

[Fowler 2019] Refactoring: Improving the Design of Existing Code, by Martin Fowler, January 2019, published by Addison-Wesley

-

[Friis Dam 2020] Personas – A Simple Introduction, by Rikke Friis Dam and Yu Siang Teo, 2020, published by the Interaction Design Foundation; refer to: www.interaction-design.org/literature/article/personas-why-and-how-you-should-use-them

-

[Furr 2019] Digital Doesn’t Have to Be Disruptive: The Best Results Can Come From Adaptation Rather Than Reinvention, by Nathan Furr and Andrew Shipilov, July-August 2019, published in the Harvard Business Review

-

[George 2005] Fast Innovation: Achieving Superior Differentiation, Speed to Market, and Increased Profitability, by Michael George, James Works, and Kimberly Watson-Hemphill, July 2005, published by McGraw-Hill Education

-

[George 2018] Integrating Around the Consumer: A Path Forward for the Global Apparel Manufacturing Supply Chain, by Jon George and Peter Ting, November 2018, published by the Christensen Institute; refer to: www.christenseninstitute.org/wp-content/uploads/2019/03/Integrating-around-the-consumer-1.pdf

-

[Gilbreth 1921] Process Charts: First Steps in Finding the One Best Way to Do Work, by F. B. Gilbreth and L. M. Gilbreth, December 1921, presented at The Annual Meeting of The American Society of Mechanical Engineers

-

[GoF 1994] Design Patterns: Elements of Reusable Object-Oriented Software, by Erich Gamma, Richard Helm, Ralph Johnson, and John Vlissides, October 1994, published by Addison-Wesley

-

[Greyser 2019] What Does Your Corporate Brand Stand For?, by Stephen A. Greyser and Mats Urde, January-February 2019, published in the Harvard Business Review

-

[Groenfeldt 2018] Get Car Price And Finance Info Before Even Talking To A Car Salesman, by Tom Groenfeldt, March 2018, published by Forbes; refer to: www.forbes.com/sites/tomgroenfeldt/2018/03/13/get-car-price-and-finance-info-without-ever-talking-to-a-car-salesman/#43e794ba196a, retrieved on May 6, 2018

-

[Harrington 1991] Business Process Improvement: The Breakthrough Strategy for Total Quality, Productivity, and Competitiveness, by H. James Harrington, May 1991, published by McGraw-Hill Education

-

[Hodgson 2017] Feature Toggles (aka Feature Flags), by Peter Hodgson, October 2017, published in martinfowler.com; refer to: www.martinfowler.com/articles/feature-toggles.html

-

[Holland 2012] Signals and Boundaries: Building Blocks for Complex Adaptive Systems, by John H. Holland, January 2014, published by MIT Press

-

[Holland 2014] Complexity: A Very Short Introduction, by John H. Holland, July 2014, published by Oxford University Press

-

[Humble 2010] Continuous Delivery: Reliable Software Releases through Build, Test, and Deployment Automation, by Jez Humble and David Farley, July 2010, published by Addison-Wesley

-

[IETF® 2017] Uniform Resource Names (URNs), by P. Saint-Andre and J. Klensin, April 2017, published by the Internet Engineering Task Force (IETF®); refer to: www.tools.ietf.org/html/rfc8141

-

[Ishikawa 1985] What is Total Quality Control? The Japanese Way, by Kaoru Ishikawa, March 1985, published by Prentice Hall

-

[ISO/IEC 9126-1:2001] Software Engineering – Product Quality – Part 1: Quality Model, 2001, published by ISO (now withdrawn); refer to: https://www.iso.org/standard/22749.html

-

[ISO/IEC 25010:2011] Systems and Software Engineering – Systems and Software Quality Requirements and Evaluation (SQuaRE) – System and Software Quality Models, 2011, published by ISO; refer to: https://www.iso.org/standard/35733.html

-

[ISO/IEC/IEEE 42010:2011] Systems and Software Engineering – Architecture Description, 2011, published by ISO; refer to: www.iso.org/standard/50508.html

-

[Johnson 2008] Reinventing your Business Model, by Mark W. Johnson, Clayton M. Christensen, and Henning Kagermann, December 2008, published in the Harvard Business Review

-

[Kalbach 2016] Mapping Experiences: A Guide to Creating Value through Journeys, Blueprints, and Diagrams, by James Kalbach, December 2016, published by O’Reilly Media

-

[Kent 2012] Data and Reality: A Timeless Perspective on Perceiving and Managing Information in Our Imprecise World, Third Edition, by William Kent, February 2012, published by Technics Publications, LLC

-

[Kersten 2018] Project to Product: How to Survive and Thrive in the Age of Digital Disruption with the Flow Framework, by Mik Kersten, November 2018, published by IT Revolution Press

-

[Kim 2013] The Phoenix Project: A Novel about IT, DevOps, and Helping your Business Win, by Gene Kim, Kevin Behr, and George Spafford, January 2013, published by IT Revolution Press

-

[Kim 2016] The DevOps Handbook: How to Create World-Class Agility, Reliability, and Security in Technology Organizations, by Gene Kim, Patrick Debois, and John Willis, December 2016, published by Trade Select

-

[Kniberg 2019] Spotify: A Scrum@Scale Case Study, by Henrik Kniberg, August 2019, published by Scrum Alliance®; refer to: www.scrumalliance.org/agilematters/articles/spotify-a-scrumatscale-case-study, retrieved on April 20th at 11am

-

[Korzybski 1958] Science and Sanity: an Introduction to Non-Aristotelian Systems and General Semantics, by Alfred Korzybski, 1958, published by the Institute of General Semantics

-

[Krafcik 1988] Triumph of the Lean Production System, by John F. Krafcik, 1988, MIT International Motor Vehicle Program, published in the Sloan Management Review; refer to: www.lean.org/downloads/MITSloan.pdf, retrieved on March 22, 2020

-

[Leffingwell 2011] Agile Software Requirements, by Dean Leffingwell, 2011, published by Addison-Wesley

-

[LEI] The Lean Enterprise Institute; refer to: www.lean.org/

-

[LEI] Strategy Deployment, The Lean Lexicon, Fifth Edition, by Chet Marchwinski, Alexis Schroeder, and John Shook, April 2004, published by the Lean Enterprise Institute; refer to: www.lean.org/lexicon/strategy-deployment

-

[LEI] True North, The Lean Lexicon, Fifth Edition, by Chet Marchwinski, Alexis Schroeder, and John Shook, April 2004, published by the Lean Enterprise Institute; refer to: www.lean.org/lexicon/true-north, retrieved on February 23, 2020

-

[Levitt 1960] Marketing Myopia, by Theodore Levitt, first published in 1960 and reprinted in the “Best of HBR” July-August 2004, Harvard Business Review; refer to: www.hbr.org/2004/07/marketing-myopia

-

[Levitt 1962] Innovation in Marketing: New Perspectives for Profit and Growth, by Theodore Levitt, June 1962, published by McGraw-Hill

-

[Liker 2017] The Toyota Way to Service Excellence: Lean Transformation in Service Organizations, by Jeffrey K. Liker and Karyn Ross, March 2017, published by McGraw Hill Education

-

[MacCormack 2007] Exploring the Duality between Product and Organizational Architectures: A Test of the “Mirroring” Hypothesis, Working Paper 08-039, by Alan MacCormack, John Rusnak, and Carliss Baldwin, 2007, published by the Harvard Business School; refer to: www.hbs.edu/faculty/Publication%20Files/08-039_1861e507-1dc1-4602-85b8-90d71559d85b.pdf

-

[Mandelbaum 2006] Value Engineering Handbook, by Jay Mandelbaum and Danny L. Reed, 2006, published by the Institute for Defense Analysis

-

[Martin 1995] The Great Transition: Using the Seven Disciplines of Enterprise Engineering to Align People, Technology, and Strategy, by James Martin, January 1995, published by Amacom

-

[Martin 2014] The Big Lie of Strategic Planning, by Roger L. Martin, January-February 2014, published by the Harvard Business Review; refer to: www.hbr.org/2014/01/the-big-lie-of-strategic-planning

-

[McChrystal 2015] Team of Teams: New Rules of Engagement for a Complex World, by General Stanley McChrystal, Tantum Collins, Chris Fussell, and David Silverman, November 2015, published by Penguin

-

[McKinsey 2019] Planning in an Agile Organization, by Santiago Comella-Dorda, Khushpreet Kaur, and Ahmad Zaidi, February 2019, published by McKinsey & Company; refer to: www.mckinsey.com/business-functions/mckinsey-digital/our-insights/planning-in-an-agile-organization

-

[Merriam-Webster] Merriam-Webster Dictionary; refer to: www.merriam-webster.com/

-

[Miraglia 2014] Systems Architectures and Innovation: the Modularity-Integrality Framework, by Stefano Miraglia, 2014, a Working Paper, published by the University of Cambridge

-

[MIT] Decision Rights and Governance; refer to: www.cisr.mit.edu/content/classic-topics-decision-rights

-

[MIT] Mastering Design Thinking; refer to: www.executive-ed.mit.edu/mastering-design-thinking

-

[MIT OCW 2010] Operations Strategy, MIT Course Number 15.769 Fall 2010, Massachusetts Institute of Technology Open Courseware; refer to: www.ocw.mit.edu/courses/sloan-school-of-management/15-769-operations-strategy-fall-2010/

-

[Morgan 2019] Designing the Future: How Ford™, Toyota™, and Other World-Class Organizations Use Lean Product Development to Drive Innovation and Transform their Business, by James M. Morgan and Jeffrey K. Liker, November 2018, published by McGraw-Hill Education

-

[Murman 2002] Lean Enterprise Value: Insights from MIT’s Lean Aerospace Initiative, by Earll M. Murman, Joel Cutcher-Gershenfeld, and Tom Allen, May 2002, published by AIAA

-

[Norman 2013] The Design of Everyday Things, by Don Norman, December 2013, published by MIT Press

-

[Northrop 2012] A Framework for Software Product Line Practice, Version 5.0, by Linda M. Northrop and Paul C. Clements, December 2012, published by the Software Engineering Institute

-

[Nygard] Architecture Decision Record Template; refer to: www.github.com/joelparkerhenderson/architecture-decision-record, retrieved on February 24, 2020

-

[Nygard 2011] Documenting Architecture Decisions, Michael Nygard Blog, 2011; refer to: www.thinkrelevance.com/blog/2011/11/15/documenting-architecture-decisions

-

[Nygard 2018] Release It! Design and Deploy Production-Ready Software, by Michael Nygard, January 2018, published by The Pragmatic Bookshelf

-

[O-AA Security Playbook 2021] O-AA™ Security Playbook, The Open Group Guide (G216), March 2021, published by The Open Group; refer to: www.opengroup.org/library/g216

-

[Ohno 1988] Toyota Production System: Beyond Large-Scale Production, by Taiichi Ohno and Norman Bodek, March 1988, published by Productivity Press

-

[Olsen 2015] The Lean Product Playbook: How to Innovate with Minimum Viable Products and Rapid Customer Feedback, by Dan Olsen, July 2015, published by John Wiley & Sons

-

[Parker 2016] Platform Revolution: How Networked Markets are Transforming the Economy – and How to Make them Work for You, by Geoffrey G. Parker, Marshall W. Van Alstyne, and Sangeet Paul Choudary, March 2016, published by W. W. Norton and Company

-

[Parnas 1972] On the Criteria to be Used in Decomposing Systems into Modules, by D. L. Parnas, 1972, published by Carnegie Mellon University

-

[Pasmore 2019] Reflections: Sociotechnical Systems Design and Organization Change, by William Pasmore, Stu Winby, Susan Albers Mohrman, and Rick Vanasse, 2019, published in the Journal of Change Management, Vol. 19

-

[Patton 2014] User Story Mapping: Discover the Whole Story, Build the Right Product, by Jeff Patton, September 2014, published by O’Reilly Media

-

[Paulchell 2016] Evolution of a Data Streaming Architecture: Enabling the Business to Turn Data into Insight, by Joseph Paulchell, Principal Software Engineer, 2016, published by Capital One Digital Engineering; refer to: www.resources.sei.cmu.edu/asset_files/Presentation/2016_017_001_454648.pdf, retrieved on May 6th 2020

-

[Porter 1996] What Is Strategy?, by Michael E. Porter, November-December 1996, published in the Harvard Business Review

-

[Prehofer 2007] Compositionality in Software Platforms, by Christian Prehofer, Jilles van Gurp, and Jan Bosch, 2007, published by Nokia Research

-

[Raft] The Raft Consensus Algorithm; refer to: www.raft.github.io/

-

[Rezai 2016] When is Software Goods?, by Arezou Rezai, September 2016, published by Paris Smith LLP; refer to: www.parissmith.co.uk/blog/when-is-software-goods/

-

[Richards 2015] Software Architecture Patterns, by Mark Richards, February 2015, published by O’Reilly Media

-

[Richardson 2010] Understanding Customer Experience, by Adam Richardson, October 2010, published in the Harvard Business Review

-

[Ries 2009] Sharding for Startups, by Eric Ries, January 2009; refer to: www.startuplessonslearned.com/2009/01/sharding-for-startups.html

-

[Ries 2011] The Lean Startup: How Constant Innovation Creates Radically Successful Businesses, by Eric Ries, October 2011, published by Portfolio Penguin

-

[Rigby 2018] Agile at Scale, by Darrell K. Rigby, Jeff Sutherland, and Andy Noble, May-June 2018, published in the Harvard Business Review

-

[Ross 2019] Designed for Digital: How to Architect your Business for Sustained Success, by Jeanne W. Ross, Cynthia M. Beath, and Martin Mocker, September 2019, published by MIT Press

-

[Ross 2019] Why Hypotheses Beat Goals, by Jeanne Ross, April 2019, published by MIT Sloan Management Review; refer to: www.sloanreview.mit.edu/article/why-hypotheses-beat-goals/

-

[Rossman 2019] Think Like Amazon™: 50 1/2 Ideas to Become a Digital Leader, by John Rossman, May 2019, published by McGraw-Hill Education

-

[Rother 2003] Learning to See – Value-Stream Mapping to Create Value and Eliminate Muda, Version 1.3, by Mike Rother and John Shook, June 2003, published by the Lean Enterprise Institute

-

[Rozanski 2005] Software Systems Architecture: Working with Stakeholders using Viewpoints and Perspectives, by Nick Rozanski and Eoin Woods, April 2005, published by Addison-Wesley

-

[Samaras 2013] Capabilities-Based Planning for Energy Security at Department of Defense Installations, by Constantine Samaras and Henry H. Willis, 2013, published by RAND Corporation

-

[Sawhney 2016] Putting Products into Services, by Mohanbir Sawhney, September 2016, published in the Harvard Business Review; refer to: www.hbr.org/2016/09/putting-products-into-services

-

[Scaled Agile, Inc.] Scaled Agile, Inc. The Provider of SAFe®; refer to: www.scaledagile.com

-

[Scheiber 2017] How Uber Uses Psychological Tricks to Push Its Drivers’ Buttons, by Noam Scheiber and graphics by Jon Huang, April 2017, published by the New York Times Company

-

[Schema.org] Schema.org is a collaborative, community activity with a mission to create, maintain, and promote schemas for structured data on the Internet, on web pages, in email messages, and beyond; refer to: www.schema.org/

-

[Schoemaker 2009] How to Make Sense of Weak Signals, by Paul J.H. Schoemaker and George S. Day, April 2009, published by Massachusetts Institute of Technology (MIT) Sloan Management Review (SMR); refer to: www.sloanreview.mit.edu/article/how-to-make-sense-of-weak-signals/

-

[Seddon 2003] Strategy and Business Models: What’s the Difference?, by Peter B. Seddon and Geoffrey P. Lewis, published in the Pacific Asia Conference on Information Systems (PACIS) 2003

-

[Seite 2010] The Concept of Modularisation of Industrial Services, by Fabrice Seite, Oliver Schneider, and Andreas Nobs, 2010, published by IFIP International Federation for Information Processing and printed in Advances in Production Management Systems: New Challenges, New Approaches: International IFIP WG 5.7 Conference, APMS 2009, by B. Vallespir and T. Alix, November 2014, published by Springer

-

[Sen 1992] Inequality Re-Examined, by Amartya Sen, 1992, published by Clarendon Press

-

[Shoup 2014] From the Monolith to Micro-Services, by Randy Shoup, October 2014, published by slideshare.net; refer to: www.slideshare.net/RandyShoup/monoliths-migrations-and-microservices

-

[Simon 1962] The Architecture of Complexity, by Herbert A. Simon, December 1962, published in the Proceedings of the American Philosophical Society, Volume 106

-

[Simon 2018] Liquid Software: How to Achieve Trusted Continuous Updates in the DevOps World, by Fred Simon, Yoav Landman, and Baruch Sadogursky, May 2018, published by CreateSpace Independent Publishing Platform

-

[Singh 2020] Mobile Deep Learning with TensorFlow Lite, ML Kit, and Flutter, by Anubhav Singh and Rimjhim Bhadani, April 2020, published by Packt Publishing

-

[Skelton 2019] Team Topologies: Organizing Business and Technology Teams for Fast Flow, by Matthew Skelton and Manuel Pais, September 2019, published by IT Revolution Press

-

[Spear 1999] Decoding the DNA of the Toyota Production System, by Steven Spear and H. Kent Bowen, September-October 1999, published by the Harvard Business Review; refer to: www.hbr.org/1999/09/decoding-the-dna-of-the-toyota-production-system

-

[sre.google] sre.google; refer to: https://sre.google/

-

[Stanford 2010] An Introduction to Design Thinking – Process Guide, by the Hasso Plattner Institute of Design, Stanford

-

[Steiglitz 2019] The Discrete Charm of the Machine: Why the World Became Digital, by Kenneth Steiglitz, February 2019, published by Princeton University Press

-

[Sull 2015] Why Strategy Execution Unravels – and What to Do About It, by Donald Sull, Rebecca Homkes, and Charles Sull, March 2015, published in the Harvard Business Review

-

[Sutcliff 2019] The Two Big Reasons That Digital Transformations Fail, by Mike Sutcliff, Raghav Narsalay, and Aarohi Sen, October 2019, published by the Harvard Business School

-

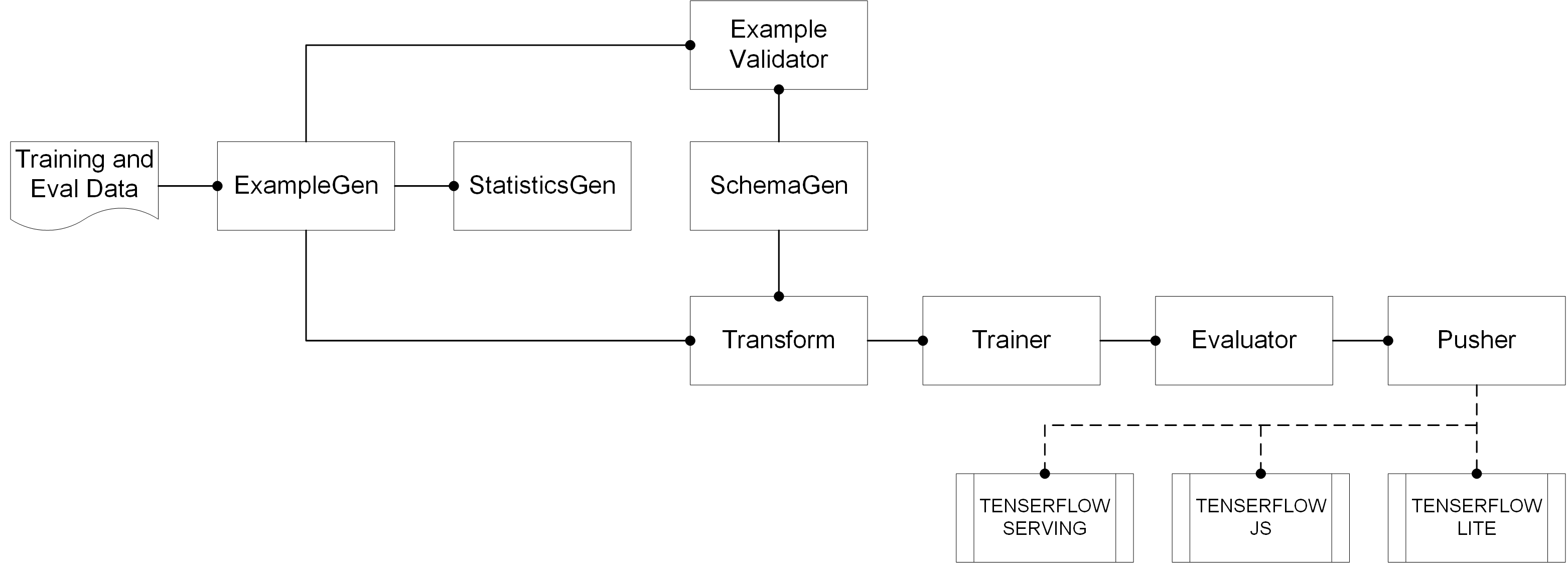

[TFX User Guide] TFX User Guide; refer to: www.tensorflow.org/tfx/guide

-

[TOGAF Standard 2018] The TOGAF® Version 9.2, a standard of The Open Group (C182), April 2018, published by The Open Group; refer to: www.opengroup.org/library/c182

-

[Ton 2010] Zara: Managing Stores for Fast Fashion, by Zeynep Ton, Elena Corsi, and Vincent Dessain, January 2010, revised edition, published by Harvard Business School

-

[Traynor 2016] Focus on the Job, Not the Customer, by Des Traynor, 2016, published by Inside Intercom; refer to: www.intercom.com/blog/when-personas-fail-you/, retrieved on 30/04/2020

-

[Trist 1951] Some Social and Psychological Consequences of the Longwall Method of Coal-Getting, by E. L. Trist and K. W. Bamforth, 1951, published by The Tavistock Institute

-

[Ulrich 1993] The Role of Product Architecture in the Manufacturing Firm, by Karl Ulrich, December 1993, Research Policy, final version received by MIT, Sloan School of Management

-

[Ulrich 2020] Product Design and Development, Seventh Edition, by Karl T. Ulrich, Steven D. Eppinger, and Maria C. Yang, 2020, published by McGraw-Hill

-

[Van Mieghem 2015] Operations Strategy: Principles and Practice, Second Edition, by Jan A. Van Mieghem and Gad Allon, January 2015, published by Dynamic Ideas

-

[Vanderhaegen 2019] Automation Challenges of Socio-Technical Systems, by Frederic Vanderhaegen, Choubeila Maaoui, Denis Berdjag, and Mohamed Sallak, July 2019, published by Wiley-ISTE

-

[Vandermerwe 1988] Servitization of Business: Adding Value by Adding Services, by Sandra Vandermerwe and Juan Rada, Winter 1988, published in the European Management Journal, Volume 6, Issue 4

-

[Vaughn 2013] Implementing Domain-Driven Design, by Vaughn Vernon, February 2013, published by Addison-Wesley Professional

-

[Ward 2014] Lean Product and Process Development, Second Edition, by Allen C. Ward and Durward K. Sobek II, February 2014, published by the Lean Enterprise Institute

-

[Weill 2005] How Effective is Your IT Governance? Research Briefing, by Peter Weill and Jeanne Ross, 2005, published by MIT CISR

-

[Wikipedia®] List of System Quality Attributes, published by Wikipedia®; refer to: en.wikipedia.org/wiki/List_of_system_quality_attributes

-

[Wind 2015] Beyond Advertising: Creating Value Through All Customer Touchpoints, by Yoram (Jerry) Wind and Catharine Findiesen Hays, 2015, published by Wiley

-

[Wind 2016] Beyond Advertising: Creating Value through All Customer Touchpoints, by Yoram (Jerry) Wind and Catharine Findiesen Hays, February 2016, published by John Wiley & Sons

-

[Womack 1996] Lean Thinking: Banish Waste and Create Wealth in Your Corporation, by James P. Womack and Daniel T. Jones, 1996, published by Simon & Schuster

-

[World-Class EA 2017] World-Class EA: Governors’ Approach to Developing and Exercising an Enterprise Architecture Governance Capability (W178), July 2017, published by The Open Group; refer to: www.opengroup.org/library/w178

-

[Wu 2010] Operational Capabilities: The Secret Ingredient, by Sarah Jinhui Wu, Steven A. Melnyk, and Barbara B. Flynn, November 2010, published in Decision Sciences, Volume 41, No. 4

1. Introduction

1.1. Objective

This document is The Open Group Open Agile Architecture™ standard. The objective of this document is to cover both the Digital Transformation and the Agile Transformation of the enterprise.

1.2. Overview

This document covers both the Digital Transformation and the Agile Transformation of the enterprise. It is divided into two parts:

-

Part 1: The O-AA Core covers the fundamental concepts of the framework and introduces its structure before explaining why the enterprise needs to conduct a dual Digital and Agile Transformation, thus establishing the foundation of the Agile Architecture Body of Knowledge

-

Part 2: The O-AA Building Blocks develops the topics introduced in Part 1 in greater detail, including chapters on topics such as Agile strategy, Agile organization, and software architecture

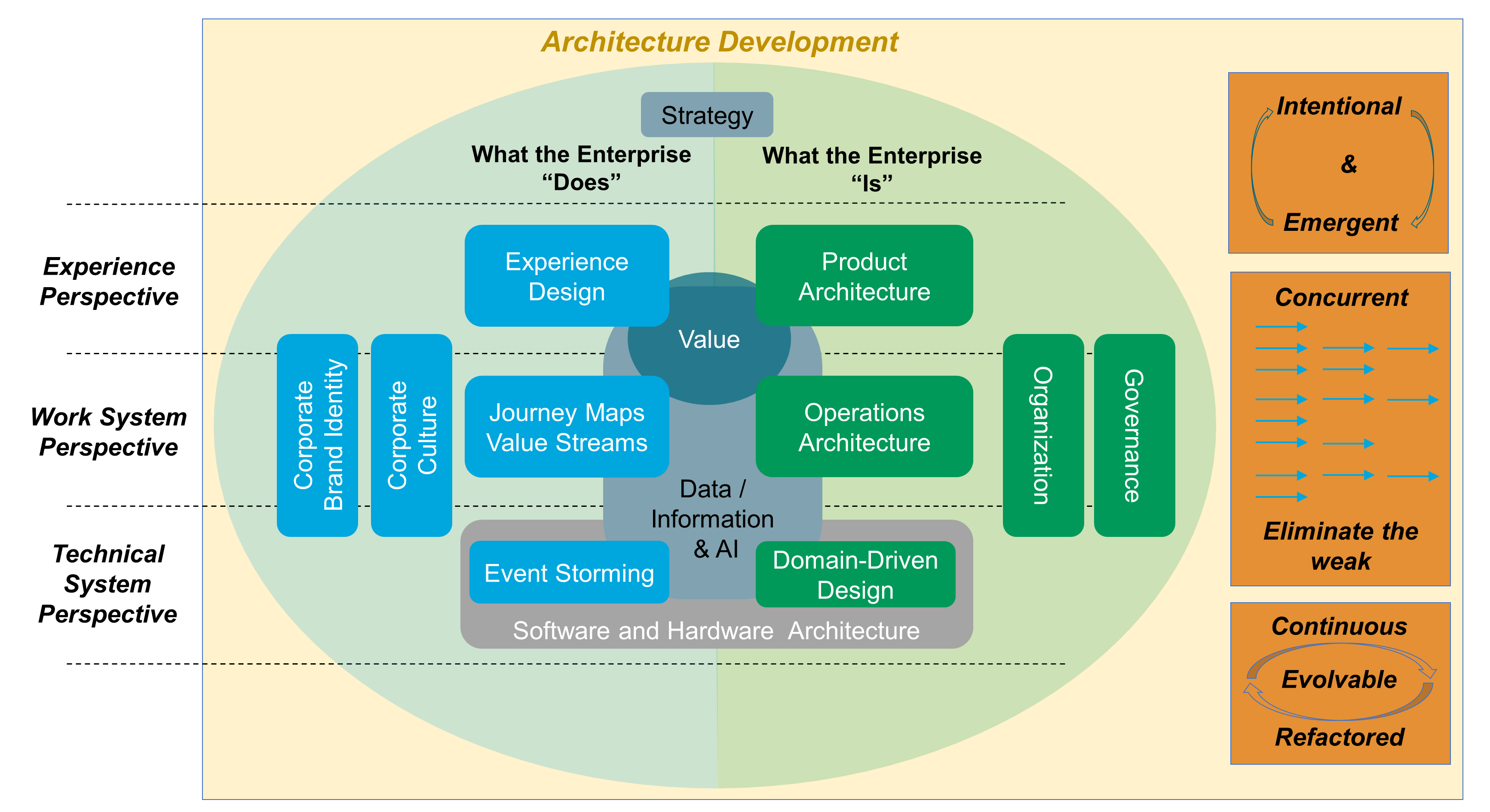

It includes content from the perspectives of what the enterprise does, such as experience design and journey mapping, and what the enterprise is, such as product architecture and operations architecture.

1.3. Conformance

Refer to The Open Group website for conformance requirements for this document.

1.4. Normative References

None.

1.5. Terminology

For the purposes of this document, the following terminology definitions apply:

- Can

-

Describes a possible feature or behavior available to the user or application.

- May

-

Describes a feature or behavior that is optional. To avoid ambiguity, the opposite of “may” is expressed as “need not”, instead of “may not”.

- Shall

-

Describes a feature or behavior that is a requirement. To avoid ambiguity, do not use “must” as an alternative to “shall”.

- Shall not

-

Describes a feature or behavior that is an absolute prohibition.

- Should

-

Describes a feature or behavior that is recommended but not required.

- Will

-

Same meaning as “shall”; “shall” is the preferred term.

1.6. Future Directions

It is expected that this document will need to be revised from time to time to remain current with both practice and technology.

2. Definitions

For the purposes of this document, the following terms and definitions apply. Merriam-Webster’s Collegiate Dictionary should be referenced for terms not defined in this section.

2.1. Accountability

The obligation to demonstrate task achievement and take responsibility for performance in accordance with agreed expectations; the obligation to answer for an action. (Source: Vanderhaegen 2019)

2.2. Alignment Diagram

Any map, diagram, or visualization that reveals both sides of value creation in a single overview. It is a category of diagram that illustrates the interaction between people and organizations. (Source: Kalbach 2016)

2.3. Allowable Lead Time

The time available between starting a product development initiative or process and finishing it in order to satisfy customers.

2.4. Architectural Runway

Consists of the existing code, components, and technical infrastructure needed to implement near-term features without excessive redesign and delay. (Source: Scaled Agile, Inc.)

2.5. Architecture

-

The fundamental concepts or properties of a system in its environment embodied in its elements, relationships, and in the principles of its design and evolution. (Source: ISO/IEC/IEEE 42010:2011)

-

(System Engineering Context) The embodiment of concept, and the allocation of physical/informational function (process) to elements of form (objects) and definition of structural interfaces among the objects. (Source: Crawley 2016)

2.6. Architecture Principle

A qualitative statement of intent that should be met by the architecture. (Source: The TOGAF® Standard 2018)

2.7. Architecture Style

A coordinated set of architectural constraints that restricts the roles/features of architectural elements and the allowed relationships among those elements within any architecture that conforms to that style. (Source: Fielding 2000)

2.8. Capability

-

An ability that an organization, person, or system possesses. (Source: TOGAF Standard 2018)

-

The ability to achieve a desired outcome. (Source: CJCSM 2005)

2.9. Catchball

A dialog between senior managers and project teams about the resources and time both available and needed to achieve the targets.

(Source: LEI)

2.10. Continuous Architecture

An architecture with no end-state that is designed to evolve to support the changing needs of the digital enterprise.

2.11. Customer Experience

The sum-totality of how customers engage with your company and brand, not just in a snapshot in time, but throughout the entire arc of being a customer. (Source: Richardson 2010)

2.12. Customer Journey

The series of interactions between a customer and a company that occur as the customer pursues a specific goal. (Source: Forrester®)

2.13. Design Thinking

A methodology for creative problem solving that begins with understanding unmet customer needs. (Sources: Stanford 2010 and MIT)

2.14. Digital Platform

A software system composed of application and infrastructure components that can be rapidly reconfigured using DevOps and cloud-native computing.

2.15. Digital Practices

A synthesis of methods and guidance from a wide variety of practitioners and professional communities active in digital technology (Lean, Agile, DevOps, etc.) designed to create and manage products with an increasing digital component, or lead their organization through Digital Transformation.

2.16. Digital Technology

A powerful, accessible, and potentially game-changing technology (social, mobile, cloud, analytics, Internet of Things (IoT), cognitive computing, and biometrics) often used in combination and usually characterized by its ability to positively impact an enterprise’s business model, customer experience, product, or operating system to enable innovation and growth.

2.17. Digital Transformation

The use of digital practices supported by digital technologies to achieve a shift in the business model, value proposition, operating system, or distribution system to radically improve customer relationships, profitability, internal processes, performance, accessibility, and market reach of an enterprise.

2.18. Domain Model: Domain-Driven Design

The representation of a selected abstraction of domain knowledge that is rigorously organized.

(Source: Evans 2003)

2.19. Ecosystem

The complex community of organisms and their environment, functioning as an ecological unit.

(Source: Wind 2015)

2.20. Epic

-

(Classical Agile) A large user story that cannot be delivered as defined within a single iteration, or is large enough that it can be split into smaller user stories.

(Source: Agile Alliance®)

-

(Scaled Agile) The highest-level expression of a customer need. Development initiatives that are intended to deliver the value of an investment theme and are identified, prioritized, estimated, and maintained in the portfolio backlog. (Source: Leffingwell 2011)

2.21. Event Storming

The identification of domain events, commands, persona, or entities to facilitate a structured conversation about the domain.

2.22. Evolutionary Architecture

An architecture that supports guided, incremental change across multiple dimensions. (Source: Ford™ 2017)

2.23. Evolvability

A meta-non-functional requirement that aims to prevent other architecture requirements, in particular the non-functional ones, from degrading over time.

2.24. Feature

The functional characteristic of a product (goods or services).

2.25. Hardware

Tools, machines, wiring, and other physical components of a system.

2.26. Information Security

The protection of information and information systems from unauthorized access, use, disclosure, disruption, modification, or destruction in order to provide integrity, confidentiality, and availability.

2.27. Integrality

The system’s property of being made up of elements that behave consistently as a whole.

(Source: Miraglia 2014)

2.28. Intentional Architecture

A purposeful set of statements, models, and decisions that represent some future architectural state.

2.30. Journey Mapping

Laying out the entire end-to-end customer experience.

2.31. Lead Time

The time between the initiation and completion of a process.

2.32. Lean Value Stream

All of the actions, both value-creating and non-value-creating, required to bring a product from concept to launch (also known as the development value stream) and from order to delivery (also known as the operational value stream).

(Source: LEI)

2.33. Modularity

The system’s property of being made up of elements that present a high independence of other elements. (Source: Miraglia 2014)

2.34. Modularization

Design decisions which must be made before the work on independent modules can begin.

(Source: Parnas 1972)

2.35. Operating System

The combination of assets and processes required to deliver a product or a service.

(Source: Van Mieghem 2015)

2.36. Outcome

The result of an activity conducted by a provider and experienced by a consumer.

2.37. Persona

A fictional character which is created based upon research in order to represent the different user types that might use a service, product, site, or brand in a similar way. (Source: Friis Dam 2020)

2.38. Platform Business Model

Business model that is based on the two-sided market theory.

2.39. Process

Any activity or group of activities that takes an input, adds value to it, and provides an output to an internal or external customer.

(Source: Harrington 1991)

2.40. Product

A bundle of services and/or goods offered to customers.

2.41. Product Backlog

A list of the new features, changes to existing features, bug fixes, infrastructure changes, or other activities that a team may deliver in order to achieve a specific outcome. (Source: Agile Alliance)

2.42. Product-Centric Organization

An organization structured around permanent teams, as opposed to temporary teams or projects.

2.43. Refactoring

The process of changing a software system in a way that does not alter the external behavior of the code yet improves its internal structure.

(Source: Fowler 2019)

2.44. Responsibility

The obligation to carry forward a task to its successful conclusion.

(Source: Vanderhaegen 2019)

2.45. Service

-

(Business context) An act performed for the benefit of another.

-

(Software context) An encapsulated component that delivers its outcomes through well-defined interfaces.

2.46. Social System

People, their behavior, cultural beliefs, skills, and expertise, and how work teams are forming and interacting, as well as organizational leadership, strategy, structure, policy, and procedures.

2.47. System

A set of entities and their relationships, whose functionality is greater than the sum of the individual entities. (Source: Crawley 2016)

2.48. User Story

A brief statement of intent that describes something the system needs to do for the user.

(Source: Patton 2014)

2.49. Value Stream

End-to-end collection of value-added and non-value-added activities that create an overall result for a customer, stakeholder, or end user.

2.50. Weak Signal

A seemingly random or disconnected piece of information that at first appears to be background noise but can be recognized as part of a significant pattern by viewing it through a different frame or connecting it with other pieces of information. (Source: Schoemaker 2009)

2.51. Work System

A system in which human participants and/or machines perform processes and activities using software, hardware, and other resources to deliver products or experiences.

Part 1: The O-AA Core

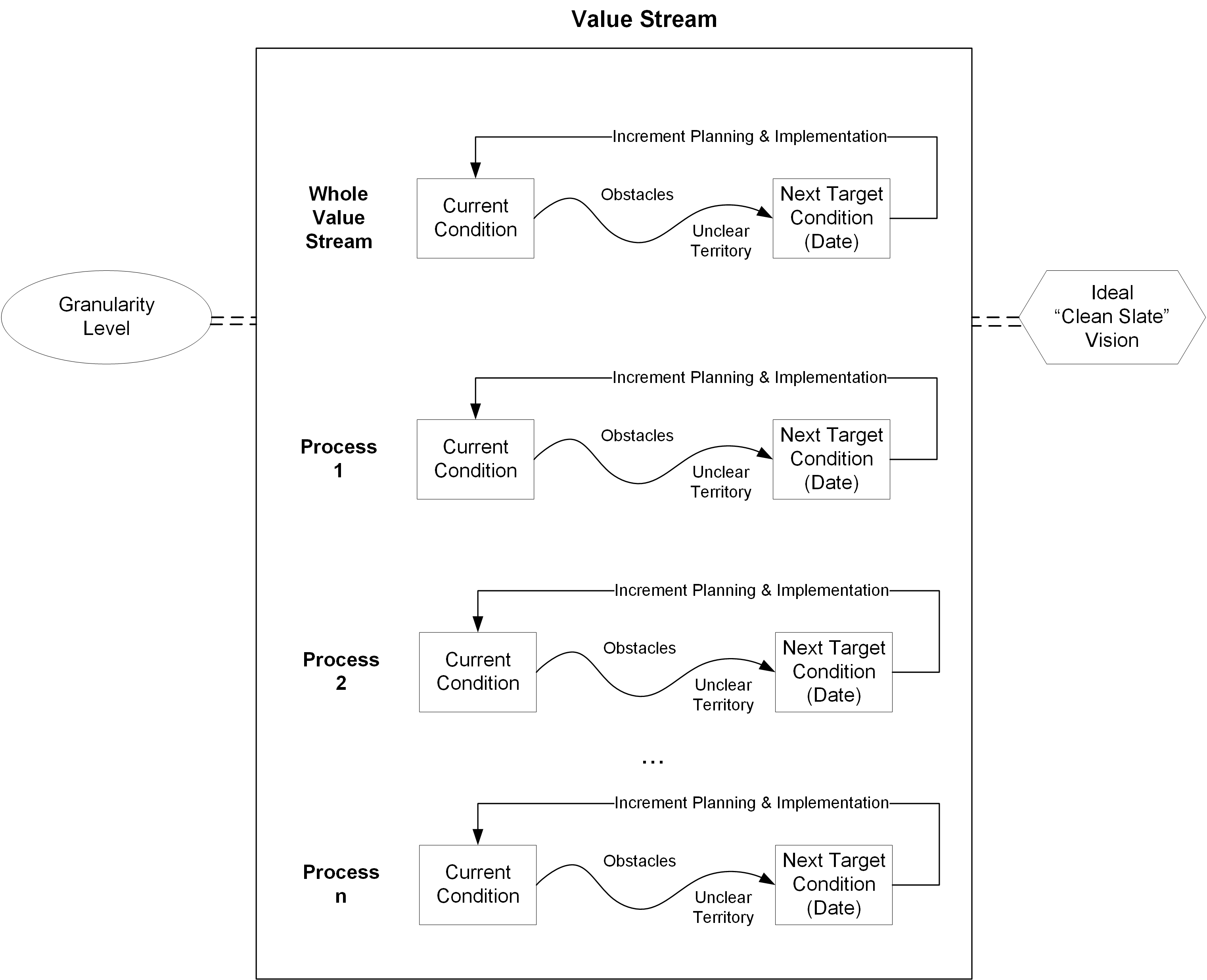

This Part defines the fundamental concepts of Agile Architecture and describes the O-AA approach, the dual Digital and Agile Transformation, architecture development, intentional architecture, continuous architectural refactoring, architecting the Agile Transformation, and Agile governance. A set of axioms for the practice of Agile Architecture is listed in the last chapter.

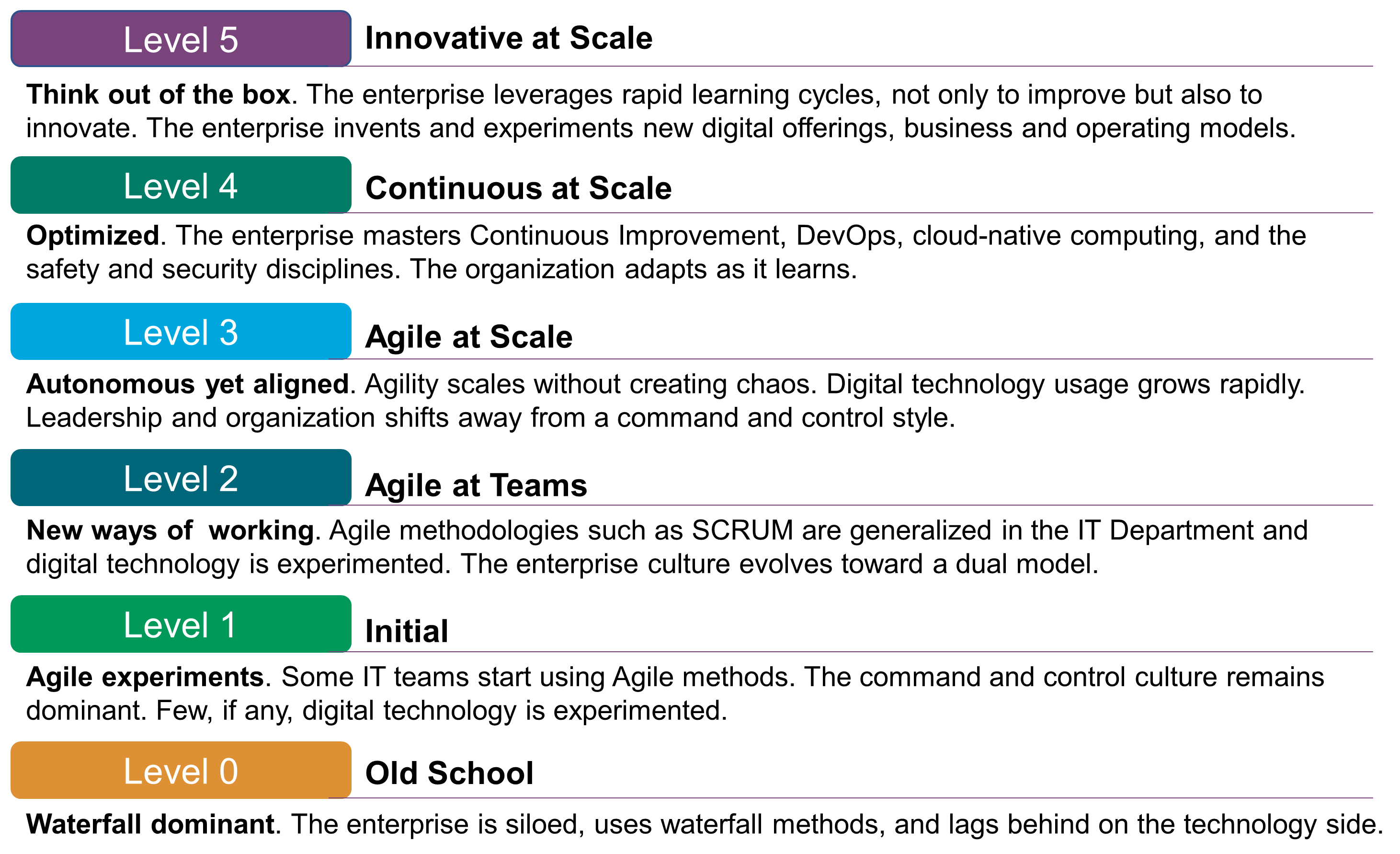

Enterprises are at various stages of the adoption of Agile methodologies. Figure 1 represents a basic Agile Maturity Model that should help the reader to make an assessment of where they are, and ideally helps to frame the key concepts that follow.

3. A Dual Transformation

The Digital Practitioner Body of Knowledge™ Standard [DPBoK™ 2020] defines Digital Transformation as:

-

"A strategy and an operating model change, in which technological advancements are leveraged to improve human experiences and operating efficiencies, and to evolve the products and services to which customers will remain loyal"

It also defines the Digital Enterprise as:

-

"An enterprise characterized by: 1. creation of digitalized products or services that are either delivered fully digitally (e.g., digital media or online banking), or 2. where physical products and services are obtained by the customer by digital means (e.g., online car-sharing services)"

The digital enterprise is about applying digital technology to adapt or change:

-

The strategy of the enterprise

-

The product or service it markets

-

The experience it delivers to customers, employees, and other stakeholders

-

Its operating system

The Agile enterprise senses changes in its environment early and acts upon them decisively and rapidly.

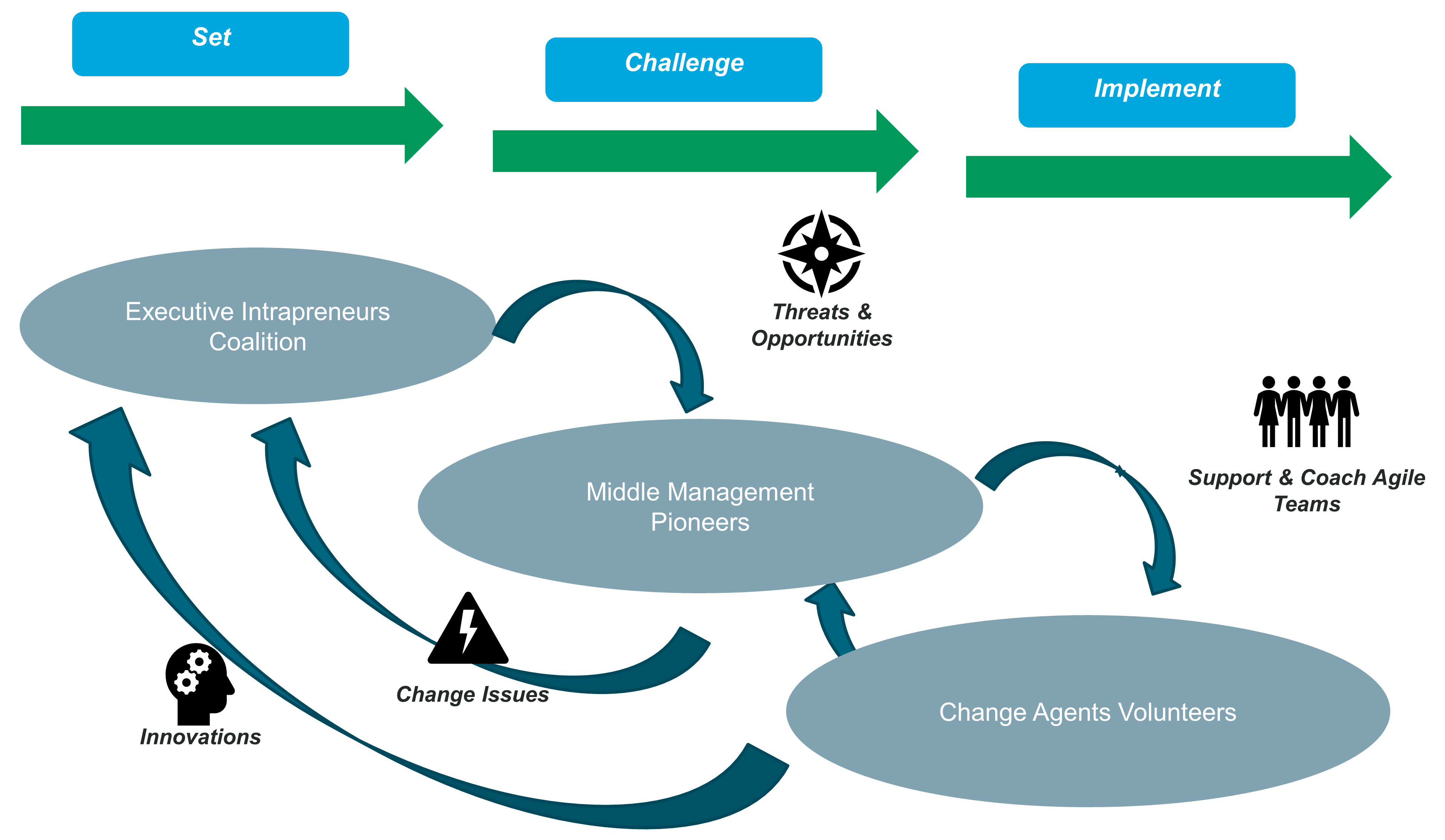

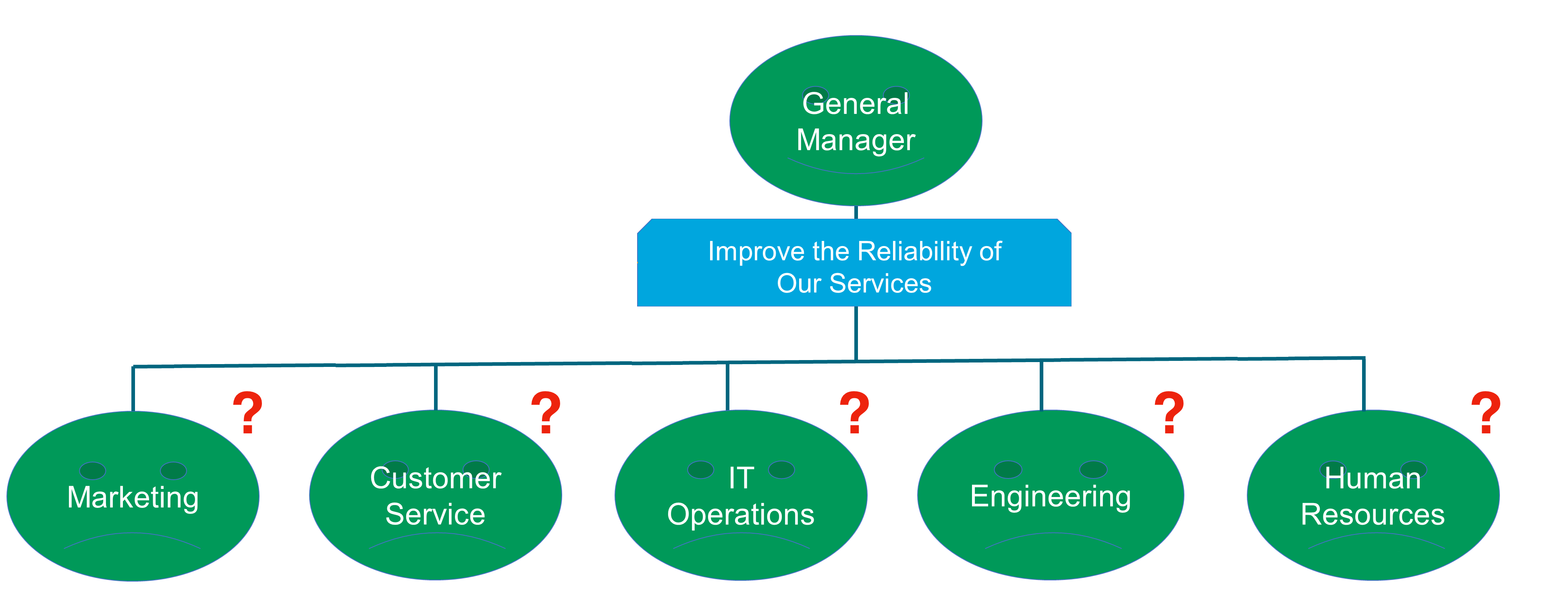

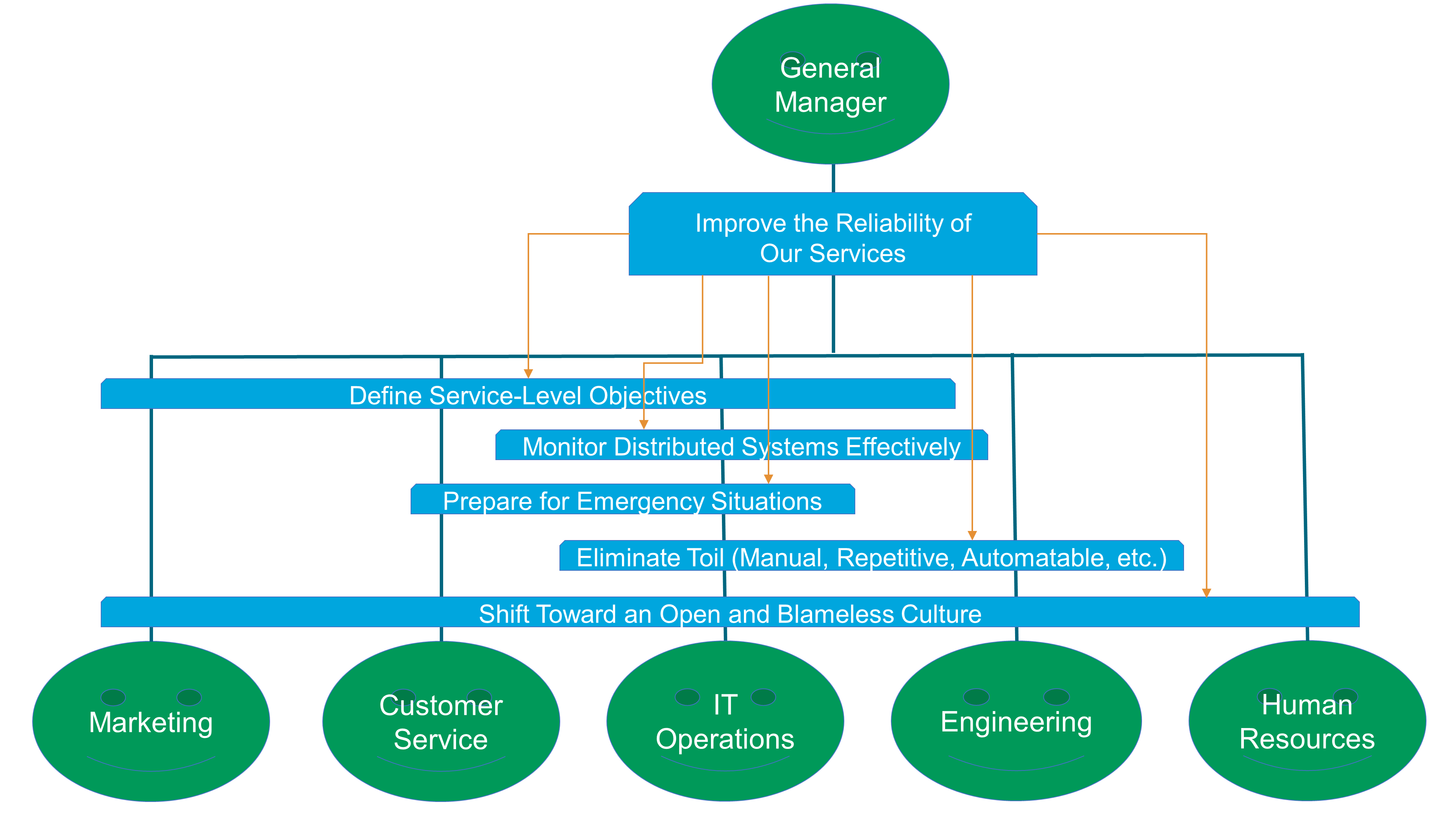

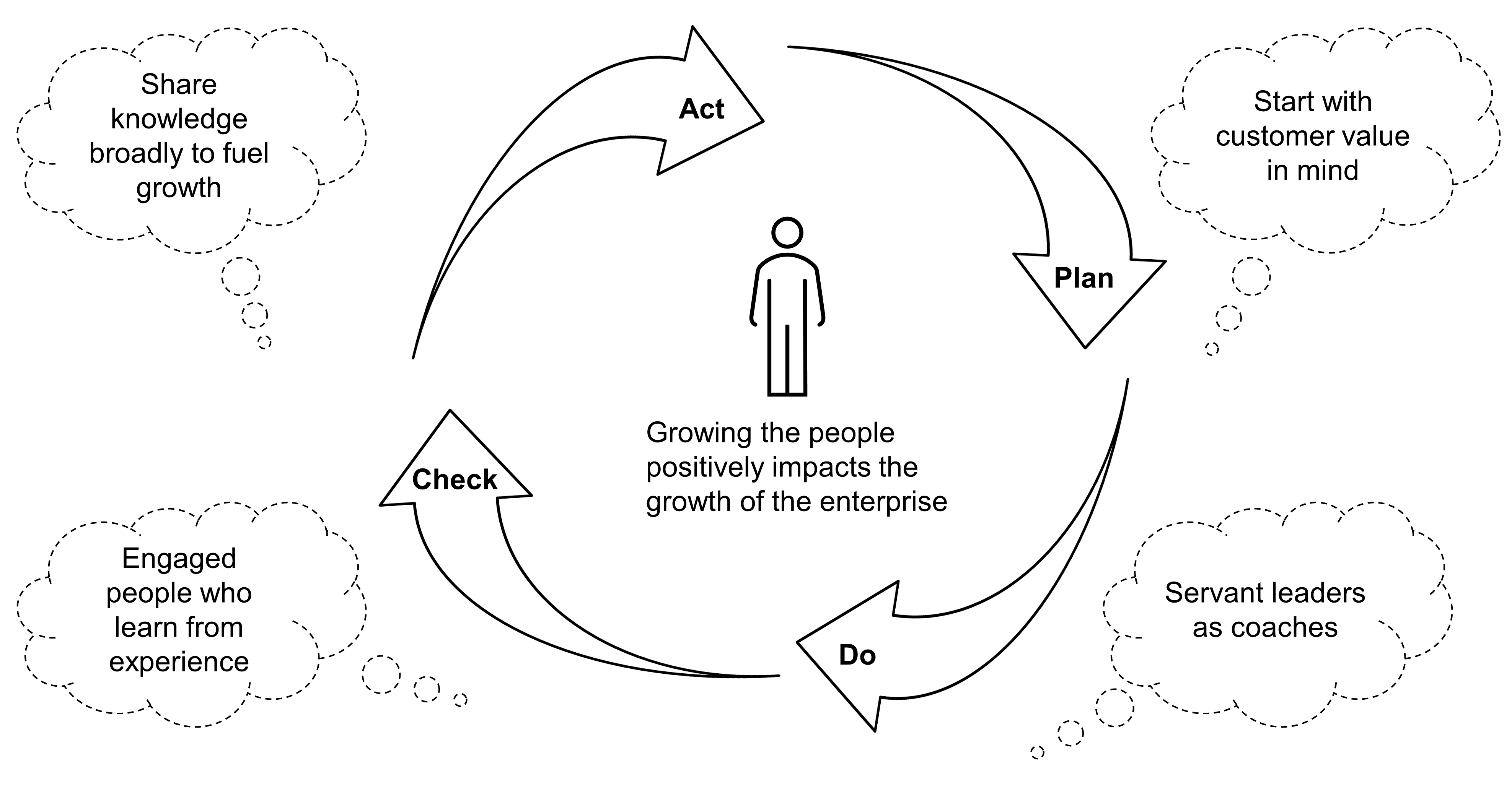

The Agile Transformation of the enterprise must address the organizational and cultural dimensions of the transformation agenda, as illustrated in Figure 2; see Chapter 7.

3.1. Why Organizational Agility Matters

Mike Sutcliff and his co-authors surveyed 1,350 executives to discover why Digital Transformations fail [Sutcliff 2019]. The number one reason given for failure is unspoken disagreement among top managers about goals. The recommendation is to “define and articulate not only the opportunity but also the problem it solves, and how the company will build the organization around the desired solution before investing”.

Tim Fountaine and his co-authors show that technology is not the biggest challenge when building the AI-powered organization [Fountaine 2019]: “Cutting-edge technology and talent are not enough. Companies must break down organizational and cultural barriers that stand in AI’s way”.

These two articles and surveys show that:

-

Organizational agility is required to support scaling beyond the pilot stage

-

Addressing the organizational and soft aspects of Digital Transformation is a key success factor

In order to become Agile, the organization must:

-

Shift from siloed work to interdisciplinary collaboration, because digital technology has the biggest impact when leveraged by cross-functional teams that are aligned on outcomes

-

Empower decentralized decision-making, because business agility requires vigilant organizations capable of seeing risks and opportunities sooner, and positioning themselves to act faster to address them [Day 2019]

The enterprise culture needs to shift from experience-based to fact-based decision-making. Amazon™ epitomizes this shift. Ram Charan reports that "everything that matters can be tracked, measured, and analyzed, with insights generated and routine decisions automated … It liberates Bezos, executives, and frontline employees at Amazon from managing routine daily chores and the inevitable bureaucracy associated" [Charan 2019].

The digital enterprise needs to deliver a consistent user (customer, employee, or partner) experience across all touchpoints. The authors of Beyond Advertising recommend thinking about brands as touchpoint orchestrators: “the brand’s multiple touchpoints and how they interact with each other, from a digital out-of-home experience to a tablet, from mobile to the store” [Wind 2016].

3.2. Connecting Touchpoints to the Operating System

Every interaction with a brand, from the first time you become aware that it exists to every touchpoint you encounter along the way in your daily life, has an impact: “From the customer perspective, touchpoints with a brand or product are not differentiated: it is the seamless experience that matters.”

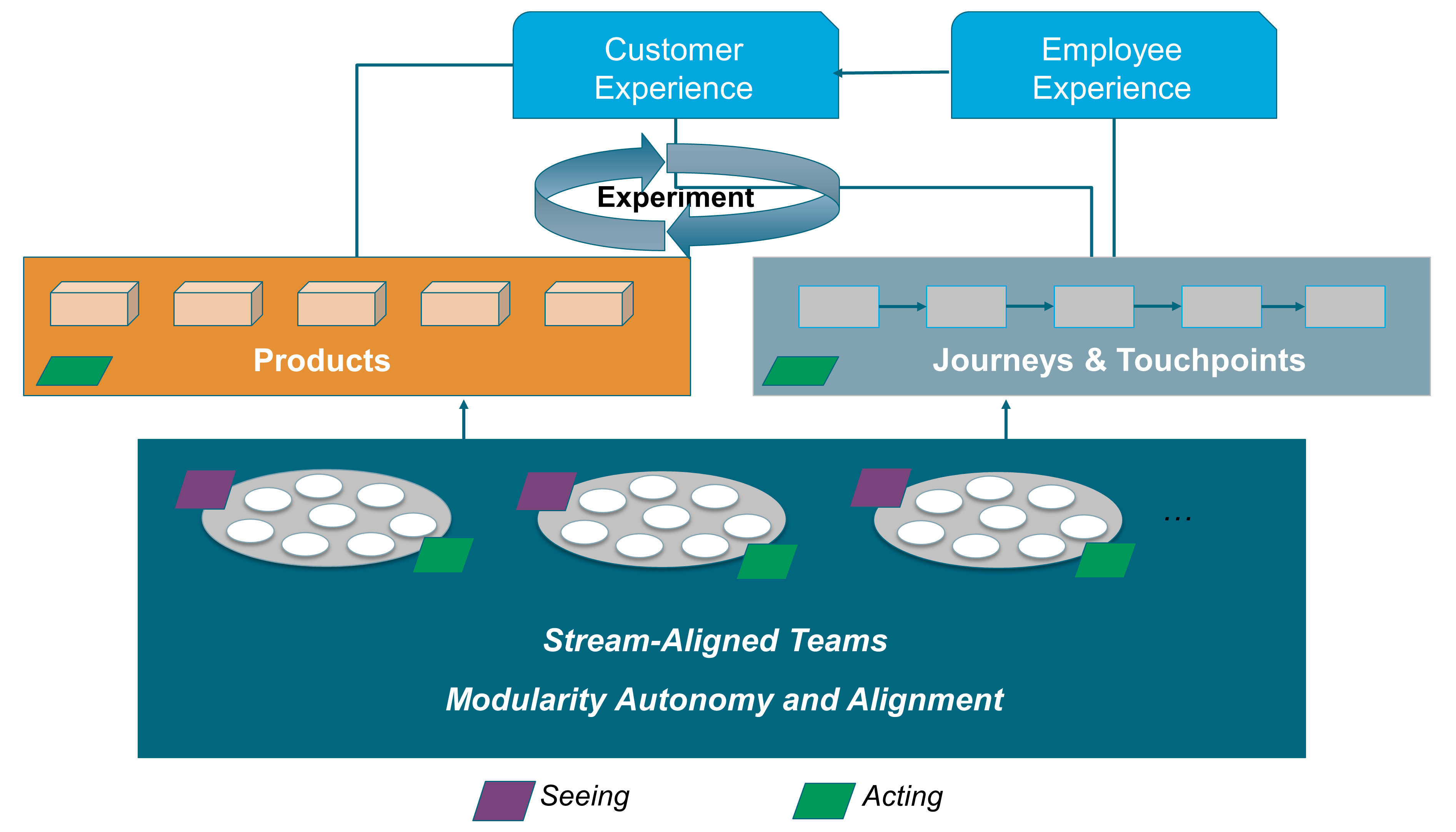

Figure 3 illustrates the variety of touchpoints that a brand has to orchestrate to deliver a positive customer experience.

It can be predicted that: “Touchpoints will continue to multiply in an era where every object has the potential to become connected and interactive.”

This evolution impacts the enterprise as a whole: “New structures and processes will need to allow for agility and reaping the benefits both from decentralization and, when needed, the power that leveraging through centralization facilitates.”

New operating systems are required to create real-time, personalized experiences. One of their key functions is to enable touchpoint orchestration. The operating system is the combination of assets and processes required to deliver a product or a service; for a more detailed definition of operating system, see Section 2.35.

3.3. Developing Business and Organizational Agility

Business agility is the ability to quickly identify and respond to market and environment changes. Enterprises develop business agility when they sense changes in their environment early and act upon them decisively and rapidly.

“Employees deep down in the organization may be closer to weak signals at the edge of the organization and must believe they will receive an open hearing when raising concerns or suggesting ideas”. [Day 2019]

In this context, weak signals are the first indicator of a change or an emerging issue. When Agile teams are vigilant and empowered to act, they are better at navigating digital turbulence than those at head office. When weak signals reach head office, they are filtered and cascaded down the organization. This filtering increases the likelihood that weak signals (represented by the purple parallelograms in Figure 4) will not be seen, or that no one will respond to them, which explains why head office can be slow to identify and act upon potential threats and opportunities.

Figure 4 illustrates that Agile teams close to the field have enough autonomy to modify products or journeys in response to weak signals. Agile teams do not have to seek permission from head office before experimenting with responses to weak signals.

Business agility is facilitated by organizational agility because:

-

Agile teams are cross-functional, which brings diverse perspectives when analyzing weak signals

-

They share a common purpose and vision, which helps to put weak signals in perspective

-

Most of them are stream-aligned, which means Agile teams are directly responsible for delivering products or journeys, facilitating rapid experimentation

Organizational agility was introduced in Section 3.1 and is covered by Chapter 12.

Digital Transformation does not have to be disruptive [Sutcliff 2019]. Business agility is about organizations learning to be capable of evolving rapidly.

4. Architecture Development

This chapter defines the O-AA approach to Agile Architecture development. It is based on a set of modular building blocks that can be configured and assembled in a variety of manners. It supports several architecture development styles.

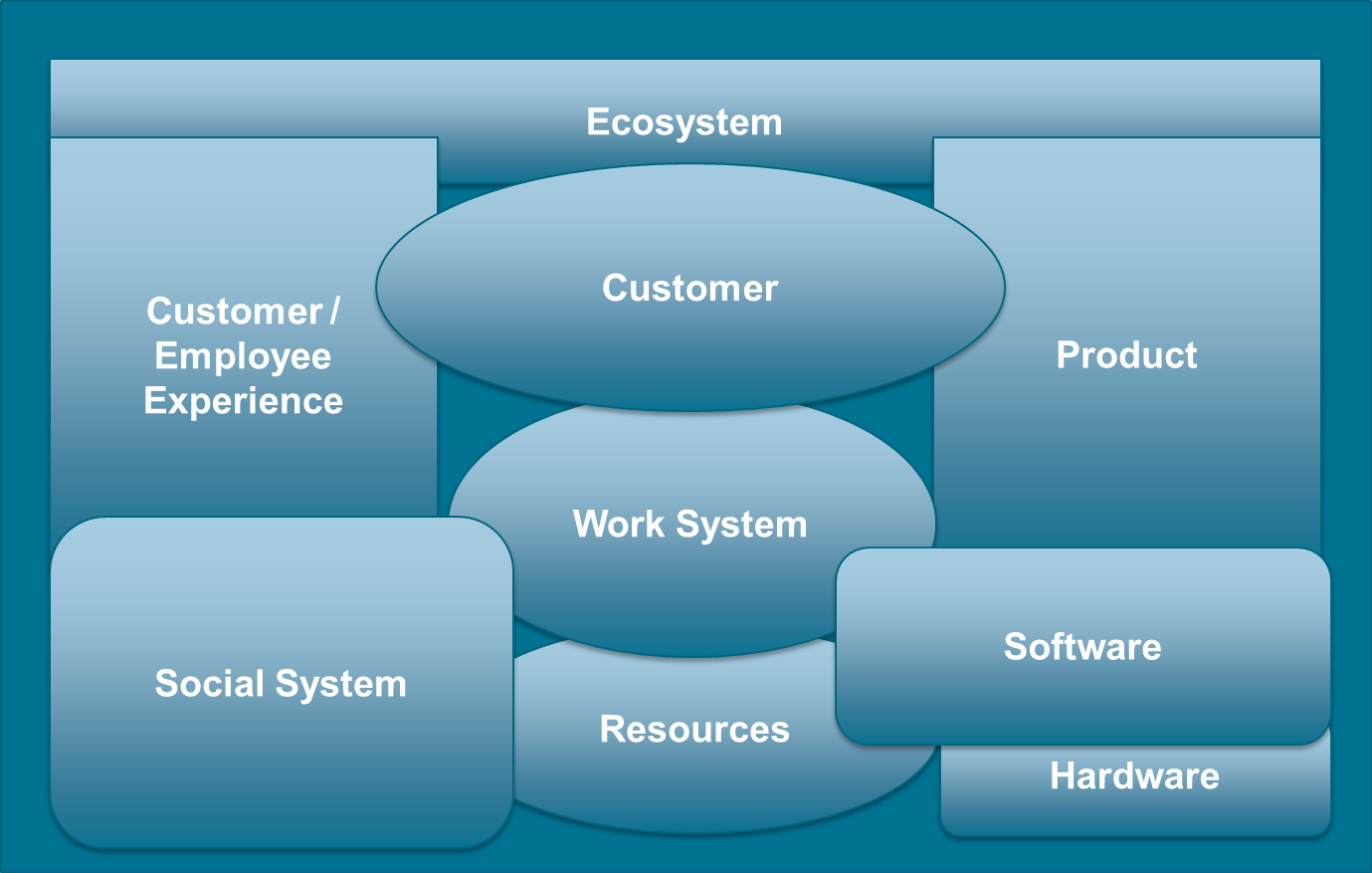

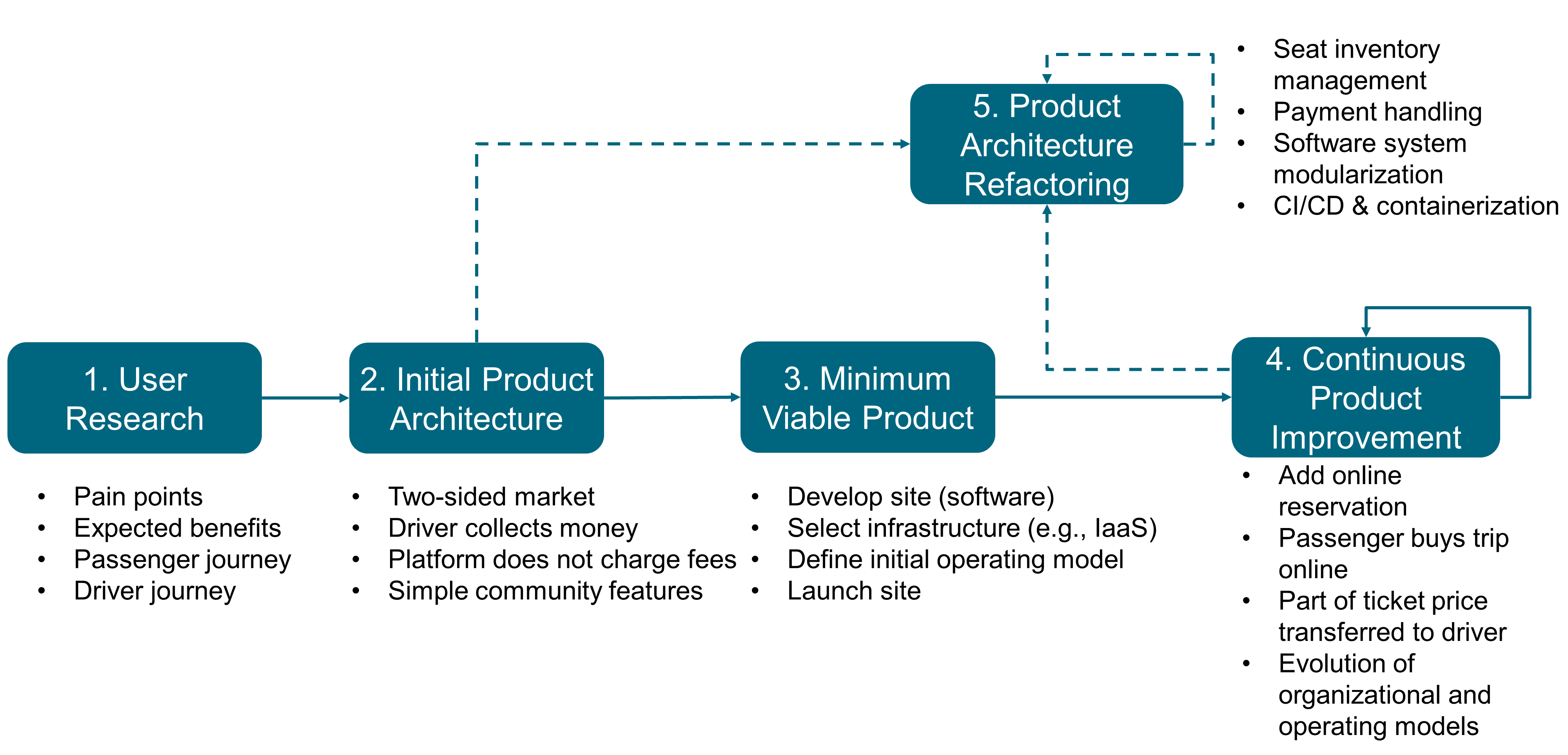

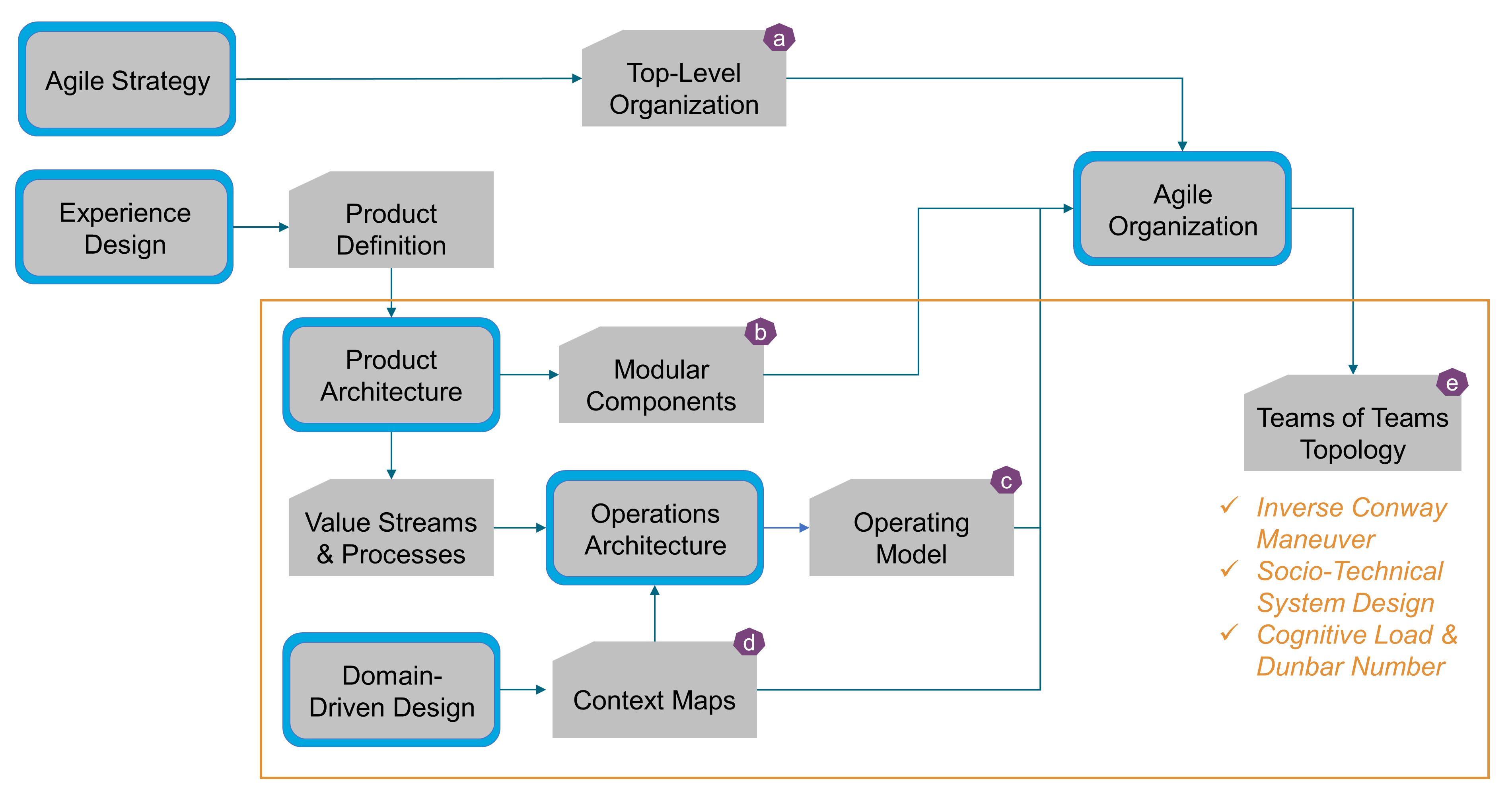

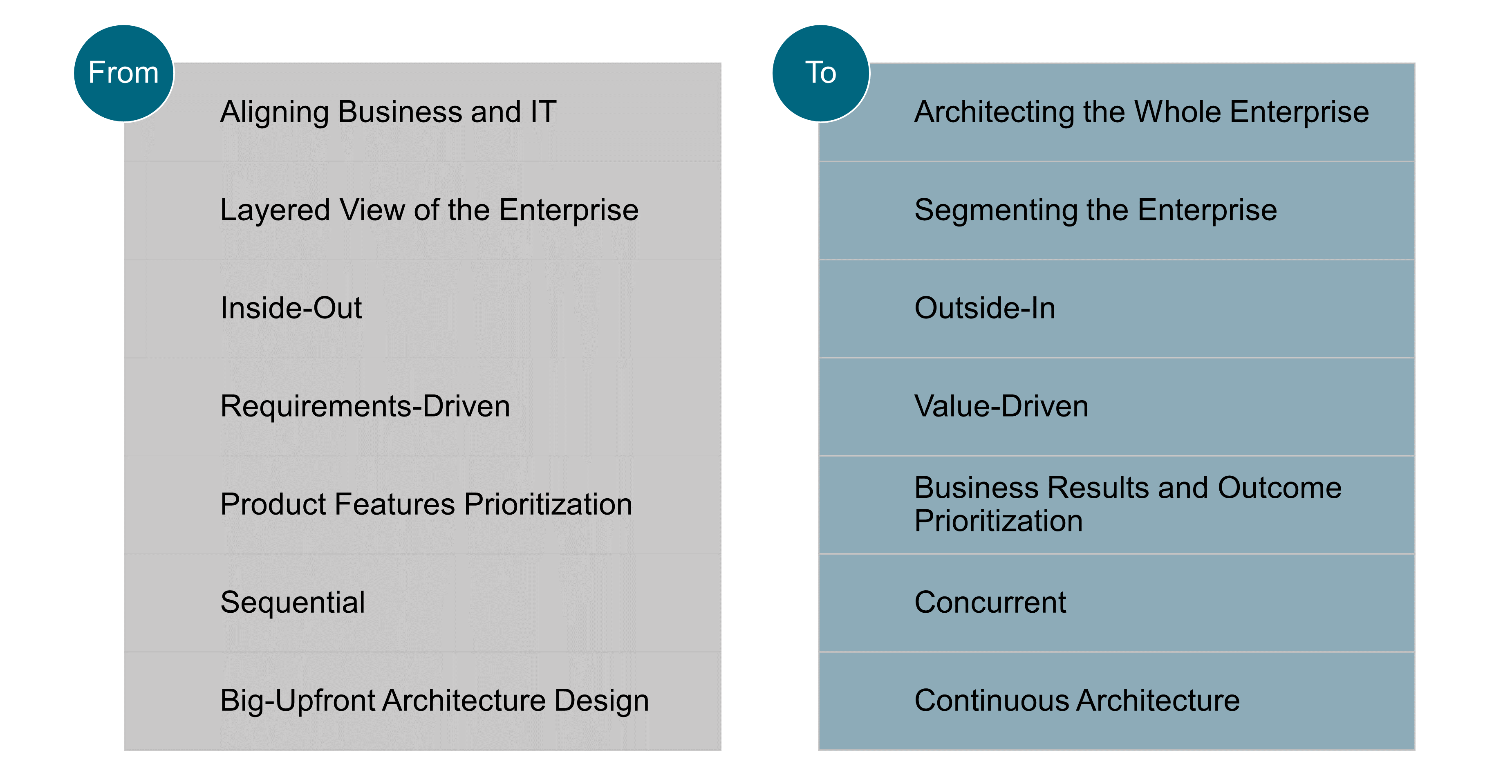

The scope of this document covers the enterprise as a whole, not just the alignment of business and IT; see Figure 5. It includes designing the enterprise business, organization, and operating models, which is the responsibility of senior executives who can be assisted by management consultants or Enterprise Architects. Like the authors of Designed for Digital: How to Architect your Business for Sustained Success, this document avoids the term Business Architecture because in many companies architecture is seen as the IT unit’s responsibility: “Right now, if you have a Business Architecture function, it’s probably buried in your IT organization (and having limited impact)” [Ross 2019].

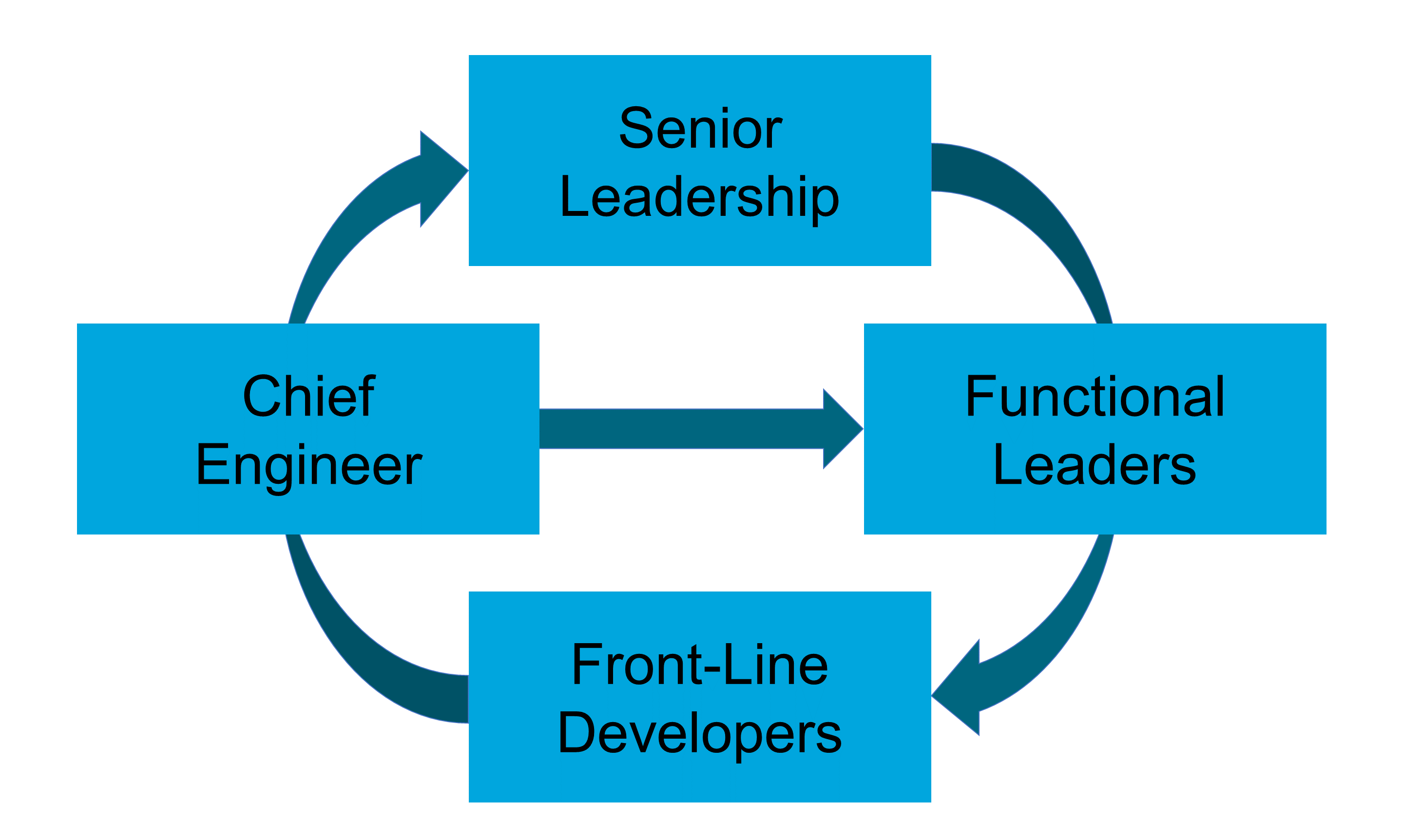

Enterprise Architecture in this context will become more like an internal management consultancy, which implies that Enterprise Architects must develop their management consulting skills to include relationship building, problem solving, coaching, and negotiation as well as specialty skills, such as product management, design thinking, and Lean management.

The range of skills that should be considered part of the Enterprise Architect role includes the disciplines needed for management consultants who design business and operating models. This document incorporates these disciplines. It borrows concepts and methods from:

-

Strategic marketing and marketing research

-

User Experience (UX)

-

Design thinking

-

Lean Product and Process Development (LPPD)

-

Socio-technical systems

-

Organizational sociology

-

Operations strategy

-

Software architecture

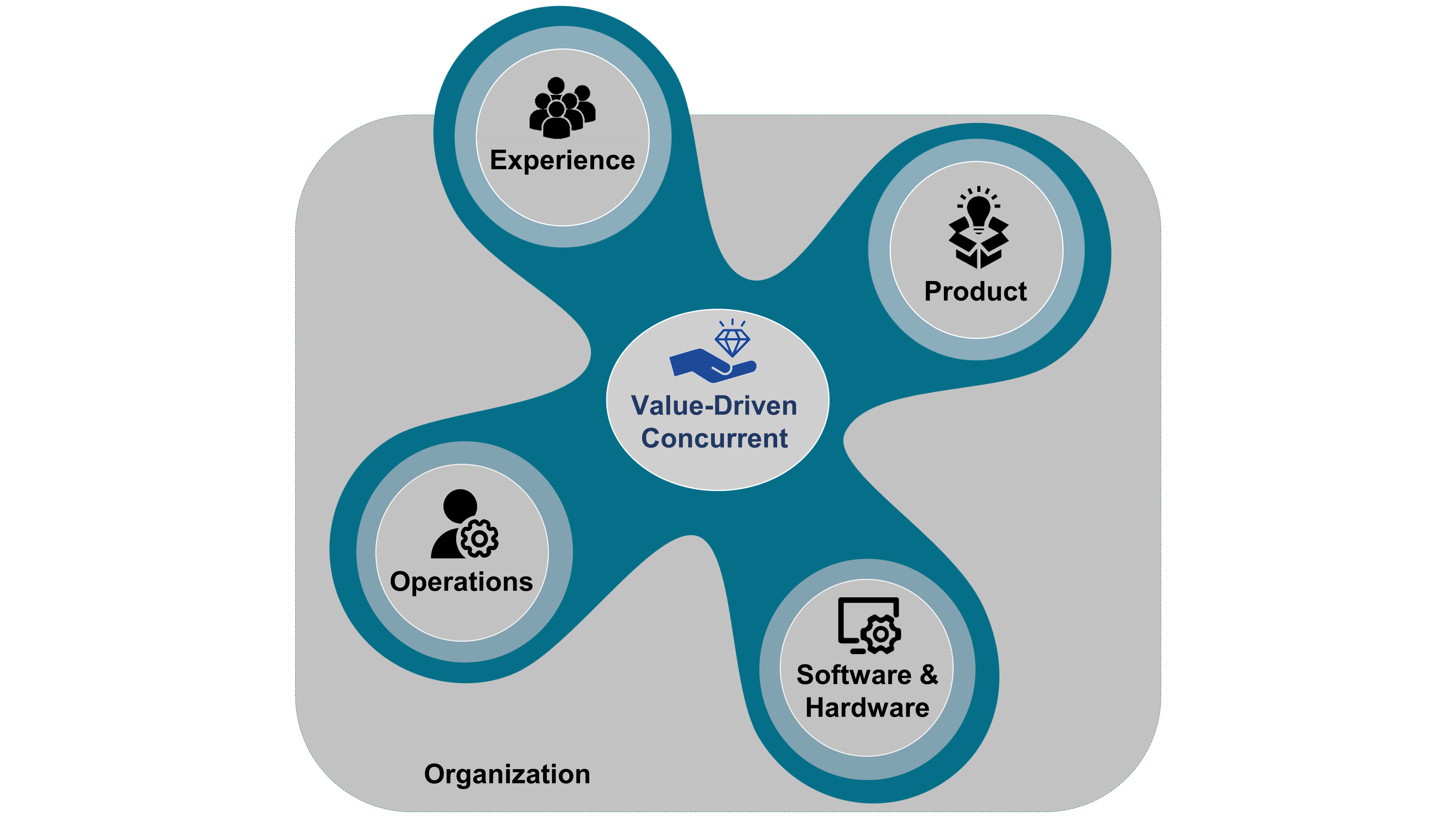

Figure 5 illustrates the scope of this document, where:

-

“Ecosystem” refers to the interactions the enterprise has with its environment; see Section 2.19

-

“Product” refers to a bundle of services and/or goods produced by end-to-end processes or Lean value streams; see Section 2.49

-

“Experience” refers to how pleasant or unpleasant it is to interact with the enterprise

-

“Work System” refers to systems in which human participants and/or machines perform processes and activities using software, hardware, and other resources to deliver:

-

Products offered to internal and/or external customers

-

Experiences delivered to clients and employees

-

“Social System” refers to people, their behavior, cultural beliefs, skills, and expertise, and how work teams are forming and interacting as well as organizational leadership, strategy, structure, policy, and procedures

-

“Software” refers to something used or associated with, and usually contrasted with, hardware, such as a program for a computer [Merriam-Webster]

Software can be used to automate almost anything, ranging from IT infrastructure to decision-making; e.g., prescriptive analytics.

-

“Hardware” refers to tools, machines, wiring, and other physical components of a system

-

“Resources” refers to a source of supply or support by an available means – usually used in plural

4.1. Architecture

Martin Fowler wrote: “There is no such thing as a universally accepted definition of architecture” [Fowler]. His view of architecture is aligned with that of Ralph Johnson, who defines architecture as “the important stuff (whatever that is)” [Johnson 2008]. The lack of a universally accepted architecture definition is why this document borrows from three different sources to analyze what architecture means.

Most architecture definitions, such as these examples, adopt a systems thinking view that models the enterprise as a set of interrelated elements:

-

“The fundamental concepts or properties of a system in its environment embodied in its elements, relationships, and in the principles of its design and evolution” [ISO/IEC/IEEE 42010:2011]

-

“The structure of components, their interrelationships, and the principles and guidelines governing their design and evolution over time” [TOGAF Standard 2018]

Both of these definitions focus on “what the enterprise is”, its elements of form, rather than “what the enterprise does”, its functions; thus positioning architecture as a discipline which guides the design and evolution of the enterprise modeled as a system.

More detail on how the TOGAF Standard, Version 9.2 guides the delivery of architecture can be found in Sections 2.3, 2.4, and 2.5 of the TOGAF Standard; see [TOGAF Standard 2018].

To paraphrase Alfred Korzybski’s famous sentence [Korzybski 1958]: “a map is not the territory it represents, but if correct, it has a similar structure to the territory, which accounts for its usefulness”; the architecture model of the enterprise is not the enterprise. The enterprise makes architecture decisions that are reflected in the way it does business and operates. Architecture decisions may be implicit and are not necessarily documented or modeled.

Crawley [Crawley 2016] notes that “architecture is the embodiment of concept, and the allocation of physical/informational functions (processes) to elements of form (objects) and definition of structural interfaces among the objects”, and goes on to state that the systems engineering discipline adds two important ideas:

-

The allocation of functions or activities to the system’s elements or components

-

The definition of the structural interfaces that link the system’s elements or components
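The two ideas above can be pictured as a small data structure. The following is a minimal sketch only, not part of the standard: the class names (`Element`, `Interface`, `SystemArchitecture`), the method names, and the sample storefront and warehouse elements are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """An element of form (an object), e.g. a team or a software component."""
    name: str
    functions: set[str] = field(default_factory=set)  # functions allocated to it


@dataclass(frozen=True)
class Interface:
    """A structural interface that links two elements of form."""
    source: str
    target: str


@dataclass
class SystemArchitecture:
    """Hypothetical container recording allocations and interfaces."""
    elements: dict[str, Element] = field(default_factory=dict)
    interfaces: set[Interface] = field(default_factory=set)

    def allocate(self, function: str, element_name: str) -> None:
        """Allocate a function (process) to an element, creating it if needed."""
        element = self.elements.setdefault(element_name, Element(element_name))
        element.functions.add(function)

    def connect(self, source: str, target: str) -> None:
        """Define a structural interface between two already known elements."""
        for name in (source, target):
            if name not in self.elements:
                raise KeyError(f"unknown element: {name}")
        self.interfaces.add(Interface(source, target))

    def unallocated(self, required: set[str]) -> set[str]:
        """Return the required functions that no element performs yet."""
        allocated = set().union(*(e.functions for e in self.elements.values()))
        return required - allocated


# Illustrative usage with made-up elements and functions.
arch = SystemArchitecture()
arch.allocate("take order", "storefront")
arch.allocate("ship order", "warehouse")
arch.connect("storefront", "warehouse")
missing = arch.unallocated({"take order", "ship order", "handle returns"})
print(sorted(missing))  # ['handle returns']
```

Keeping allocations and interfaces as explicit records makes it possible to ask simple questions of the model, such as which required functions have not yet been allocated to any element.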

4.2. Development Building Blocks

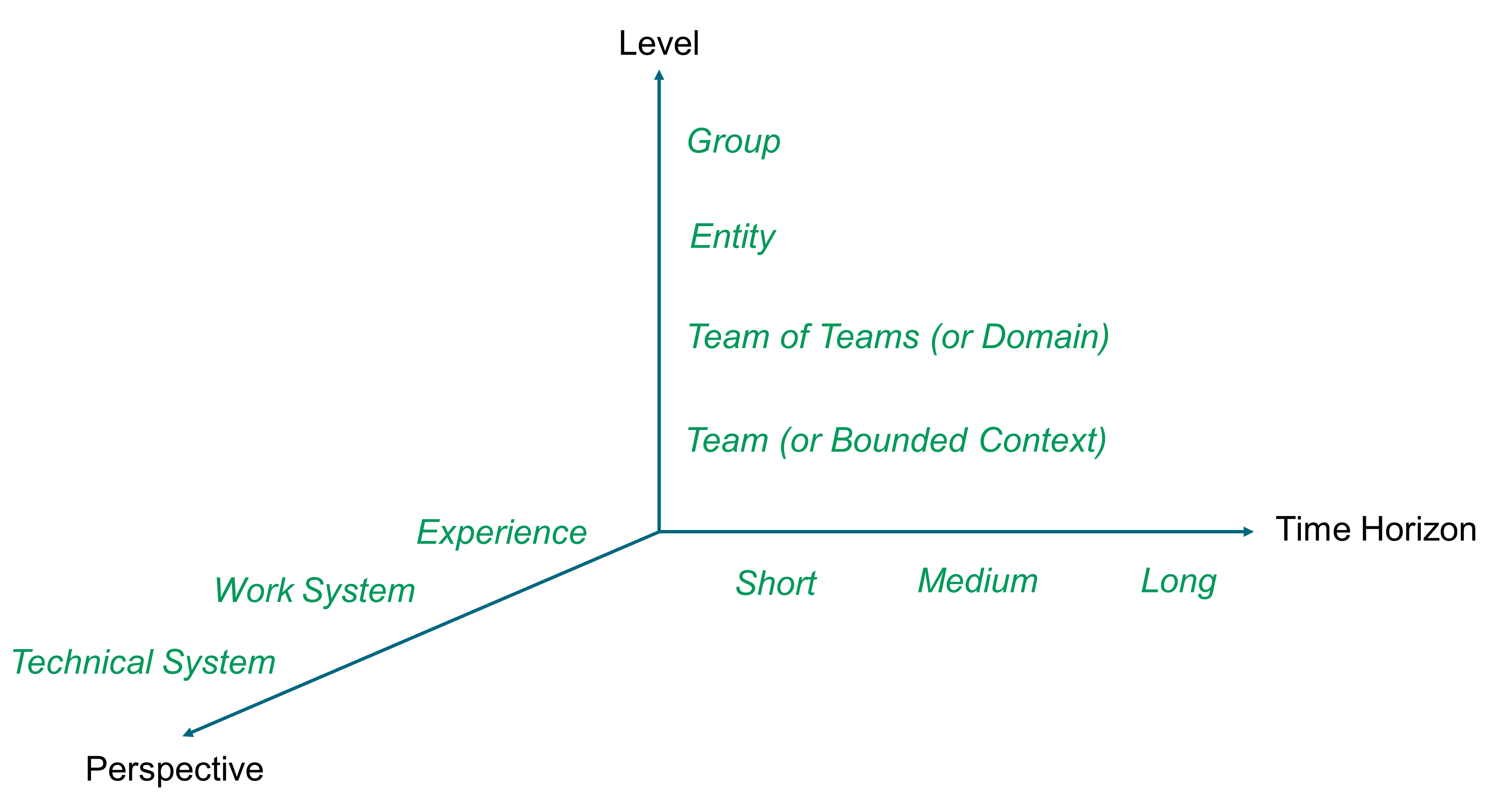

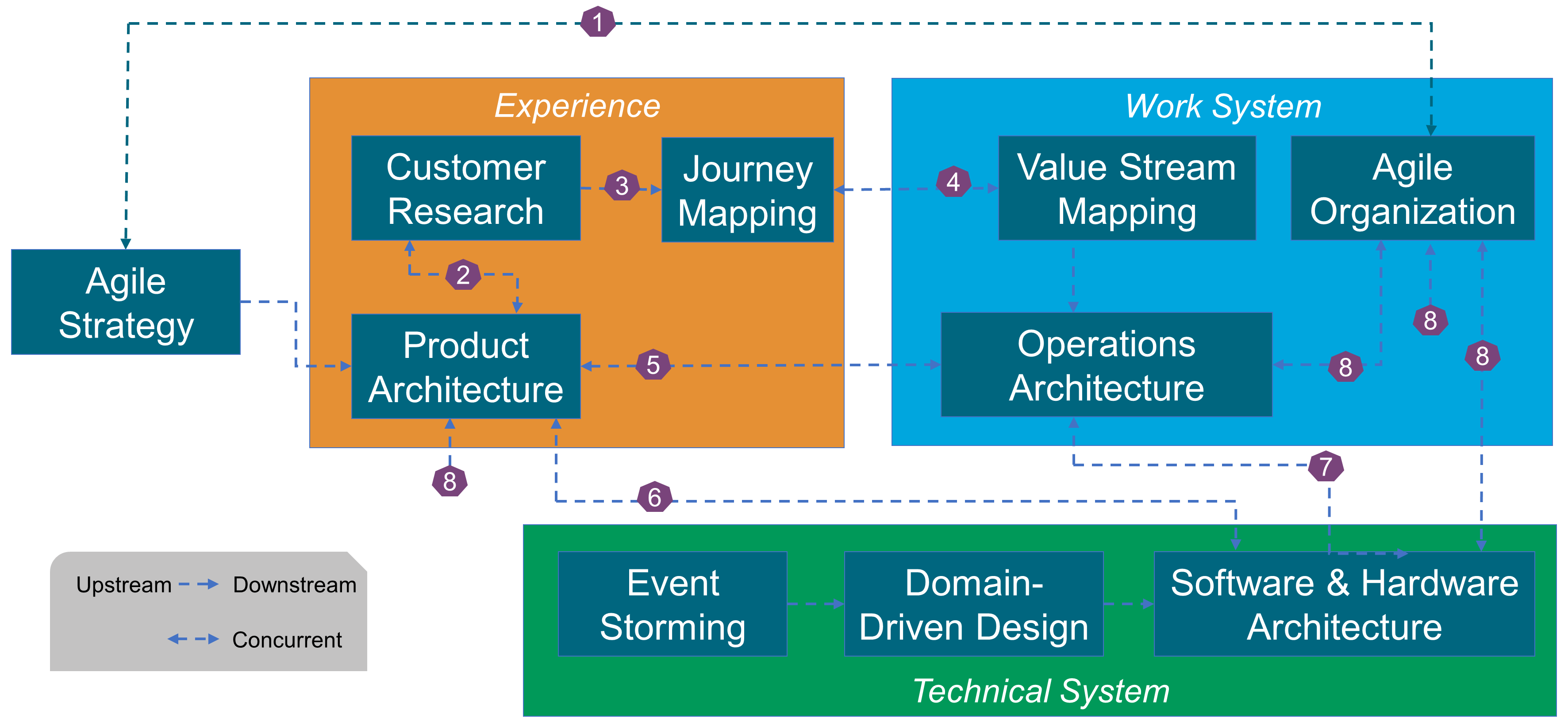

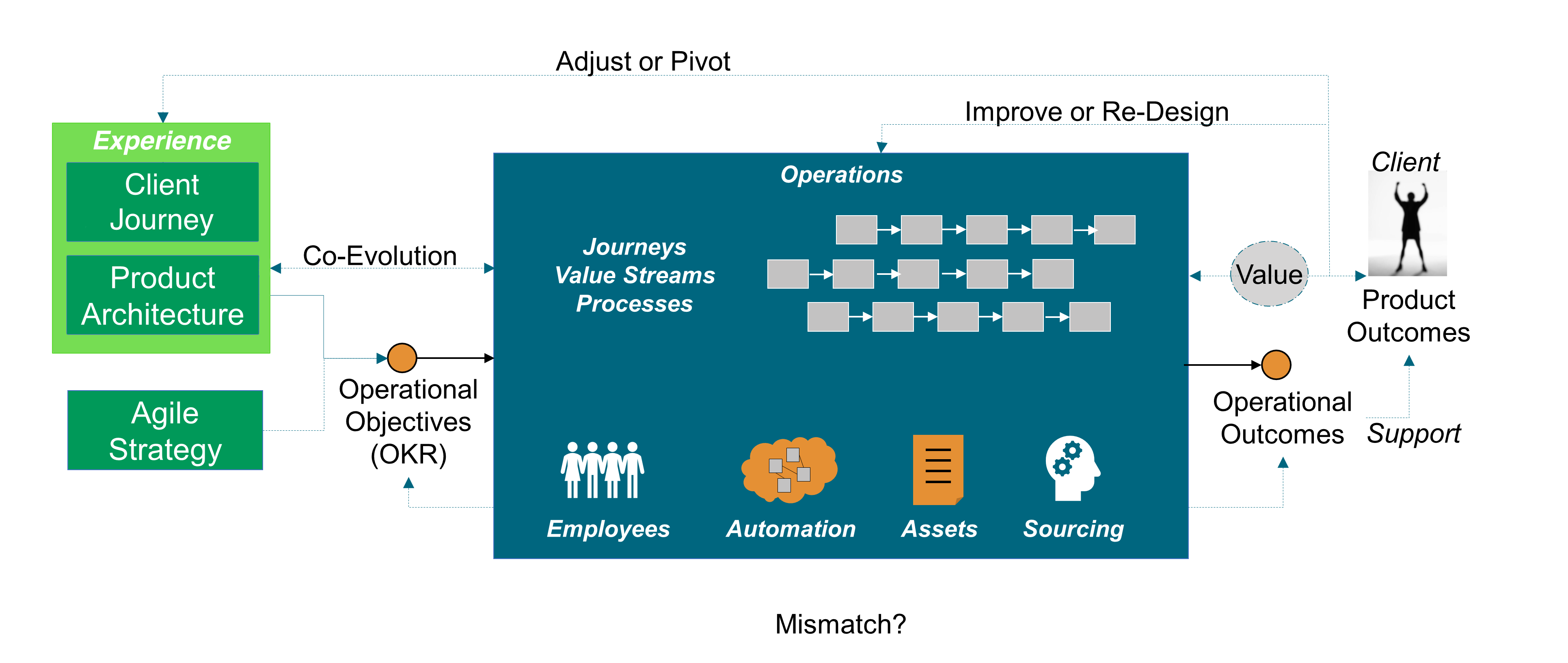

As shown in Figure 6, the O-AA development is structured along two axes that represent:

-

The function-form axis: What the Enterprise “Does”, What the Enterprise “Is”

-

The perspective axis: Experience Perspective, Work System Perspective, Technical System Perspective

The “Experience Perspective” is in the problem space, while the “Work System Perspective” and the “Technical System Perspective” are in the solution space.

The O-AA building blocks are positioned along the two axes, with the exception of: “Strategy”, “Value”, and “Data/Information & AI”.
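As a rough illustration of the two axes, the sketch below encodes them as enumerations and derives the problem or solution space from a building block's perspective. The type and function names are hypothetical, and the placement of “Journey Mapping” under the Experience Perspective is an assumption made for the example; Figure 6 remains the authoritative positioning.

```python
from enum import Enum
from typing import NamedTuple, Optional


class FunctionForm(Enum):
    DOES = "What the Enterprise Does"
    IS = "What the Enterprise Is"


class Perspective(Enum):
    EXPERIENCE = "Experience Perspective"
    WORK_SYSTEM = "Work System Perspective"
    TECHNICAL_SYSTEM = "Technical System Perspective"


# Experience is in the problem space; the other two are in the solution space.
SPACE = {
    Perspective.EXPERIENCE: "problem",
    Perspective.WORK_SYSTEM: "solution",
    Perspective.TECHNICAL_SYSTEM: "solution",
}

# Building blocks named in the text as exceptions to the two-axis positioning.
UNPOSITIONED = frozenset({"Strategy", "Value", "Data/Information & AI"})


class Position(NamedTuple):
    function_form: FunctionForm
    perspective: Perspective


def space_of(block: str, grid: dict[str, Position]) -> Optional[str]:
    """Return 'problem' or 'solution' for a positioned block, None for exceptions."""
    if block in UNPOSITIONED:
        return None
    return SPACE[grid[block].perspective]


# Assumed placement, for illustration only.
grid = {"Journey Mapping": Position(FunctionForm.DOES, Perspective.EXPERIENCE)}
print(space_of("Journey Mapping", grid))  # problem
print(space_of("Strategy", grid))         # None
```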

The “Corporate Brand Identity” and “Corporate Culture” building blocks influence what the enterprise does.

4.2.1. Strategy

Michael Porter recommends distinguishing operational effectiveness from strategy. Strategy is about developing sustainable differentiation based upon strategic positioning.

“Strategic positioning means performing different activities from rivals' or performing similar activities in different ways.” [Porter 1996]

Porter’s strategy formulation helps to connect strategic intent with execution. Mapping activity systems helps to verify that activities are consistent with one another and to identify where they reinforce each other.

Strategy formulation is also influenced by the Agile culture, which insists on the importance of experimentation. Strategy is seen as a set of strategic assumptions that need to be experimentally verified. Strategy professor Adam Brandenburger observes: “the assumptions underlying your business model are embedded in all your processes”. Therefore, he recommends challenging these assumptions when formulating strategy [Brandenburger 2019]:

-

Precisely identify the assumptions that underlie conventional thinking in your company or industry

-

Think about what might be gained by proving one or more of them false

-

Deliberately disturb an aspect of your normal work pattern to break up ingrained assumptions

The digital era has seen the rise in popularity of the concept of the business model. The words “strategy” and “business model” are sometimes used interchangeably. Peter Seddon and Geoffrey Lewis examine in detail the meaning of these two frequently used, and misused, terms. They argue that, as used by leading thinkers, the two terms might reasonably be interpreted as having roughly equivalent meanings [Seddon 2003].

A business model describes the rationale of how an organization creates, delivers, and captures value. It provides an analytical way of connecting strategy to execution. A business model has three components [Johnson 2008]:

-

A customer value proposition, which models how customers perform a specific “job” that alternative offerings do not address

-

A profit formula, which models how to generate value for the enterprise through factors such as revenue model, cost structure, margins, and inventory turnover

-

Key resources and processes, including the people, technology, products, facilities, equipment, and brand required to deliver the value proposition

Regardless of their quality, the odds that implementing the strategy and associated business model(s) will fail are high. Why?

“Culture eats strategy for breakfast.” (attributed to Peter Drucker)

In an HBR article, Donald Sull [Sull 2015] and his co-authors analyze why strategy execution unravels. They acknowledge that strategy alignment processes are fine; the issues are due to unreliable commitments from colleagues. Furthermore, strategic plans are just a set of assumptions that need to be verified.

“No plan survives first contact with the enemy.” (attributed to Helmuth von Moltke, Prussian military commander)

Other strategy execution issues are:

-

Strategy is often not well understood, even at the executive level

-

Past performance is over-valued at the expense of experimentation and agility

-

Execution should be driven by leaders who know the field, which is rarely the case when it is driven only by top executives

4.2.2. Corporate Brand Identity, Culture

A clear and unified corporate identity can be critical to competitive strategy. It serves as a “north star providing direction and purpose” [Greyser 2019]. Corporate brand identity starts from the enterprise mission and vision. It must be consistent with the culture and capabilities of the enterprise.

Jez Frampton believes that great brands are “business strategy brought to life” [Wind 2016]. The authors of Beyond Advertising: Creating Value Through All Customer Touchpoints point out that brands have never had more ways to reach and engage people. The image of the brand is now the result of the experience of people across all channels.

In a digital world, brand experience depends on the effective bridging of the enterprise strategy with its operating model. Marketing can no longer manage brands in silos, relying on classical marketing and advertising tools. Marketing people must now become members of multi-disciplinary teams that manage brand experience holistically.

4.2.3. Value

Agile architecting borrows concepts from Value Engineering (VE), which was pioneered by L.D. Miles from General Electric®. VE is an approach directed at “analyzing the function of systems, equipment, facilities, services, and supplies for the purpose of achieving their essential functions at the lowest lifecycle cost consistent with required performance, reliability, quality, and safety” [Mandelbaum 2006].

In the digital age, value cannot be reduced to functional benefits. Value definition includes the emotional dimension; for example, reducing anxiety, providing fun, or a sense of affiliation.

The central idea is to provide benefits to clients and other stakeholders at the lowest reasonable cost. The value of a product can be increased either by maintaining its functions and features while reducing its cost, or by keeping the cost constant while increasing the functionality of the product.
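The two levers described above can be summarized with a shorthand that is common in the VE literature. It is offered here as an illustrative assumption, not as a formula defined by this document; the symbols V (value), F (function delivered), and C (lifecycle cost) are introduced only for this sketch:

```latex
V = \frac{F}{C}
```

Under this reading, holding F constant while reducing C raises V, and holding C constant while increasing F also raises V. The ratio captures only functional benefits; the emotional dimension of value mentioned earlier is not reflected in it.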

4.2.4. Perspectives

The Experience perspective defines value from a client perspective. It analyzes a client’s job-to-be-done, pain points, and gains. It also covers the emotional dimension of the experience, starting from an outside-in view that places customer needs and problems at the center. Design thinking and market research are incorporated into the broader Agile architecting discipline.

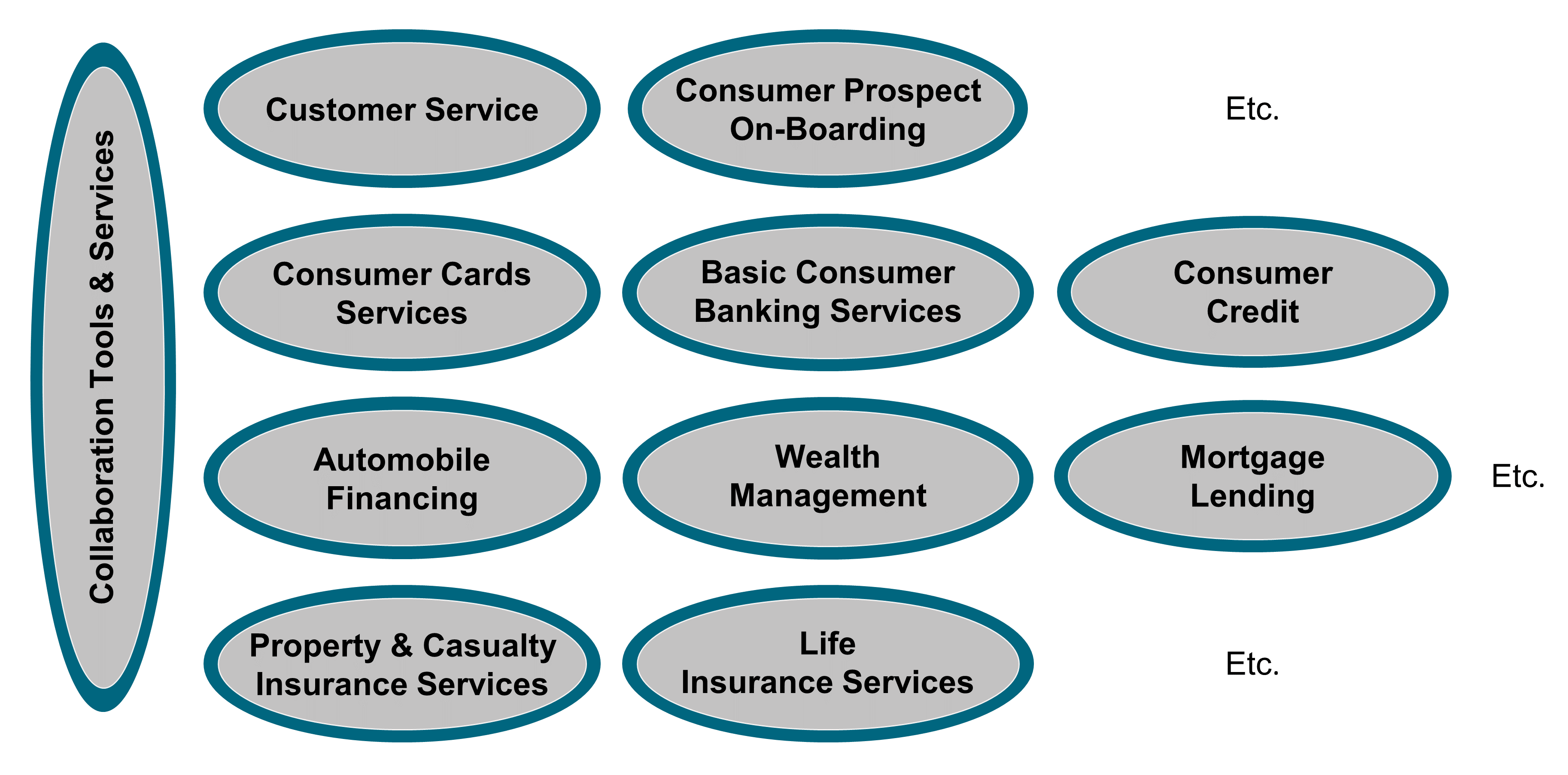

The Work System perspective defines the ability of the enterprise to deliver client benefits efficiently. It starts with analyzing commonalities across value streams; this helps to identify activities that could be shared, thus contributing to architecting the operating model.

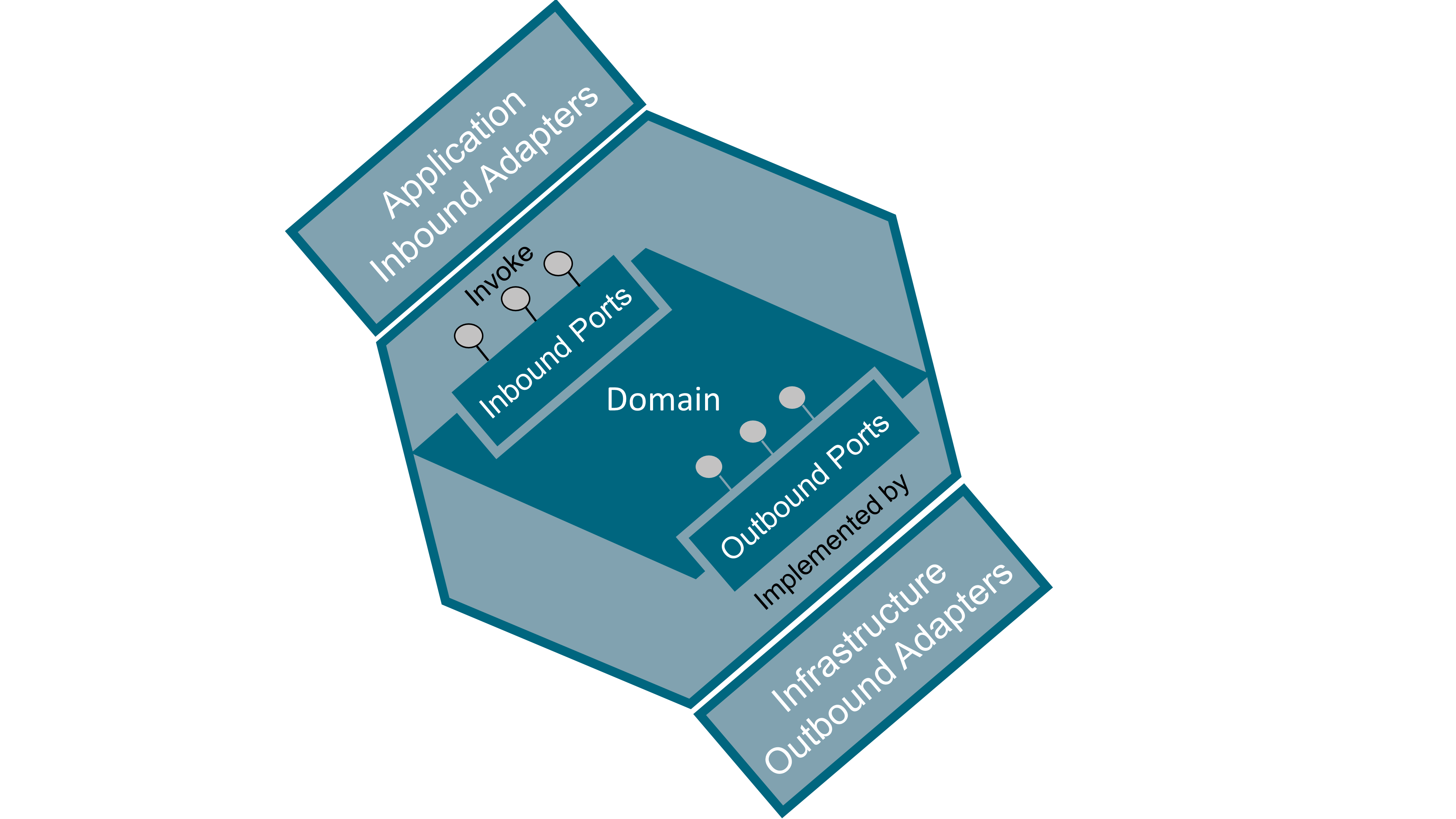

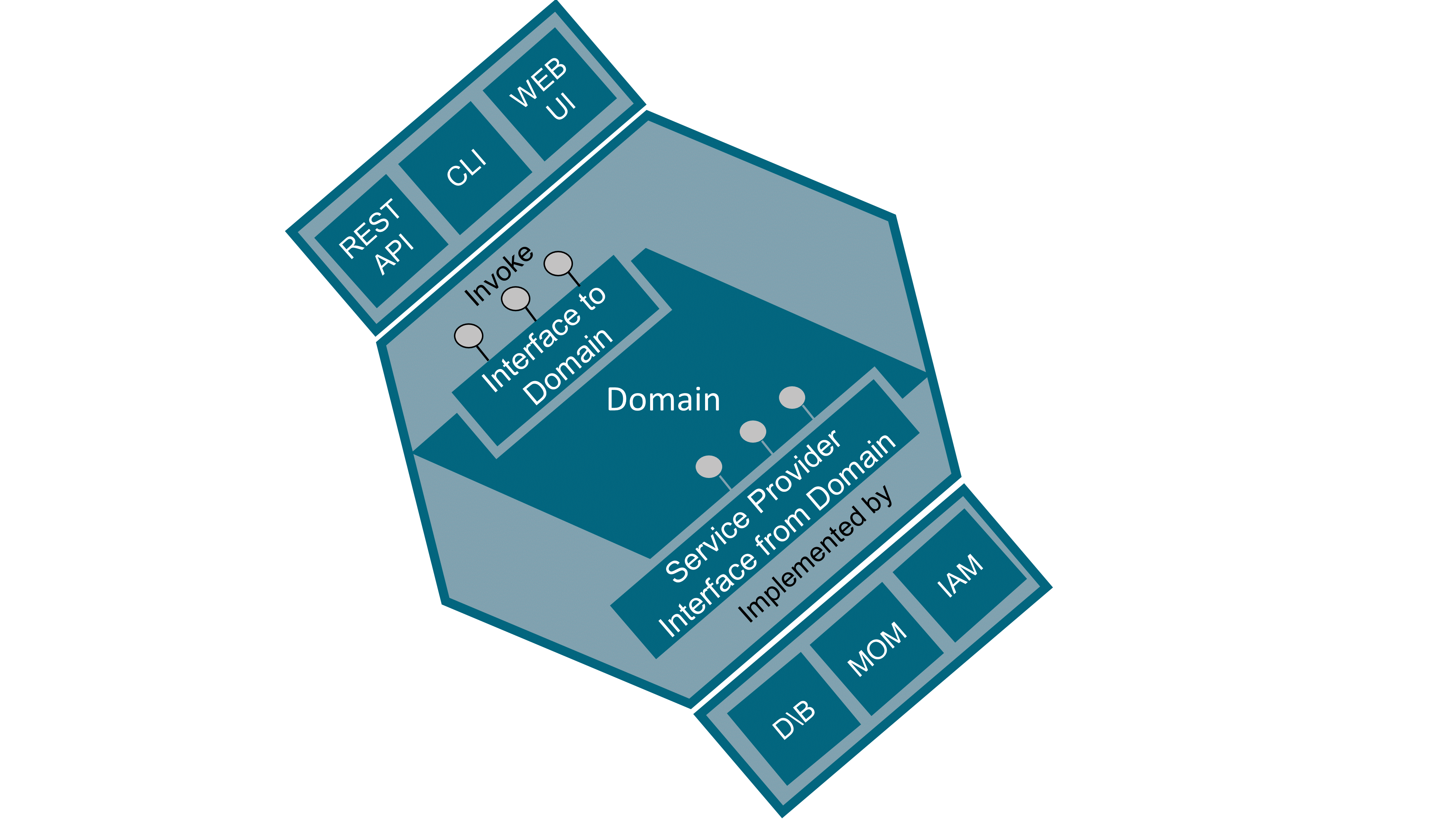

The Technical System perspective covers the software and hardware parts of the enterprise. It starts from analyzing domains such as payments, mortgage lending, or federated identity management and also includes architecting the physical world. The software architecture discipline is part of the broader Agile architecting discipline.

4.2.5. What the Enterprise “Is”

Analyzing what the enterprise “is” starts by defining the boundaries that connect:

-

The enterprise to its environment

-

The enterprise parts to each other and to the environment

Defining boundaries covers:

-

Product Architecture, which is driven by the questions:

-

How should products be broken down into components?

-

Which interfaces should link product components?

-

Which modularity–integrality trade-off is appropriate?

-

Which product platform, if any, would bring value?

-

Operations Architecture, which is driven by the questions:

-

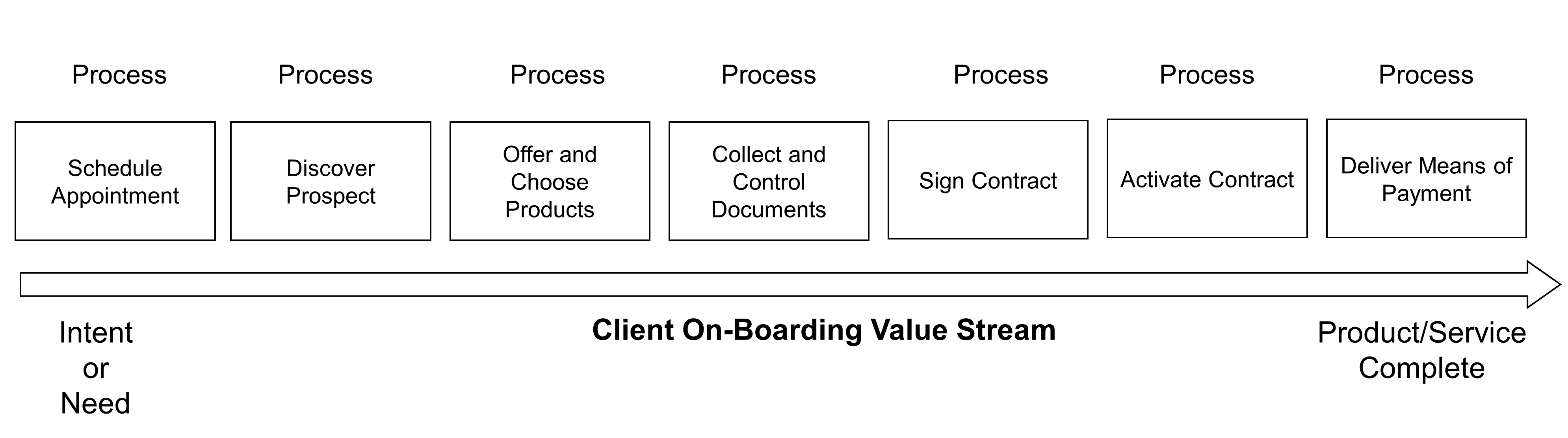

What are the key value streams and processes of your operations; and are they fit-for-purpose and efficient?

-

Are economies, skills, and scale being leveraged?

-

What is the platform strategy, if any?

-

Are the right resources, skills, and technologies deployed in the right facilities and locations?

-

Organization, which is driven by the questions:

-

What is the right organizational structure and culture?

-

How should organizational levels be defined, and how should the group, entity, team, and team of teams levels be articulated?

-

How should authority, responsibility, and accountability be distributed?

-

Which teams and teams of teams should be stream-aligned?

-

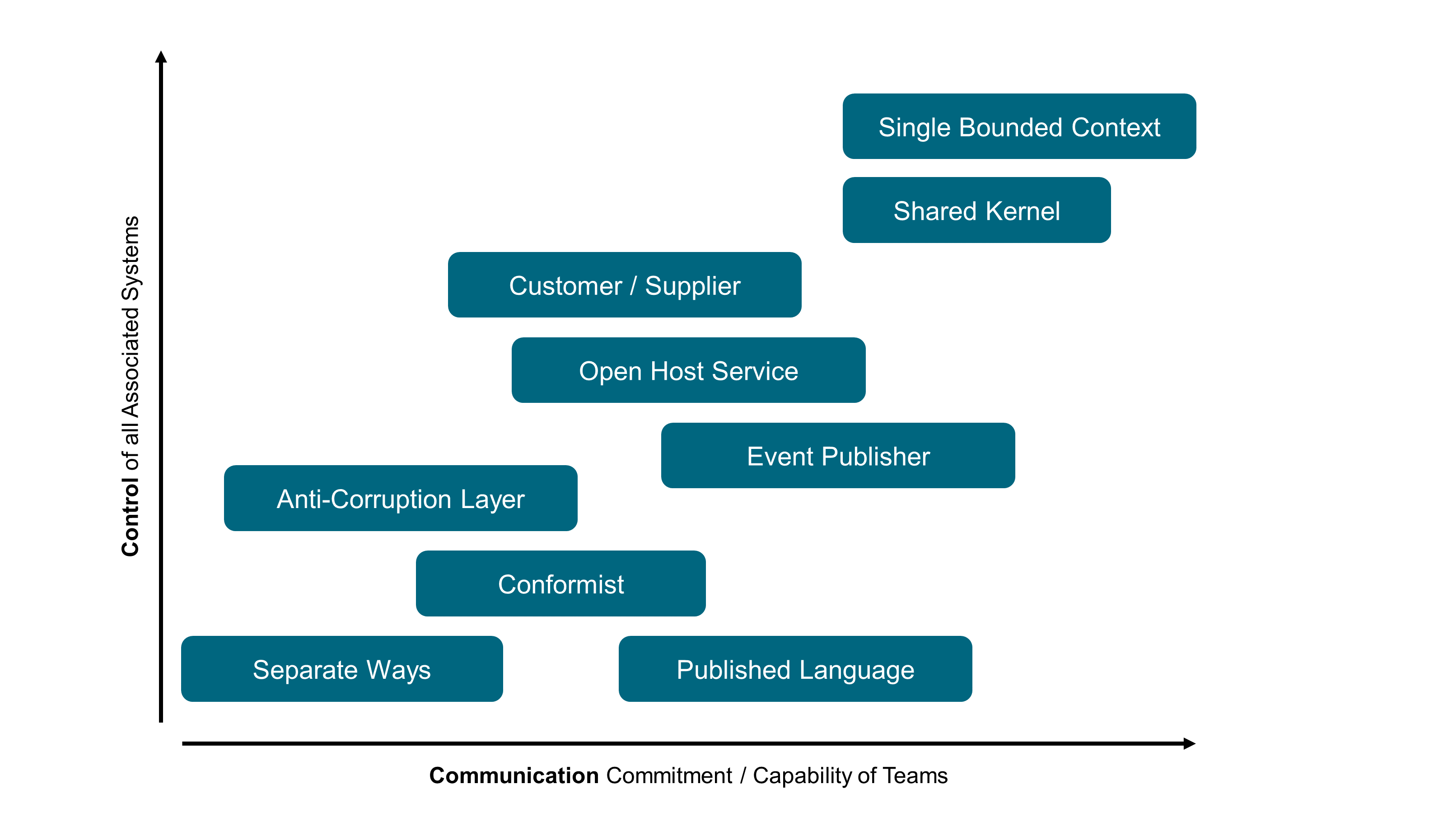

Domain-Driven Design segmentation, which is driven by the questions:

-

What are the enterprise domains and sub-domains?

-

How should sub-domains be decomposed into bounded contexts?

-

What are the upstream/downstream dependencies that link bounded contexts?

-

How can dependencies between bounded contexts be minimized?

When modeling the enterprise as a Complex Adaptive System (CAS), architects should pay attention to leveling, boundaries, dependencies, and interactions: a CAS “is characterized by intricate hierarchical arrangements of boundaries and signals” [Holland 2012]. The definition of levels, boundaries, and interactions is a lever used in Agile Architecture to influence the evolution of the enterprise.
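The questions about bounded contexts and their upstream/downstream dependencies can be made concrete with a small sketch. The context names and the links between them are hypothetical, chosen only to illustrate the idea, and the functions `downstream_of` and `coupling` are not part of any Domain-Driven Design library:

```python
from collections import defaultdict

# Hypothetical bounded contexts and (upstream, downstream) links.
# These names and relationships are illustrative assumptions only.
DEPENDENCIES = [
    ("Identity", "Payments"),
    ("Identity", "Mortgage Lending"),
    ("Payments", "Mortgage Lending"),
]


def downstream_of(dependencies):
    """Map each upstream context to the set of contexts that depend on it."""
    result = defaultdict(set)
    for upstream, downstream in dependencies:
        result[upstream].add(downstream)
    return dict(result)


def coupling(dependencies):
    """Count, per context, how many distinct upstream contexts it depends on."""
    counts = defaultdict(int)
    for _upstream, downstream in set(dependencies):
        counts[downstream] += 1
    return dict(counts)
```

A context with a high `coupling` count is a candidate for review when trying to minimize dependencies between bounded contexts, although the count alone says nothing about how costly each dependency is.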

4.2.6. What the Enterprise “Does”

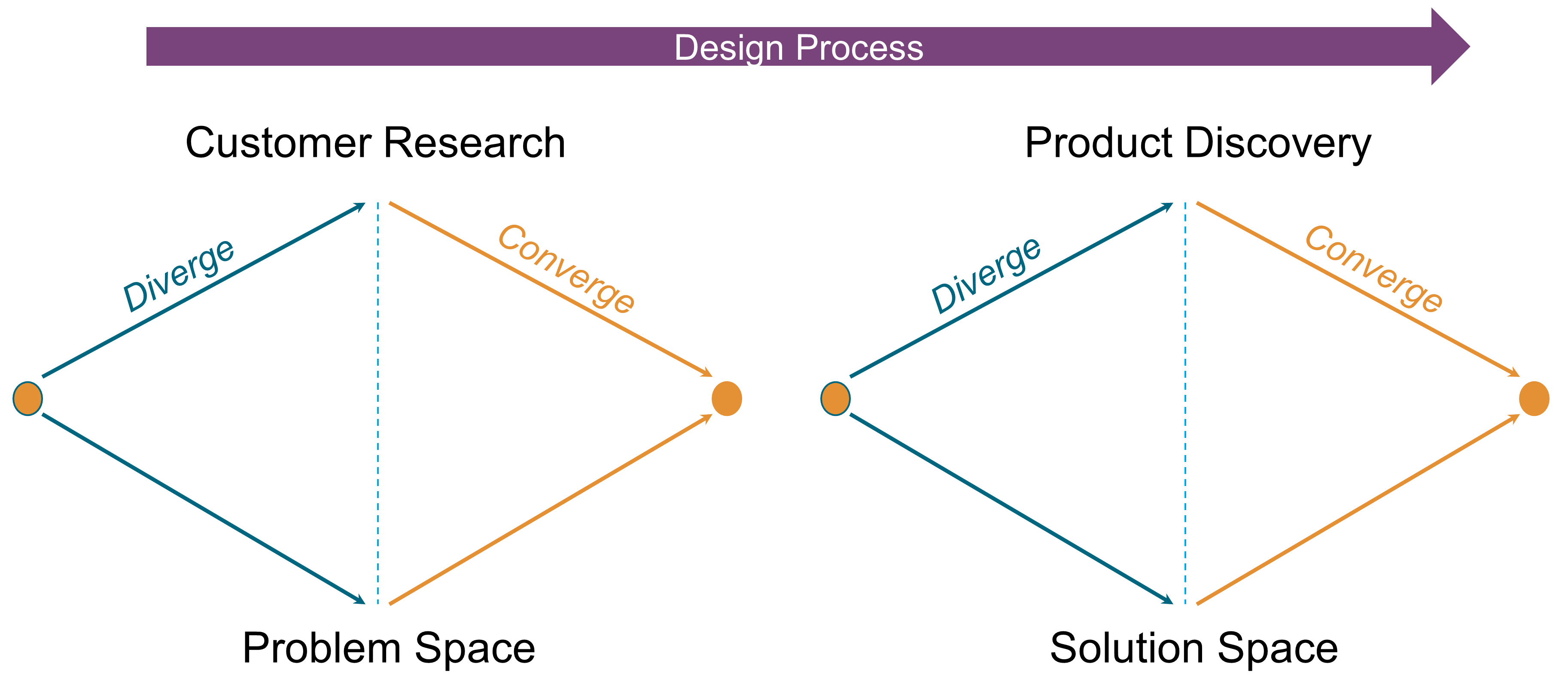

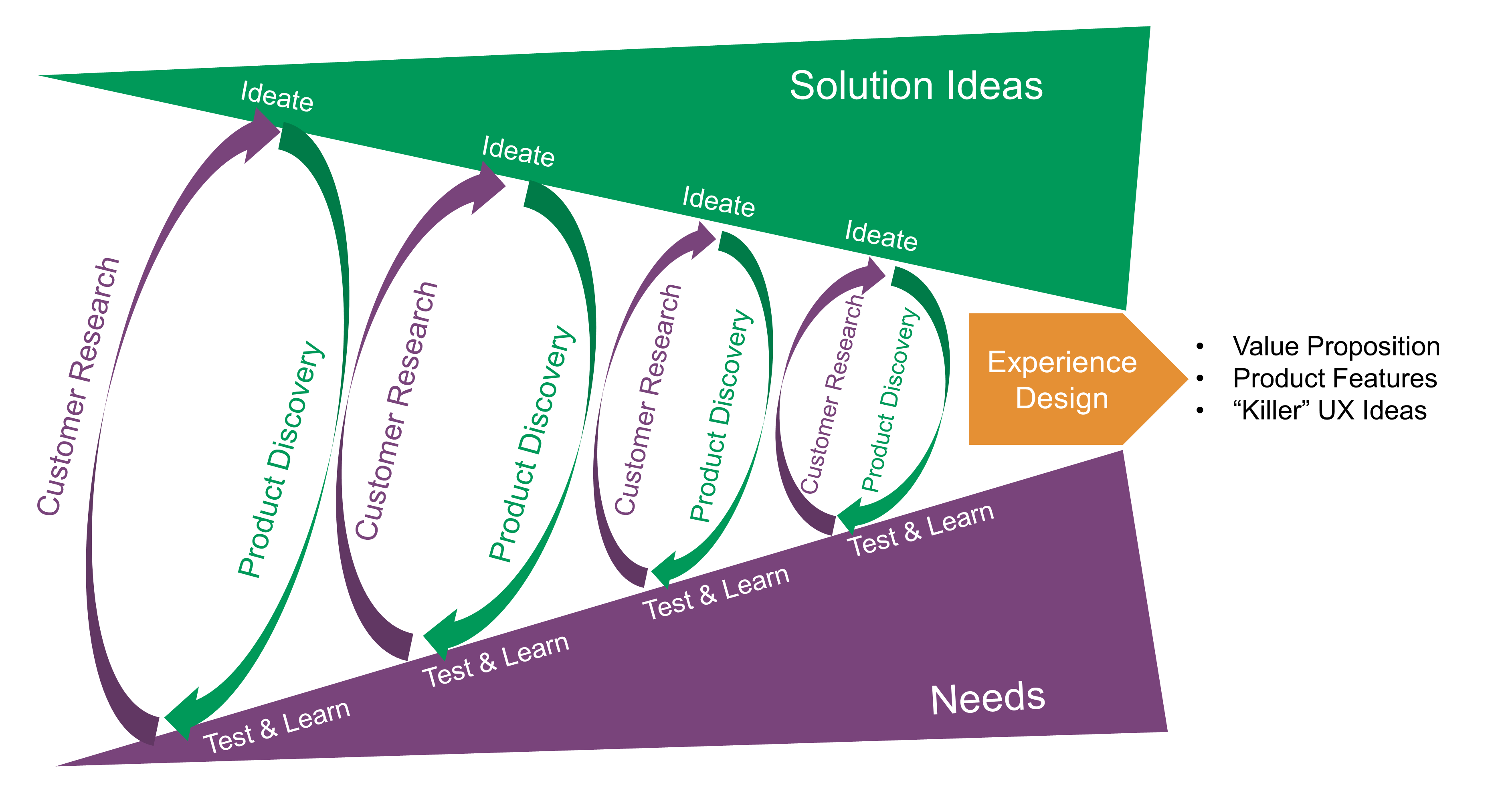

Experience Design combines customer research and product discovery during a set of design thinking iterations. This is not a linear process; it alternates between divergent and convergent thinking. Customer research borrows from marketing research, anthropology, and design thinking to capture and analyze customer needs and desires. Product discovery applies divergent and convergent thinking in the solution space to discover which products and features could satisfy customers. Customer research and product discovery apply outside-in thinking and aim at framing problems and solutions through the lens of the customer.

Journey Maps bridge outside-in thinking with inside-out thinking by defining which activities deliver the experience customers expect.

Value Streams complement the outside-in view by representing all the activities, both value and non-value creating, required to bring a product from concept to launch (also known as the development value stream) and from order to delivery or provisioning (also known as the operational value stream). More detail about value streams can be found in Section 2.49.
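As a rough illustration of the distinction between value-creating and non-value-creating activities, the sketch below models an operational value stream as a list of activities and computes the share of elapsed time spent creating value. The activity names, durations, and the `value_share` measure are assumptions made for this example; they are not defined by this document:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Activity:
    name: str
    hours: float          # elapsed time spent in this activity
    creates_value: bool   # True if the customer would recognize it as value


# Hypothetical order-to-delivery value stream (illustrative figures only).
ORDER_TO_DELIVERY = [
    Activity("Capture order", 1.0, True),
    Activity("Wait for credit check", 6.0, False),
    Activity("Pick and pack", 2.0, True),
    Activity("Wait for carrier", 3.0, False),
]


def value_share(activities):
    """Return the fraction of total elapsed time spent in value-creating activities."""
    total = sum(a.hours for a in activities)
    if total == 0:
        return 0.0
    valued = sum(a.hours for a in activities if a.creates_value)
    return valued / total
```

With these figures, 3 of the 12 elapsed hours create value, so `value_share(ORDER_TO_DELIVERY)` returns 0.25. Making the non-value-creating steps explicit is what allows a team to question them.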

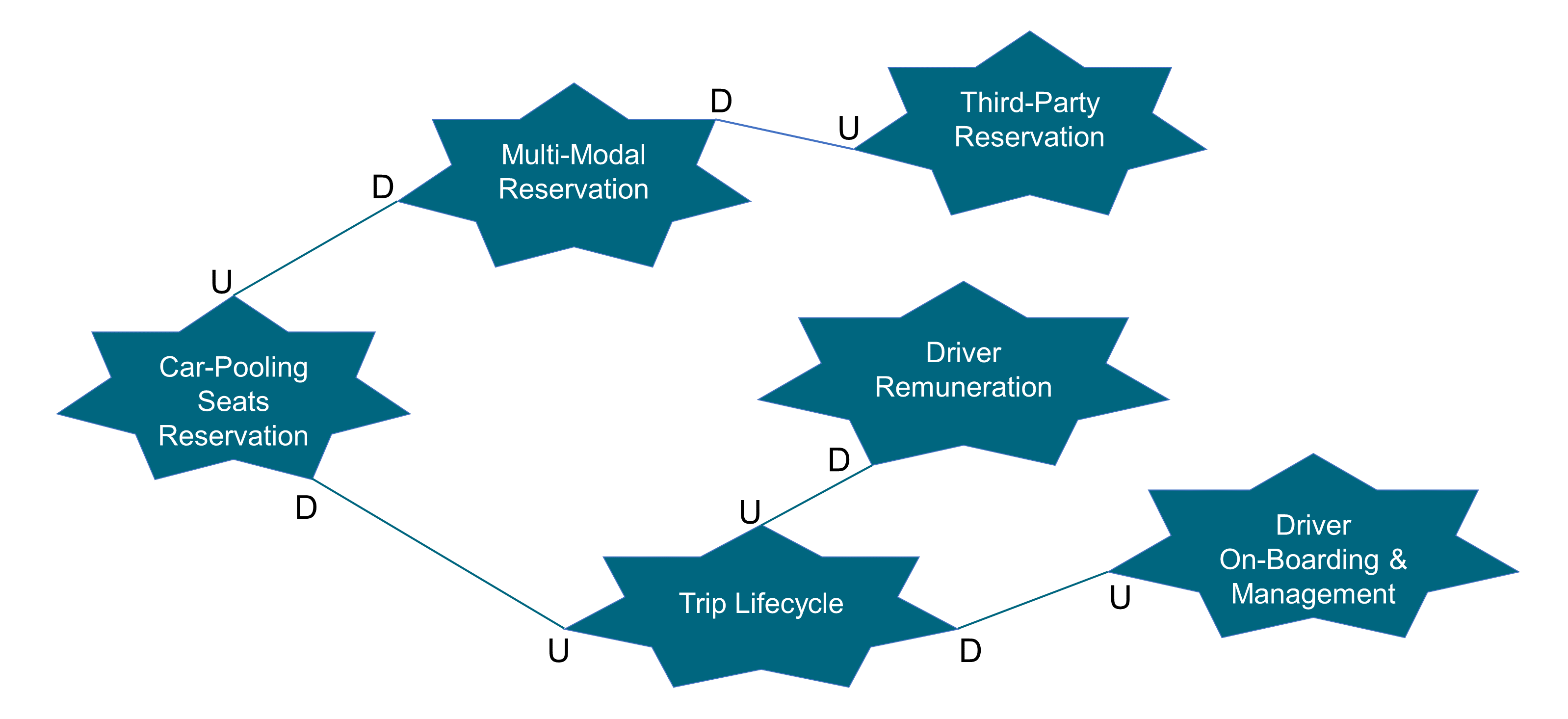

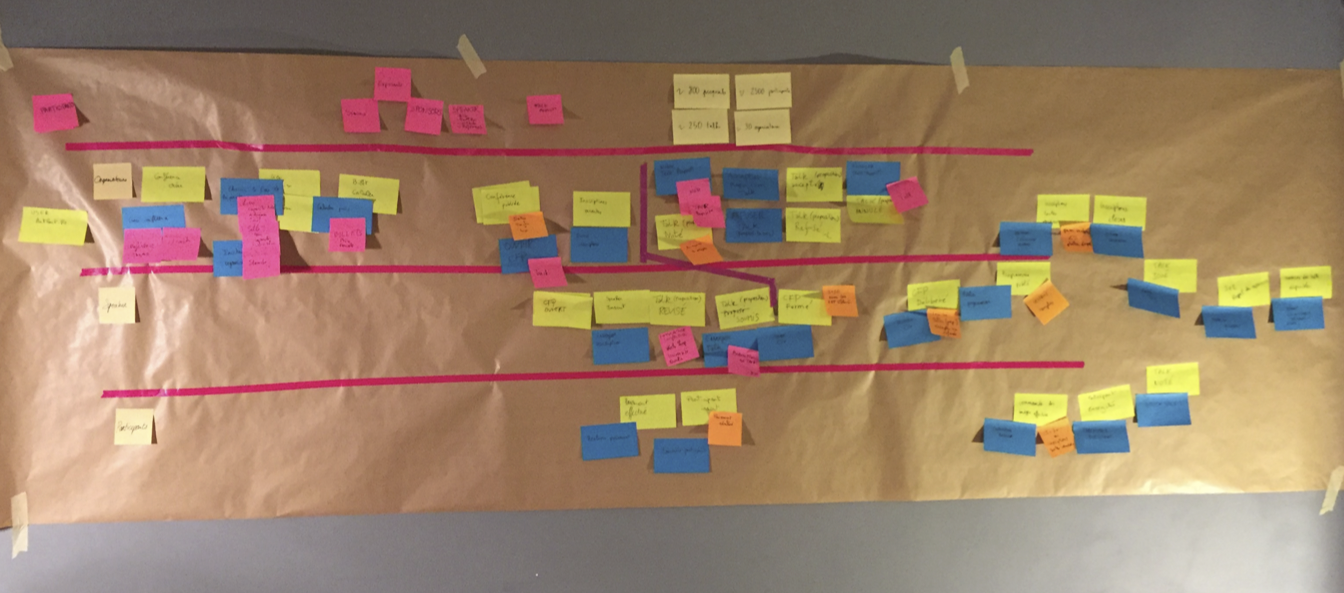

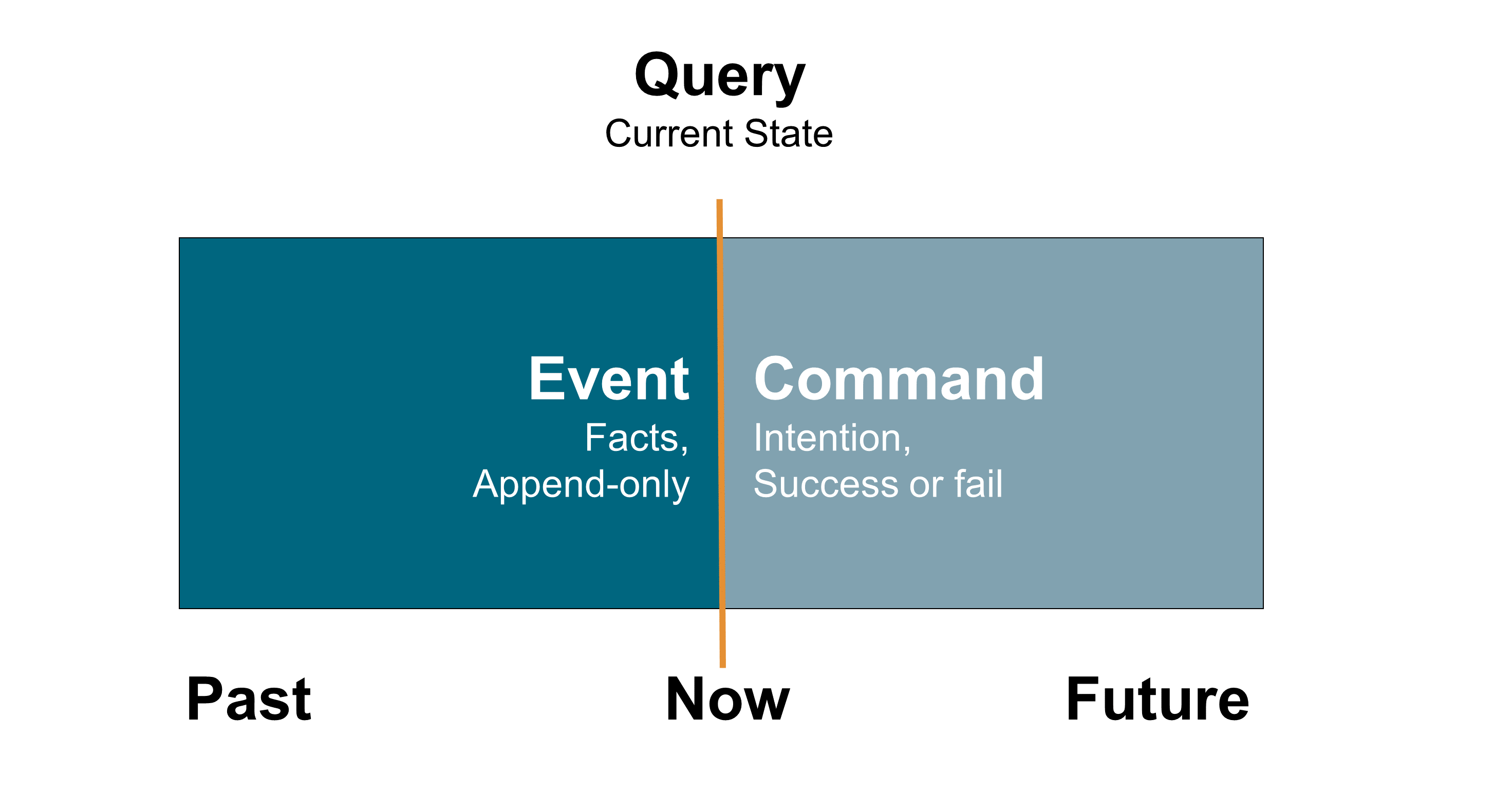

Event Storming helps Agile teams to explore the domain. The identification of domain events, commands, personas, or entities facilitates a structured conversation about the domain. The participants of an event storming workshop share knowledge of the domain to establish a common vocabulary or ubiquitous language. Domain events help to identify boundaries. Commands help to identify responsibilities to be allocated to services or components.
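The output of an event storming workshop can be captured in a very small data model. The sketch below is one possible representation, not a prescribed notation: the `Command` and `DomainEvent` types, the persona and service names, and the `responsibilities` helper are all hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    name: str
    issued_by: str    # persona who issues the command
    handled_by: str   # candidate service or component responsible for it


@dataclass(frozen=True)
class DomainEvent:
    name: str          # past tense, taken from the ubiquitous language
    triggered_by: str  # name of the command that produces the event


# Hypothetical workshop output for a mortgage lending domain.
COMMANDS = [
    Command("Submit Application", "Borrower", "Application Service"),
    Command("Withdraw Application", "Borrower", "Application Service"),
    Command("Approve Loan", "Underwriter", "Underwriting Service"),
]
EVENTS = [
    DomainEvent("Application Submitted", "Submit Application"),
    DomainEvent("Application Withdrawn", "Withdraw Application"),
    DomainEvent("Loan Approved", "Approve Loan"),
]


def responsibilities(commands):
    """Group command names by the candidate service that would handle them."""
    grouped = {}
    for command in commands:
        grouped.setdefault(command.handled_by, []).append(command.name)
    return grouped
```

Calling `responsibilities(COMMANDS)` groups the two application commands under one candidate service and the approval command under another, which mirrors how commands help to allocate responsibilities to services or components.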

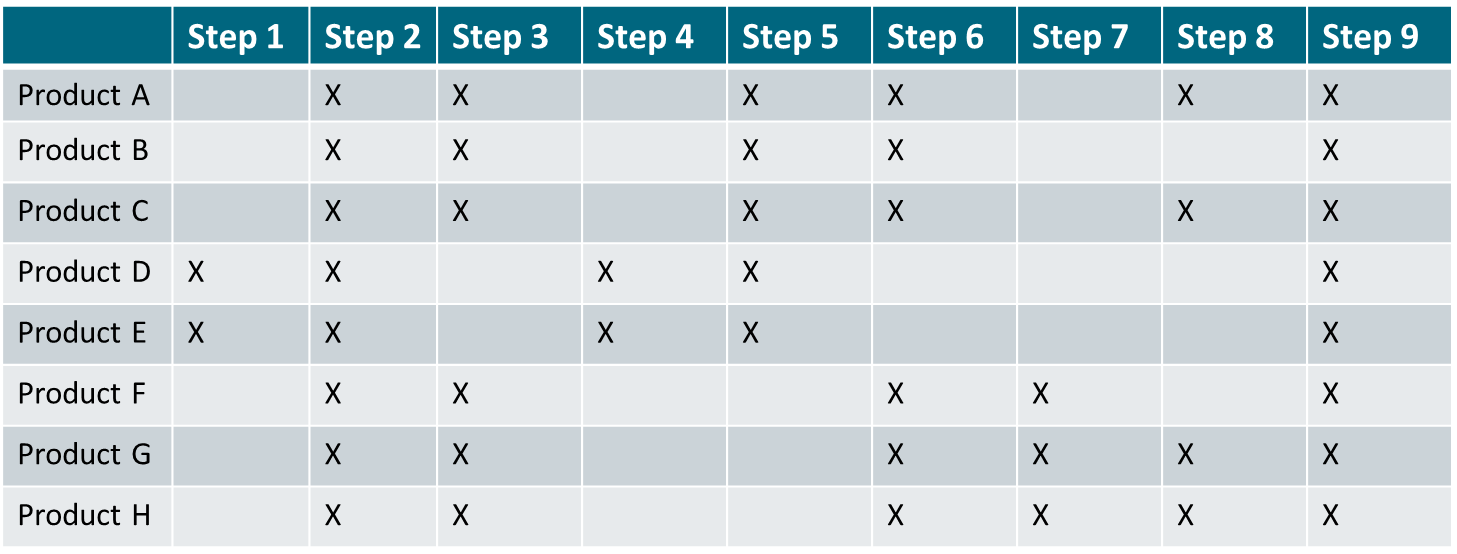

Readers who would like to zoom in on building blocks can read Chapter 10 and use Table 2.

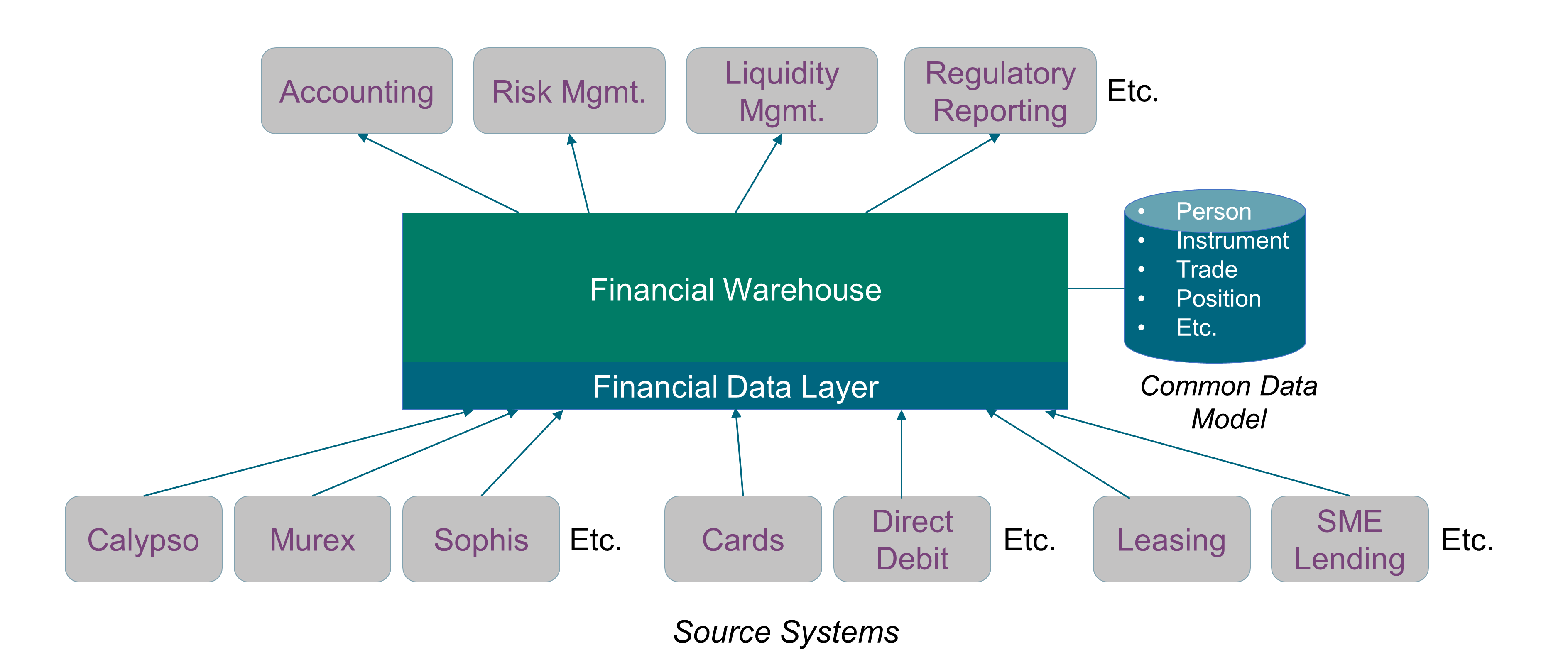

4.3. Data, Information, and Artificial Intelligence

The digital enterprise collects and processes vast amounts of data. Data describes a portion of “reality” that is of interest to the enterprise. Data becomes information when it is interpreted, when it has meaning. Data can be interpreted by humans or algorithms. Artificial Intelligence (AI) transforms data into predictions, prescriptions, and automation capabilities.

The combination of data and AI is having a transformational impact on industries. For example, predictive maintenance can improve service quality while lowering costs. Vast numbers of images can teach machines how to recognize faces or interpret chest x-rays.

Architecting systems that handle data at scale is critical. Non-functional requirements, such as compliance (e.g., privacy) or security requirements, influence Data Architecture decisions. The move from batch processing to real-time data flows is having a profound impact on Data Architecture.

4.4. Software and Hardware Architecture

“Software is eating the world.”

Marc Andreessen explained in a famous Wall Street Journal article why software is eating the world [Andreessen 2011]. The rapid evolution of software technology has fueled the growth of digital business. Following the lead of Internet giants, some enterprises from the old economy are framing themselves as tech companies; for example, Banco Bilbao Vizcaya Argentaria (BBVA): “If you want to be a leading bank, you have to be a technology company”.

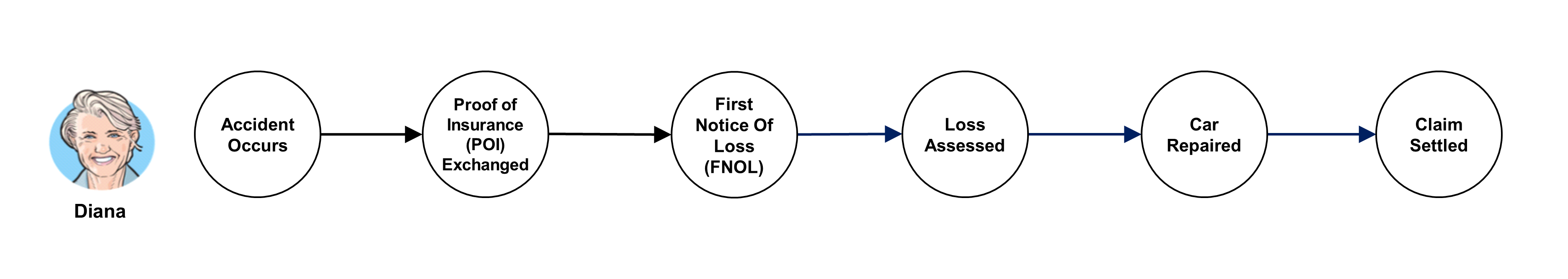

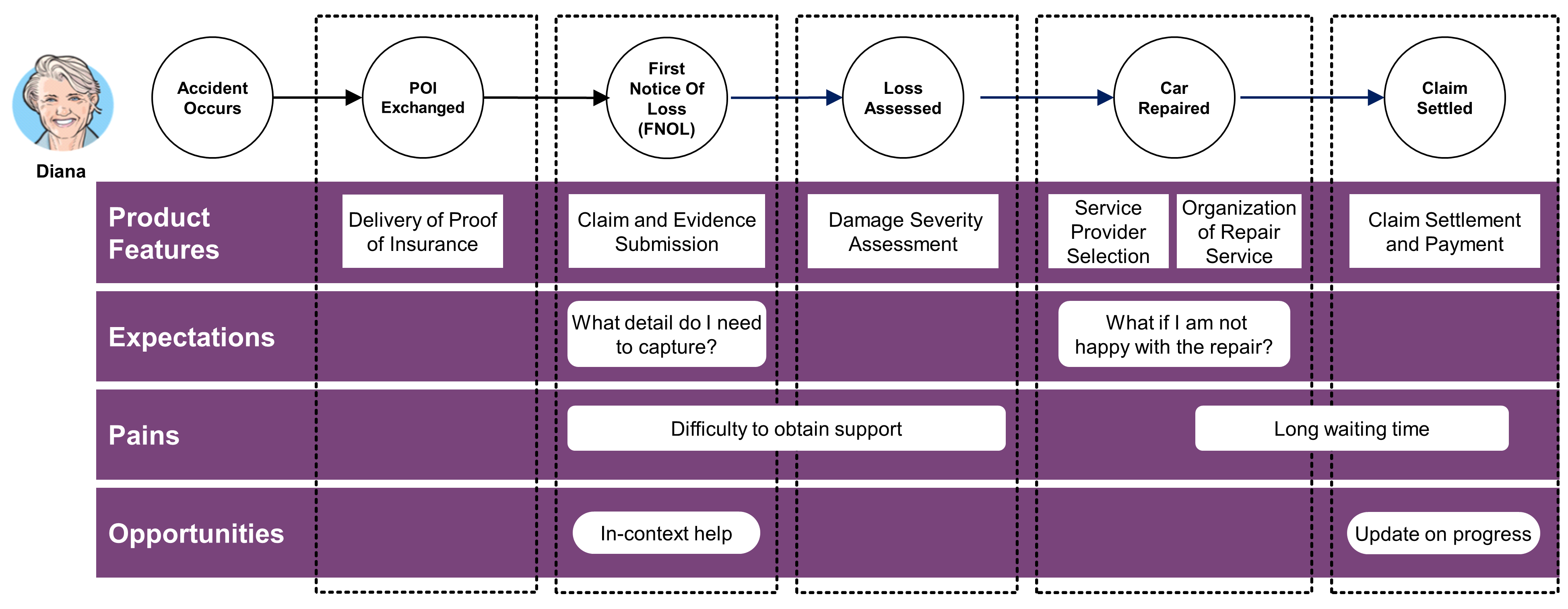

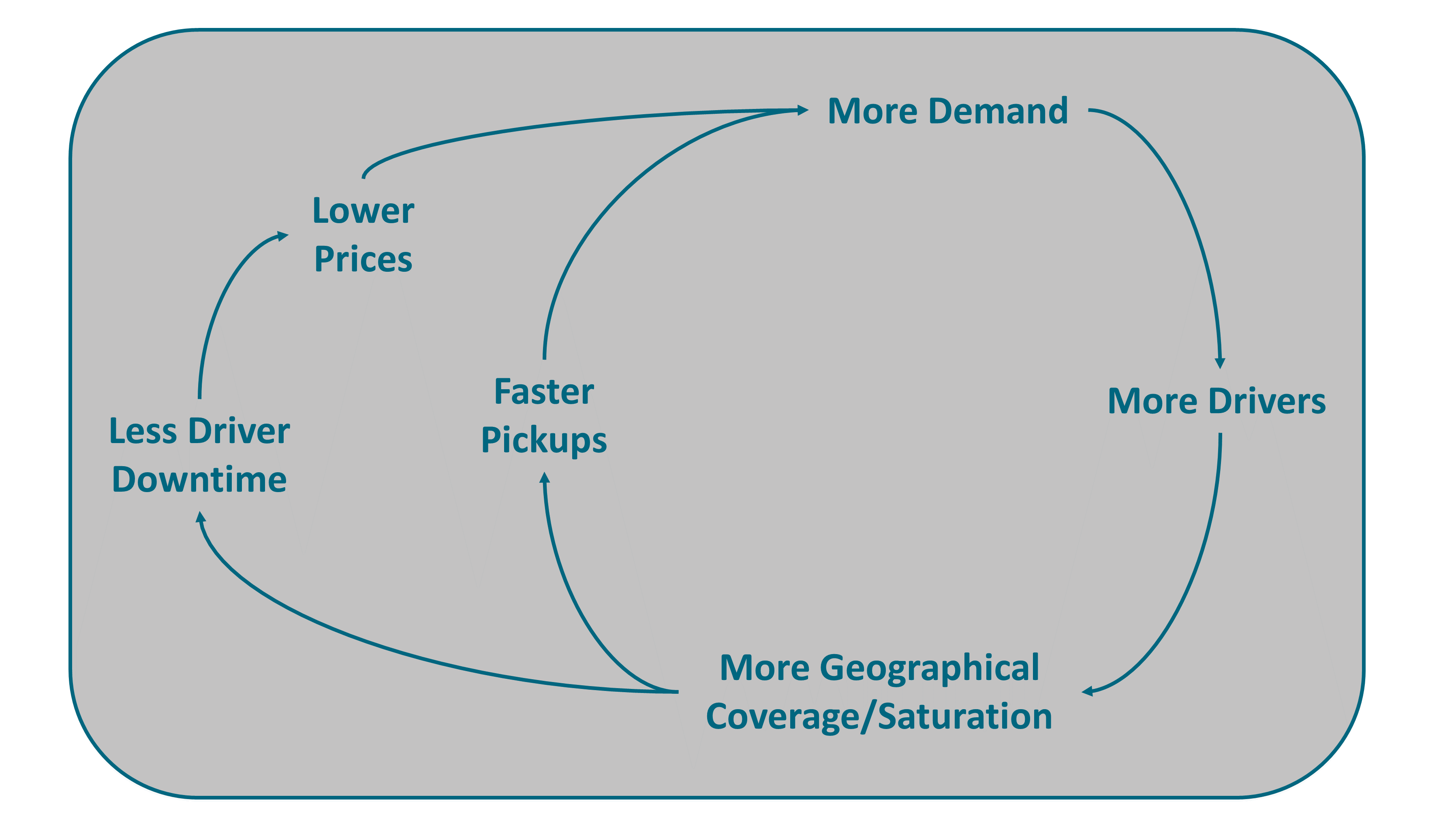

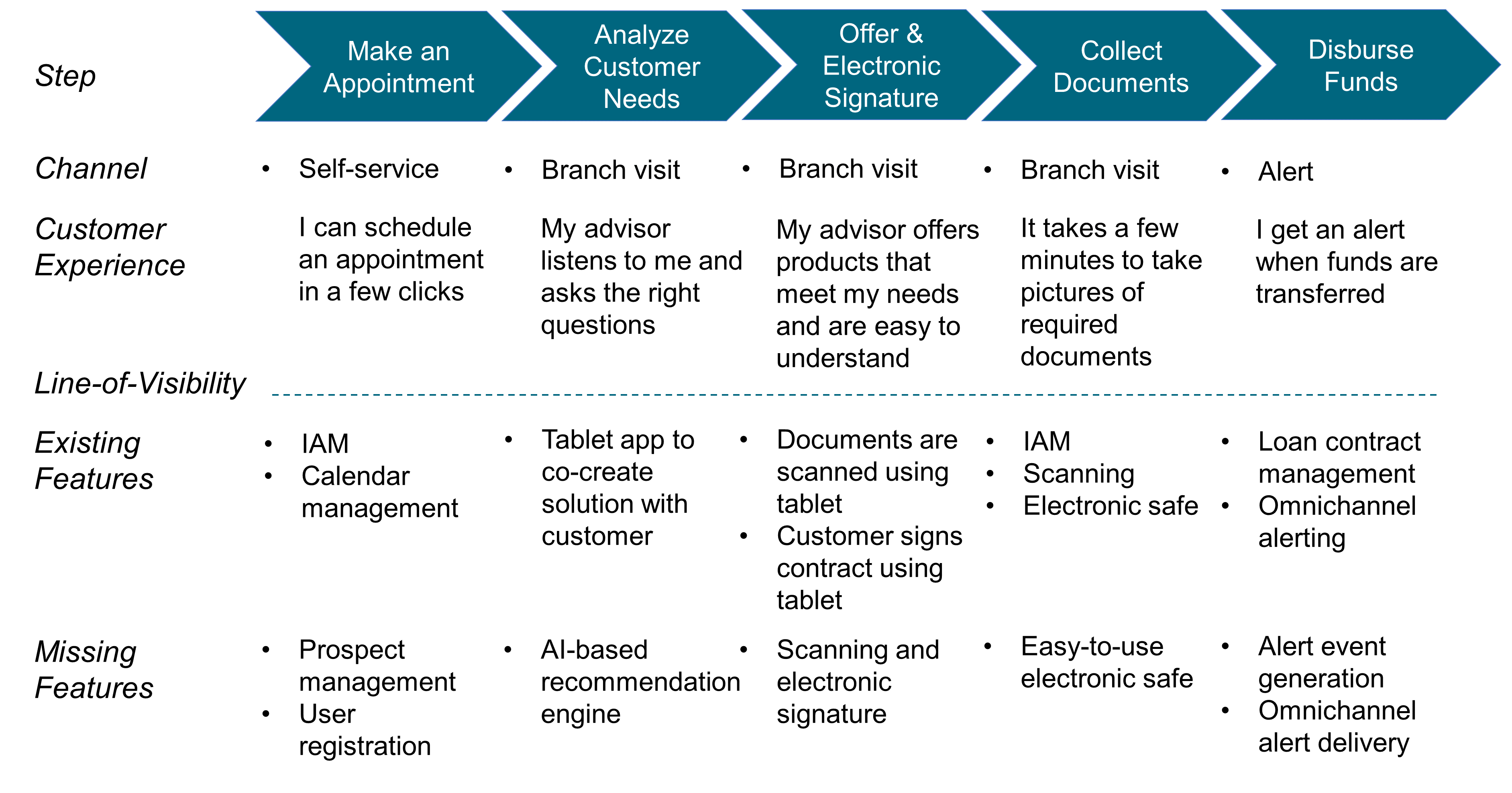

In 2002, Amazon™ was facing a complexity barrier. The size of its home page reached 800MB and it took 8 to 12 hours to compile. Jeff Bezos issued a mandate that profoundly changed the way software is created and how the enterprise is organized.