Digital Practitioner Body of Knowledge™ Standard

A Standard of The Open Group

Copyright © 2019-2020, The Open Group

All rights reserved.

No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior permission of the copyright owners.

Any use of this publication for commercial purposes is subject to the terms of the Annual Commercial License relating to it. For further information, see www.opengroup.org/legal/licensing.

The Open Group Standard

Digital Practitioner Body of Knowledge™ Standard

Document Number: C196

ISBN: 1-947754-33-1

Published by The Open Group, January 2020.

Comments relating to the material contained in this document may be submitted to:

The Open Group, Apex Plaza, Forbury Road, Reading, Berkshire, RG1 1AX, United Kingdom

or by electronic mail to:

ogspecs@opengroup.org

Built with asciidoctor, version 2.0.10. Backend: html5 Build date: 2020-01-02 06:47:02 UTC

Preface

The Open Group

The Open Group is a global consortium that enables the achievement of business objectives through technology standards. Our diverse membership of more than 700 organizations includes customers, systems and solutions suppliers, tools vendors, integrators, academics, and consultants across multiple industries.

The mission of The Open Group is to drive the creation of Boundaryless Information Flow™ achieved by:

- Working with customers to capture, understand, and address current and emerging requirements, establish policies, and share best practices

- Working with suppliers, consortia, and standards bodies to develop consensus and facilitate interoperability, to evolve and integrate specifications and open source technologies

- Offering a comprehensive set of services to enhance the operational efficiency of consortia

- Developing and operating the industry’s premier certification service and encouraging procurement of certified products

Further information on The Open Group is available at www.opengroup.org.

The Open Group publishes a wide range of technical documentation, most of which is focused on development of Standards and Guides, but which also includes white papers, technical studies, certification and testing documentation, and business titles. Full details and a catalog are available at www.opengroup.org/library.

This Document

This document is the Digital Practitioner Body of Knowledge™ Standard, a standard of The Open Group, also known as the DPBoK™ Standard. It has been developed and approved by The Open Group.

The high-level structure of the document is summarized as follows:

-

Chapter 1, Introduction includes the objectives and overview, conformance requirements, and terminology definitions

-

Chapter 2, Definitions includes the terms and definitions for this document

-

Chapter 3, Digital Transformation describes the key concept of Digital Transformation

-

Chapter 4, Principles of the DPBoK Standard describes the principles by which the document will evolve and be maintained, and how Digital Practitioner competencies will be defined

-

Chapter 5, Structure of the Body of Knowledge describes how the Body of Knowledge is structured

-

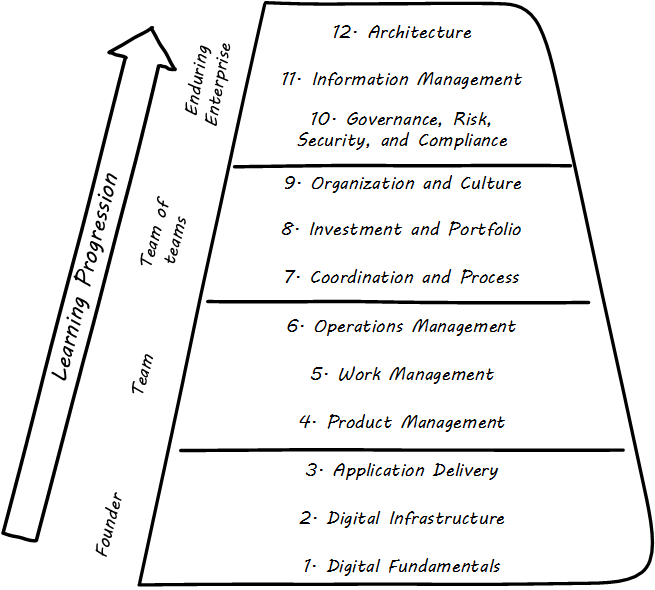

Chapter 6, The Body of Knowledge contains the Body of Knowledge, divided into four stages, called Contexts, which correspond to the stages of evolution of a digital practice. These stages are explained in the section on Context Summaries, and summarized as follows:

Context I: Individual/Founder

Foundational drivers of, and technical capabilities for, delivering digital value

Context II: Team

The critical product management, collaboration, and operational skills necessary for producing digital value

Context III: Team of Teams

Key capabilities for partitioning investments and ensuring coherence, alignment, and joint execution across multiple teams

Context IV: Enduring Enterprise

Steering, managing risk, and assuring performance at scale and over increasing time horizons and increasingly complex ecosystems

-

Appendices contains the list of abbreviations used in this document

Background and Intended Value of this Work

Applied computing, now popularly termed "digital technology", is transforming economies and societies worldwide. Digital investments are critical for modern organizations. Participating in their delivery (i.e., working to create and manage them for value) can provide prosperity for both individuals and communities. Computing programs worldwide are under pressure to produce an increasing number of qualified professionals to meet voracious workforce demand. And skill requirements have undergone a seismic shift over the past 20 years. Digital Practitioners require a wide variety of skills and competencies, including cloud architecture and operations, continuous delivery and deployment, collaboration, Agile and Lean methods, product management, and more.

Industry guidance has over the years become fragmented into many overlapping and sometimes conflicting bodies of knowledge, frameworks, and industry standards. The emergence of Agile [8] and DevOps [165] as dominant delivery forms has thrown this already fractured ecosystem of industry guidance into chaos. Organizations with longstanding commitments to existing bodies of knowledge are re-assessing those commitments. Changes in digital delivery are happening too fast for generational turnover to suffice.

Mid-career IT professionals, who still anticipate many more years in the workforce, are especially at risk. Learning the new "digital" approaches is not optional for them. But how to reconcile these new practices with the legacy "best practices" that characterized these workers' initial professional education? Now is the time to re-assess and synthesize new guidance reflecting the developing industry consensus on how digital and IT professionals should approach their responsibilities. Modern higher education is not keeping pace in these topics either. The gap between academic theory and classroom instruction on the one hand, and the day-to-day practices of managing digital products on the other, has been too wide.

The Digital Practitioner in today’s work environment thus encounters a confusing and diverse array of opinions and diverging viewpoints. This document aims to provide a foundational set of concepts for the practitioner to make sense of the landscape they find in any organization attempting to deliver digital products. It strives to put both old and new in a common context, with well-supported analysis of professional practice. Practically, it should be of value for both academic and industry training purposes.

In conclusion: this document is intended broadly for the development of the Digital Practitioner or professional. It seeks to provide guidance for both new entrants into the digital workforce and experienced practitioners seeking to update their understanding of how all the various themes and components of digital and IT management fit together in the new world.

Sources of Material

While this document draws from a wide variety of industry sources, there are two primary sources of material for this work.

The Forums of The Open Group

The Open Group has a number of different related programs of work that contributed substantially to the content of, and interest in, the DPBoK Standard (this document). The initial groundwork was laid by the Digital Business Customer Experience (DBCX) Work Group, which was the predecessor to the Digital Practitioners Work Group, the current maintainers of this document. In addition, this document is informed by and makes reference to other Forums of The Open Group, including:

-

The Architecture Forum

-

The Open Platform 3.0™ Forum

-

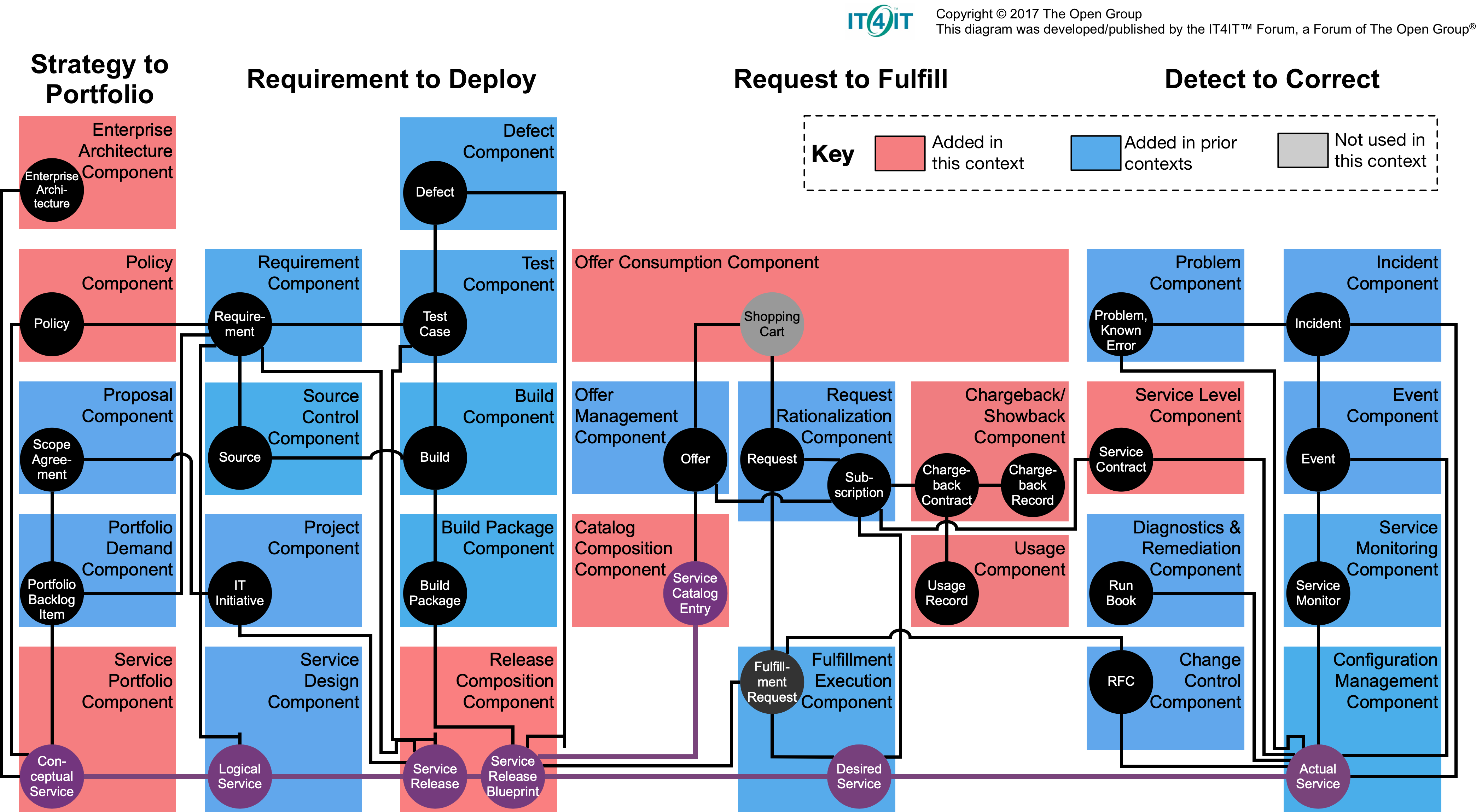

The IT4IT™ Forum

The University of St. Thomas

This work is in part derived from material developed by Charles Betz between 2014 and 2017 for use in teaching in the Graduate Programs in Software Engineering at the University of St. Thomas in St. Paul, Minnesota, USA, for SEIS 660 (IT Infrastructure Management), later replaced by SEIS 664 (Information Technology Delivery). Graduate Programs in Software at the University of St. Thomas offers Master's degrees in Software Engineering, Data Science, Information Technology, and Software Management. It is the largest program of its kind in the US and emphasizes rigorous, realistic preparation of practitioners. No suitable collegiate texts were available providing comprehensive survey coverage of the Digital/Lean/Agile transition and its impacts on IT management generally, so this material was developed collaboratively, incrementally, and iteratively via an open GitHub project over the course of three years.

Relationship to Prior Publications

The resulting textbook, Managing Digital: Concepts and Practices, was contributed by the author and published by The Open Group Press to serve as an experiment in collaborative, open source document development, and also to support worldwide distribution on a low/no-cost basis. That material is separate and distinct from this document, but the agreement allows for the "harvesting" of material from that text. Such harvesting will not be cited, as it is expected to be substantial. The reader of both documents will, therefore, notice deliberate similarities and identical passages. However, the textbook also includes extensive quotations, sidebars, anecdotes, cases, tangential elements, personal observations, exercises, and so forth that will not be found in this document. In general, this document is briefer, drier, and written with a normative should/shall/may/must framing (see IETF RFC 2119, [40]). Eventually, the textbook may be the basis for a "Guide", supporting this document in the same way that (for example) the IT4IT Management Guide [12] supports the IT4IT Standard. See definitions of Standard versus Guide in The Open Group Standards Process [280].

Curation Approach

Relationship of this Document to Other Bodies of Knowledge

This document may source knowledge from other bodies of knowledge. One of the reasons for the existence of this document is that a constellation of new best practices and approaches based on cloud, Agile, Lean, and DevOps is overtaking older approaches based on physical infrastructure, project management, process management, and functional specialization. The Phoenix Project [165] is a useful introduction to the new approaches; evidence of their effectiveness can be seen in the publicly available videos of practitioner case studies presented at the DevOps Enterprise Summit.

Interpretive Aspects

This document should not merely be an assemblage of other sources, however. It may include well-grounded synthesis and interpretation of the curated source material. See the DPBoK principles for further information.

Evidence of Notability

In the current fast-paced digital economy, curating notable and rigorous work by individuals on a fair-use basis into the standard seems advisable.

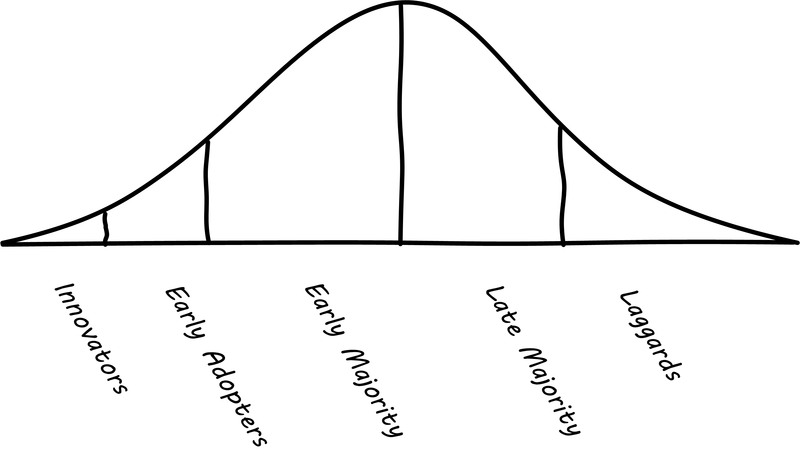

This will require an ongoing discussion and debate as to relevance and notability of the material. DevOps, design thinking, Agile methods, Site Reliability Engineering (SRE), and many other concepts have emerged of late. How do we know that they are notable and relevant? That they have staying power and merit inclusion? A proposed set of heuristics follows:

- Existence of an organized community – is there evidence for a concept’s interest in terms of practitioners self-identifying under its banner and choosing to spend their resources attending local, national, or international events?

- Notable publications – are books in print on the topic from reputable publishers; e.g., O’Reilly or Addison-Wesley? Are these books garnering reviews on Amazon or Goodreads?

- Media and analyst coverage – there is an active community of professional commentary and analysis; its attention to a given topic is also evidence of notability – social media attention is an important, but not conclusive, subset of this class of evidence (it can be too easily manipulated)

The use of a given body of knowledge or other guidance as broadly used audit criteria (e.g., cloud provider compliance) shall be construed as evidence of notability.

Trademarks

ArchiMate®, DirecNet®, Making Standards Work®, Open O® logo, Open O and Check® Certification logo, OpenPegasus®, Platform 3.0®, The Open Group®, TOGAF®, UNIX®, UNIXWARE®, and the Open Brand X® logo are registered trademarks and Boundaryless Information Flow™, Build with Integrity Buy with Confidence™, Dependability Through Assuredness™, Digital Practitioner Body of Knowledge™, DPBoK™, EMMM™, FACE™, the FACE™ logo, IT4IT™, the IT4IT™ logo, O-DEF™, O-HERA™, O-PAS™, Open FAIR™, Open Platform 3.0™, Open Process Automation™, Open Subsurface Data Universe™, Open Trusted Technology Provider™, O-SDU™, Sensor Integration Simplified™, SOSA™, and the SOSA™ logo are trademarks of The Open Group.

Airbnb™ is a trademark of Airbnb, Inc.

Amazon Web Services® is a registered trademark and Amazon™, AWS™, and Kindle™ are trademarks of Amazon.com, Inc. or its affiliates.

Android™ is a trademark of Google LLC.

Apache®, Apache Mesos®, and CouchDB® are registered trademarks of the Apache Software Foundation (ASF).

Apple®, iPhone®, and MacBook Air® are registered trademarks of Apple Inc.

BABOK® and Business Analysis Body of Knowledge® are registered trademarks owned by International Institute of Business Analysis.

CISSP® is a registered certification mark of the International Information Systems Security Certification Consortium, Inc.

COBIT® is a registered trademark of ISACA.

Debian® is a registered trademark owned by Software in the Public Interest, Inc.

DMBOK® is a registered trademark of DAMA International.

Etsy® is a registered trademark of Etsy, Inc., in the US and/or other countries.

Facebook® is a registered trademark of Facebook, Inc.

Flickr® and Yahoo® are registered trademarks of Yahoo, Inc.

Google® is a registered trademark and Google Compute Engine™ is a trademark of Google LLC.

IBM® is a registered trademark of International Business Machines Corporation in the United States, other countries, or both.

ITIL® and PRINCE2® are registered trademarks of AXELOS Limited.

LinkedIn® is a registered trademark of LinkedIn Corporation and its affiliates in the United States and/or other countries.

Linux® is a registered trademark of Linus Torvalds in the US and other countries.

Lyft™ is a trademark of Lyft, Inc.

Microsoft® is a registered trademark and Azure™, PowerPoint™, and Visio™ are trademarks of Microsoft Corporation in the United States and/or other countries.

Netflix® is a registered trademark of Netflix, Inc.

NGINX™ is a trademark of NGINX, Inc.

OmniGraffle™ is a trademark of The Omni Group.

Oracle® and Java® are registered trademarks and JavaScript™ is a trademark of Oracle and/or its affiliates.

PMBOK®, Project Management Body of Knowledge®, and Project Management Institute® are registered trademarks of the Project Management Institute, Inc.

RabbitMQ® is a registered trademark of Pivotal Software, Inc. in the US and other countries.

SABSA® is a registered trademark of The SABSA Institute.

Scaled Agile Framework® and SAFe® are registered trademarks of Scaled Agile, Inc.

Spotify™ is a trademark of Spotify AB.

UML® is a registered trademark and BPMN™, Business Process Modeling Notation™, and Unified Modeling Language™ are trademarks of Object Management Group, Inc. in the United States and/or other countries.

Zachman® is a registered trademark of Zachman International, Inc.

All other brands, company, and product names are used for identification purposes only and may be trademarks that are the sole property of their respective owners.

Acknowledgments

The Open Group gratefully acknowledges the contribution of the following people in the development of this document:

- Charles Betz

- Georg Bock

- James Doss

- Michael Fulton

- Jim Hietala

- Mohan Hiremath

- Dave Hornford

- Frédéric Le

- Antoine Lonjon

- Dave Lounsbury

- Robert Martin

- Sriram Sabesan

- Mark Smalley

Many assisted with and/or contributed to this work before its transition to The Open Group:

- Glen Alleman

- Brad Appleton

- David Bahn

- Jason Baker

- Richard Barton

- Jabe Bloom

- Terry Brown

- Kate Campise

- Murray Cantor

- Rob England

- Nicole Forsgren

- Stephen Fralippolippi

- Svetlana Gluhova

- Will Goddard

- Lorin Hochstein

- Jez Humble

- Majid Iqbal

- Mark Kennaley

- Firasat Khan

- Gene Kim

- Dmitry and Alina Kirsanov

- Mary Lebens

- Evan Leybourn

- Tom Limoncelli

- Chris Little

- Mary Mosman

- Mark Mzyk

- Sriram Narayam

- Amos Olagunju

- Justin Opatrny

- Pat Paulson and his students

- Francisco Piniero

- Ben Rockwood

- Mark Smalley

- John Spangler

- Grant Spencer

- Jeff Sussna

- Halbana Tarmizi

- Roger K. Williams

Referenced Documents

Normative References

This document does not contain any normative references at the time of publication. These may be added in a future release.

Informative References

-

D. Meadows, Thinking in Systems: A Primer. White River Junction, VT: Chelsea Green Publishing Company, 2008.

-

S. Landis, Agile Hiring. Walnut Creek, California: Artima, Inc, 2011.

-

K. Lane, “The Secret to Amazon’s Success ‒ Internal APIs,” The API Evangelist. 2012.

-

M. L. Abbott and M. T. Fisher, The Art of Scalability: Scalable Web Architecture, Processes, and Organizations for the Modern Enterprise (2nd Edition). Old Tappan, NJ: Pearson Education, Inc., 2015.

-

D. M. Abrashoff, It’s Your Ship: Management Techniques from the Best Damn Ship in the Navy, 10th anniversary ed. Grand Central Publishing, 2012.

-

G. Adzic, Impact Mapping: Making a big impact with software products and projects. Gojko Adzic, 2012.

-

Agile Alliance, “Agile Manifesto and Principles,” no. 4/13/2011. 2001.

-

A. Akera, “Edmund Berkeley and the Origins of the ACM,” Communications of the ACM, vol. 50, no. 5, pp. 31–35, 2007.

-

R. Akershoek, “IT4IT™ for Managing the Business of IT.” 2016.

-

J. Allspaw and P. Hammond, “10 deploys per day: Dev & ops cooperation at Flickr,” Velocity 2009. O’Reilly Publications, San Jose, CA, 2009.

-

J. Allspaw and J. Robbins, Web operations, 1st ed. Beijing China ; Sebastopol, CA: O’Reilly, 2010, pp. xvii, 315 p.

-

A. C. Alonzo and A. W. Gotwals, Learning Progressions in Science. Rotterdam: Sense Publishers, 2012.

-

S. Ambler, “Agile Outsourcing,” Dr. Dobb’s Journal, Mar. 2005.

-

S. Ambler, “Agility@Scale: Strategies for Scaling Agile Software Development.” 2015.

-

S. W. Ambler and M. Lines, Disciplined Agile Delivery: A Practitioner’s Guide to Agile Software Delivery in the Enterprise. 2012, pp. 1–2.

-

S. W. Ambler and P. J. Sadalage, Refactoring databases : evolutionary database design. Harlow, U.K.: Addison-Wesley, 2006, pp. xxvii, 350 p.

-

D. J. Anderson, Kanban: Successful Evolutionary Change for your Technology Business. Sequim, WA: Blue Hole Press, 2010.

-

T. Arbogast, B. Vodde, and C. Larman, “Agile Contracts Primer.” 2012.

-

J. Arnold, “Tilt the playing field: discover, nurture, and speed up the delivery of value.” Liberio, 2013.

-

C. Bank and J. Cao, The Guide to Usability Testing. uxpin.com, 2016.

-

K. Beck, extreme programming eXplained : embrace change. Reading, MA: Addison-Wesley, 2000, pp. xxi, 190 p.

-

S. Beer, “What is cybernetics?,” Kybernetes, vol. 31, no. 2, pp. 209–219, 2002.

-

S. Bell et al., Run grow transform : integrating business and lean IT. Boca Raton, FL: CRC Press, 2013, pp. xlii, 336 p.

-

S. C. Bell and M. A. Orzen, Lean IT: Enabling and Sustaining Your Lean Transformation. Boca Raton, Florida: CRC Press, 2010.

-

S. Bente, U. Bombosch, and S. Langade, Collaborative Enterprise Architecture: Enriching EA with Lean, Agile, and Enterprise 2.0 Practices. Waltham, MA: Morgan Kaufmann - Elsevier, 2012.

-

S. Bernard, An Introduction to Enterprise Architecture. AuthorHouse, 2012.

-

C. Betz, “Release management integration pattern - seeking devops comments,” Lean4IT: The architecture of IT value, vol. 2014. 2011.

-

C. Betz, “The CMDB is not a data warehouse,” Integrated IT Management. Enterprise Management Associates, 2011.

-

C. T. Betz, Architecture and Patterns for IT: Service and Portfolio Management and Governance (Making Shoes for the Cobbler’s Children), 2nd Edition. Amsterdam: Elsevier/Morgan Kaufmann, 2011.

-

C. T. Betz, “A DevOps Causal Loop Diagram parts 1 and 2,” Lean4IT: The architecture of IT value. 2013.

-

B. Beyer, C. Jones, J. Petoff, and N. R. Murphy, Site Reliability Engineering: How Google Runs Production Systems. Sebastopol, CA: O’Reilly Media, Inc., 2016.

-

J. Bezos, “Jeff Bezos’ 2016 Letter to Amazon Shareholders.” 2016.

-

S. Blank, The Four Steps to the Epiphany: Successful Strategies for Products That Win, 2nd ed. Steve Blank, 2013.

-

J. Bloomberg, “Agile Enterprise Architecture Finally Crosses the Chasm,” Forbes. Jul-2014.

-

B. Boehm, “A Spiral Model of Software Development and Enhancement,” IEEE Computer, vol. 21, no. 5, pp. 61–72, 1988.

-

L. Bossavit, “The Leprechauns of Software Engineering: How folklore turns into fact and what to do about it.” 2015.

-

D. Breston, “DevOps and SIAM: The Happy Nexus,” ITSMTools.com blog. 2017.

-

F. P. Brooks, The mythical man-month : essays on software engineering. Reading, Mass.: Addison-Wesley Pub. Co., 1975, pp. xi, 195 p.

-

F. P. Brooks, The mythical man-month : essays on software engineering, Anniversary ed. Reading, Mass.: Addison-Wesley Pub. Co., 1995, pp. xiii, 322 p.

-

A. Brown, N. Forsgren, J. Humble, N. Kersten, and G. Kim, “2016 State of DevOps report,” Puppet Labs, 2016.

-

J. Brustein, “Microsoft Kills Its Hated Stack Rankings. Does Anyone Do Employee Reviews Right?,” Bloomberg Business Week. 2013.

-

M. Buckingham and A. Goodall, “Reinventing performance management,” Harvard Business Review, vol. 93, no. 4, pp. 40–50, 2015.

-

M. Burgess, “When and where order matters,” homepage mark burgess.

-

M. Burrows, Kanban from the Inside: Understand the Kanban Method, connect it to what you already know, introduce it with impact (Kindle ed.). Sequim, Washington: Blue Hole Press, 2015.

-

M. G. I. Burrows, “The Chubby lock service for loosely-coupled distributed systems,” in 7th Symposium on Operating Systems Design and Implementation (OSDI ’06), 2006, pp. 335–350.

-

Business Architecture Guild, A Guide to the Business Architecture Body of Knowledge (BIZBOK Guide). Business Architecture Guild, 2016.

-

B. Butler, “Free cloud storage service MegaCloud goes dark,” Network World. 2013.

-

B. Butler, “Cloud’s worst-case scenario: What to do if your provider goes belly up,” Network World. 2014.

-

M. Cagan, Inspired: How to Create Products Customers Love. SVPG Press, 2008.

-

S. K. Card, J. D. Mackinlay, and B. Shneiderman, Readings in Information Visualization: Using Vision to Think. San Diego: Academic Press, 1999.

-

N. Carr, “IT Doesn’t Matter,” Harvard Business Review, pp. 5–12, 2003.

-

K. Cherry, “Multitasking: Bad for Your Productivity and Brain Health,” verywell.com. 2016.

-

M. Cherubini, G. Venolia, R. Deline, and A. J. Ko, “Let’s Go to the Whiteboard: How and Why Software Developers Use Drawings,” CHI 2007 Proceedings, pp. 557–566, 2007.

-

J. Choi, “The Science Behind Why Jeff Bezos’s Two-Pizza Team Rule Works.” Jan-2014.

-

C. Christensen, S. Cook, and T. Hall, “What Customers Want from Your Products,” Working Knowledge (Harvard Business School). 2006.

-

Clayton Christensen Institute, “Jobs to be Done,” http://www.christenseninstitute.org/. 2015.

-

Cloud Native Computing Foundation, “CNCF Cloud Native Definition v1.0,” CNCF TOC repository. 2018.

-

R. Coase, “The nature of the firm,” Economica, vol. 4, pp. 386–405, 1937.

-

A. Cockburn, “Walking skeleton,” http://alistair.cockburn.us/. 1996.

-

A. Cockburn, Agile Software Development: The Cooperative Game, 2nd ed. Boston, MA: Pearson Education, Inc., 2007.

-

M. Cohn, “Agile estimating and planning,” in VTT Symposium (Valtion Teknillinen Tutkimuskeskus), 2006, no. 241, pp. 37–39.

-

M. Cohn, Succeeding with Agile: Software Development Using Scrum. Upper Saddle River, New Jersey: Addison-Wesley, 2010.

-

S. Comella-Dorda, L. Santiago, and G. Speksnijder, “An operating model for company-wide agile development,” McKinsey & Company. 2016.

-

Committee on the Financial Aspects of Corporate Governance, “Report of the Committee on the Financial Aspects of Corporate Governance (aka Cadbury Report),” Gee and Co, Ltd., London, 1992.

-

Computer History Museum, “Memory & Storage,” Timeline of Computer History. 2019.

-

Wikipedia contributors, “Technology Business Management Council,” Wikipedia, The Free Encyclopedia. 2019.

-

A. Cooper, R. Reimann, and D. Cronin, “About Face 3: The Essentials of Interaction Design.” 2009.

-

Cornell University, “Explaining Why the Millennium Bridge Wobbled,” Science Daily. 2005.

-

COSO Commission, “Internal Control — Integrated Framework (2013).” 2013.

-

M. Csikszentmihalyi, Flow : the psychology of optimal experience, 1st ed. New York: Harper & Row, 1990, pp. xii, 303 p.

-

W. Cunningham, “Experience Report: The WyCash Portfolio Management System,” OOPSLA ’92, vol. 4, no. 2. pp. 29–30, Mar-1992.

-

W. Cunningham, “Do The Simplest Thing That Could Possibly Work,” wiki.c2.com. 2014.

-

The Data Management Association, The DAMA Guide to The Data Management Body of Knowledge (DAMA-DMBOK Guide). Bradley Beach, NJ: Technics Publications, LLC, 2009.

-

D. Hornford, S. Sabesan, V. Sriram, and K. Street, “The Seven Levers of Digital Transformation,” The Open Group, 2017.

-

A. De Nicola and M. Missikoff, “A Lightweight Methodology for Rapid Ontology Engineering,” Communications of the ACM, vol. 59, no. 3, pp. 79–86, 2016.

-

S. Dekker, The Field Guide to Understanding ’Human Error.’ Burlington, VT: Ashgate Publishing Company, 2006.

-

J. DeLuccia, IT COMPLIANCE AND CONTROLS: Best Practices for Implementation. Hoboken, N.J.: John Wiley & Sons, Inc., 2008.

-

J. DeLuccia, J. Gallimore, G. Kim, and B. Miller, “DevOps Audit Defense Toolkit,” IT Revolution, 2015.

-

DHS, “Report No. 2006-03, The Use of Commercial Data,” DHS Data Privacy and Integrity Advisory Committee, 2006.

-

A. E. Ditri, J. C. Shaw, and W. Atkins, Managing the EDP function. N.Y.: McGraw-Hill, 1971.

-

D. Drogseth, “The Enterprise Service Catalog - Unifying IT Services for the Digital Age,” APM Digest. 2016.

-

P. F. Drucker, Post-capitalist society, 1st ed. New York, NY: HarperBusiness, 1993, pp. 232 p.

-

D. du Preez, “A CIO’s worst nightmare: When your cloud provider goes bankrupt,” diginomica. 2015.

-

R. Dunbar, How Many Friends Does One Person Need? Dunbar’s Number and Other Evolutionary Quirks. Harvard University Press, 2010.

-

P. M. Duvall, S. Matyas, and A. Glover, Continuous integration : improving software quality and reducing risk. Upper Saddle River, NJ: Addison-Wesley, 2007, pp. xxxiii, 283 p.

-

K. M. Eisenhardt, “Agency Theory: An Assessment and Review,” The Academy of Management Review, vol. 14, no. 1, pp. 57–74, 1989.

-

R. England, Plus! The Standard+Case Approach: See Service Response in a New Light. Mana, New Zealand: Two Hills Ltd., 2013.

-

R. England, “Service catalogue and request catalogue,” IT Skeptic Blog. 2016.

-

E. Evans, Domain-driven design : tackling complexity in the heart of software. Boston ; London: Addison-Wesley, 2004, pp. xxx, 528 p.

-

R. P. Feynman, “Cargo Cult Science,” Engineering and Science, vol. 33, pp. 10–13, 1974.

-

N. Forsgren, “Continuous Delivery + DevOps = Awesome.” 2016.

-

N. Forsgren, J. Humble, and G. Kim, Accelerate: Building and Scaling High Performing Technology Organizations. Portland, OR: IT Revolution Press, 2018.

-

N. Forsgren, G. Kim, N. Kersten, and J. Humble, “2014 State of DevOps Report,” Puppet Labs, 2014.

-

M. Fowler, Patterns of enterprise application architecture. Boston: Addison-Wesley, 2003, pp. xxiv, 533.

-

A. Fox and E. A. Brewer, “Harvest, Yield and Scalable Tolerant Systems,” 7th Workshop Hot Topics in Operating Systems (HotOS 99). IEEE CS, 1999.

-

J. Gall, The Systems Bible: The beginner’s guide to systems large and small. General Systemantics Pr/Liberty, 2012.

-

E. Gamma, R. Helm, R. Johnson, and J. Vlissides, Design patterns : elements of reusable object-oriented software. Reading, Mass.: Addison-Wesley, 1995, pp. xv, 395.

-

A. Gawande, The Checklist Manifesto. New York, N.Y: Picador, 2010.

-

R. Gillett, “Productivity Hack Of The Week: The Two Pizza Approach To Productive Teamwork,” fastcompany.com. 2014.

-

R. L. Glass, Software runaways. Upper Saddle River, NJ: Prentice Hall PTR, 1998, pp. xvi, 259.

-

E. M. Goldratt, Critical chain. Great Barrington, Ma.: North River, 1997, pp. 246 p.

-

B. Goodwin, “How CIOs can raise their ’IT clock speed’ as pressure to innovate grows,” ComputerWeekly.com. 2015.

-

J. Gothelf and J. S. Seiden, Lean UX: Applying Lean Principles to Improve User Experience. Sebastopol, CA: O’Reilly Media, Inc., 2013.

-

Great Schools Partnership, “Learning Progression,” Glossary of Education Reform. 2013.

-

M. Griffin, How To Write a Policy Manual. www.templatezone.com, 2016.

-

T. Griffin, “Two Powerful Mental Models: Network Effects and Critical Mass,” Andreessen Horowitz.

-

G. Gruver, M. Young, and P. Fulghum, A Practical Approach to Large-Scale Agile Development: How HP Transformed Laserjet Futuresmart Firmware. Upper Saddle River, N.J.: Pearson Education, Inc., 2013, pp. xxiv, 183 pages.

-

E. Hallikainen, “Service Catalog and Configuration Management Database as the Foundation of SIAM,” PhD thesis, 2015.

-

P. Hammant, “Legacy Application Strangulation : Case Studies,” Paul Hammant’s Blog. 2013.

-

M. Hammer and J. Champy, Reengineering the corporation. London: Nicholas Brealey, 1993, pp. vi,223p.

-

V. Harnish, Scaling Up: How a Few Companies Make It…and Why the Rest Don’t. Gazelles, Inc., 2014.

-

P. Harpring, Introduction to Controlled Vocabularies: Terminology for Art, Architecture and other Cultural Works. Los Angeles, CA: Getty Publications, 2010.

-

S. Harris, CISSP Exam Guide, 6th ed. New York: McGraw-Hill Education, 2013.

-

L. Hassi and M. Laakso, “Design thinking in the management discourse: Defining the elements of the concept,” in 18th international product development conference, Delft, 2011.

-

R. Hastings, “Netflix Culture: Freedom & Responsibility,” Slideshare.net. 2009.

-

D. C. Hay, Data model patterns : conventions of thought. New York: Dorset House ; Chichester : Wiley [distributor], 1996, pp. xix,268p.

-

M. Heller, “GE’s Jim Fowler on the CIO role in the digital industrial economy,” CIO Magazine Online. 2016.

-

G. Hohpe and B. Woolf, Enterprise integration patterns : designing, building, and deploying messaging solutions. Boston ; London: Addison-Wesley, 2003.

-

J. H. Holland, “Studying Complex Adaptive Systems,” Journal of Systems Science and Complexity, vol. 19, no. 1, pp. 1–8, Mar. 2006.

-

J. Hope and R. Fraser, “Beyond Budgeting Questions and Answers,” Beyond Budgeting Round Table, 2001.

-

M. Housman and D. Minor, “Toxic Workers,” Harvard Business School, 2015.

-

D. W. Hubbard, The Failure of Risk Management. Hoboken, New Jersey: John Wiley & Sons, Inc., 2009.

-

D. W. Hubbard, How to measure anything : finding the value of "intangibles" in business, 2nd ed. Hoboken, N.J.: Wiley, 2010, pp. xv, 304 p.

-

J. Humble, “The Flaw at the Heart of Bimodal IT,” Continuousdelivery.com. 2016.

-

J. Humble and J. Molesky, “Why Enterprises Must Adopt Devops to Enable Continuous Delivery,” Cutter IT Journal, vol. 24, no. 8, 2011.

-

J. Humble, J. Molesky, and B. O’Reilly, Lean enterprise, First edit. 2013, pp. xxi, 317 pages.

-

J. Humble, J. Molesky, and B. O’Reilly, Lean Enterprise: Adopting Continuous Delivery, DevOps, and Lean Startup at Scale. 2014.

-

J. R. Huntzinger, Lean Cost Management: Accounting for Lean by Establishing Flow. Fort Lauderdale, Fl.: J. Ross Publishing, 2007.

-

IEEE, “Software Engineering Body of Knowledge, version 3,” IEEE, 2014.

-

International Auditing and Assurance Standards Board (IAASB), “ISAE 3000 (Revised), Assurance Engagements Other than Audits or Reviews of Historical Financial Information,” International Federation of Accountants, 2013.

-

International Institute of Business Analysis (IIBA), BABOK v3: A Guide to the Business Analysis Body of Knowledge. Toronto, Canada: International Institute of Business Analysis, 2015.

-

E. Isaacs and A. Walendowski, Designing from both sides of the screen: How Designers and Engineers Can Collaborate to Build Cooperative Technology. Indianapolis, Indiana: New Riders Publishing, 2002.

-

ISACA, COBIT 5 for Information Security. Rolling Meadows, IL: ISACA, 2012.

-

ISACA, COBIT 5 for Assurance. Rolling Meadows, IL: ISACA, 2013.

-

ISACA, ITAF: A Professional Practices Framework for IS Audit/ Assurance, 3rd Edition. Rolling Meadows, IL: ISACA, 2014.

-

ISACA, “COBIT 2019 Framework: Introduction and Methodology,” ISACA, Schaumburg, IL, 2018.

-

ISACA, “IT Control Objectives for Sarbanes-Oxley Using COBIT 5, 3rd Edition,” ISACA, 2019.

-

ISO/IEC, “ISO/IEC 7498-1: Open Systems Interconnection – Basic Reference Model: The Basic Model,” International Organization for Standardization, 1994.

-

ISO/IEC, “ISO/IEC 38500 - Corporate governance of information technology.” 2008.

-

ISO/IEC/IEEE, “ISO/IEC/IEEE 42010:2011 - Systems and software engineering – Architecture description,” March, 2011.

-

IT Governance Institute, “IT Controls Objectives for Sarbanes-Oxley,” IT Governance Institute, Rolling Meadows, IL, 2006.

-

F. Ivancsich, R. Kruse, and D. Sharrock, “Why ‘Real Options’ is the biggest fail of the Agile Community so far,” www.agile42.com. 2013.

-

S. H. Kan, Metrics and models in software quality engineering. Reading, Mass.: Addison-Wesley, 1995, pp. xvii, 344.

-

C. Kaner, J. L. Falk, and H. Q. Nguyen, Testing computer software, 2nd ed. New York: Wiley, 1999, pp. xv, 480.

-

R. M. Kanter, The change masters : innovations for productivity in the American corporation. New York: Simon and Schuster, 1983, pp. 432 p.

-

R. S. Kaplan and D. P. Norton, “The balanced scorecard - measures that drive performance,” Harvard Business Review, no. January-February, pp. 71–79, 1992.

-

M. Kennaley, Sdlc 3.0: Beyond a Tacit Understanding of Agile: Towards the Next Generation of Software Engineering. Fourth Medium Consulting, 2010.

-

G. Kim, K. Behr, and G. Spafford, The Phoenix Project: A Novel About IT, DevOps, and Helping Your Business Win. IT Revolution Press, 2013.

-

G. Kim, J. Humble, P. Debois, and J. Willis, The DevOps Handbook. Portland, OR: IT Revolution Press, 2016.

-

G. Klein, P. J. Feltovich, and D. D. Woods, “Common Ground and Coordination in Joint Activity,” in Organizational simulation, Hoboken, New Jersey: John Wiley & Sons, Inc., 2005.

-

M. Knez and D. Simester, “Making Across-the-Board Incentives Work,” Harvard Business Review, no. Feb 2002, 2002.

-

H. Kniberg and A. Ivarsson, “Scaling Agile @ Spotify with Tribes, Squads, Chapters & Guilds,” Jan. 2012.

-

R. Kohavi, T. Crook, and R. Longbotham, “Online Experimentation At Microsoft.” 2009.

-

B. Kos, “Kanban – Visualize your workflow,” agileleanlife.com. 2016.

-

C. Ladas, Scrumban. Modus Cooperandi Press (January 12, 2009), 2009.

-

D. Laney, “3D Data Management: Controlling Data Volume, Velocity, and Variety,” Meta Group (now Gartner), 2001.

-

C. Larman and V. R. Basili, “Iterative and incremental development: A brief history,” Computer, vol. 36, no. 6. pp. 47–56, 2003.

-

C. Larman and B. Vodde, Scaling lean & agile development : thinking and organizational tools for large-scale Scrum. Upper Saddle River, NJ: Addison-Wesley, 2009, pp. xiv, 348 p.

-

G. Lawton, “Forging an IT service catalog management plan to drive business goals.” 2018.

-

D. Leffingwell, A. Yakyma, D. Jemilo, R. Knaster, A. Shalloway, and I. Oren, “Scaled Agile Framework,” no. 10 October 2015. 2014.

-

T. A. Limoncelli, S. R. Chalup, and C. J. Hogan, The Practice of Cloud System Administration: Designing and Operating Large Distributed Systems, Volume 2, vol. 2. Addison-Wesley Professional, 2014.

-

G. Linden, “Early Amazon: Shopping cart recommendations,” Geeking with Greg. 2006.

-

S. Lins, P. Grochol, S. Schneider, and A. Sunyaev, “Dynamic Certification of Cloud Services: Trust, but Verify!,” IEEE Security & Privacy, vol. 14, no. 2, pp. 66–71, Mar. 2016.

-

J. Loeliger, Version control with Git, 1st ed. Beijing ; Sebastopol, CA: O’Reilly, 2009, pp. xv, 310 p.

-

J. Loftus, “Open source IP case puts spotlight on patents,” techtarget.com. 2006.

-

R. J. Madachy, Software process dynamics. Hoboken, Piscataway, NJ: Wiley IEEE Press, 2008, pp. xxiii, 601 p.

-

A. A. Maestro, “Turn IT into a Service Catalog,” DevOps.com blog. 2015.

-

B. Maizlish and R. Handler, IT Portfolio Management Step-By-Step: Unlocking the Business Value of Technology. Hoboken, New Jersey: John Wiley & Sons, 2005.

-

R. Malan and D. Bredemeyer, “The Art of Change: Fractal and Emergent,” Cutter Consortium Enterprise Architecture Advisory Service Executive Report, vol. 13, no. 5, 2010.

-

T. W. Malone and K. Crowston, “The Interdisciplinary Study of Coordination,” ACM Computing Surveys, vol. 26, no. 1, 1994.

-

H. Marks, “Code Spaces: A Lesson In Cloud Backup,” Network Computing. 2014.

-

D. L. Marquet, Turn the Ship Around!: A True Story of Turning Followers into Leaders. Portfolio, 2013.

-

C. Matts and O. Maassen, “‘Real Options’ Underlie Agile Practices,” InfoQ. 2007.

-

J. McAdam, “Information Technology Measurements,” in Chargeback and IT Cost Accounting, T. A. Quinlan, Ed. Santa Barbara, CA: IT Financial Management Association, 2003, pp. 90–91.

-

S. McChrystal, T. Collins, D. Silverman, and C. Fussell, Team of Teams: New Rules of Engagement for a Complex World. New York, New York: Portfolio/Penguin (Random House), 2015.

-

D. McCrory, “Data Gravity – in the Clouds,” McCrory’s Blog. 2010.

-

P. Mell and T. Grance, “The NIST Definition of Cloud Computing (Technical report), Special publication 800-145,” National Institute of Standards and Technology: U.S. Department of Commerce., 2011.

-

N. D. Meyer, Internal Market Economics: practical resource-governance processes based on principles we all believe in. Danbury, CT: NDMA Publishing, 2013.

-

C. Millard, Ed., Cloud Computing Law. Oxford, UK: Oxford University Press, 2013.

-

C. Millotat, “Understanding the Prussian-German General Staff System,” Strategic Studies Institute (US Military), Carlisle Barracks, PA, 1992.

-

R. R. Moeller, Executive’s Guide to IT Governance: Improving Systems Processes with Service Management, COBIT, and ITIL. Hoboken, New Jersey: John Wiley & Sons, Inc., 2013.

-

D. Moody, “The ‘Physics’ of Notations: Towards a Scientific Basis for Constructing Visual Notations in Software Engineering,” IEEE Transactions on Software Engineering, vol. 35, no. 5, pp. 756–778, 2009.

-

J. M. Morgan and J. K. Liker, Designing the future: how Ford, Toyota, and other world-class organizations use lean product development to drive innovation and transform their business. McGraw-Hill Education.

-

J. M. Morgan and J. K. Liker, The Toyota product development system: Integrating People, Process, and Technology. Productivity Press, 2006.

-

J. P. Morgenthal, “A Reality Check on ‘Everyone’s Moving Everything To The Cloud’,” The Tech Evangelist. 2016.

-

K. Morris, Infrastructure as Code: Managing Servers in the Cloud. Sebastopol, CA: O’Reilly Media, Inc., 2016.

-

C. Moskowitz, “Mind’s Limit Found: 4 Things at Once,” http://www.livescience.com. 2008.

-

S. Narayan, “Scaling Agile: Problems and Solutions,” Thoughtworks Blogs. 2015.

-

S. Narayan, Agile IT organization design: for digital transformation and continuous delivery. Pearson Education Inc., 2015.

-

S. Newman, Building microservices : designing fine-grained systems. Sebastopol, CA: O’Reilly Media, Inc., 2015.

-

NIST, “NIST SP 800-145, The NIST Definition of Cloud Computing,” 2011.

-

R. L. Nolan, Managing the data resource function, 1st ed. West, 1974, 394 p.

-

Office of Government Commerce, Service strategy. Norwich: The Stationery Office, 2007, pp. xi, 261 p.

-

T. Ohno, Toyota production system : beyond large-scale production. Cambridge, Mass.: Productivity Press, 1988.

-

E. Olson, “Microsoft, GE, and the futility of ranking employees,” Fortune, no. November 18, 2013, 2013.

-

A. Opelt, B. Gloger, W. Pfarl, and R. Mittermayr, Agile contracts : creating and managing successful projects with Scrum. Hoboken, N.J.: John Wiley & Sons Inc., 2013, pp. xiv, 282 pages.

-

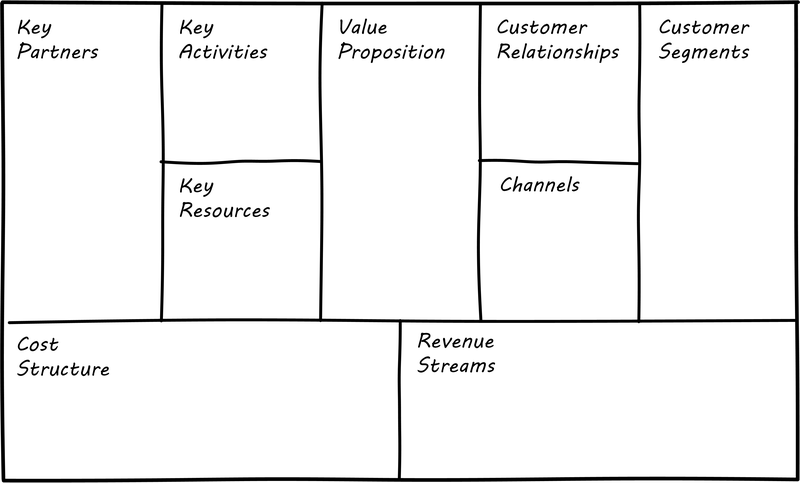

A. Osterwalder and Y. Pigneur, “Business Model Generation,” Wiley, p. 280, 2010.

-

A. Osterwalder, Y. Pigneur, G. Bernarda, and A. Smith, Value Proposition Design. Hoboken, N.J.: John Wiley & Sons, Inc., 2014.

-

J. Patton, User Story Mapping. Discover the Whole Story, Build the Right Product, First edit. 2014, p. 324.

-

R. Pichler, Agile Product Management with Scrum: Creating Products that Customers Love. Boston, MA: Addison-Wesley - Pearson Education, 2010, p. 160.

-

M. Poppendieck and T. D. Poppendieck, Lean Software Development: An Agile Toolkit. Boston: Addison Wesley, 2003.

-

M. Poppendieck and T. D. Poppendieck, Implementing lean software development : from concept to cash. London: Addison-Wesley, 2007, pp. xxv, 276 p.

-

S. Portny, Project Management for Dummies. Hoboken, New Jersey: John Wiley & Sons, 2013.

-

Project Management Institute, A guide to the project management body of knowledge (PMBOK guide), 3rd ed. Newtown Square, Pa.: Project Management Institute Inc., 2013, pp. xxi, 589 pages.

-

Puppet Labs, “2015 State of DevOps Report,” Puppet Labs, 2015.

-

T. A. Quinlan, Chargeback and IT Cost Accounting. Santa Barbara, CA: IT Financial Management Association, 2003.

-

B. Raczynski and B. Curtis, “Software Data Violate SPC’s Underlying Assumptions,” IEEE Software, vol. 25, no. 3, pp. 49–51, 2008.

-

Rational Software Corporation, “Rational Unified Process: Best Practices for Software Development Teams,” IBM, 2011.

-

D. J. Reifer, Making the software business case : improvement by the numbers. Boston: Addison Wesley, 2002, pp. xviii, 300.

-

D. G. Reinertsen, Managing the design factory: a product developer’s toolkit. New York ; London: Free Press, 1997, pp. xi,269p.

-

D. G. Reinertsen, The principles of product development flow: second generation lean product development. Redondo Beach, Calif.: Celeritas, 2009, pp. ix, 294 p.

-

G. L. Richardson, Project Management Theory and Practice. Boca Raton: Auerbach Publications, Taylor & Francis Group, 2010.

-

E. Ries, The lean startup : how today’s entrepreneurs use continuous innovation to create radically successful businesses, 1st ed. New York: Crown Business, 2011, pp. 320 p.

-

D. K. Rigby, J. Sutherland, and A. Noble, “Agile At Scale: How To Go From A Few Teams To Hundreds,” Harvard Business Review, 2018.

-

D. K. Rigby, J. Sutherland, and H. Takeuchi, “Embracing Agile,” Harvard Business Review, no. May, 2016.

-

D. Rock and H. Grant, “Why Diverse Teams Are Smarter,” Harvard Business Review. 2016.

-

E. Rogers, Diffusion of Innovations, 5th ed. New York, N.Y.: Free Press - Simon & Schuster, Inc., 2003.

-

J. W. Ross, P. Weill, and D. Robertson, Enterprise architecture as strategy : creating a foundation for business execution. Boston, Mass.: Harvard Business School Press, 2006, pp. xviii, 234 p.

-

M. Rother, Toyota kata : managing people for improvement, adaptiveness, and superior results. New York: McGraw Hill, 2010, pp. xx, 306 p.

-

M. Rother and J. Shook, “Learning to See: Value Stream Mapping to Add Value and Eliminate MUDA [Spiral-bound],” Lean Enterprise Institute. p. 102, 2003.

-

J. Rothman, “Not Ready for Agile? Start Your Journey with Release Trains,” Stickyminds.com. 2011.

-

W. Royce, “Managing the Development of Large Software Systems,” in Proc. IEEE WESCON, Los Angeles, 1970, pp. 1–9.

-

J. Rozovsky, “The five keys to a successful Google team,” re:Work, Mar. 2015.

-

K. S. Rubin, Essential Scrum : a practical guide to the most popular agile process. Upper Saddle River, NJ: Addison-Wesley, 2012, pp. xliii, 452 p.

-

G. A. Rummler and A. P. Brache, Improving performance: how to manage the white space on the organization chart, 2nd ed. San Francisco, CA: Jossey-Bass, 1995, pp. xxv, 226.

-

SAFe, “Agile Release Train – Scaled Agile Framework,” http://www.scaledagileframework.com/. 2016.

-

Scaled Agile Framework, “Guidance – Features and Components – Scaled Agile Framework.” 2016.

-

S. Schlarman, “Developing Effective Policy, Procedure, and Standards,” www.disaster-resource.com. 2008.

-

W. E. Schneider, The reengineering alternative : a plan for making your current culture work. McGraw-Hill, 1999, p. 173.

-

K. Schwaber, The Enterprise and Scrum. Redmond, Wash: Microsoft Press, 2007.

-

K. Schwaber and M. I. Beedle, Agile Software Development with Scrum. Upper Saddle River, N.J.: Prentice Hall, 2002.

-

C. Schwartz and J. Schauer, “The Dojo – Implementing an Immersive Learning Environment for Teams,” in Agile 2016, Atlanta, GA, 2016.

-

S. B. ; F. Sells Richard S. and S. B. ; F. Sells, “Evaluation of Research on Effects of Visual Training on Visual Functions,” Am J Ophthal, vol. 44, no. 2, pp. 230–236, Aug. 1957.

-

C. E. Shannon, “A symbolic analysis of relay and switching circuits,” Transactions of the American Institute of Electrical Engineers, vol. 57, no. 12, pp. 713–723, 1938.

-

C. E. Shannon and W. Weaver, The mathematical theory of communication. Urbana,: University of Illinois Press, 1949, pp. v (i.e. vii), 117 p.

-

A. Sharp and P. McDermott, Workflow modeling : tools for process improvement and applications development, 2nd ed. Boston: Artech House, 2009, pp. xx, 449 p.

-

E. Sigler, “So, What is ChatOps? And How do I Get Started?,” Pagerduty.Com. 2014.

-

L. Silverston and John Wiley & Sons., “The data model resource CD-ROM. Volume 1 a library of universal data models for all enterprises.” Wiley, New York, pp. 1 computer optical disc 4 3/4 in., 2001.

-

L. Silverston, The data model resource book Vol 1: A library of universal data models for all enterprises, Rev. ed. New York ; Chichester: Wiley, 2001, pp. 2 v.

-

L. Silverston, The data model resource book Vol 3: Universal patterns for data modeling. Indianapolis, Ind.: Wiley, 2008, pp. xxxii, 606 p.

-

C. J. Sims, Scrum: a Breathtakingly Brief and Agile Introduction. Dymaxicon, 2012.

-

R. Sirkiä and M. Laanti, “Lean and Agile Financial Planning,” via Scaled Agile Framework website, 2013.

-

M. Skelton and M. Pais, Team Topologies: Organizing Business and Technology Teams for Fast Flow. Portland, OR: IT Revolution Press, 2019.

-

P. G. Smith and D. G. Reinertsen, Developing products in half the time. New York, N.Y.: Van Nostrand Reinhold, 1991.

-

P. G. Smith and D. G. Reinertsen, Developing products in half the time : new rules, new tools, [New ed.]. New York ; London: Van Nostrand Reinhold, 1998, pp. xix, 298p.

-

O. Solon, “You are Facebook’s product, not customer,” Wired UK. 2011.

-

I. Sommerville, Software engineering, 6th ed. Harlow, England ; New York: Addison-Wesley, 2001, pp. xx, 693.

-

S. Watts, “Enterprise Service Management vs IT Service Management: What’s The Difference?,” BMC Blogs.

-

J. Sterman, Business dynamics : systems thinking and modeling for a complex world. Boston: Irwin/McGraw-Hill, 2000, pp. xxvi, 982 p.

-

D. E. Strode and S. L. Huff, “A Taxonomy of Dependencies in Agile Software Development,” in 23rd Australasian Conference on Information Systems, 2012.

-

D. E. Strode, S. L. Huff, B. Hope, and S. Link, “Coordination in co-located agile software development projects,” The Journal of Systems and Software, vol. 85, pp. 1222–1238, 2012.

-

B. Stroustrup, “Viewpoint: What should we teach new software developers? Why?,” Communications of the ACM, vol. 53, no. 1, p. 40, Jan. 2010.

-

J. Sussna, Designing Delivery: Rethinking IT in the Digital Service Economy. O’Reilly Publications, 2015.

-

J. V. Sutherland, Scrum : the art of doing twice the work in half the time, First Edit. Crown Business, 2014, pp. viii, 248 pages.

-

R. I. Sutton and H. Rao, Scaling up excellence : getting to more without settling for less. Crown Business/Random House, 2014.

-

A. Sweetser, “A Comparison of System Dynamics (SD) and Discrete Event Simulation (DES).”

-

The Joint Task Force on Computing Curricula IEEE Computer Society Association for Computing Machinery, “Software Engineering 2014: Curriculum Guidelines for Undergraduate Degree Programs in Software Engineering,” Association for Computing Machinery, February, 2015.

-

The National Court Rules Committee, “Federal Rules of Civil Procedure.” 2016.

-

The Open Group, “The Open Group IT4IT™ Reference Architecture, Version 2.1,” 2017.

-

The Open Group, “Standards Process - Definitions and Glossary.” 2018.

-

The Stationery Office, ITIL Service Design: 2011 Edition. Norwich, U.K.: The Stationery Office, 2011.

-

The Stationery Office, ITIL Service Strategy: 2011 Edition. Norwich, U.K: The Stationery Office, 2011.

-

The Stationery Office, ITIL Continual Service Improvement: 2011 Edition. Norwich, U.K.: The Stationery Office, 2011.

-

J. Tidwell, Designing Interfaces. Sebastopol, CA: O’Reilly Media, Inc., 2006.

-

H. Topi et al., “Revising the MSIS Curriculum: Specifying Graduate Competencies (Second Public Deliverable of the ACM/AIS MSIS 2016 Task Force),” Joint ACM/AIS MSIS 2016 Task Force, 2016.

-

D. Traynor, “Focus on the Job, Not the Customer,” Inside Intercom. 2016.

-

M. Treacy and F. Wiersema, The Discipline of Market Leaders: Choose Your Customers, Narrow Your Focus, Dominate Your Market. New York, N.Y.: Basic Books - Perseus Books Group, 1997.

-

E. R. Tufte, The Visual Display of Quantitative Information, vol. 4. 2001.

-

Uptime Institute, “Explaining the Uptime Institute’s Tier Classification System,” Uptime Institute Journal. 2014.

-

Uptime Institute, “Tier Certification Tiers is the Global Language of Data Center Performance Tier Certification is Worldwide Credibility.” 2016.

-

A. Venkatraman, “2e2 datacentre administrators hold customers’ data to £1m ransom,” ComputerWeekly.com. 2013.

-

D. Vergun, “Toxic leaders decrease Soldiers’ effectiveness, experts say,” www.army.mil. 2015.

-

J. von Neumann and H. H. Goldstine, “Planning and Coding of Problems for an Electronic Computing Instrument,” Institute for Advanced Study, Princeton N.J., 1947.

-

A. Ward and D. K. Sobek, Lean Product and Process Development, 2nd Ed. Lean Enterprise Institute, 2014, p. 349.

-

S. Wardley, “Designing for constant evolution,” Hacker Noon. 2017.

-

G. West, Scale: The Universal Laws of Life and Death in Organisms, Cities, and Companies. London: Weidenfeld & Nicolson: The Orion Publishing Group Ltd, 2017.

-

G. Westerman, D. Bonnet, and A. McAfee, “The Nine Elements of Digital Transformation,” MIT Sloan Management Review, vol. January, pp. 1–6, 2014.

-

WFMC, “Adaptive Case Management,” http://www.xpdl.org. pp. 1–23, 2010.

-

J. A. Whittaker, J. Arbon, and J. Carollo, How Google tests software. Upper Saddle River, NJ: Addison-Wesley, 2012, pp. xxvii, 281 p.

-

N. Wiener, “Cybernetics,” Scientific American, vol. 179, pp. 14–18, 1948.

-

Wikipedia Authors, “Multicloud,” Wikipedia, The Free Encyclopedia. 2019.

-

J. P. Womack and D. T. Jones, Lean thinking: banish waste and create wealth in your corporation, 1St Free P. New York: Free Press, 2003, pp. 396 p.

-

J. P. Womack, D. T. Jones, D. Roos, and Massachusetts Institute of Technology., The machine that changed the world : based on the Massachusetts Institute of Technology 5-million dollar 5-year study on the future of the automobile. New York: Rawson Associates, 1990, pp. viii, 323 p.

-

A. Woolley, T. W. Malone, and C. F. Chabris, “Why Some Teams Are Smarter Than Others,” New York Times, no. 12. Jan-2015.

-

S. Yegulalp, “Why GPL still gives enterprises the jitters,” Infoworld.com. 2014.

-

W. Young and N. G. Leveson, “An integrated approach to safety and security based on systems theory,” Communications of the ACM, vol. 57, no. 2, pp. 31–35, Feb. 2014.

-

E. Yourdon and L. L. Constantine, Structured design : fundamentals of a discipline of computer program and systems design. Englewood Cliffs, N.J.: Prentice Hall, 1979, pp. xix, 473.

-

J. Zachman, “Zachman Framework,” IBM Systems Journal, vol. 26, no. 3, pp. 276–292, 1987.

1. Introduction

1.1. Objective

This document is intended to assist individuals and organizations who wish to create and manage product offerings with an increasing digital component, or lead their organization through Digital Transformation. It is a synthesis of practices and guidance from a wide variety of practitioners and professional communities active in digital technology. It integrates concepts from diverse sources such as business model innovation, product research and monetization, behavioral economics, Agile, DevOps, Enterprise Architecture, organizational development, service management, product management, data management, operations management, and corporate governance. By providing an integrated and rationalized framework based on notable and proven practices and perspectives, this document is positioned as leading guidance for digital technology and management professionals worldwide.

1.2. Overview

This document describes the resources, services, and assets that may be involved in creating and delivering digital experiences. It provides guidance for the Digital Practitioner, whether based in a traditional "IT" organization, manufacturing unit, sales, customer support, or embedded in a cutting-edge integrated product team.

1.3. Conformance

Readers are advised to check The Open Group website for any conformance and certification requirements referencing this standard.

1.4. Terminology

For the purposes of this document, the following terminology definitions apply:

- Can

-

Describes a possible feature or behavior available to the user or application.

- May

-

Describes a feature or behavior that is optional. To avoid ambiguity, the opposite of “may” is expressed as “need not”, instead of “may not”.

- Shall

-

Describes a feature or behavior that is a requirement. To avoid ambiguity, do not use “must” as an alternative to “shall”.

- Shall not

-

Describes a feature or behavior that is an absolute prohibition.

- Should

-

Describes a feature or behavior that is recommended but not required.

- Will

-

Same meaning as “shall”; “shall” is the preferred term.

1.5. Future Directions

While digital is a fast-evolving field, the intent of this document is to identify the business and technical practices needed for a digital business, and to stay as independent of the implementation technology as possible. However, it is expected that this document will need to be revised from time to time to remain current with both practice and technology. To maintain the coherence of the document in the face of this evolution, a set of Principles of the DPBoK Standard has been established.

2. Definitions

For the purposes of this document, the following terms and definitions apply. Merriam-Webster’s Collegiate Dictionary should be referenced for terms not defined in this section.

- Body of Knowledge

-

A collection of knowledge items or areas generally agreed to be essential to understanding a particular subject. [Source: ISO/IEC 24773-1:2019]

- Digital Enterprise

-

An enterprise characterized by: 1. creation of digitalized products or services that are delivered fully digitally (e.g., digital media or online banking); or 2. physical products and services that the customer obtains by digital means (e.g., online car-sharing services).

- Digital Technology

-

IT in the form of a product or service that is digitally consumable to create or enable business value.

- Digital Transformation

-

The radical, fundamental change towards becoming a digital enterprise.

- Digitalization

-

The application of digital technology to create additional business value within the primary value chain of enterprises.

- Digitization

-

The conversion of analog information into digital form.

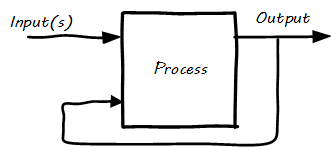

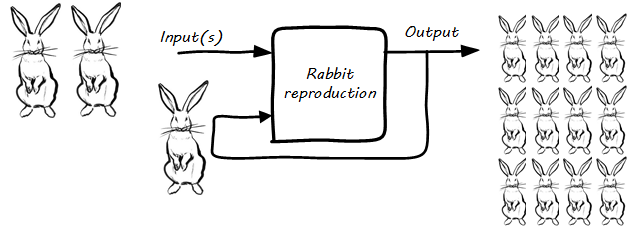

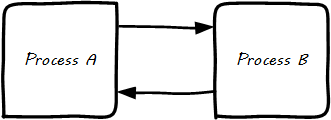

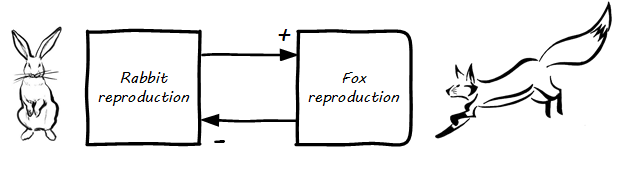

- Process

-

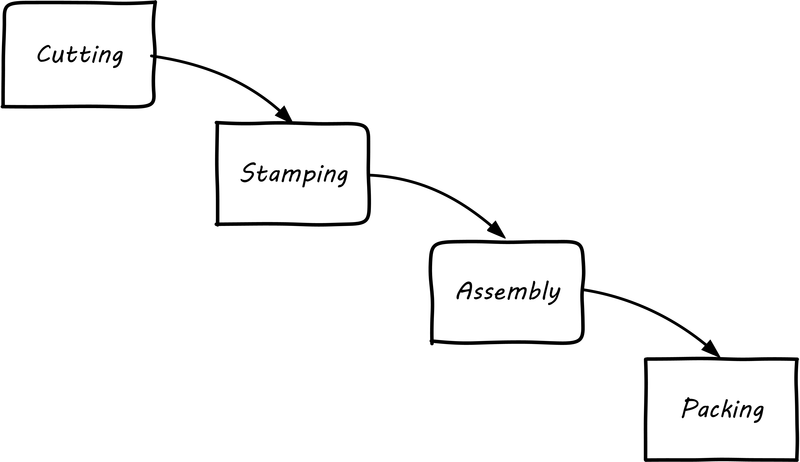

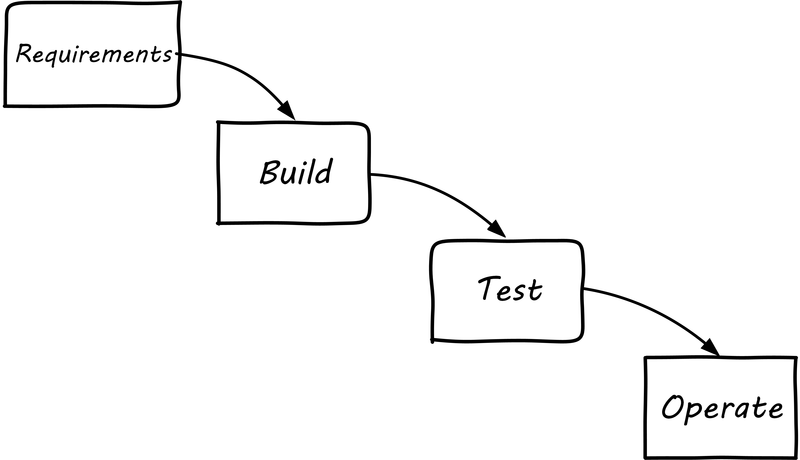

An ordered, countable set of activities; an event-driven, value-adding sequence that can be measured and improved.

3. Digital Transformation

This chapter describes Digital Transformation.

3.1. Example Scenario

Consider a scenario wherein an individual is looking to buy a prosthetic limb for her brother. Today and in the near future she is likely to perform the following activities:

-

Send a picture of her brother to a limb designer

-

Use an electronic device to measure the limb

-

Visualize the design options of the limb with her brother and designer

-

Get the limb design and connections validated by a specialist several thousand miles from her home

-

Select a facility closer to her home for final fitting and delivery

-

Share the design electronically with the local facility

-

Complete necessary arrangements with the local facility and the insurance company

-

Transfer money to the designer and the print facility

-

Make a reservation and have it honored at the print facility

-

Use a wayfinding application on a smart device

-

Watch the limb being 3D printed, quality-tested, assembled, and fitted

-

Make sure the insurance company has paid all parties

-

And, most importantly, watch her brother light up in delight

Each of these experiences is co-created by her desire for value and the responses of a set of digital resources. The scenario also reflects how distance, time, and costs have shrunk while the consumer’s experience is increasingly customized and personal.

The resources and capabilities required to deliver such experiences are vast and complex, spanning mainframe and distributed computers housed in data centers and managed in various ways, including advanced cloud services. Every individual involved in the design and delivery of these contemporary, evolving digital technologies is a Digital Practitioner.

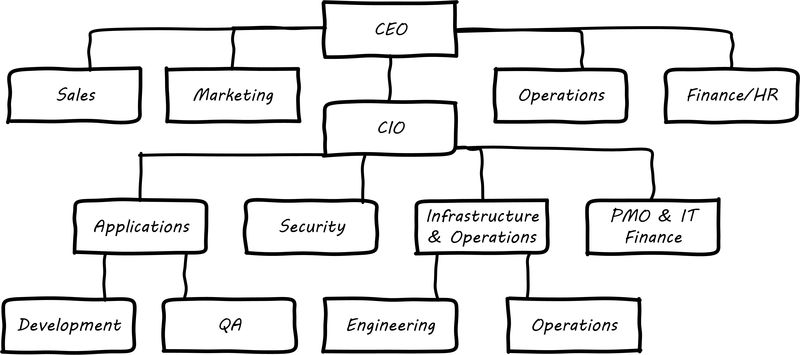

3.2. Digital Transformation as Strategy

Jim Fowler, CIO of GE, stated in 2016: “When I am in business meetings, I hear people talk about digital as a function or a role. It is not. Digital is a capability that needs to exist in every job. Twenty years ago, we broke e-commerce out into its own organization, and today e-commerce is just a part of the way we work. That’s where digital and IT are headed; IT will no longer be a distinct function, it will just be the way we work. … we have moved to a flatter organizational model with “teams of teams” who are focused on outcomes. These are co-located groups of people who own a small, minimal viable product deliverable that they can produce in 90 days. The team focuses on one piece of work that they will own through its complete lifecycle … in [the “back-office”] model, the CIO controls infrastructure, the network, storage, and makes the PCs run. The CIOs who choose to play that role will not be relevant for long.” [128]

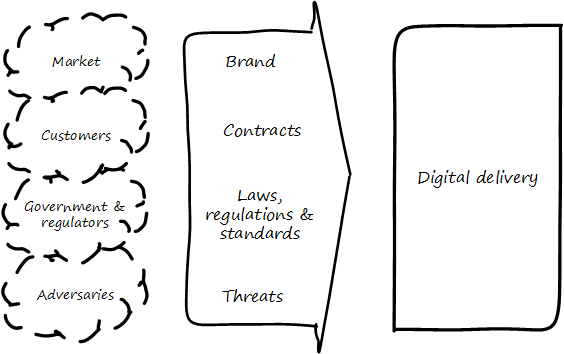

Digital Transformation is fundamentally a strategy and an operating model change, in which technological advancements are leveraged to improve human experiences and operating efficiencies, and to evolve the products and services to which customers will remain loyal. It is the consequence of:

-

The ability to handle information in the digital form

-

Using digital technologies to manage the process of creating, capturing, and analyzing information to deliver a perceptive human-machine interaction experience

The modern digital enterprise faces multiple challenges in its transformation to the digital economy. New technologies (cloud, IoT, machine learning) and new techniques (Digital Product Management (DPM), reliability engineering, continuous delivery) both demand attention. This family of guidance will address both aspects. However, technologies move faster, while techniques and practices evolve at a slower pace.

For organizations to cope with this fast pace of technology evolution and succeed in Digital Transformation, changes should be pervasive through the whole organization. Digital Transformation as strategy should be aligned with the overall organization context and environment, and should be derived from, and sometimes even replace, the existing organization strategy.

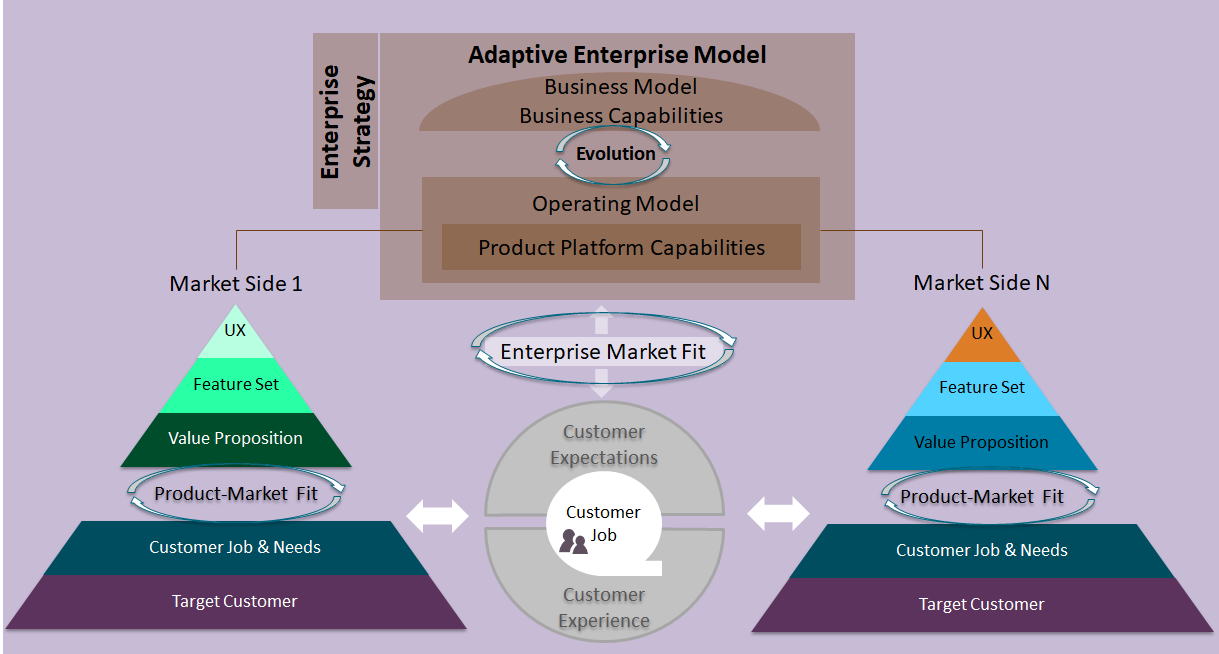

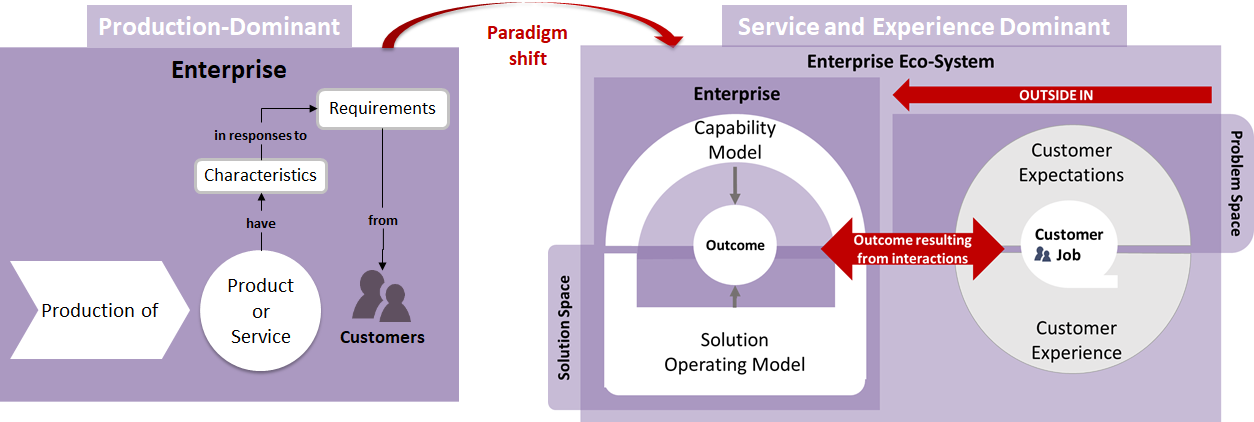

This strategy shift should encompass new disruptive business and IT trends, using an outside-in perspective, and lead the development of new business and operational models connected with digital technologies and platforms and with the digital economy as a whole.

3.3. What is Digital?

Being "digital", in the sense of digitizing information, is not new. It has existed, arguably, since Shannon mapped Boolean logic onto electronic circuits [253]. This document uses the definitions given in Chapter 2, Definitions.

A "digital-first" culture is one in which the business models, plans, architectures, and implementation strategies are based on a digital organization architecture that inspires and rewards a number of desired behaviors, such as servant leadership, strategic value chain thinking, consumer focus, fault tolerance, agility, and more. It requires a workforce with a sense of psychological safety, digitally savvy enough to execute a "digital-first" approach.

As part of this paradigm shift, it is important to have a clear understanding of the existing capabilities, those that can be retired, and the new ones that will be needed. In some cases, organizations may need to deal with all these changes while keeping their current legacy platform and supporting applications.

3.4. Seven Levers of Change

To succeed in today’s digital era, organizations will need to consider the following seven levers of change, as discussed in the White Paper: "The Seven Levers of Digital Transformation" [81]:

-

Business process transformation

-

Customer engagement and experience

-

Product or service digitization

-

IT and delivery transformation

-

Organizational culture

-

Strategy

-

Business ecosystem

These levers require a fundamental understanding of value creation for both the organization and the customer. They equip businesses with a structure to reduce the number of failed projects, guide investment decisions, and create a set of products and services designed to seal customer loyalty. For digital success you will need to assess readiness, actively include your people, measure and govern for value - not activities performed, develop your roadmap top-down, and pivot often with bottom-up learnings.

The example given at the start of this section is an illustration of the impact of seven levers to the primary value chain. In the example, some organizations that enabled the experience may be startups, but others may be more established firms now changing the way they have been operating. The printing facility, the orthopedic and prosthetic specialist, and even the customer changed their expectations and ways they used to function. The change has been made possible with the innovations in digital technologies.

Technology is the glue that connects all players in the ecosystem (suppliers, distributors, service providers, employees, and customers), and it is a powerful means of building a future-ready organization. However, it is worth bearing in mind that technology is not an end in itself. The seven levers are symbiotic pillars that amplify the effects of one another.

For an organization to become Agile, change should start with organizational structure and cultural change – the whole organization should be aligned with the Agile view. The new paradigm for an Agile enterprise should focus on becoming flexible by design: the ability to modify tactics and operations to respond to changing conditions.

4. Principles of the DPBoK Standard

4.1. Guiding Concepts

The content of this document will change over time, but shall remain consistent with these core guiding concepts:

- Comprehensiveness
- Currency
- Capability-based
- Verifiability
- Fine-grained and Clinical Terminology
- Compatibility with Other Frameworks
- Compatibility with Agile Principles
- Compatibility with Enterprise Architecture
- A Learning Artifact
- Developed as a Digital Product
- Competency-based Content
- Scaling Model as Learning Progression

4.2. Comprehensiveness

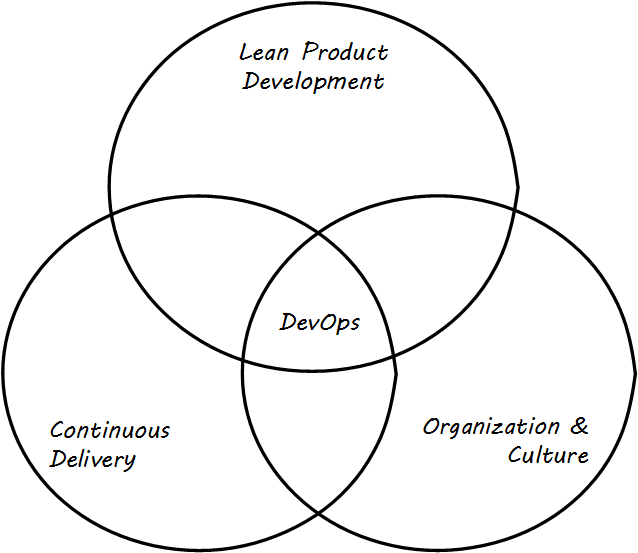

This document shall provide comprehensive guidance for the digital and IT professional in his or her professional contexts, whether market-facing or supporting. It shall address the complete spectrum of concerns encountered by the Digital Practitioner, from the initial decision for digital investment through value discovery, design, construction, delivery, operation, and ongoing evolution. It shall cover management and organizational development topics of collaboration, coordination, structure, and culture, in the context of Digital Product Management (DPM). It shall cover sourcing and human resource management in the digital context. It shall cover Governance, Risk Management, and Compliance (GRC), data and information management, and architecture. It shall strive to be the “go-to” guidance for orienting Digital Practitioners worldwide to their chosen career.

This document shall demonstrate thorough and current consistency with the principles and practices of Agile development and related trends, such as continuous delivery, DevOps, Lean Product Development, Kanban and Lean IT, design thinking in the digital context, SRE, and web-scale computing. It shall curate notable current guidance while maintaining a neutral and clinical overall position on controversial topics. It shall serve the Digital Practitioner by identifying relationships and overarching themes across this curated Body of Knowledge.

The focus of this document, however, is on longer-lived professional and management practices, not the ephemeral aspects of technology. The following should be, in general, discussed primarily by reference at a level suitable for non-technical audiences:

- Technical standards (platforms, protocols, formats, interoperability, etc.)
- Specific programming languages and frameworks

The following in general should be avoided, even by reference:

- Particular vendors and commercial products (this does not include notable open source products with demonstrated longevity); if commercial products are mentioned as examples, at least two examples should be supplied
- Specific commercial methodologies (some exceptions to this may exist, such as ITIL and SAFe, subject to evidence of substantial notability and demonstrated longevity)

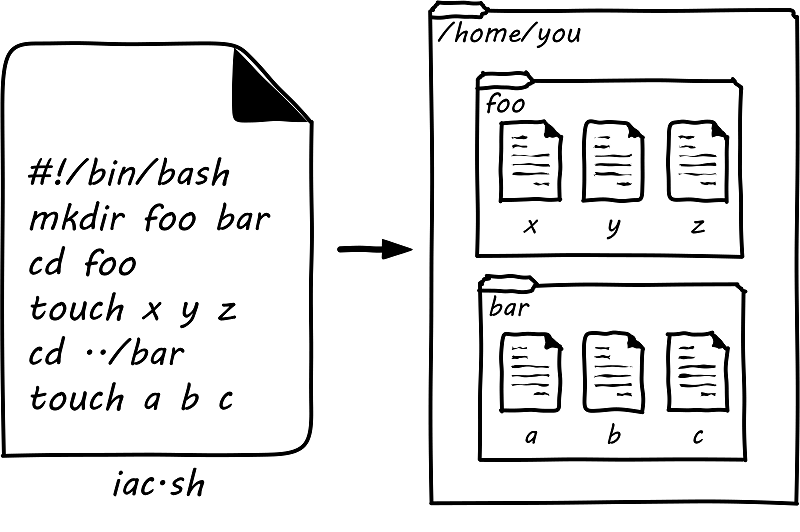

Specific technical practices, such as Infrastructure as Code (IaC), virtualization, cloud, and SRE, may be in scope, to be determined on a case-by-case basis. Broader technical trends such as Internet of Things (IoT) and cognitive technologies may be discussed, primarily in terms of their impact on technical practices. (There are many other bodies of work for the practitioner to refer to on such topics.) In general, this document should not be so technically neutral and abstract as to appear academic and irrelevant to the modern Digital Practitioner.

4.3. Currency

This document shall remain current with industry practices and trends, subject to evidence of notability and reasonable longevity.

4.4. Capability-Based

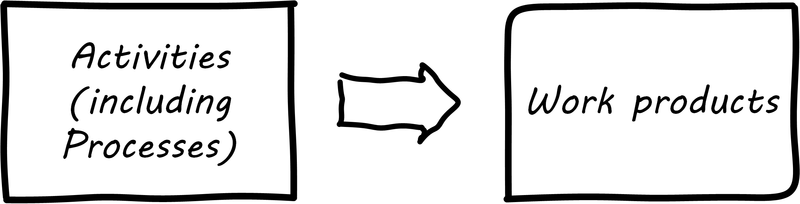

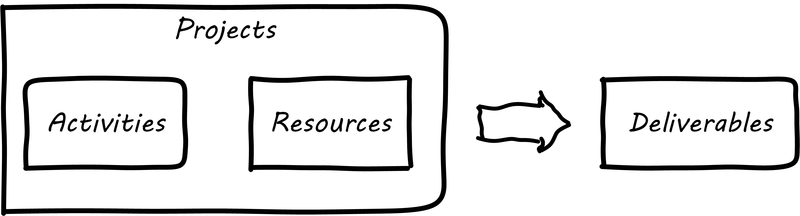

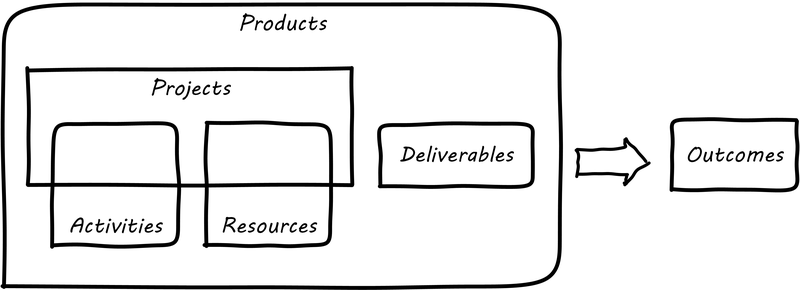

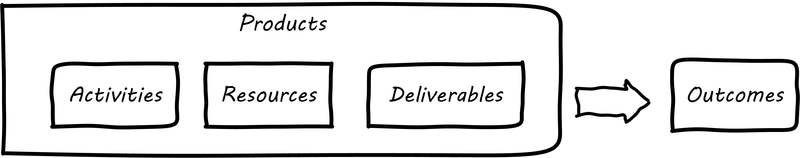

Much current computing and IT guidance uses the concept of “process” as a fundamental building block, with various issues:

- Inconsistency with the definition of “process” favored by the Business Process Management (BPM) community [32]
- Promotion of formalized “process” as a primary, preferred coordination and delivery model and basis for improvement, rather than one mechanism among several

This document should prefer the concept of “capability” as its fundamental structure, in a definition consistent with other work of The Open Group. The concept of “practice” may also be used. The highest-order DPBoK capabilities shall be cross-cutting, large-grained concepts, not to be confused with organizational functions or processes. They shall be derived and ordered based on a scaling model. Establishment or alteration of DPBoK capabilities and practices must be evidence-based. This document shall align with emerging Business Architecture standards in this regard.

4.5. Verifiability

In the computing and digital professions, there is currently a significant and destructive gap between academic theory and research and industrial practice. This can be corrected. For example, medicine has a much more productive feedback loop between researchers and practicing clinicians.

In the interest of narrowing this gap, this document shall be verifiable. Its concepts must be well-grounded, with clear evidence as to their notability. It must not propose concepts or terminology that have little or no evidence of practical adoption in industry. Its structure, principles, practices, and concepts must be falsifiable. It shall be open to rational skepticism and criticism and adaptive in the face of evidence such as surveys, market assessments, analysis of industry narratives and cases, and simulations of socio-technical systems. It should also demonstrate an awareness of useful academic research and problem framing.

The principle of verifiability does permit analysis, synthesis, and interpretation. This document should seek to "add value" to industry understanding wherever possible, but must also remain well-grounded while doing so.

Finally, this document must not fall into the trap of excessive semantic debate and the fruitless search for universally applicable abstract ontologies. A framework with recognized inconsistencies but well grounded in industry domain language is preferable to a perfectly consistent framework based on conjectural concepts.

4.6. Fine-Grained and Clinical Terminology

Within its capability progression, this document shall strive to employ terminology and concepts that are fine-grained, precise, objective, well-supported, and clinical. For example, it is helpful to break a management concern such as “process management” down into lower-level concepts of task ordering and dependencies, cadence, and synchronization. See, for example, Reinertsen’s work on “Managing Flow under Variability” ([230], Chapter 7).

4.7. Compatibility with Other Frameworks

This document should be to the greatest extent possible compatible with other bodies of knowledge and frameworks, while still adhering to the previously articulated principles. It should be positioned as a “standard of standards”, with the objective of aligning and bringing a coherent approach to navigating the currently fragmented information base in the digital industry.

Because other frameworks are large-grained combinations of many concerns, it may not be possible to be compatible in all regards. This document should seek to interoperate with other frameworks using fine-grained terminology. For example, rather than asserting consistency with the Project Management Body of Knowledge® (PMBOK®) as a whole, it is preferable that this document frames its relationship in terms of components such as investment management, planning, resource allocation, risk management, and execution. Similarly, rather than characterizing its relationship to ITIL as a whole, this document should frame its relationship more specifically in terms of the ITIL approaches to product management, process management, and continuous improvement.

Where other frameworks cover a topic sufficiently, this document shall not repeat that coverage. The role of this document is to integrate and synthesize. However, this document shall not overlook or fail to identify points where its point of view varies from the recommendations of other guidance. In such cases, it shall do so in a principled fashion based on clear evidence and with specificity as to the nature of the differences.

Not all sound practice has been formalized through standards. This document may, subject to evidence of notability, reference the contributions of individuals.

4.8. Compatibility with Agile Principles

Agile software development has emerged as a dominant approach in software-intensive systems creation, and is expanding its reach and insights into non-software, non-computing fields as well [234, 233]. There are a variety of principles and perspectives on Agile, starting with the well-known Agile Manifesto [8], furthered by the work of the Agile Alliance. Commercial Agile frameworks are increasing in number and scope; for example, [177, 18].

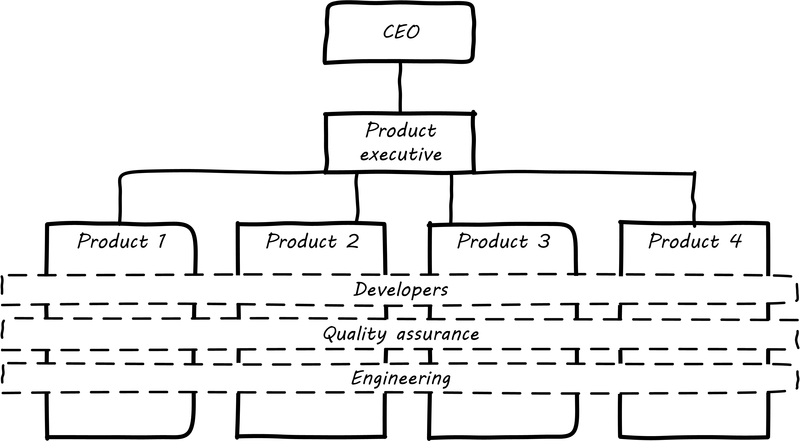

Agile principles can be described in specific and precise ways; Agile’s history and influence in the computing profession are broad and notable [174], and the underlying intellectual foundations of Agile are robust [250, 230]. Agile describes sound approaches and practices for product management with a high Research and Development (R&D) component. Using collaborative, focused, cross-functional teams with iterative, feedback-enhanced methods is the most effective approach for solving complex problems (as compared to routing problem-solving work across functional team boundaries with sequential “phase gates”). Where digital systems management involves the discovery of information and coping with “unknown unknowns”, this document shall favor Agile principles.

However, Agile (as a specific set of documented, curated practices) is at its strongest in the cohesive team context. It does not have the same level of consensus or clarity in larger contexts, and the topic of “scaling Agile” is controversial in the industry. This document should approach the scaling problem in part as a problem of coordination, which is a topic of research attention in academia. Scaling issues are also driven by the organization’s approach to internal investment and organizational development, up to and including culture. Corporate governance must be addressed as well. These are broad topics in management, with many notable and qualified influences for this document to curate into the digital context.

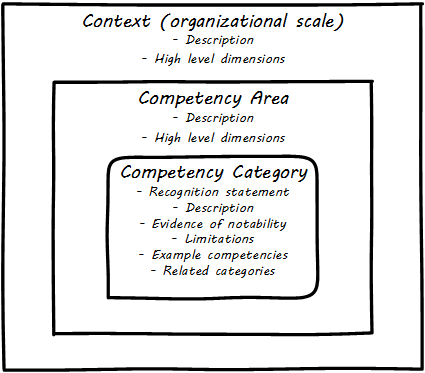

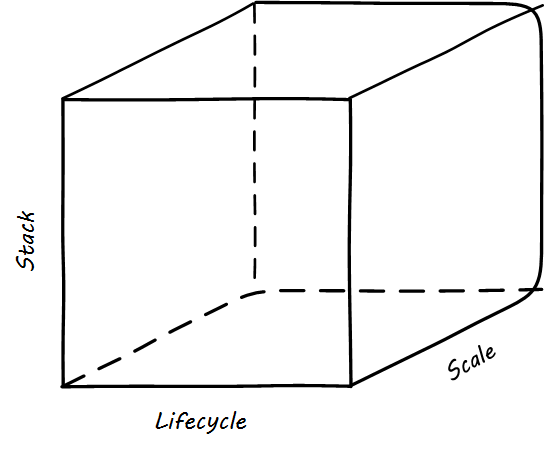

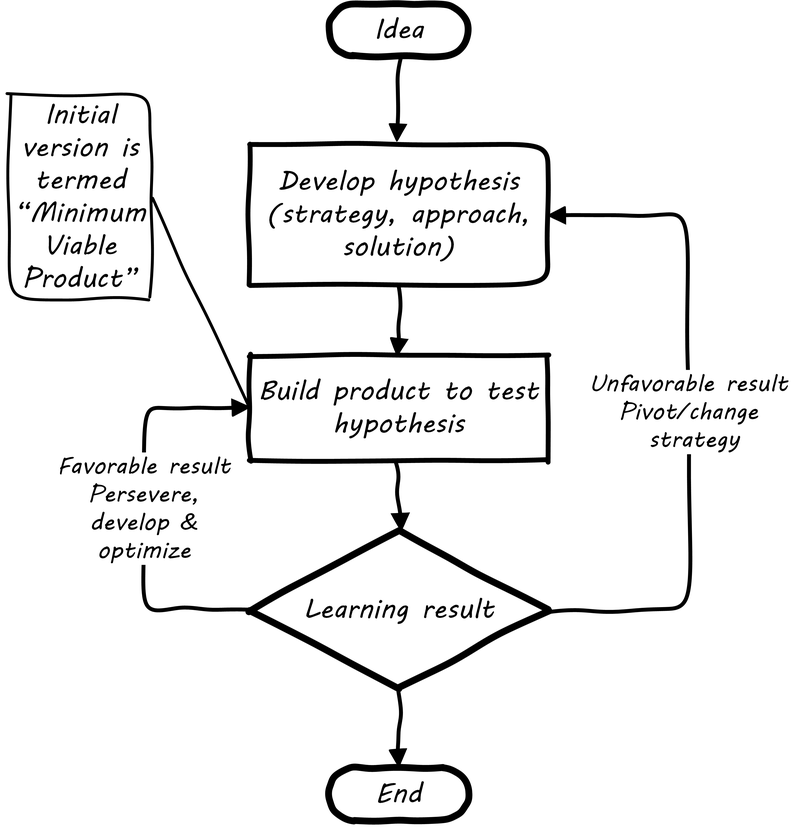

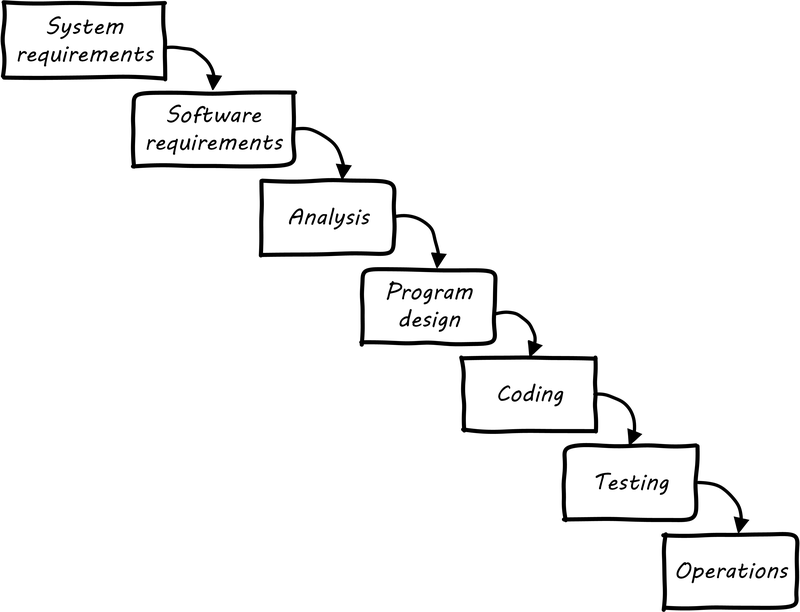

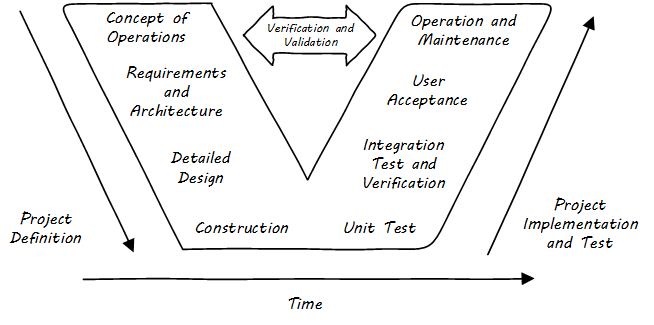

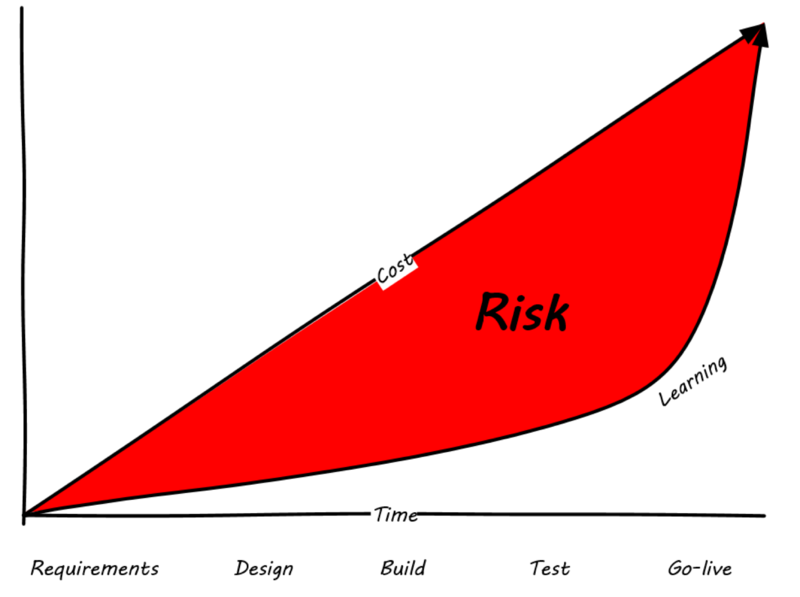

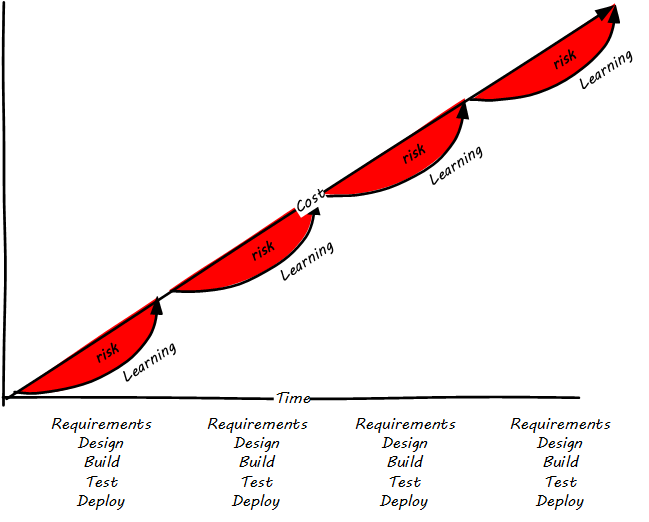

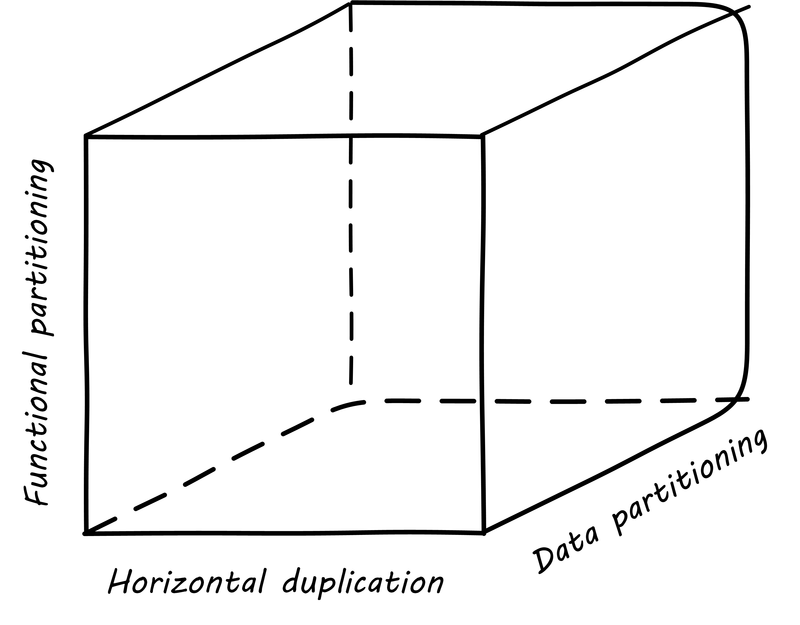

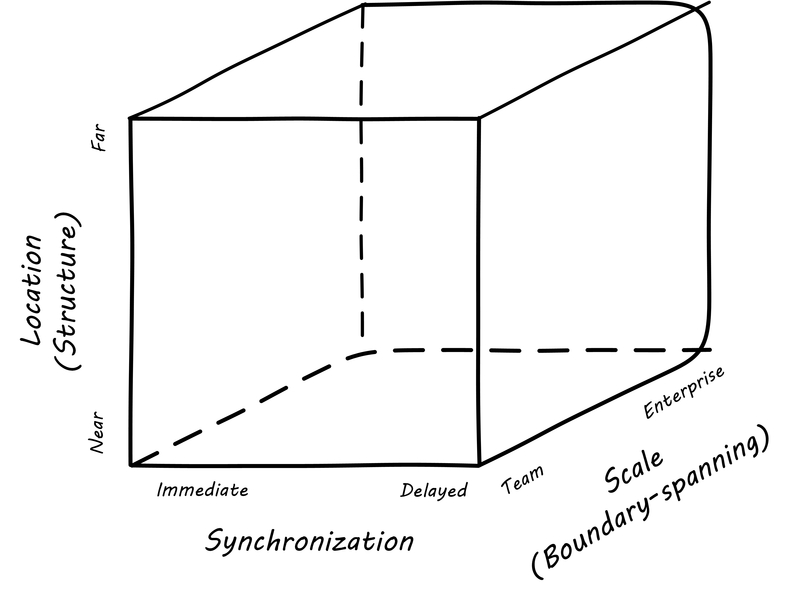

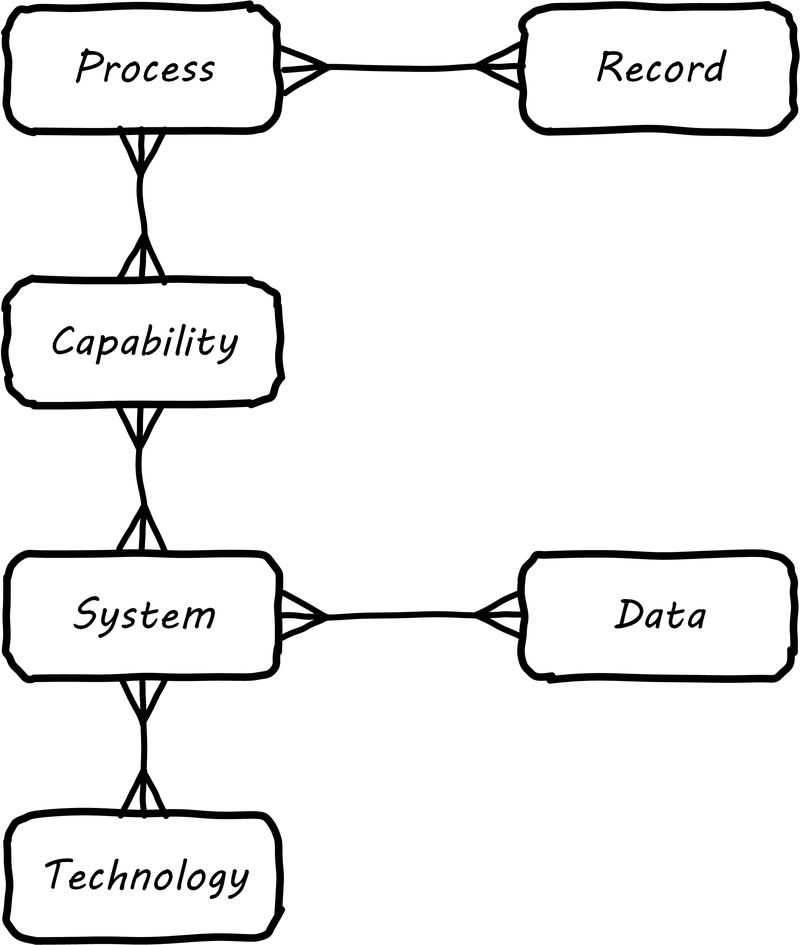

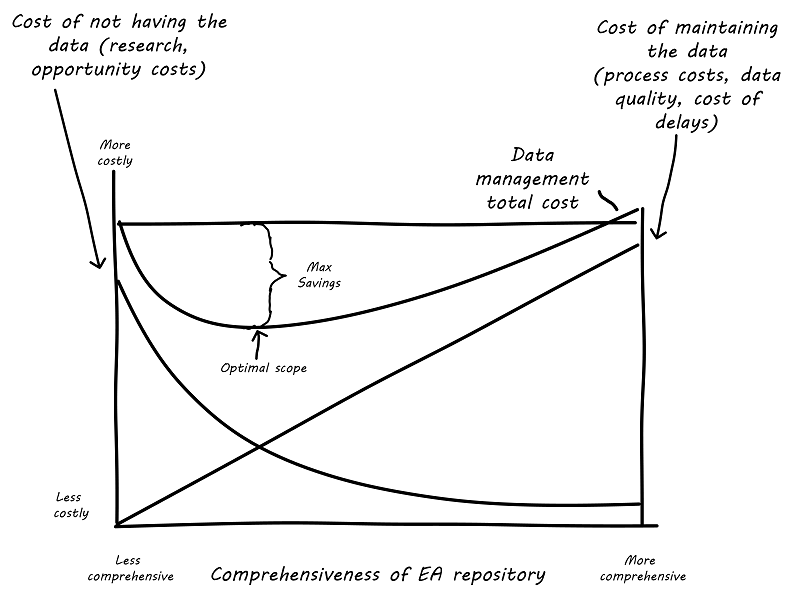

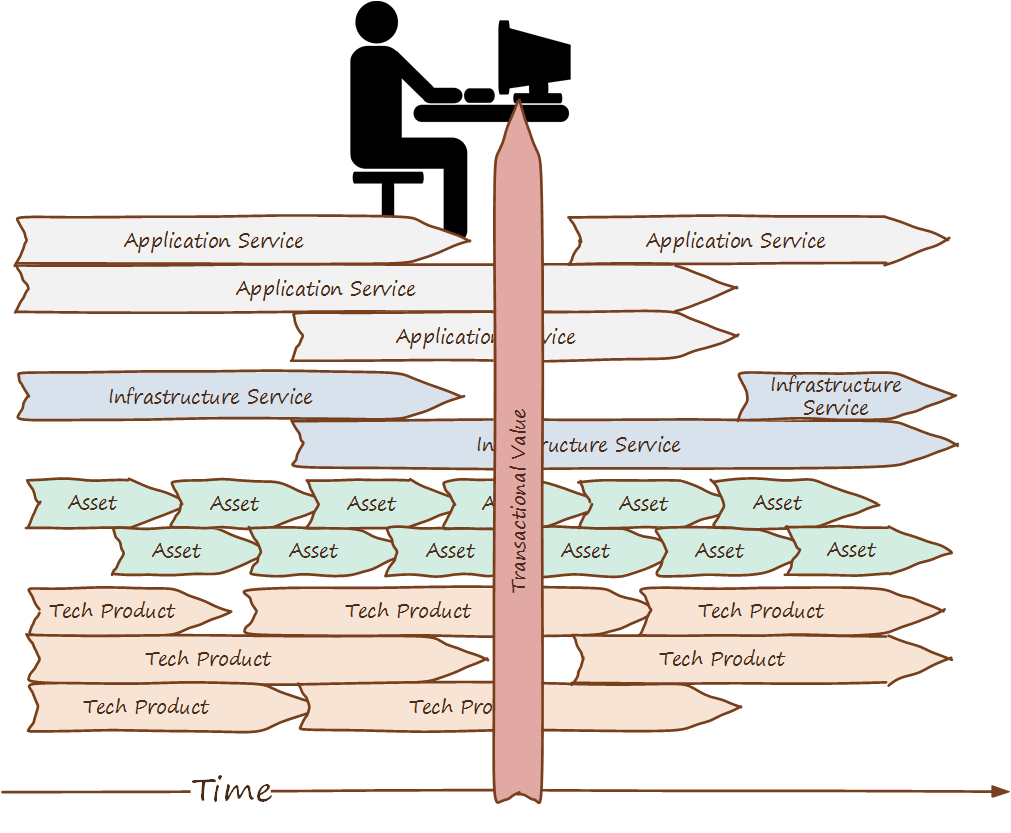

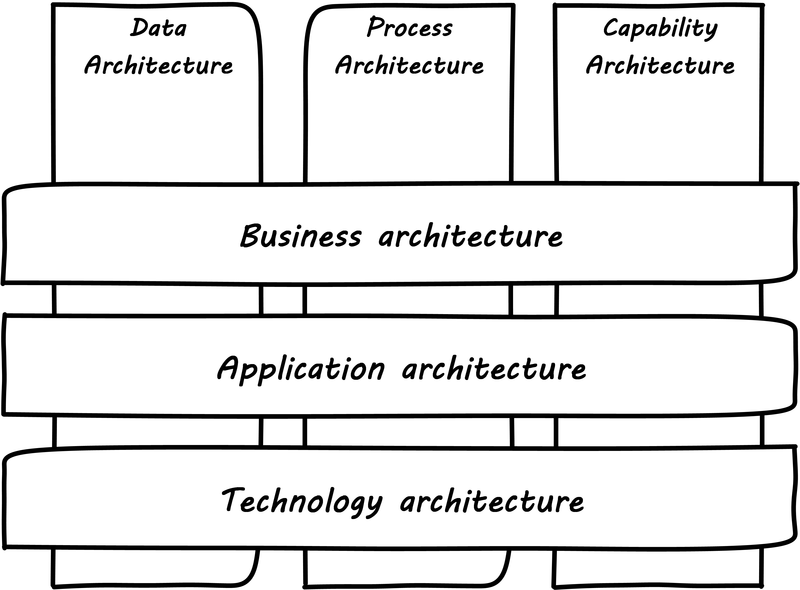

4.9. Compatibility with Enterprise Architecture