Ecosystems Architecture

New Thinking for Practitioners in the Age of AI

by Philip Tetlow, Neal Fishman, Paul Homan, and Rahul

Copyright © 2023, The Open Group

All rights reserved.

No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior permission of the copyright owners.

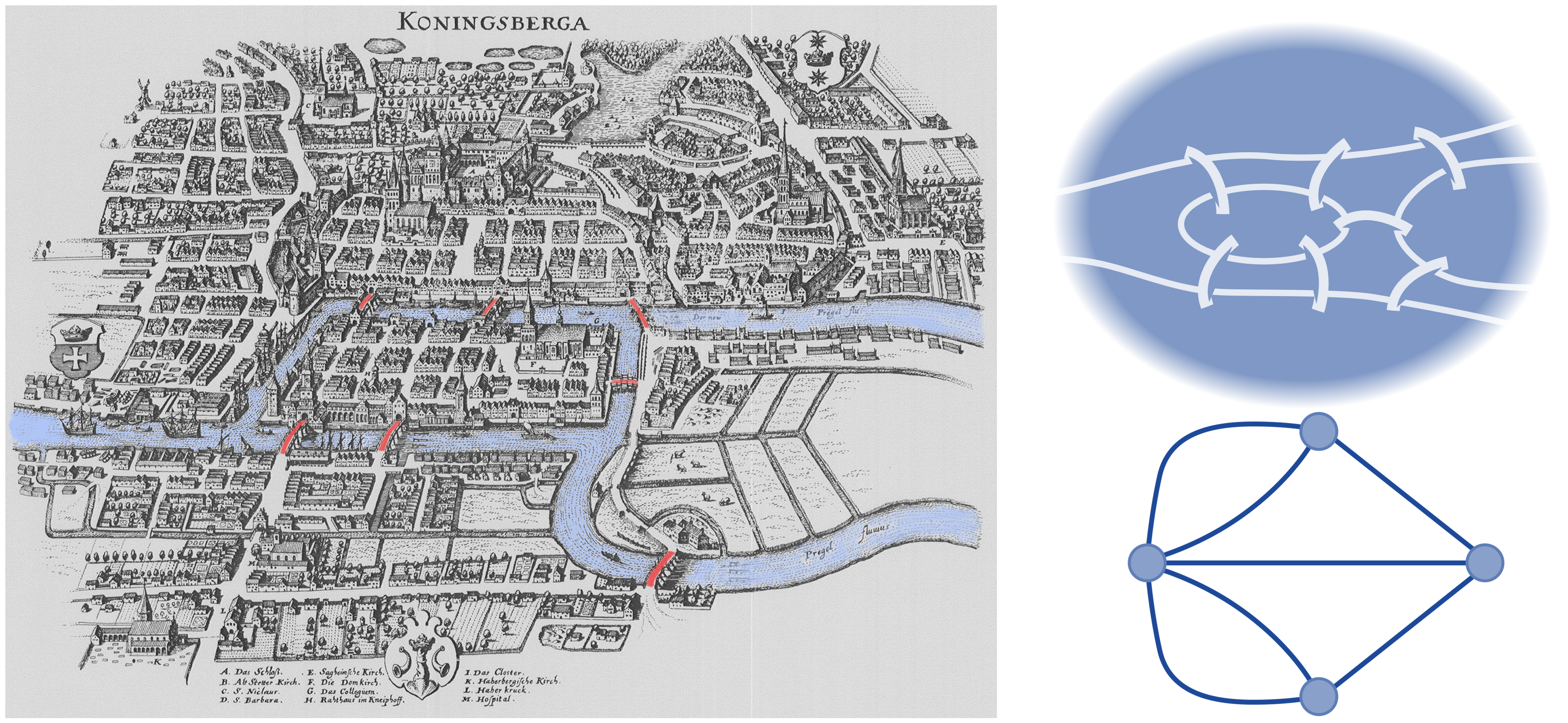

The top right image in Figure 6 is sourced from https://commons.wikimedia.org/wiki/File:7_bridges.svg, and is licensed under the Creative Commons Attribution-Share Alike 3.0 Unported license. For details of the license, see https://creativecommons.org/licenses/by-sa/3.0/deed.en.

The Open Group Press

Ecosystems Architecture

Document Number: G23B

Published by The Open Group, October 2023.

Comments relating to the material contained in this document may be submitted to:

The Open Group, Apex Plaza, Forbury Road, Reading, Berkshire, RG1 1AX, United Kingdom

or by electronic mail to:

ogspecs@opengroup.org

Built with asciidoctor, version 2.0.18. Backend: html5 Build date: 2023-10-27 07:06:30 +0100

Preface

The Open Group Press

The Open Group Press is an imprint of The Open Group for advancing knowledge of information technology by publishing works from individual authors within The Open Group membership that are relevant to advancing The Open Group mission of Boundaryless Information Flow™. The key focus of The Open Group Press is to publish high-quality monographs, as well as introductory technology books intended for the general public, and act as a complement to The Open Group standards, guides, and white papers. The views and opinions expressed in this book are those of the authors, and do not necessarily reflect the consensus position of The Open Group members or staff.

The Open Group

The Open Group is a global consortium that enables the achievement of business objectives through technology standards. Our diverse membership of more than 900 organizations includes customers, systems and solutions suppliers, tools vendors, integrators, academics, and consultants across multiple industries.

The mission of The Open Group is to drive the creation of Boundaryless Information Flow™ achieved by:

-

Working with customers to capture, understand, and address current and emerging requirements, establish policies, and share best practices

-

Working with suppliers, consortia, and standards bodies to develop consensus and facilitate interoperability, to evolve and integrate specifications and open source technologies

-

Offering a comprehensive set of services to enhance the operational efficiency of consortia

-

Developing and operating the industry’s premier certification service and encouraging procurement of certified products

Further information on The Open Group is available at www.opengroup.org.

The Open Group publishes a wide range of technical documentation, most of which is focused on development of standards and guides, but which also includes white papers, technical studies, certification and testing documentation, and business titles. Full details and a catalog are available at www.opengroup.org/library.

About the Authors

Philip Tetlow, PhD, C.Eng, FIET, is CTO for Data Ecosystems at IBM (UK & Ireland) and a Distinguished IT Architect in The Open Group Open Professions program. He is a one-time Vice President of IBM’s Academy of Technology, a W3C member, a Visiting Professor of Practice at Newcastle University and an Adjunct Professor at Southampton University.

Neal Fishman, BSc, is an IBM (US) Distinguished Engineer and a Distinguished IT Architect in The Open Group Open Professions program. He is a former distance learning instructor at the University of Washington. He has written several published works, including Enterprise Architecture Using the Zachman Framework, Viral Data in SOA: An Enterprise Pandemic, and Smarter Data Science: Succeeding with Enterprise-Grade Data and AI Projects.

Paul Homan, MSc, CITP, FBCS, is the CTO for the Industrial Sector in IBM Services (UK & Ireland). He is an IBM Distinguished Engineer and Distinguished IT Architect in The Open Group Open Professions program. With over 30 years’ experience in IT, he is highly passionate about and practically experienced in Architecture & Strategy; in particular, as applied to the Industrial sector. He is well known for applying an Architected Approach to delivering strategic business transformation, and has been a long time contributor to The Open Group, significantly in relation to the TOGAF Standard.

Rahul, MCA, BSc, is a Senior Research Engineer for the Emerging Technology Lab at Honda R&D Europe UK. He primarily focuses on deep tech strategy, data privacy, and decentralized architecture research for next-generation systems and services.

Contributors

The authors gratefully acknowledge the following contributors:

Steve Nicholls, BSc, is the Account Technical Lead Manager at DXC Technology (UK). He is skilled in Digital Strategy, IT Strategy, Data Center Management, and Project Portfolio Management.

Mark Dickson, BA, is the Architecture Forum Director at The Open Group. He is an experienced Chief Architect, Enterprise Architect, and expert in Agile delivery.

Christopher Hinds, MEng, CEng (Mech), is Head of Enterprise Architect for Applications at Rolls-Royce PLC. He has been with the company since 2006 and worked across Engineering, Manufacturing, and IT. Chris sits in Group IT and works in an ecosystem of more than 20 Enterprise Architects and more than 30 third parties influencing architectural direction.

Stuart Weller, BSc, is an Enterprise Architect at Rolls-Royce PLC. He is TOGAF® 9 Certified and is responsible for Enterprise Architecture standards.

Trademarks

ArchiMate, FACE, FACE logo, Future Airborne Capability Environment, Making Standards Work, Open O logo, Open O and Check certification logo, OSDU, Platform 3.0, The Open Group, TOGAF, UNIX, UNIXWARE, and X logo are registered trademarks and Boundaryless Information Flow, Build with Integrity Buy with Confidence, Commercial Aviation Reference Architecture, Dependability Through Assuredness, Digital Practitioner Body of Knowledge, DPBoK, EMMM, FHIM Profile Builder, FHIM logo, FPB, IT4IT, IT4IT logo, O-AA, O-DEF, O-HERA, O-PAS, O-TTPS, Open Agile Architecture, Open FAIR, Open Footprint, Open Process Automation, Open Subsurface Data Universe, Open Trusted Technology Provider, Sensor Integration Simplified, SOSA, and SOSA logo are trademarks of The Open Group.

Betamax is a trademark of Sony Corporation.

Box is a registered trademark of Box, Inc.

Facebook is a registered trademark of Facebook, Inc.

Forrester is a registered trademark of Forrester Research, Inc.

Gartner is a registered trademark of Gartner, Inc.

Google is a registered trademark of Google LLC.

IBM is a registered trademark of International Business Machines Corporation.

JavaScript is a trademark of Oracle Corporation.

McKinsey is a trademark of McKinsey Holdings, Inc.

MDA, Model Driven Architecture, and UML are registered trademarks and Unified Modeling Language is a trademark of Object Management Group, Inc.

Mural is a registered trademark of Tactivos, Inc.

Python is a registered trademark of the Python Software Foundation.

Twitter is a trademark of Twitter, Inc.

VHS is a trademark of the Victor Company of Japan (JVC).

W3C and XML are registered trademarks of the World Wide Web Consortium (W3C®).

WhatsApp is a trademark of WhatsApp LLC.

YouTube is a registered trademark of Google LLC.

Zachman Framework is a trademark of John A. Zachman and Zachman International.

All other brands, company, and product names are used for identification purposes only and may be trademarks that are the sole property of their respective owners.

Acknowledgements

The foundations for this book were laid down by a project under the auspices of the IBM Academy of Technology. Accordingly, the founding members of that project must be credited, with a specific mention going to John H Bosma, William Chamberlin, Scott Gerard, Carl Anderson, and Richard Hopkins. Their kind input significantly helped incubate the ideas in this book.

Next, we must thank Mark Dickson, who worked tirelessly to steer our team within The Open Group as we captured and composed our thoughts while writing.

We are also indebted to James Hope, who helped implement and test some of our ideas on homology in Chapter 3.

Finally, we must thank the late Ian Charters, Grady Booch, and Professor Barrie Thompson for their inspiration, support, and encouragement over the years. They are the ones who set the compass.

Foreword

Simply stated; it is time!

In other words, we are at a point where both business and Information Technology (IT) communities must establish practices designed to embrace the various ecosystems upon which their enterprises depend.

A pragmatic place to start is with architecture; specifically, IT architecture aimed at the ecosystems level — at the hyper-enterprise level, as it were, and with an ambition to augment informal practice already in place in and around Enterprise Architecture. This comes from domains like Electronic Data Interchange (EDI), Information Retrieval (IR), Generative Artificial Intelligence (GenAI), and Blockchain, and covers ideas not yet consolidated or formalized — as is the case with Enterprise and/or Systems Architecture.

So, let us start with a caveat. Ecosystems Architecture, as presented here, is an additive discipline to that of Enterprise Architecture, meaning that Ecosystems Architecture is not intended to replace or compete with that discipline. On the contrary, the work of an Enterprise Architect is seen as a necessary pathway, or even a prerequisite, toward becoming an Ecosystem Architect, by drawing upon skills already mastered.

The business side of the enterprise has eternally relied on the broader support of its surrounding ecosystems. To that end, ecosystems and ecosystems thinking are nothing new. What is new, however, is the recognition that IT architecture can play an instrumental role in how ecosystems and the enterprise interact. Hundreds of universities around the world already offer courses containing an ecosystems element. For instance, majors can be obtained in Supply Chain Management, Logistics, and so on. Yet, while optimization is often covered in these programs, the role of IT architecture in that optimization is not. IT architects, therefore, have invariably played a passive role when organizations think in terms of the world around them.

That needs to change.

Why? Simply because today’s enterprises swim in the sea that is the global digital ether, and without the support of the dynamic electronic connections around them, they would drown. As a case in point, the supply chain crisis brought about by COVID mortally wounded many organizations who were blasé about their extended digital dependence. It thereby quashed any fallacy of ecosystems being extraneous to mission-critical business concerns. So, if “being digitally extended” is considered to be important, then Ecosystems Architecture and the role of Ecosystems Architects must also be seen as imperative. In saying that, and as an aside, it is important to note that although global data exchange protocols, like TCP/IP, EDI X.400, and NIEM, have been successfully used for years, and although they may indeed be part of any Ecosystems Architect’s kitbag, Ecosystems Architecture should not be considered synonymous for any or all of them. Its scope is far greater, with a broad affinity to Enterprise Architecture — while being distinctively different.

All of this means that Ecosystem Architects must sit alongside their business counterparts as their organizations build out in their surrounding ecosystems. This, of course, requires an eye to the technologies involved, and the support of unbiased, systematized, and standardized practice.

As an example of bias, naïve organizations often make the mistake of believing that they live at the epicenter of their customer and supplier networks. This is rarely, if ever, the case as Ecosystems Architecture is keen to point out. Not only does centrality skew an organization’s worldview, but it can serve to restrict its opportunities. This is important because, as the grander context of business scales and becomes more complex, retaining objectivity will become ever more important. Aspiring to create a discipline that can transcend the reach of personal and organizational viewpoints was, therefore, the primary motivation behind the push for Ecosystems Architecture as a distinct and discrete discipline.

Neal Fishman

Distinguished Engineer, IBM

Prologue

History and Background

“Problems cannot be solved at the same level of awareness that created them.” — Albert Einstein

We are still amid a technological epoch. Not since the harnessing of steam power have we seen such a change. During the Industrial Revolution, innovation moved from small-scale artisan endeavor to widespread industrialization, which started the upward spiral of globalization and eventually spat out an ever-expanding network of effective electronic communications. In short, rapid technological advances began to catapult human potential beyond its natural limits as the 20th century dawned.

As the world’s communication networks expanded, and our brightest minds connected, the take-up of applied know-how became transformative. So much so, that by the end of the 20th century, technology in general had irrefutably changed the course of history. As a key indicator, and even taking into account deaths due to war and conflict — rounding off at around 123[1] million [1] — our planet’s population grew three times faster than at any other time in history, from 1.5 to 6.1 billion souls in just 100 years [2].

But amid all that progress, one episode stands out.

As the demands of World War II pushed the world’s innovators into overdrive, a torrent of advance ensued. Where once crude equipment had proved sufficient to support mechanization, sophisticated electronic circuits would soon take over as the challenges of war work became clear — challenges so great that they would force the arrival of the digital age.

Whereas previous conflicts had majored in the manual interception and processing of military intelligence, by the outbreak of war in 1939, electronic communications had become dominant. Not only were valuable enemy insights being sent over the wire, but, with the earlier arrival of radio, long-distance human-to-human communications were literally in the air. World War II was, therefore, the first conflict to be truly fought within the newly formed battlegrounds of global electronic communication.

Warring factions quickly developed the wherewithal to capture and interpret electronic intelligence at scale. That advantage was coveted throughout the war and long after. That and the ability to harness science’s newest and most profound discoveries. Both provided the impetus for unparalleled advance, as security paranoia gripped the world’s political elite. On the upside came the rise of digital electronics and the whirlwind of information technology that would follow, but in parallel, we developed weapons of mass destruction like the atomic and hydrogen bombs.

At the heart of it all was information and kinship, in our voracious appetite for knowledge and the unassailable desire to find, share, and protect that which we hold true. Nowhere in human history will you find better evidence that we are a social species; all our surrounding computers and networks do today is underline that fact. Grasping that will be key to understanding the text to follow. For instance, as a race, we have often advanced by connecting to maximize our strengths and protect our weaknesses; whether that be how we hunt mammoth, build pyramids, or create our latest AI models. This is the tribe-intelligence that has bolstered our success and welcomed us in the onward march of technology to augment our strengths and protect our weaknesses. It speaks to the fact that evolution cannot be turned back and, likewise, neither can the advance of any technology that catalyzes or assists its progress. Information technology will always move forward apace, while the sprawling threads of the world’s networks can only ever extend to increase their reach.

These things we know, even though we might poorly distill and communicate the essence of the connected insight they bring. What is certainly lesser known, though, is what ongoing impact such expansion and advance will have on professional practice, especially since future technological advances may soon surpass the upper limits of God-given talents.

As technologists, we wear many hats. As inventors, we regularly push the envelope. But as architects, engineers, and inquisitors, we are expected to deliver on the promise of our ideas: to make real the things that we imagine and realize tangible benefit. In doing so, we demand rigor and aspire to professional excellence, which is only right and proper. But in that aspiration lies a challenge that increasingly holds us back: generally, good practice comes out of the tried and tested, and, indeed, the more tried and tested the better.

But tried and tested implies playing it safe and only doing the things that we know will work. Yet how can such practice succeed in the face of rapid advance and expansion? How can we know with certainty that old methods will work when pushing out into the truly unknown, and at increasing speed?

There can only ever be one answer, in that forward-facing practice must be squarely based on established first principles — the underlying tenets of all technological advances and the very philosophical cornerstones of advancement itself, regardless of any rights or wrongs in current best practice.

So, do any such cornerstones exist? Emphatically yes, and surprisingly they are relatively simple and few.

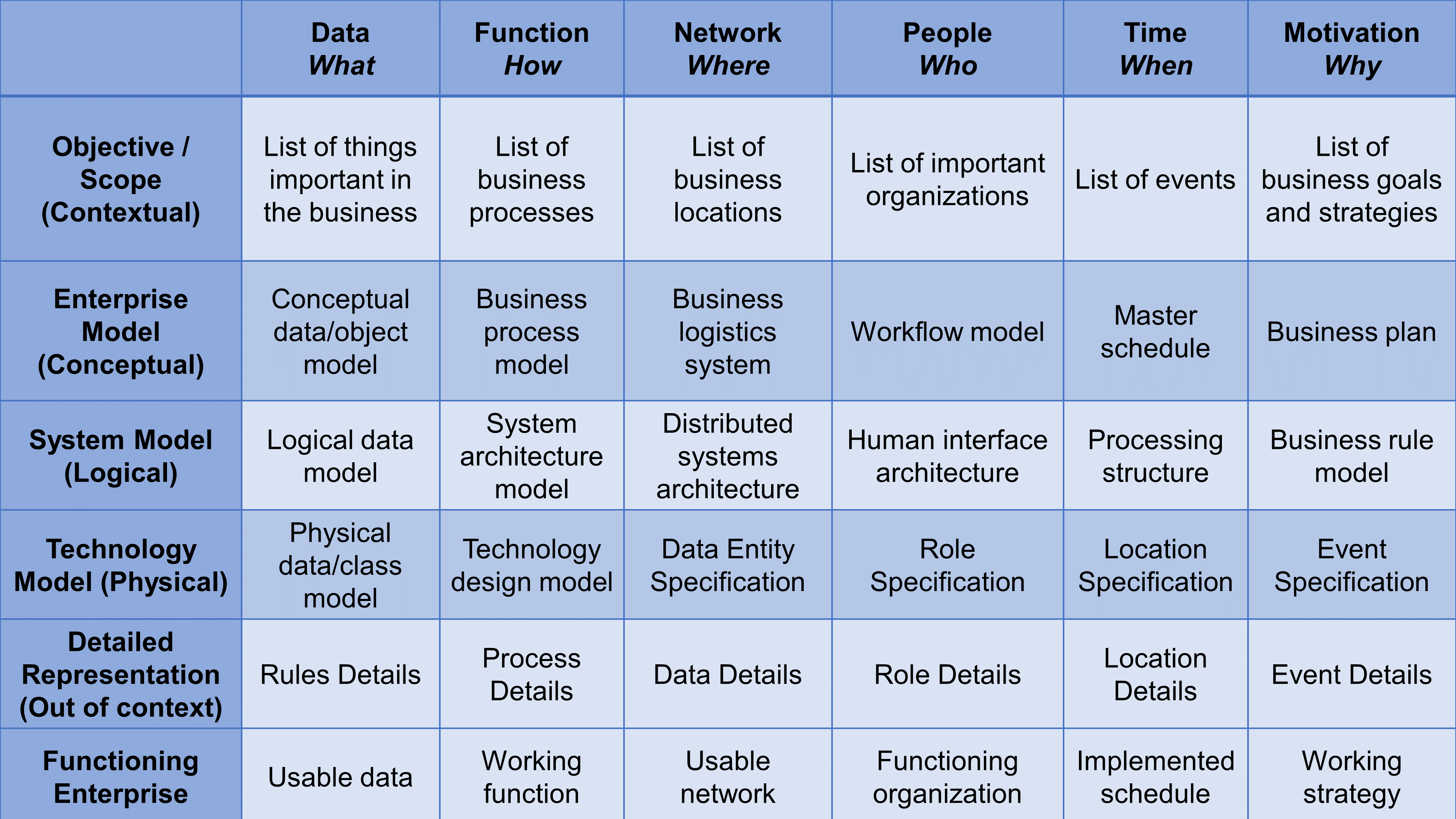

Scale and Complexity

As we become more proficient with a tool or technology, we largely learn how to build bigger and better things with it. Be they bridges, skyscrapers, or Information Technology (IT) solutions, their outcomes largely become business as usual once realized. What really matters though, is that the tools and techniques used for problem-solving evolve in kind as demand moves onward and upward. For that reason, when a change in demand comes along, it is normally accompanied by an equivalent advance in practice, followed by some name change in recognition. For instance, IT architects talk of “components”, whereas Enterprise Architects talk of “systems”. Both are comparable in terms of architectural practice but differ in terms of the scale and abstraction levels they address. In that way, IT architecture focuses on delivering IT at the systems level, whereas Enterprise Architecture is all about systems of systems.

Interestingly, the increases in scale and complexity that brought us Enterprise Architecture were themselves a consequence of advances in communications technology as new network protocols catalyzed progress and expanded the potential for connectivity — so that a disparate IT system could comfortably talk to another disparate IT system. Nevertheless, boil the essence of this progress down and only two characteristics remain: the scale at which we choose to solve problems, and the levels of complexity necessary to successfully deliver appropriate solutions.

That is it. In a nutshell, if we can work from a base of managing scale and complexity, then the selection of tools and techniques we use becomes less important.

Considering the lesser of these two evils first, in recent times, we have become increasingly adept at tackling complexity head-on. For instance, we now understand that the antidote to complexity is the ability to abstract. As more and more complexity is introduced into the solutions we build, as professionals we simply step back further in order to squeeze in the overall perspectives we need. We therefore work using units of a “headful”, as the architect Maurice Perks [3] once said — any solution containing more than a headful of complexity [4] needs multiple professionals in attendance. As we step back, detail is obviously lost as the headful squeezing happens, even though we admirably try to apply various coping techniques, like dissecting out individual concerns and structuring them into hierarchies, ontologies, or whatever. But today, that is mostly fine as we have learned to employ computers to slurp up the fallout. This is the essence of Computer Aided Design (CAD) and the reason why tools like Integrated Development Environments (IDEs) have proved so successful. Complexity, therefore, is not a significant challenge. We mostly have it licked. Scale, on the other hand, is much more of a challenge.

In simple terms, the difficulty with asking the question “How big?” is that there is theoretically no upper limit. This happens to be a mind-bending challenge, especially given that the disciplines of architecture and engineering are built on the very idea of limits. So, before we can build anything, at least in a very practical sense, we must know where and when to stop. In other words, we must be able to contain the problems we want to solve — to put them in a mental box of some kind and be able to close the lid. That is the way it works, right?

Well, actually … no, not necessarily. If we were to stick to the confines of common-or-garden IT architecture and/or engineering then perhaps, but let us not forget that both are founded on the principles of science and, more deeply, the disciplines of mathematics and philosophy in some very real sense. So, if we dared to dive deep and go back to first principles, it is surely relevant to ask if any branch of science or mathematics has managed to contain the idea of the uncontainable? Are either mathematics or philosophy comfortable with the idea of infinity or, more precisely, the idea of non-closable problem spaces — intellectual boxes with no sides, ceilings, or floors?

Not surprisingly, the answer is “yes” and yes to the point of almost embarrassing crossover.

Philosophy, Physics, and Technology at the Birth of the Digital Age

For appropriate context, it is important that we take a historical perspective. This is important stuff, as hopefully will become clear when ideas on new architectural approaches are introduced later, so please bear with the narrative for now. This diversion ultimately comes down to not being able to understand the future without having a strong perspective on the past.

As Alan Turing, the father of modern-day computer science, passed through the gates of Bletchley Park for the last time at the end of World War II, he was destined to eventually go to Manchester, to take up a position at the university there. Contrary to popular belief, he had not developed the world’s first digital computer at Bletchley, but the team around him had got close, and Turing was keen to keep up the good work. Also contrary to popular belief, Turing had not spent the war entirely at Bletchley, or undertaken his earlier ground-breaking work on logic entirely in the UK. Instead, he had found himself in the US, first as a doctoral student before the war, then as a military advisor towards its end. His task was to share all he knew about message decryption with US Intelligence, once America had joined with the Allied forces in Europe.

On both his visits, he mixed with rarefied company. As a student at Princeton University, for instance, he would no doubt have seen Albert Einstein walking the campus’s various pathways, and his studies would have demanded the attention of the elite gathered there. So rarefied was that company, in fact, that many of Turing’s contemporaries were drafted in to help with the atomic bomb’s Manhattan Project, as the urgency of war work wound up in the US. Most notably on that list was the mathematician and all-round polymath John von Neumann, who had not only previously formulated a more flexible version of logic than the Boolean formulation [5] central to Turing’s ground-breaking work, but had captured the mathematical essence of quantum mechanics more purposefully than anyone else in his generation. That did not mark him out solely as a mathematician or a physicist though. No, he was more than that. By the 1940s, von Neumann had been swayed by the insight of Turing and others, and had become convinced of the potential of electronic computing devices. As a result, he took Turing’s ideas and transformed them into a set of valuable engineering blueprints. This was ground-breaking, fundamental stuff, as, for instance, his sequential access architecture is still the predominant pattern used for digital processor design today.

As for his ongoing relationship with Turing, fate would entangle their destinies, and in the years following World War II, von Neumann and Turing’s careers would overlap significantly. Both worked hard to incubate the first truly programmable electronic computers, and both became caught up in the brushfire of interest in nuclear energy. Von Neumann’s clear brilliance, for instance, unavoidably sucked him into the upper workings of the US government, where he advised on multiple committees, several of which were nuclear-related, while Turing’s team nursed its prototype computer, the “Baby”,[2] on funds reserved for the establishment of a British nuclear program. Both, therefore, survived on a diet of computing and nuclear research in tandem, but what resulted was not, strictly speaking, pure and perfect.

For sure, Turing and von Neumann understood the base principles of their founding work better than anyone else, but both were acutely aware of the engineering limits of the day and the political challenges associated with their funding. So, to harden their ideas as quickly and efficiently as they could, both knew they had to compromise. As the fragile post-war economy licked its wounds and the frigid air of the Cold War swept in, both understood they had to be pragmatic to push through their ideas; it was not the time for idealism or precision. Technical progress, and fast technical progress at that, was the order of the day, especially in the face of a growing threat of a changing geopolitical world.

For Turing, that was easier than for von Neumann. His model of computing was based on the Boolean extremes of absolute and complete logical truth or falsehood: any proposition under test must be either completely right or wrong. In engineering terms, that mapped nicely onto the idea of bi-pole electrical switching, as an electronic switch was either on or off, with no middle ground. Building up from there was relatively easy, especially given the increasingly cheap and available supply of electronic bi-pole switches and relays in the form of valves. So, many other engineering problems aside, the route to success for Turing’s followers was relatively clear.

The same was not true for those ascribed to von Neumann’s vision, however. In his mind, von Neumann had understood that switches can have many settings, not just those of the simplest true/false, on/off model. Instead, he saw switching to be like the volume knob on a perfect guitar amplifier; a knob that could control an infinite range of noise. This was a continuous version of switching, a version only bounded by the lower limit of impossibility and the upper limit of certainty. The important point though, was that infinite levels of precision could be allowed in between both bounds. In von Neumann’s logic, a proposition can therefore be asserted as being partly true or, likewise, partly false. In essence then, von Neumann viewed logic as a continuous and infinite spectrum of gray, whereas Turing’s preference was for polarized truth, as in black or white.

In the round, Turing’s model turned out to be much more practical to build, whereas von Neumann’s model was more accommodating of the abstract theoretical models underlying computer science. In that way, Turing showed the way to build broadly applicable, working digital computers, whereas von Neumann captured the very essence of computational logic at its root. He, rather than Turing, had struck computing’s base substrate and understood that the essential act of computing does not necessarily need the discreet extremes at the core of Boolean logic.

Computing, von Neumann had realized, could manifest itself in many ways. But more than that, by establishing a continuous spectrum of logic, his thinking mapped perfectly onto another domain. In what was either a gargantuan victory for serendipity or, more likely, a beguiling display of genius, von Neumann had established that the world of quantum mechanics and the abstract notion of computing could be described using the same mathematical frameworks. He had, therefore, heralded what the physicist Richard Feynman would proclaim several decades later:

“Nature isn’t classical, dammit, and if you want to make a simulation of nature, you’d better make it quantum mechanical…” [6]

Relevance to IT Architecture and Architectural Thinking

This, perhaps perplexing, outburst would turn out to be the clarion that heralded the rise of quantum computing, and although it may have taken some time to realize, we now know that the quantum paradigm does indeed map onto the most foundational of all possible computational models. It is literally where the buck stops for computing and is the most accommodating variant available. All other models, including those aligned with Turing’s thinking, are merely derivatives. There is no further truth beyond that, full stop, end of sentence, game over.

But why this historic preamble, and specifically why the segue into the world of quantum?

If you look broadly across the IT industry today, you will see that it is significantly biased toward previous successes. And for good reason — success builds upon success. For instance, we still use von Neumann’s interpretation of Turing’s ideas to design the central processing units in most of the world’s digital computers, even though we have known for decades that his more advanced vision of computing is far broader and more inclusive. Granted, state-of-the-art quantum computing is still not quite general purpose yet, or ready for mainstream, but the physical constraints dominant at the atomic level mean that the sweet spot for quantum will always be more focused than widespread. But regardless, that should not limit our thinking and practice. Only the properties carried forward from computation’s absolute ground truths should do that, and not any advantage that has been accrued above them through the necessities of application.

And that is a significant problem with IT architecture today. Like most applied disciplines, it is built from a base of incremental application success, rather than a clear understanding of what is possible and what is not. In other words, the mainstream design of IT solutions at both systems and enterprise levels has been distracted by decades of successful and safe practice. To say that another way, we rely heavily on hands-on tradition.

Engineers and architects of all kinds may well be applauding at this point. “If in doubt, make it stout and use the things you know about” is their mantra, and that is indeed laudable to a certain point. Nevertheless, as we seek to push out to design and build IT systems above enterprise scale, out into the era of hyper-Enterprise Architecture as it were, the limits of tried-and-tested are being pushed. In such a world, we may plausibly need to model billions of actors and events, all changing over time and each with a myriad of characteristics. And that takes us out into an absolute headache of headfuls worth of complexity.

Where, in the past, the scale and complexity of the IT systems we aspired to design and build could be comfortably supported by the same hands-on pragmatic style favored by Turing, as he steered toward the nascent computers of a post-war world, we are no longer afforded such luxury. The demands of today’s IT systems world now lie above the levels of such pragmatism and out of the reach of any one individual or easily managed team. No, the game has changed. Now, we are being forced to move beyond the security afforded by reductionist[3] approaches [7], in the hope that they might yield single-headful victories. This is therefore a time to open up and become more accommodating of the full spectrum of theory available to us. It is the time to appreciate the kick-start given by Turing and move on to embrace the teachings of von Neumann. This is the age where accommodation will win out over hands-on pragmatism. It is a time to think brave thoughts. Professional practice has reached a turning point, whether we like it or not.

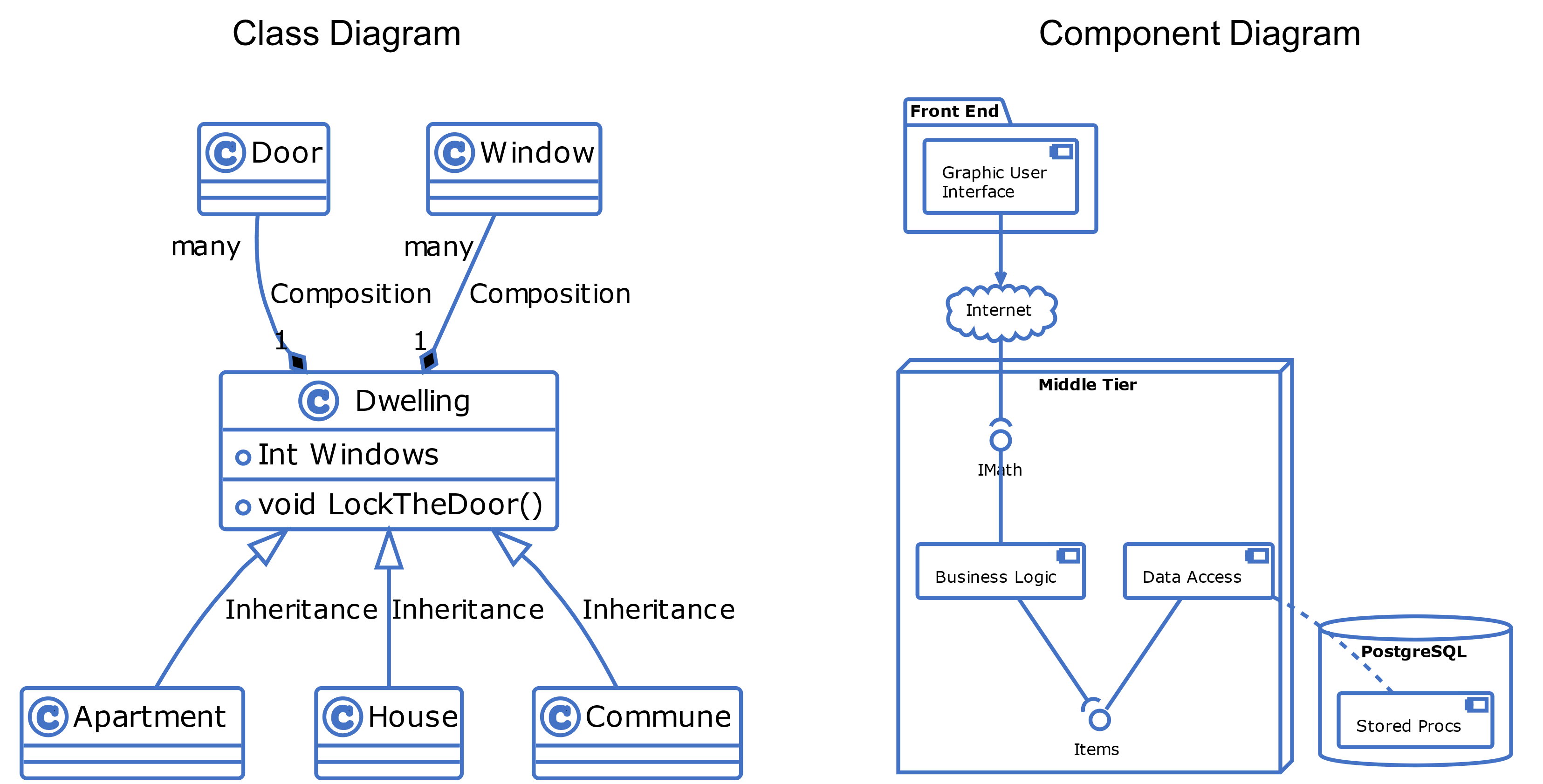

Even our most respected experts agree [8]. Take Grady Booch [9] for instance, one of the inventors of the Unified Modeling Language™ (UML®) and one of the first to acknowledge the value of objects [10] [11] in software engineering. He openly talks in terms of three golden ages of architectural thinking.[4] The first, he suggests, was focused on algorithmic decomposition, invested in the translation of functional specifics into hand-written program code. In other words, the manual translation of rule systems into machine-readable forms dominated. Then, as Paul Homan [12] suggests, came the realization that ideas could be encapsulated and modeled independently of any underlying rules systems or data structures. While fed on the broadening out of use cases [13], that provided the essence of the second age, which saw the rise of object and class decomposition and in which, it might be argued, abstraction and representation broke free — so that architectural thinking need not focus exclusively on code generation. It also forked work on methodologies into two schools. The first embraced the natural formality of algorithms and therefore sought precision of specification over mass appeal. Today, we recognize this branch as Formal Methods [14], and its roots still run deep into mathematical tradition. For the design of safety-critical IT systems, like life support in healthcare or air traffic control, formal methods still play a vital role.

Alongside, however, came something much freer, more intuitive, and much more mainstream.

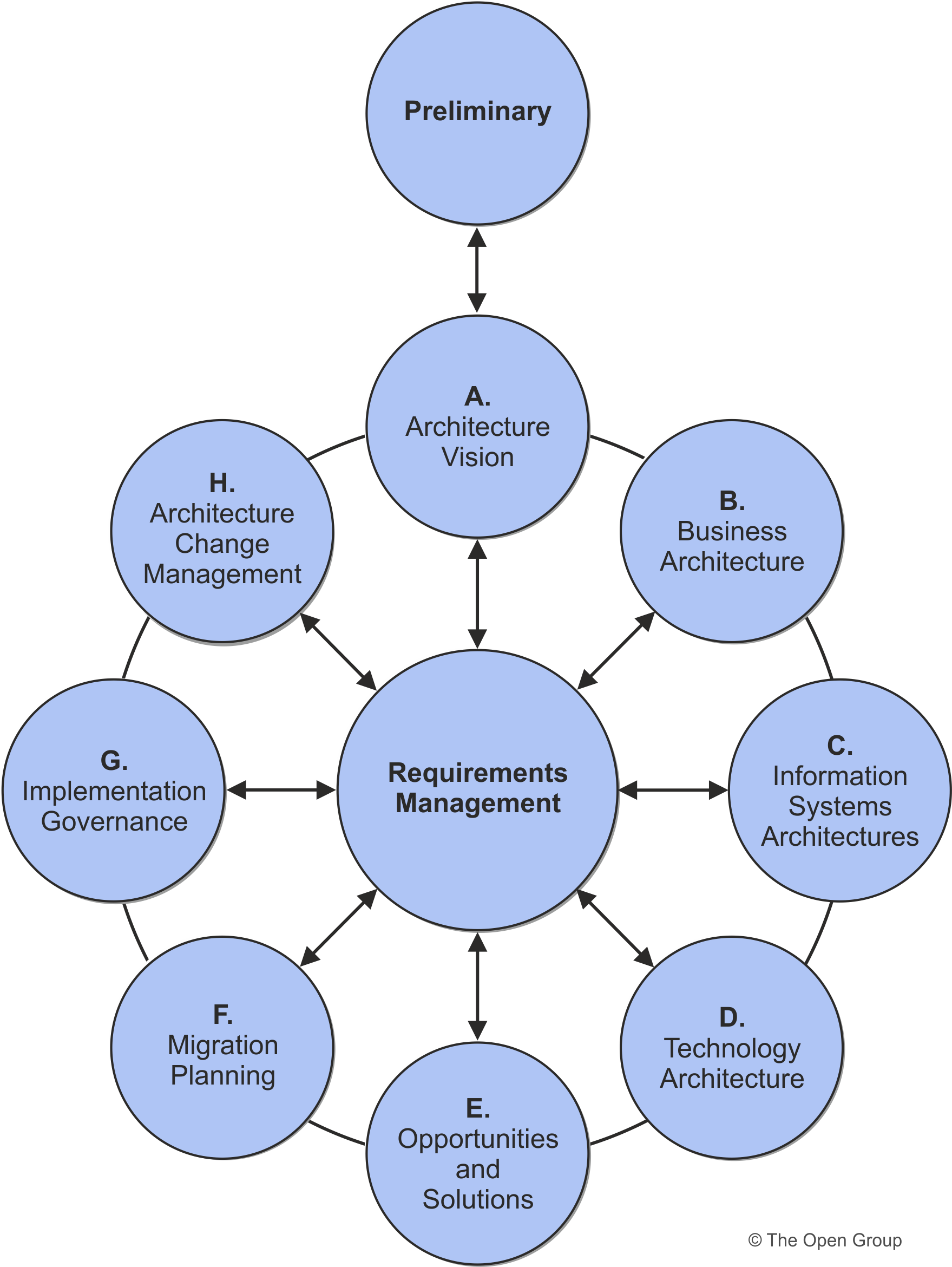

Feeding on the innate human preference for visual communication, and with a nod to the, then fashionable, use of flowcharts in structured thinking, a style of methodological tooling emerged that linked various drawn shapes together using simple lines. This gave birth to the world of Semi-Formal Methods, which still dominates IT architecture and software engineering practice today, and within which can be found familiar tooling favorites, like the UML modeling language and the TOGAF® Enterprise Architecture framework [15] — which have both served IT professionals well for decades.

The third age, however, as Grady advocates, is somewhat different from what has gone before, wherein we now do not always explicitly program our machines, but rather teach them [8]. This step-change comes from the advent of new technologies, like Generative Artificial Intelligence (GenAI) [16], Large Language Models (LLMs) [17], and significantly-sized neural networks [18], and sees human experts partially replaced by machine-based counterparts. In saying that, however, it is important to remember that the “A” in AI does not necessarily always stand for “artificial”. It can also stand for “augmented”, in that synthetic assistants do not so much replace human function, but rather enhance it. The third age is therefore about both the replacement and enhancement of professional (human) practice, especially in the IT space. AI therefore allows us to abstract above the level of everyday work to focus on the augmentation of practice itself. In that way, we can now feed superhuman-like capabilities directly into human-constrained workflows. The hope is that these new superpowers might, in some cases at least, outstrip the limits of natural human skill to help make the once intractable tractable and the once unreachable reachable. So, in summary, this next-gen momentum is as much about providing antidotes to complexity and scale in IT systems as it is about advancing the fields of IT architecture and software engineering. In the age-old way, as with all technologies and professional practices, as they mature they become optimized to the point where complexity and unpredictability are effectively negated.

All that said, there is no need to feel a sense of impending doom, as much progress has already been made. For instance, well-established areas of practice now exist, not too distant at all from the traditional grounds of IT architecture, and which have already successfully ventured out far beyond the limits of the headful. These, and their various accompanying ideas, will all be introduced as the threads of this book come together. As a note to those set on reading this text, it should hopefully be fascinating to understand that these ideas are both old and established. They are the stuff of the conversations that held IT’s early pioneers together, only to be resurfaced anew today.

Even though the rats’ nest of connected technology around us today might not feel very quantum or von Neumann-like at surface inspection, stand back and quite the opposite should become clear. En masse, the overpowering complexity and scale of it all buzzes and hums above us, not at all unlike the apparent randomness inherent to every single atom involved. To capture that essence and bottle it is the key to future progress. That, and an acute appreciation of just how different the buzzing is in comparison to the familiar melodies of systems and Enterprise Architecture.

Widening the Lens — The Road to Ecosystems Architecture

As the digital age dawned, associated benefits were small and localized at first. In the world of commerce, for instance, it was soon realized that business accounts could be processed far faster by replacing human computers with electronic counterparts. Likewise, customers and suppliers could be herded with a simple phone call. It was all a matter of optimizing what was familiar and within the confines of well-established commercial practice. Beyond that, it was perhaps, just perhaps, about pushing norms to experiment with the new electronic trickery. Out of that came new business, and eventually whole new industries were spawned; the reach of the planet’s networks kept expanding, slowly finding their way into every nook and cranny of big business.

What came next was a forgone conclusion. Soon, it became clear that individuals, families, and communities could connect from far-flung places. That is what we do as a social species. It is in our nature. And so, too, this skill ascended to business level. Economics and the sheer intoxication of international opportunity took over, and the race to adopt and adapt began.

By the 1980s, desperate to keep up, many business leaders began to breach the boundaries of their traditional businesses and start to wrestle with the ultra-high-scale, complexly connected communications networks emerging around them. Some might have seen this as innovation in the wild, but, to others, it was nothing more than an emergence born out of the Internet’s arrival. Whichever way, the resulting mass-extension of commercial reach shifted the balance of business as the new century arrived. The slithering beast of the Internet Age was out of its shell, writhing and hissing as the walls of enterprise fell around it. Business emphasis had shifted. No longer was there a need for enterprise-centricity. It was about hyper-enterprise now. Where once systems of business dominated, now it was about ecosystems.

From an IT architecture perspective, this prompted talk of systems of systems theories and even sociotechnical networks, and all without so much as a nod from the professional standards community. Regardless, grass-roots interest flourished and the term Ecosystems Architecture first appeared in positioning papers [19] [20] [21] somewhere between 2014 and 2019, although the concepts involved had likely been in circulation long before that.

Looking back, it is possible to remember the rise in interest just before objects and Object-Oriented Design (OOD) were formalized in the 1980s. At that time, many professionals were thinking along similar lines and one or two were applying their ideas under other names, but, in the final analysis, the breakthrough came down to a single act of clarity and courage, when the key concepts were summarized and labeled acceptably. The same was true with Enterprise Architecture. By the time the discipline had its name, its core ideas were already in circulation, but practice was not truly established until the name itself had been used in anger. Thus, in many ways, naming was the key breakthrough and not any major change in ideas or substance. And so it is today. As we see the ideas of Enterprise Architecture blend into the new, the name Ecosystems Architecture will become increasingly important going forward, as will the idea of hyper-enterprise systems and third-age IT architecture.

This should come as no surprise. Enterprise Architects have been familiar with the idea of ecosystems for some time and the pressing need to describe dynamic networks of extended systems working toward shared goals. Such matters are not up for debate. What is still up for discussion, though, is how we establish credibility in this new era of hyper-enterprise connectivity.

1. From Enterprise to Ecosystem

“To infinity and beyond!” — Buzz Lightyear (Disney, Toy Story)

Look up the term (enterprise) ecosystem in any dictionary and you will likely find something like this[5]:

ec·o·sys·tem / eko-oh-sis-tuhm

Noun

Origin: 1935

1. A community of organisms together with their physical environment and interdependent relationships

2. A complex network or interconnected system

An ecosystem is a complex web of interdependent enterprises and relationships aimed at creating and allocating business value.

Ecosystems are broad by nature, potentially spanning multiple geographies and industries, including public and private institutions and consumers [22].

Frustratingly, such multifaceted descriptions make it hard to pin down a concise definition. Furthermore, the online dictionary Dictionary.com does not help, by also revealing that the word “enterprise” can have multiple meanings. It nevertheless settles to broadly summarize its account as:

“a company organized for commercial purposes [23].”

The definition at Merriam-Webster.com does somewhat better, however, by helpfully prompting suggestions as shown here:

enterprise / en-tə-ˌprīz

Noun

1. A project or undertaking that is especially difficult, complicated, or risky

2. a: A unit of economic organization or activity, especially a business organization

b: A systematic, purposeful activity, as in: “agriculture is the main economic enterprise among these people”

In essence, the idea of “enterprise” also presents as a synonym for “business organization”, and so, as Information Technology (IT) architects, we invariably apply the term “enterprise” to mean all the business units, or identifiable high-level functions, within an organization. Each business unit, therefore, provides a specialization within the construct of an enterprise.

By implication then, an enterprise ecosystem totals as an extended network of enterprises (or individuals) who exchange products or services within an environment governed by the laws of supply and demand [24], and where each enterprise contributes some form of specialization. This becomes a scalable element and provides a core repeatable pattern that is common across both Enterprise and Ecosystems Architectures alike. Also, by implication, the idea of business organization does not restrict how, when, where, or what commerce takes place; ecosystems in an enterprise context can be broad by nature, potentially spanning multiple geographies and industries, including public and private institutions and consumers. This means that ecosystems share many of the characteristics found in markets, so that participants [24]:

-

Provide a specialized function, or play out a specific role

-

Can extend activities or interactions through their environment

-

Present a set of capabilities of inherent value to their environment

-

Are subject to implicit and explicit rules governing conduct within their environment

-

Facilitate links across the environment connecting resources like data, knowledge, money, and product

-

Regulate the speed and scale at which content or value is exchanged within the environment

-

Jointly allow the admittance and expulsion of other participants into the environment

This leads to systems of mutual cooperation and orchestrations, as self-interest averages out into a network of self-support based on communal survival; participants relinquish any desire for dominance by understanding that more value can be gained through external coordination and collaboration. In short, team spirit wins. This leads to mutuality in the formal or informal sharing of ideas, standards, values, and goals, and synchronization, in that enterprises formally or informally engage in coordinated communication and the sharing of resources.

Concrete examples of such ecosystems are becoming increasingly easy to find, especially with the rapid rise of the service economy [25] [26] [27] [28] [29]. For instance, healthcare providers in the US now regularly orchestrate health insurance, hospitals, and physicians to provide integrated support for their customers, with one particular enterprise now connecting over nine million healthcare members with physicians, doctors, and medical centers. Likewise, a major retailer in Sweden now coordinates store operations, supply chain, real estate development, and financial services across 1,300 stores throughout the country [24].

This evolution in business models is being driven by a need to match or exceed customer expectations — as emerging technologies grow more distributed, powerful, and intelligent — and the global mindset shifts rapidly. This should hopefully be self-evident. As where, in 1969, the first moon landing was achieved with less computing power than a pocket calculator, today the average cell phone user has privileged access to cloud-based resources that would have made even the world’s most successful organizations jealous just a few short years ago. What is more, as advances in technologies like Artificial Intelligence (AI) accelerate, they serve to mask the complexity of the IT systems behind them. This lowers the barriers to entry and welcomes those hitherto excluded. It incrementally empowers consumers (sometimes to enterprise-like status) and draws them away from enterprises that cannot keep up. What results is increased competition and a race to the bottom for organizations unwilling or unable to embrace the reality of an increasingly connected world. This has been supported by reports as far back as 2015, which predicted that “53% of executives plan to reduce the complexities for consumers even as technological sophistication increases” [30].

Advancing technology also serves to blur the boundaries between what is physically real and what is virtual, as the digital-physical mashup [31] of products and services helps to increase value and catalyze seamless customer experiences. Indeed, many enterprises are already working hard to remove organizational boundaries between their digital and physical channels, with 71% of executives believing that ecosystem disruption is already a dominant factor in consumers demanding more complete experiences going forward [32]. Likewise, 63% of executives believe that new business models will profoundly impact their industries [32]. Many organizations are therefore being nudged to expand beyond their core competencies, and are forming deeply collaborative partnerships. These can be more extreme and dependent than any traditional customer-supplier relationship, specifically because of the need for mutual commercial survival.

All of this is further heightened by changing attitudes among regulators and legislators. Long gone are the days of “set and forget” IT strategy. Take the European Union (EU) General Data Protection Regulations (GDPR) [33], for example, which mandates that individuals must have access and control over their personal data; a key proposition which is taken further by the EU Data Act [34] [35], and which demands fair access and use of data generated through services, smart objects, machines, and devices. This not only points to opportunities for multi-party data sharing, but also creates ongoing pressure for both IT strategy and IT architecture to keep eroding barriers to technology access — to prevent things from mashing up further, as it were.

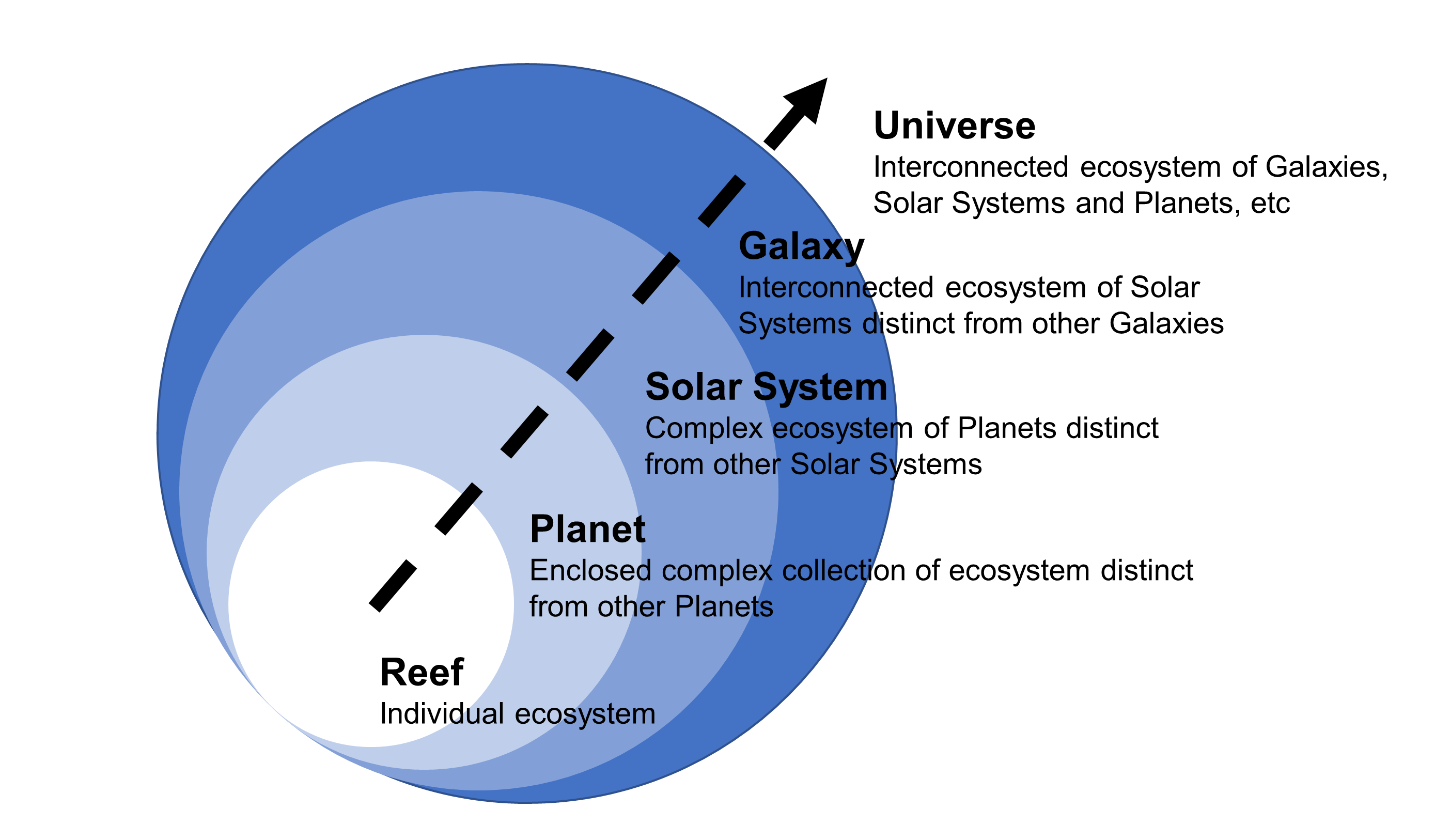

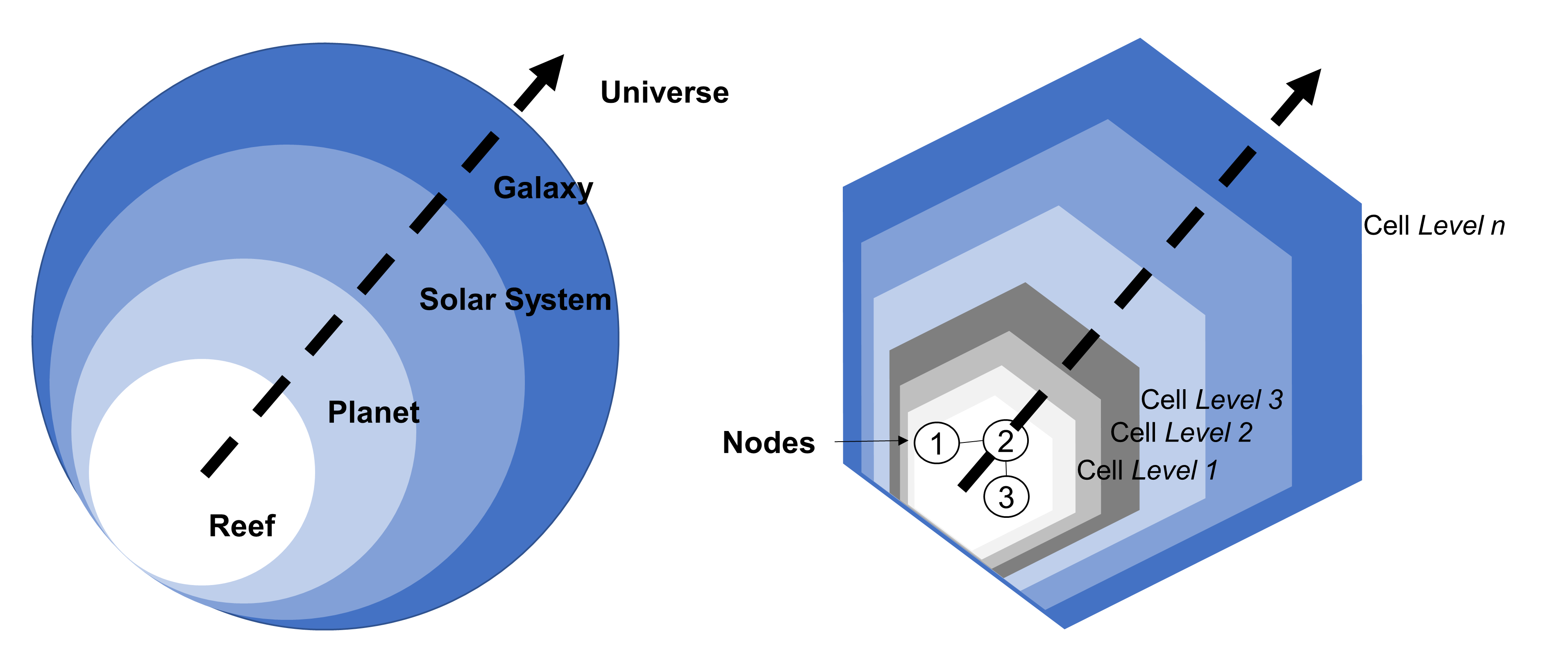

1.1. Scale, Structure, and Human-Centric Purpose

Ecosystems can also be recursive in their structure and reach, so it is often hard to understand where one ends and the other begins. As such, it is perfectly valid to speak in terms of ecosystems of ecosystems, where one or several may be embedded into others. Consequently, participants can be part of one or many ecosystems while playing out multiple evolving roles. Likewise, the purpose of any single ecosystem may well be directed toward a single purpose, whereas others might legitimately focus on multiple agendas. Nevertheless, purpose will always be targeted in support of some underlying human-centric[6] need, with examples of broad categories including:

-

Sustenance (Air, Food, Water, etc.)

-

Shelter, Dwelling, Home, and Town (including supporting civil infrastructure)

-

Energy, Resources, and Sustainability

-

Safety, Security, and Protection

-

Health, Wellbeing, and Fitness

-

Mobility and Transport

-

Rest and Recovery, Comfort

-

Community, Relationships, Family and Friends

-

Entertainment and Leisure

-

Education and Learning

-

Novelty and Innovation

-

Learning, Development, and Education

-

Equality, Justice, and Peace

-

Information Exchange and Communication

-

Trade and Finance (including Make and Supply)

-

Personal Fulfillment

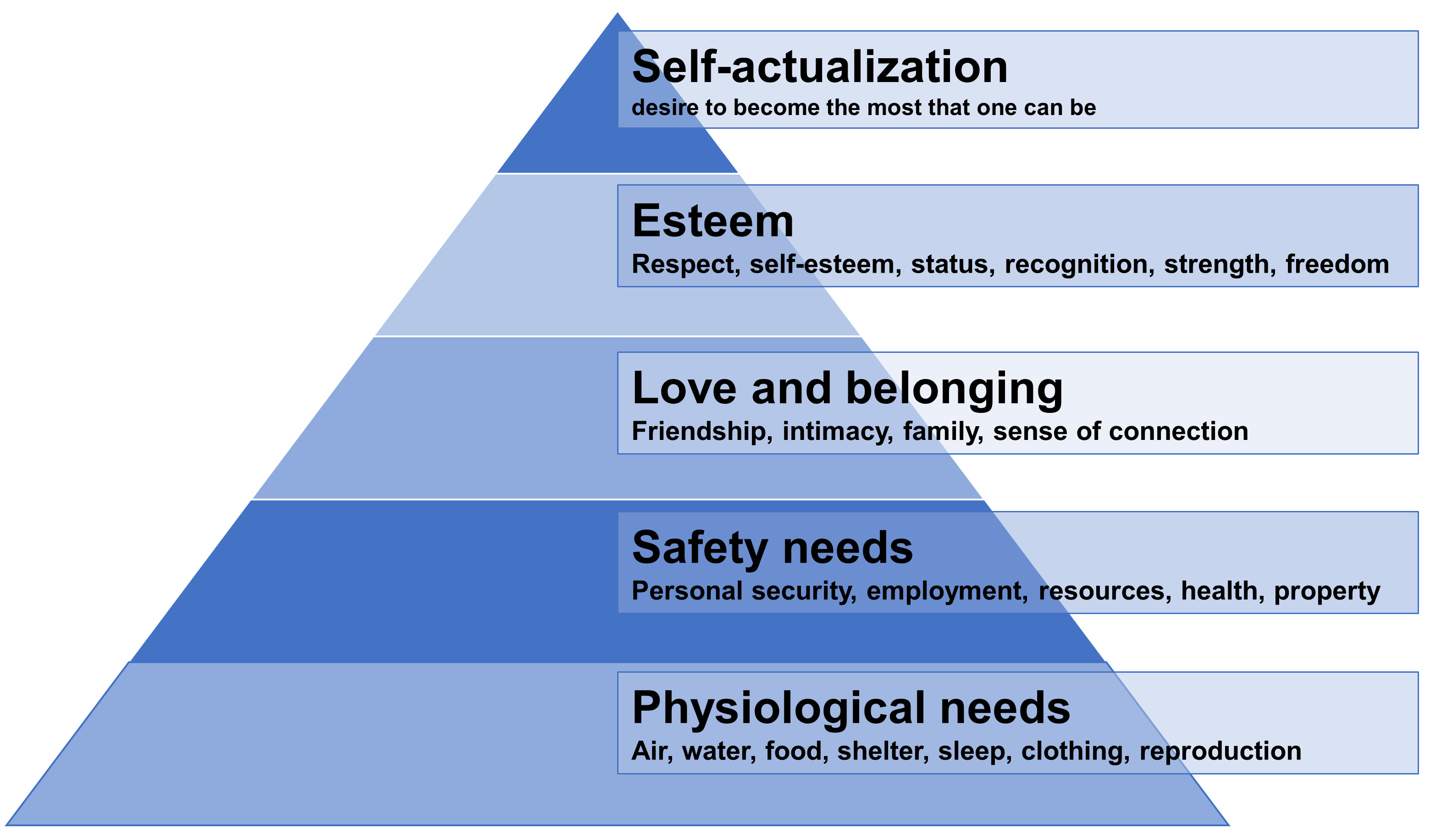

Such categories fall in line with many recognized models of psychological and social behavior and are also strongly influenced by the 2015 United Nations (UN) Sustainable Development Goals [36]. Relevant models include Abraham Maslow’s Hierarchy of Needs [37], which provides a hierarchy of motivational drivers associated with the human condition, covering everything from basic survival instinct up to personal enlightenment, belief systems, and beyond.

1.2. Sociotechnical Systems

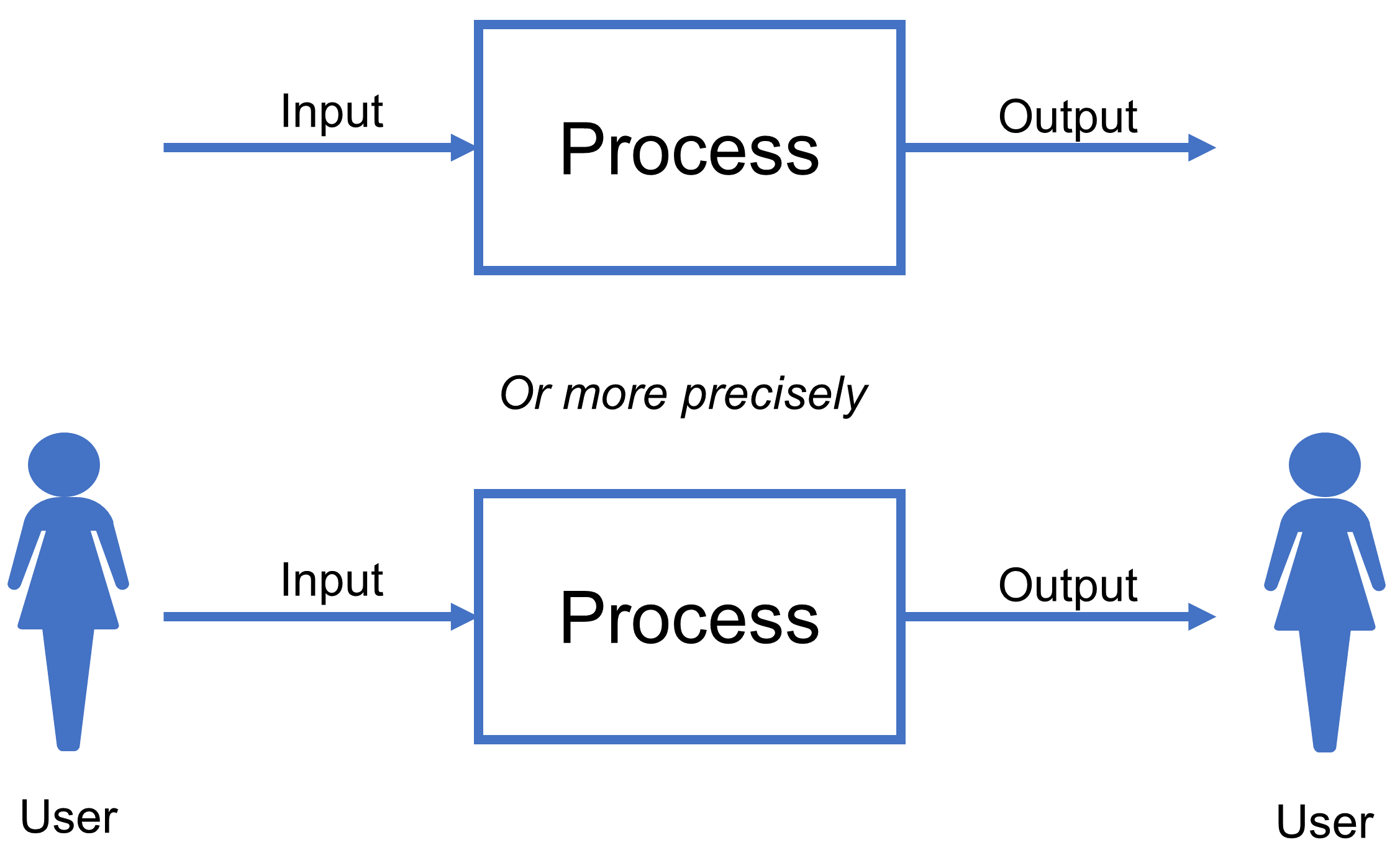

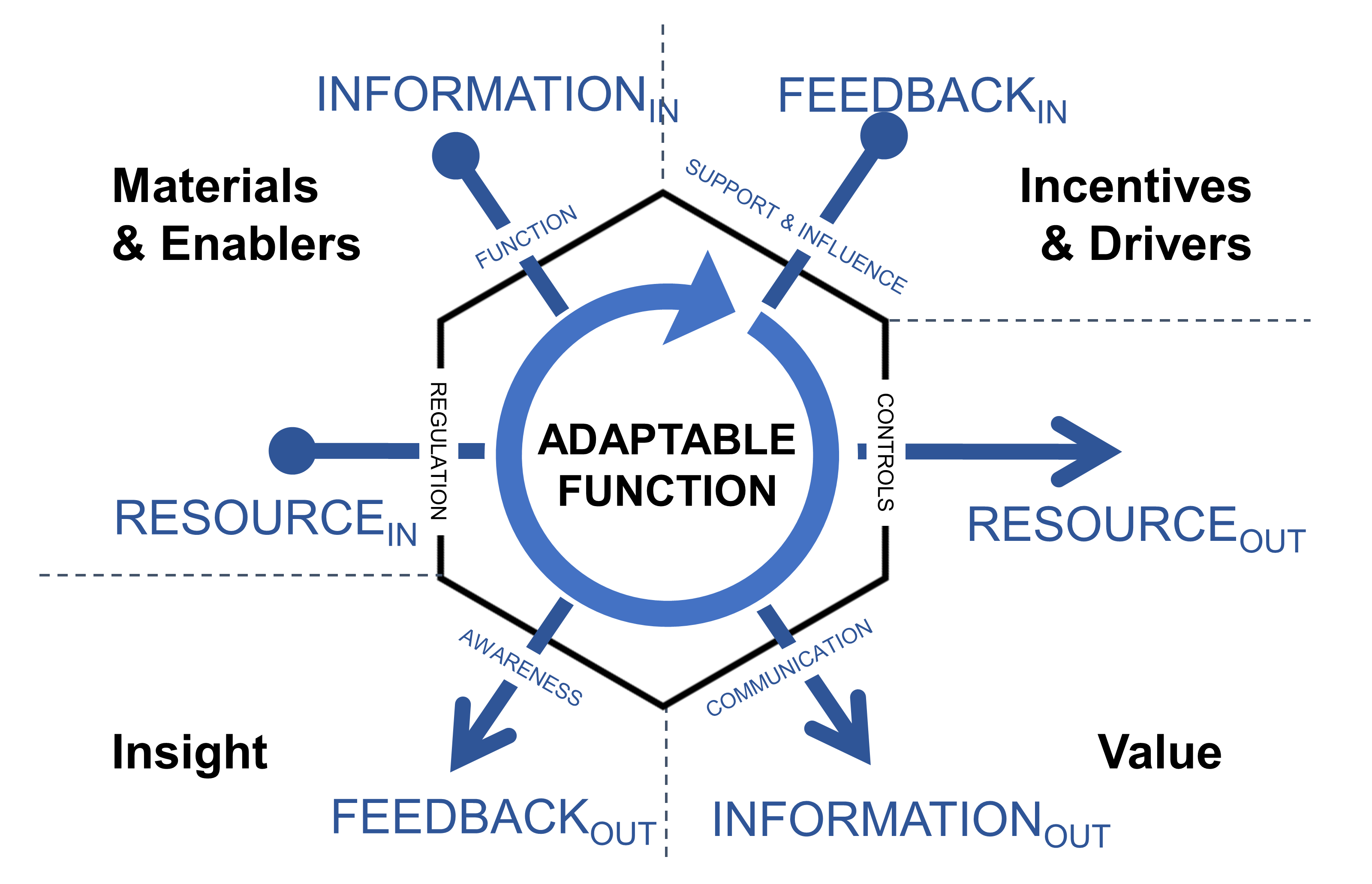

Models like Maslow’s Hierarchy of Needs nicely highlight the symbiotic relationship between human and machine in an enterprise ecosystem world. As such, they act as a cornerstone in a broad field of study focused on how people and technology merge to form Sociotechnical Systems (STS) in the modern world. The ideas behind Sociotechnical Science [38] [39] can therefore be directly overlaid to help frame the notion of enterprise ecosystems in terms of IT architecture. This is because they succinctly sum up how the various actors, elements, and constraints involved can meld into one singular whole. An enterprise ecosystem can further be seen as:

“The emergent summation of a self-organizing network, or networks, composed of human-centric participants, be they individuals, groups, enterprises, or organizations, the technologies they use, the information at their disposal, and the environment in which they all co-exist. In such arrangements, connections between constituent parts (artifacts) can therefore be explicit, through either direct communication or integration, or implicit, as in being more osmosis-like, where connections are transient, implied, inferred, or informal.”

Participants and/or constituents (artifacts) in a sociotechnical ecosystem, therefore, make use of technologies, information, and resources provided to and from other participants and/or constituents and their environment, and provide and receive reciprocating feedback as a natural response. Together, both participants and/or constituents and their environment create a system of dynamically evolving parts and properties that often display episodes of convergence, diversification, and extinction in much the same way as experienced in diversely natural ecosystems.

Enterprise ecosystems, therefore:

-

Are networked-based

-

Often have poorly defined, nondescript, or permeable boundaries, both at local and global levels

-

Dynamically change over time for reasons of singular or mutual benefit

-

Scale in ways that can create extremely large and complex communication structures, without compromising local and/or global integrity

-

May behave differently within local regions

-

Are often self-organizing and do not require explicit coordination across local command-control structures

1.3. Within a Hairsbreadth of Enterprise?

Although the idea of working with IT above enterprise-level might feel daunting at first, the parallels between Enterprise and Ecosystems Architecture can be quite striking, especially when it is considered that the delineation between the two may often be just about how we formalize the boundaries involved. For instance, it could plausibly be proposed that what we perceive as an enterprise is, actually in practice, nothing more than a small, self-contained ecosystem, and that the principal differences between Enterprise and Ecosystems Architecture are simply about scope, complexity, and scale. In other words, where we establish any boundaries of discourse or closure.

Enterprise Architecture, therefore, simply corresponds to a hypo-enterprise[7] mindset, whereas Ecosystems Architecture is predominantly hyper-enterprise[8] aligned.

On the surface then, the idea of an enterprise, as a legal entity at least, generates a singularity that just makes sense — even when considering that such entities emerge out of a collective of business or mutually beneficial functions. Cumulatively these amass solidity around corporate identity and higher-level purpose, and so exhibit external alignment, continuity, and a general lack of internal friction — while under the covers, individual functions may well be jostling for position or even partaking in all-out war. You may, of course, disagree, exclaiming that the enterprises you have worked with are nothing like that in practice. But hopefully, you get the point. As a model and as a generalized architectural pattern, enterprise implies a oneness inherited by the use of a term that is singular in nature and which masks the plurality of its form as a series of lesser business units, in its connected internal areas of specialization or function. As such, we might conclude that because an enterprise is composed of such units, it is actually an ecosystem in its own right; an ecosystem manifesting from a networked clustering of specializations. Thus, any enterprise must impose the boundary for its internal ecosystem, based on the footprint of its legal identity. Likewise, any enterprise ecosystem can be considered as a similarly closed, connected, and whole continuum, only this time accommodating higher levels of abstraction than at the enterprise-level. As a result, Ecosystems Architecture encompasses group types like domains, regions, communities, or colonies of enterprises (or lower abstraction constituents), and above that whole ecosystems, worlds, or universes as types for singular compound entities at or above the notion of the individual enterprise. In that way, Ecosystems Architecture simply extends the recursive hierarchy of abstraction, categorization, and containment formed when Enterprise Architecture positioned itself above the level of systems architecture.

With that in mind, ask yourself how many times you have heard the sentiment that all IT-based features or functions should be aligned with the business? Perhaps you yourself have even presented such an argument? That is because the very nature of architectural practice in IT is to work toward a definition of enterprise that appears, both internally and externally, to be seamlessly in line with commercial and legal intent, rather than just a patchwork of specialized functions, stovepipes, or silos, all individually contributing piecemeal. This is, of course, interesting from an ecosystems perspective, simply because the removal of any demarcation between silos should be aligned with how an enterprise, or more specifically its business and legal intent, is actually composed, rather than how it is perceived to be set up or directed. As such, any intent to align IT with the enterprise, or its surrounding ecosystem(s) should be handled carefully, as it could first be construed as rhetoric prone to misaligned thinking, and second, it could serve to create unnecessary friction between IT and the purpose it seeks to serve.

1.4. Attributes and Components, then up through Systems and Enterprises, Followed by Nodes

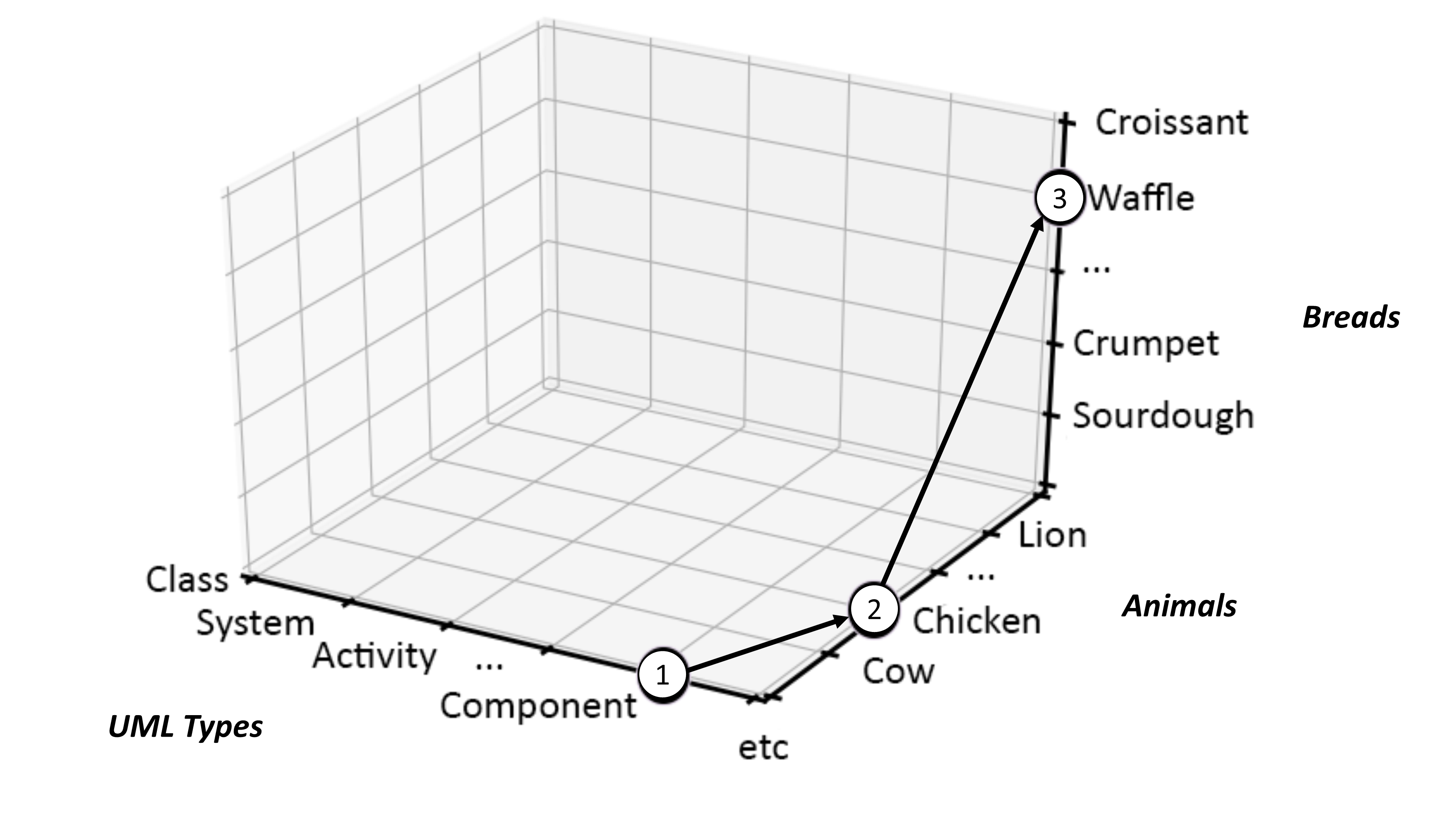

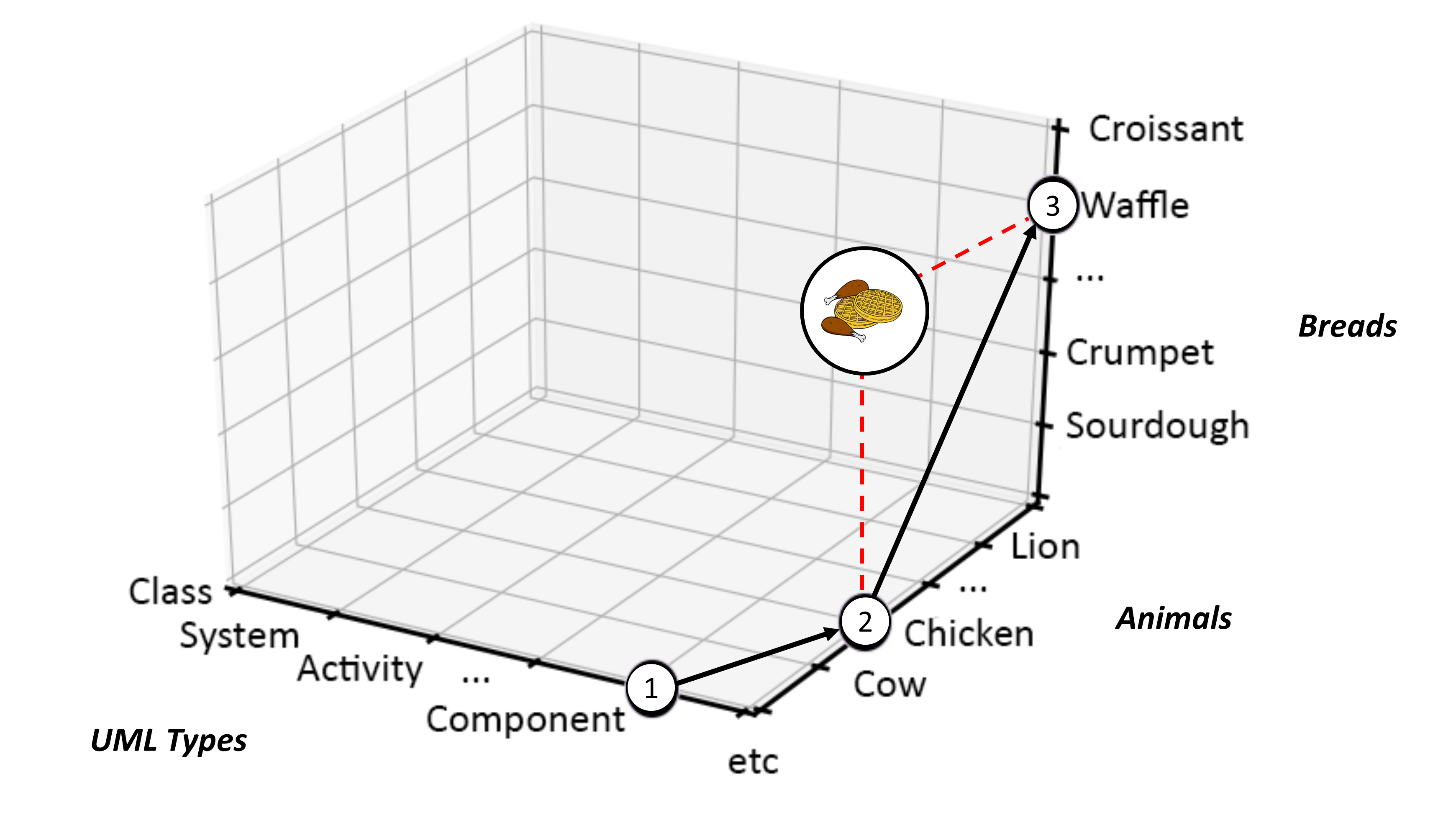

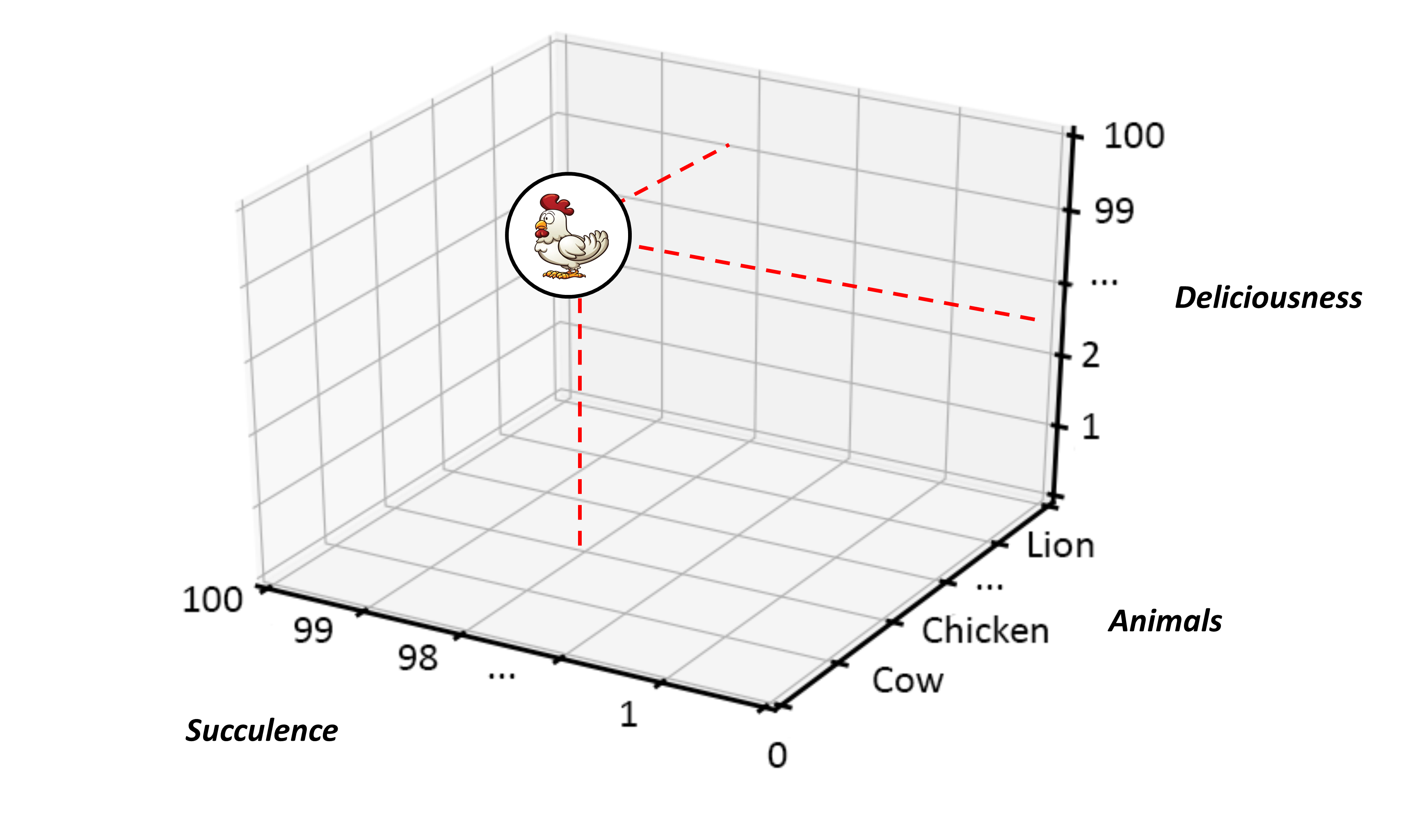

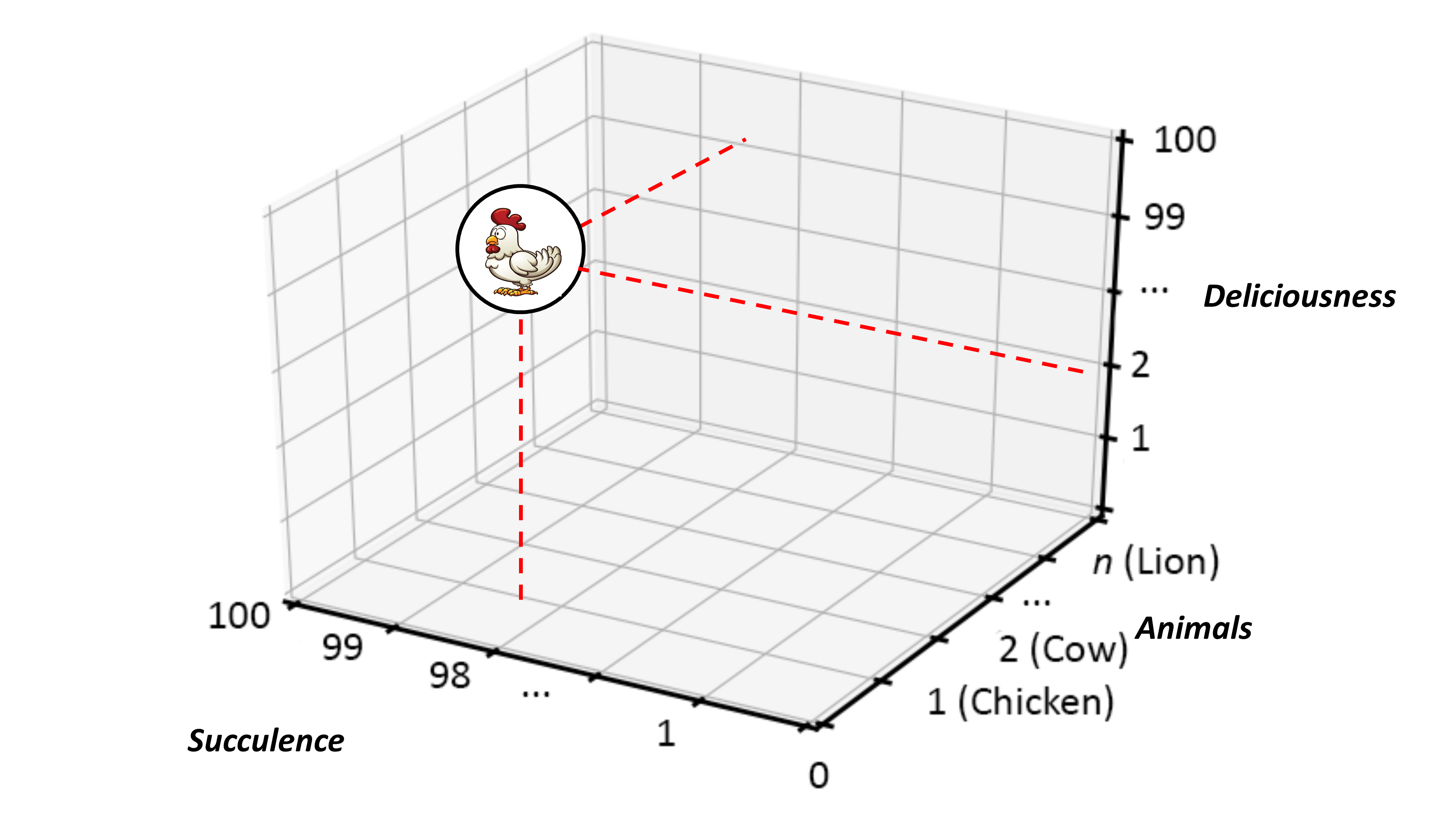

Whereas IT architects perceive the world in terms of functions, attributes, and so on contained within components, Enterprise Architects do the same in terms of components within systems. So that begs the question: what should Ecosystems Architects trade in?

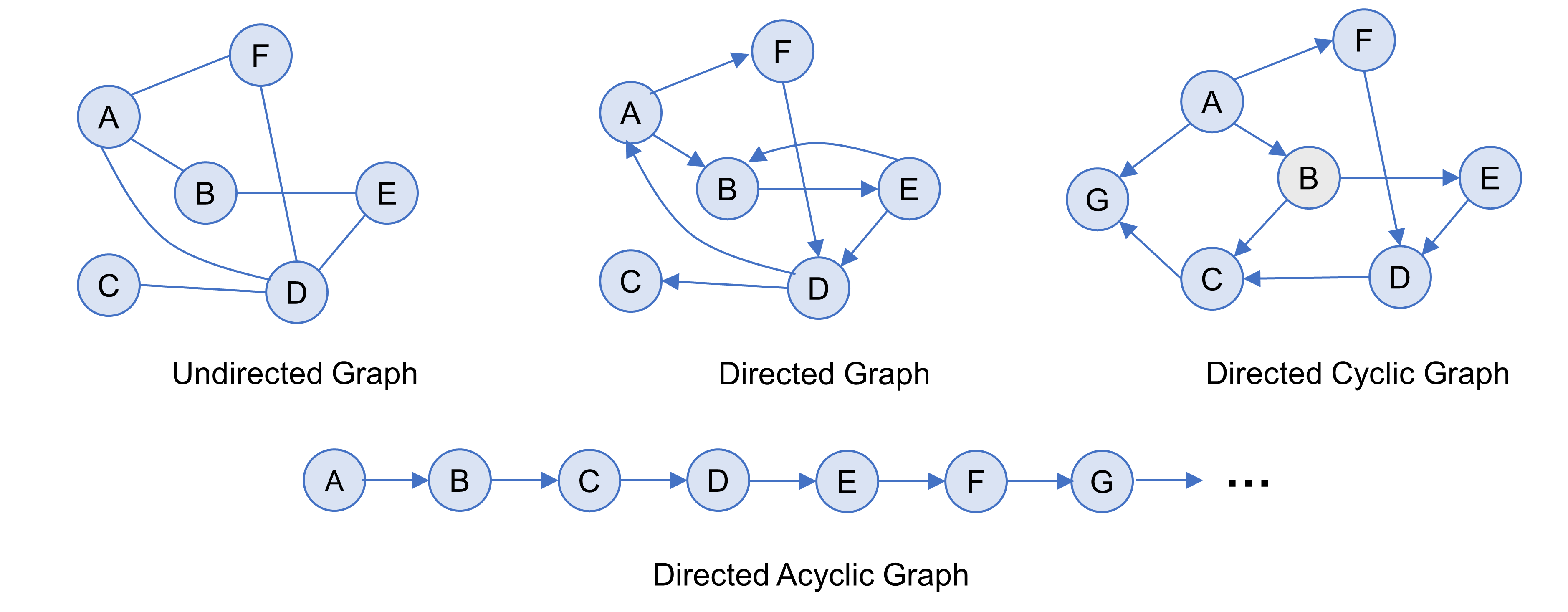

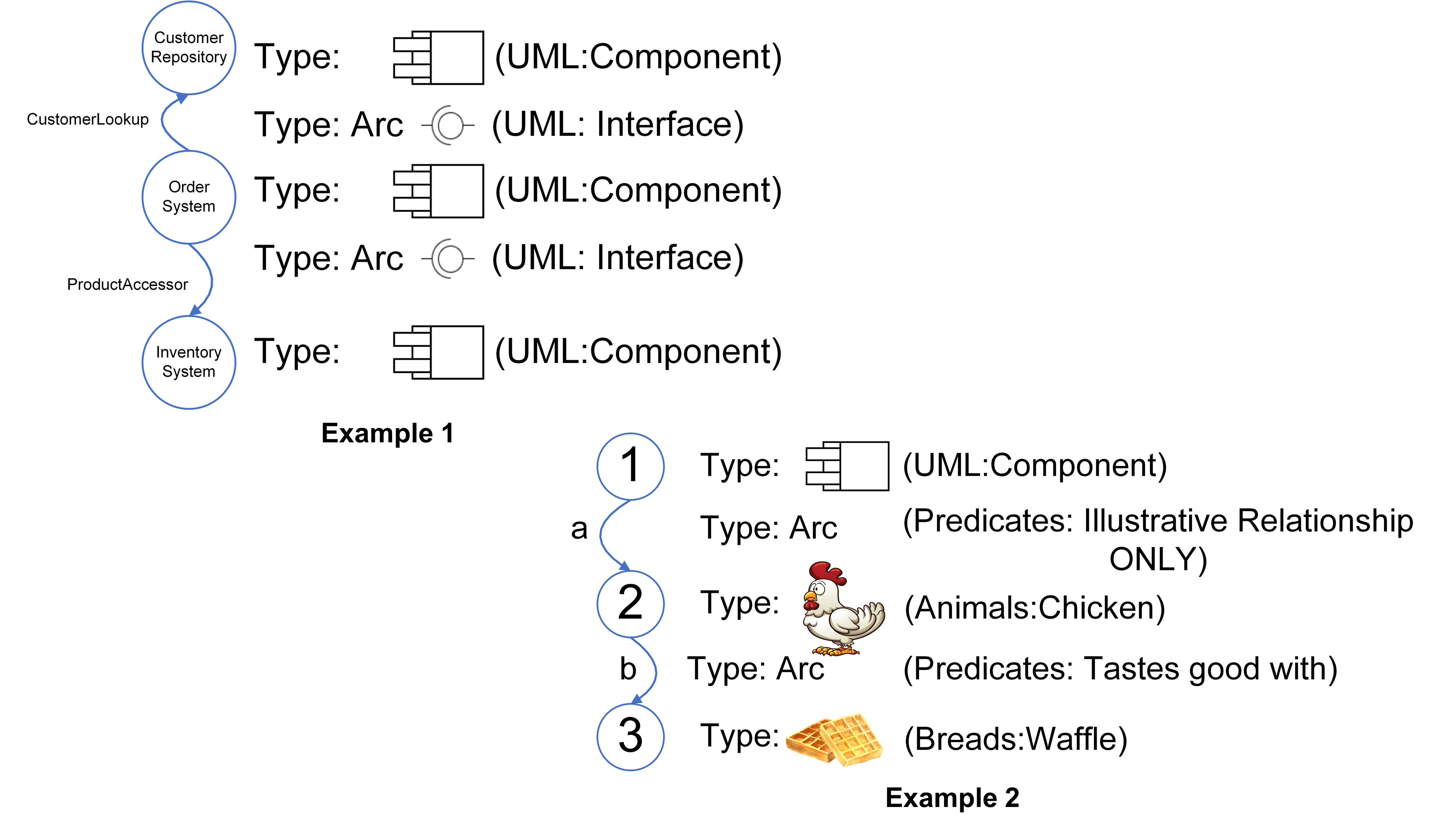

Because of the breadth and scale of the ideas requiring representation at the ecosystems level, categorization and containment must be handled in a very generic way — granted there is the issue of scale and the fact that ecosystems can have a recursive nature, it is, therefore, counterproductive to use competing sets of definitions at differing levels of abstraction. Instead, Ecosystem Architects will likely need to talk in terms that are agnostic of scale, abstraction, and complexity. This leads to the cover-all idea of representation via nodes; with a node simply providing a generic way to describe either a single idea or thing worthy of mention, or, likewise, a collection of either or both, and regardless of level of scale, abstraction, or complexity involved.

An Ecosystem Architect will, therefore, take these nodes and correlate them to clusters, domains, regions, communities, colonies, enterprises, systems, components, aspects, attributes, or whatever and at suitable abstraction levels, as needed. In that way, nodes can be typed according to whatever features require representation, and then connected to produce whatever coherent representation is required in the form of a generic graph — an ontological[9] schematic showing how nodes are related.

This is much the same as is done at both systems and enterprise levels, in that all architectural schematics can be considered as specialized forms of graphs. The only difference being that ecosystems-centric schematics are not so precious about the levels of abstraction and graphical nomenclature used to classify and describe node instances. Instead, associated typing is expected to be explicitly side-lined from the graphical symbology in play and documented as a node attribute in accompanying documentation. This increases the semantic expression available by making graph representation both flexible and extensible.

In general terms, then, nodes are represented at the ecosystem level using a flattened nomenclature of either boxes, or more commonly circles, connected by lines or arrows. There are no constraints on whether lines should be straight or not. The use of arrows normally indicates some form of directional flow, but again, typing can be moved out into accompanying documentation. This includes typing associated with composition, association, implication, and so on. For more details, please see Chapter 3.

1.5. Emergent Structure, Taoist Thinking, and Abstract Workspaces

The transition from graphical typing to documented attribution might, at first, feel misplaced and foolhardy, especially to those steeped in more traditional IT architecture practice. Nevertheless, the move is deliberate at the ecosystem level.

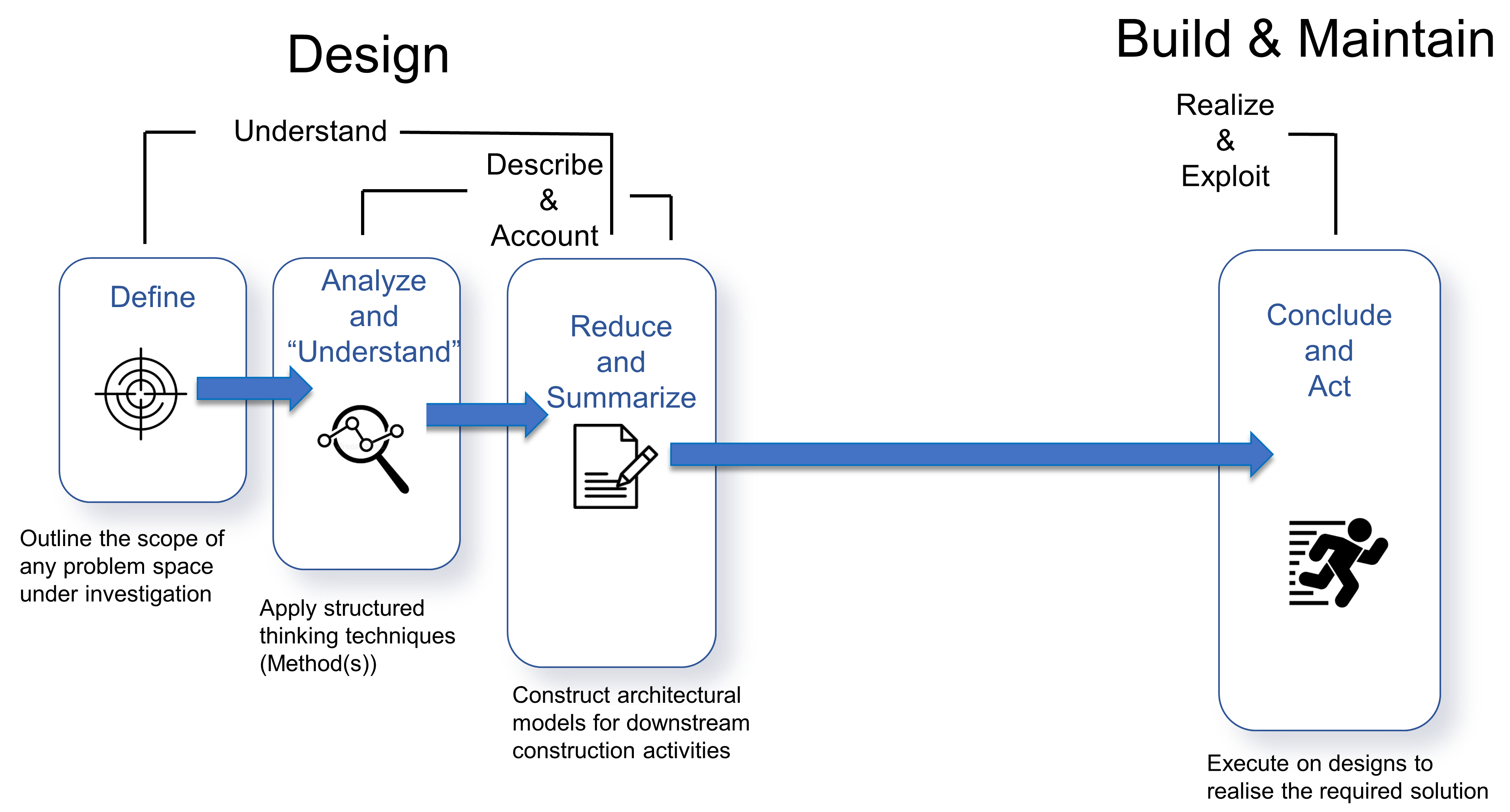

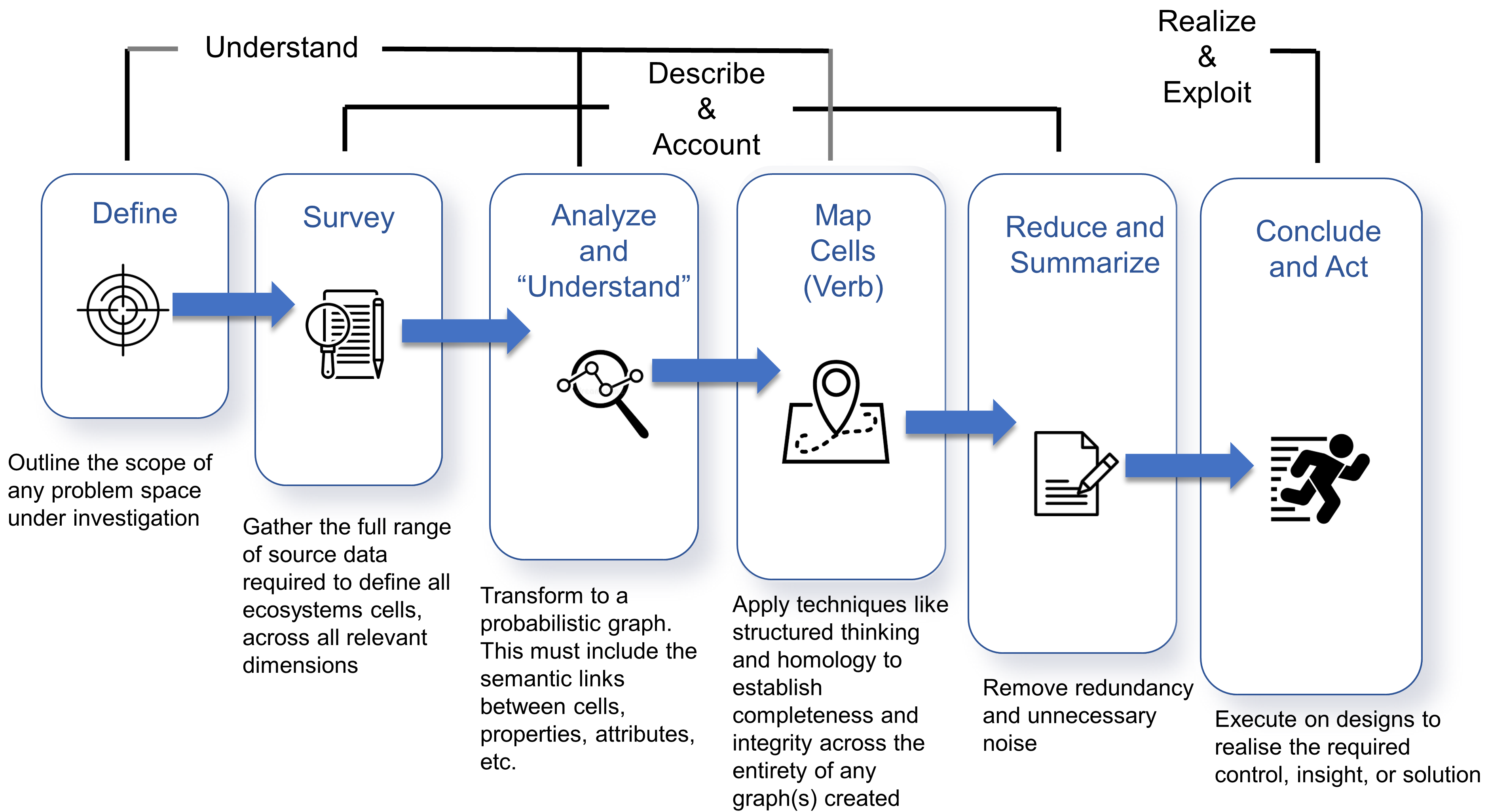

Of old, we, as IT architects, might have been asked to write down what we knew of a problem space, then analyze that text to help identify its various entities, actions, and properties of interest. In that way, prominent nouns typically translated into architectural entities, verbs their actions, and adjectives their properties or aspects. From there, composition flowed from decomposition, and architectural structure arose from the design and engineering processes being followed.

With Ecosystems Architecture, however, the complexities and scale of the problem spaces involved might well mask any system’s structure up front, so all we can do is state what is known and include whatever assembly is obvious as we go. This is analogous to throwing all our thoughts, ideas, and discoveries at a wall and allowing an architecture to emerge of its own accord — much like the singular idea of an enterprise spontaneously emerging from the cumulative contribution of its individual business functions. That is, rather than deliberately forcing out structure through a sequence of reductionist and/or compositional design tasks.

This change, therefore, demands increased diligence in documenting our thoughts across the board and at all times. In that, the analogy of an imaginary wall, or architectural workspace, becomes very real, and an abstract mathematical framework replaces the architect’s favored pen and paper to record progress. This specifically allows all the aspects of an architecture to be pinned down precisely, and in a very Taoist[10] way — almost like a highly expressive mind map with little concern for scale or complexity. That introduces its own rigor and a unique set of advantages, by way of the mathematical formalisms introduced. But more of that in Chapter 3. All that really changes is the order in which we recognize architectural elements, and the moments at which revelations become apparent. In the end, the same aim is reached, in a coherent understanding of how a system is, or should, be configured, even though the route to achieve that might not be totally clear at the outset and may also need the help of some advanced tooling[11] along the way.

Relying less on sequential progress to squeeze out structure means that understanding integration and interoperability must rise in prominence, especially during the early stages of architectural thinking. In short, the notion of connectivity needs to always be at the forefront in an architect’s mind, especially when it is understood that typing moves from being graphically explicit to an act of assigning property via additional documentation across all architectural elements. This stance is transferable to Enterprise Architecture as well, but the potential inability to perceive the enterprise as an ecosystem can serve to increase the dysfunctionality within that enterprise — through misalignment, discontinuity, and friction created by the deployed systems already in place.

1.6. Natural Successors

Enterprise Architects are well positioned to become Ecosystem Architects, by the virtue that it is only the boundary of discourse that changes when moving up from one abstraction to the other. First, however, there must be a significant shift in the appreciation of how an enterprise is meaningfully constructed. So much so that the value of isolated specialization is worth reiterating.

To nail this home, simply think of any organizational chart. What do you see? A box for Sales, a box for Marketing, a box for Engineering, and so forth, all followed by the other boxes for essential areas of business specialization. In other words, and in less flavorsome terms perhaps, a silo for Sales, a silo for Marketing, a silo for Engineering, and so on.

In an ecosystems approach then, each silo simply becomes a node, that, in turn, might act as a container for further nodes at increasing levels of detail. These nodes, as repositories for contributing functionality, data, and so on, are then fundamental and necessary. In fact, they become, in part at least, a best practice essential, in that the nature of the node affords delineation, specialization, flexibility, performance, and efficiency in an ecosystems view. Nodes, therefore, act as the basic means of aggregation at the ecosystem level.

In part, this realization comes down to appreciating that each silo establishes a set of motivations and behavioral patterns that directly support its existence and separation from other silos. There will, of course, always be some overarching motivations that transcend the individual unit, but these are more akin to accepted social behavior within some higher means of decomposition, and might actually act as connections between nodes when that level is considered formally. Therein, and in business terms, each silo may be associated with a primary sponsor, as in a Vice President or Director perhaps. This leader may or may not then get along with other Silo Leads, and so competition can take hold and distort reality as seen by IT. This not only plays out around extra-silo composition, but also intra-silo composition, with Silo Leads perhaps being prone to subdivide their own domains into lesser areas of speciality. This obviously creates more silos and more opportunity for isolating motivations and behaviors. As a result, sub-speciality silos can often present as badly formed nodes. This is a never-ending story. As no doubt most experienced IT architects will realize, internal organizational conflicts of one form or another can permeate and simmer into perpetuity. This creates one of the eternal challenges facing all IT architects.

In summary then, modern enterprise is a blend of both dependent and independent business units that co-exist within and across a complex ecosystem. Invariably, that ecosystem can transcend corporate boundaries, bringing further into question where the idea of an enterprise ends and the reality of open-ended ecosystems thinking begins. A starting point for aspiring Ecosystem Architects is therefore to learn how their own enterprise functions and behaves. Alignment needs to be toward supporting the organizational silo view and not disarming it or causing further friction. The bane of IT is the silo, and yet it is also its savior, as the enterprise is, at its simplest level, nothing more than a conglomeration of silos — or, as the Ecosystem Architect will come to call them, nodes. Some nodes interoperate better than others, some compete, and some, at times, are stubbornly dysfunctional. It is not the job of IT to fix the enterprise per se, and it is not a job of the business to change the enterprise to conform to IT’s perception. Nevertheless, it is the role of IT to support the enterprise in any manner that the enterprise itself wishes to exist. And to do that, IT must take to heart its mantra: “We want to align ourselves with the business”. This, therefore, is the starting point for the Enterprise Architect to transition up to hyper-enterprise ecosystems thinking levels.

2. What is all the Fuss About?

"The journey not the arrival matters.” — T.S. Eliot

“All pioneering engineering is very much like poker; there are some things that you know for certain, some things that you think you know, and some things that you don’t know and know that you can’t know.” — Sir Christopher Hinton

“It is paradoxical, yet true, to say, that the more we know, the more ignorant we become in the absolute sense, for it is only through enlightenment that we become conscious of our limitations. Precisely one of the most gratifying results of intellectual evolution is the continuous opening up of new and greater prospects.” — Nikola Tesla

Historically, organizational leaders only needed to worry about getting the best from their piece of the value chain. Today, however, as change becomes the only real constant in business, those leaders are being forced to reevaluate; specifically to embrace their broader context and explore the hitherto untapped adjacent opportunities around them. That brings greater uncertainty, and with it a need to embrace ever more comprehensive approaches to viable systems thinking. Unavoidably, both are now a necessity if organizations are to achieve parity with the successes of yesteryear.

This is indeed a frightening prospect. Still today, most organizations pay scant attention to the idea of constant change, especially when that involves large-scale, open-ended, complex adjustment. In such circumstances, it is not uncommon for leaders to run for cover. But that is only natural. At its core, the problem is about how we perceive risk and manage it successfully. Even so, in a world of ecosystems, clarity rarely comes in black or white, and the idea of understanding precisely where one challenge ends and another begins can be somewhat of a luxury.

Overall then, the world around us evolves constantly, like it or not, and just because it might be impossible for any individual to directly understand all the fineries involved, does not alter that fact one bit. In the modern, networked world, business evolves rapidly and often in complex ways at scale. That is our everyday de facto.

2.1. Evolution Over Change in IT Systems (Universal Darwinism)

To be blunt, change is not about evolution, but evolution is very much about change. In that regard, the former simply focuses on alteration and occurs without consideration for any grander plan. Evolution, on the other hand, points to change targeted toward the global betterment of those involved. Change can therefore be a negative thing, whereas evolution feeds on change to drive out negativity and promote advancement. Organizations can change, whereas, in the long term at least, ecosystems can only ever evolve if they are to remain viable. What is more, today we understand that evolution is a phenomenon not just limited to the natural world.

Scientists like Charles Darwin might well have taught us that evolution is tightly bound to skin and sinew, but more recent updates suggest that the idea is much more encompassing. For instance, in 1976 the eminent biologist and writer, Richard Dawkins, presented an information-based view in which he outlined the idea of selfishness in genes; thereby promoting the notion that they act only for themselves and similarly only replicate for their own good [40]. Dawkins also introduced the important distinction between replicators and their vehicles. It is the information held within the genes that is the real replicator, and the gene’s physical structure simply its carrier. A replicator can therefore be anything that can be copied, and that includes completely virtual capital like ideas, concepts, and Intellectual Property (IP) of significant commercial value. A vehicle is therefore only an entity that interacts with its environment to undertake or assist the copying process [40]. As a result, in modern business, any concept or idea embodied in the digital ether can be considered as a replicator, and human beings, alongside any hardware and software used in the form of IT, as its vehicle. This ties us, our musings, and our advancements together, as it always has, into the perpetually changing environment of commotion we awkwardly refer to as “progress”.

What results is the outcome of what Dawkins saw as a fundamental principle at work. In that, he suggested that wherever it arises, anywhere in the universe, be that real or virtual, “all life evolves by the differential survival of replicating entities”. This is the foundation for the idea of Universal Darwinism, which encompasses the application of Darwinian thinking beyond the confines of biological evolution, and which prompted Dawkins to ask an obvious, yet provocative, question: Are there any other replicators on our planet? The answer, he argued, was emphatically “yes”. Staring us in the face, although still drifting clumsily about in its primeval soup of evolutionary modernity, is another replicator — a unit of imitation [41] that is the very essence of sociotechnical progress itself. This is the fuel that truly powers the modern digital age, and it represents an important leap in understanding. By raising evolution above any level needing biological representation, it makes the digital ether an eminently suitable place for universal evolution. This is how the ongoing progress of the digital world maintains its momentum. Humans and the tools we build are inextricably bound together in an onward march. In realizing that, Dawkins found a name for his self-replicating ethereal unit of cultural exchange or imitation, taken from a suitable Greek root, and calling it a “meme”, inspirationally chosen for its likeness to the very word “gene” itself.

As examples of memes, Dawkins proposed “tunes, catch-phrases, clothes fashions, ways of making pots or building arches”. He mentioned specific ideas that catch on and propagate around the world by jumping from brain to brain or system to system. He talked about fashions in dress or diet, and about ceremonies, customs, and technologies — all of which are spread by one person copying another [41]. In truth, and although he could not have realized it at the time, Dawkins had captured the very essence of the post-Internet world, in a virtual memetic framework that would evolve just as all natural life had done before it. In a short space, Dawkins had laid down the foundations for understanding the evolution of memes. He discussed their propagation by jumping from mind to mind, and likened memes to parasites infecting a host in much the same way that the modern Web can be considered. He treated them as physically realized living structures and showed that mutually assisting memes will gang together in groups just as genes do. Most importantly, he treated the meme as a replicator in its own right and thereby for the first time presented to the world an idea that would profoundly influence global society [40] and business progress in one.

2.2. Things We Know About Complexity and Scale in Sociotechnical Ecosystems

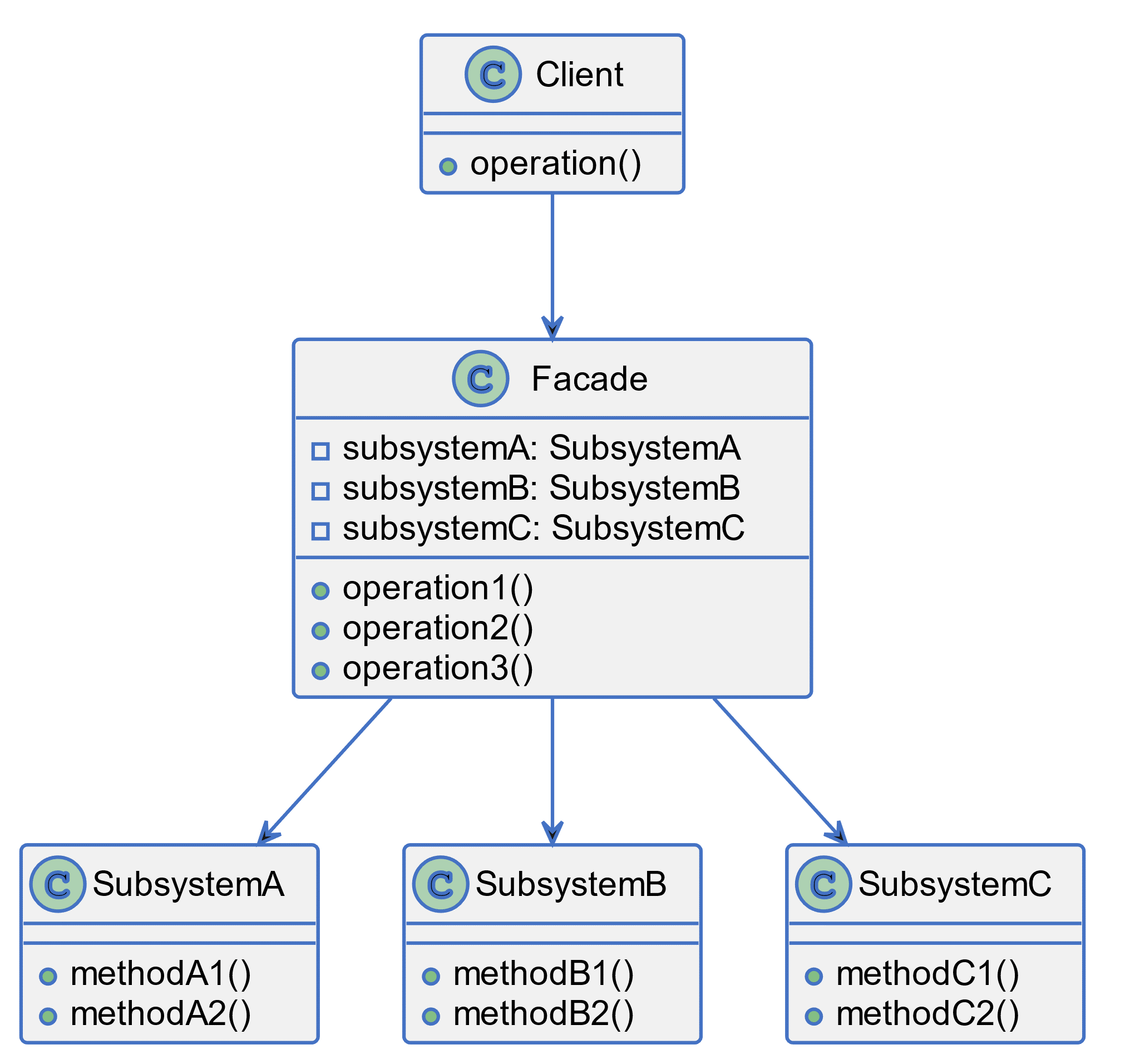

With specific regard to the emergence of sociotechnical ecosystems in the modern age, there are certain things we know, and which should not be underestimated. For instance, as with all ecosystems, be they naturally real-world, synthetically virtual, or an amalgam of both, their arrangement self-organizes and regulates in ways that generally benefit the whole and not necessarily individual parts. We also know that the self-regulation involved nearly always pushes to reduce waste and effort. To the untrained eye, this can appear unruly and chaotic, but closer inspection will show both order and disorder vying for position. Ecosystems, therefore, show well-defined regularities but can also fluctuate erratically [39]. They are complex systems in the sense that they exhibit neither complete order nor complete disorder. Rather, there is no fixed position at any scale to point to as an absolute definition. Instead, complexity relates to a broad spectrum of characteristics. At one end lies slight irregularity, while at the other complete randomness, without meaning or purpose. Both are extremes of the same thing. When slight variation is exhibited it is highly likely that the overall pattern of the whole can be accurately predicted just by examining one tiny part, but when complete randomness is encountered that would be pointless and impossible. In the middle lies some exciting ground; a sweet spot of complexity perched on the edge of chaos. Here, the pattern is neither random nor completely ordered. Regions of differing sizes can all be found exhibiting similar features, leading to the perception of some underlying theme at many different scales. This is where truly natural complexity lives and where the bullseye for Ecosystems Architecture can be found. It cannot be described in terms of simple extrapolations around a few basic regularities, as is the case with Enterprise Architecture. Instead, it displays nontrivial correlations and associations that are not necessarily reducible to smaller or more fundamental units [42]. In a nutshell, such complexity cannot be boiled out and served as if cooked from a list of simple ingredients. Only a deep understanding of all the participants, connections, and properties involved can ever lead to a true appreciation of the whole, regardless of any surface presentation. In that way, complexity behaves like a façade, an interface almost, standing guard over the wherewithal behind. Recognizing this guard and paying due respect is the first step on the road to Ecosystems Architecture. It also ushers in a need for Taoist-like practices. In simple terms, that implies a constant and equal appreciation of all architectural properties in play and at all times; the continual abandonment of preconceived ideas around both local and global patterns (unless blatantly obvious across multiple viewpoints), and a willingness to accept that architectural structure may just emerge without any traceable justification.

In the open then, what is fascinating about complexity’s sweet spot is that it is not only supported by, but promoted as a result of new technology. When looking at economic history, as opposed to economic theory, technology is not really like a commodity at all. It is much more like an evolving ecosystem itself. In particular, innovations rarely happen in a vacuum. They are usually made possible by other innovations already in place. For instance, a laser printer is basically just a photocopier with a laser and a little computer circuitry added to tell the laser where to etch on the copying drum for printing. So, a laser printer is possible with computer technology, laser technology, and photo-reproducing technology together. One technology simply builds upon the inherent capabilities of others [43].