4. That is All Well and Good — Coping Mechanisms

The preceding chapter was all about technique; that is, it outlined a method already in widespread use, but perhaps not so well known amongst IT architects and software engineers. It also explained how that technique might be used to reason over multiple headfuls of information, so that they might be consumed in chunks of a single headful or less. In other words, how extremely large problem spaces might be cataloged and used successfully by your everyday (human) IT architect for work at the hyper-enterprise level.

Presenting such a technique was not about prescription, though. It was more about showing that tools already exist that allow individuals to reach beyond the cognitive limits of the average human brain, and that these tools do not necessarily rely on traditional reductionist problem-solving techniques.

With all that said, more pragmatically-inclined readers should hopefully have raised cries of “so what?”, and quite rightly so. Multi-headful party tricks amount to little more than tools available to the would-be Ecosystems Architect. They act as implements to assist, but provide little in the way of advice on how, where, when, and why they should be used. In other words, they provide little in the way of methodology.

Methods on their own add little value. Without some methodology to surround and support them, some rationale for application and purpose as it were, along with instructions for how to go about whatever practice is necessary, methods amount to little more than instruction manuals for a car you cannot drive.

So, what might an Ecosystems Architecture methodology look and feel like?

4.1. Prior Art

As with all new endeavors, the wisest way to push forward is not to do anything rash. Instead, progress should, indeed must, build by extension on proven practice. So, with any approach targeted at hyper-enterprise IT architecture, the trick must be first to consult established practice in the hypo-enterprise space, which nets out into an appreciation of the most widely accepted Enterprise Architecture methods; for example, the TOGAF® Standard and the Zachman Framework™ [108] [109]. This should further be supported by an appreciation of the underpinning principles coming from computer science, mathematics, and so on (as covered in the Prologue).

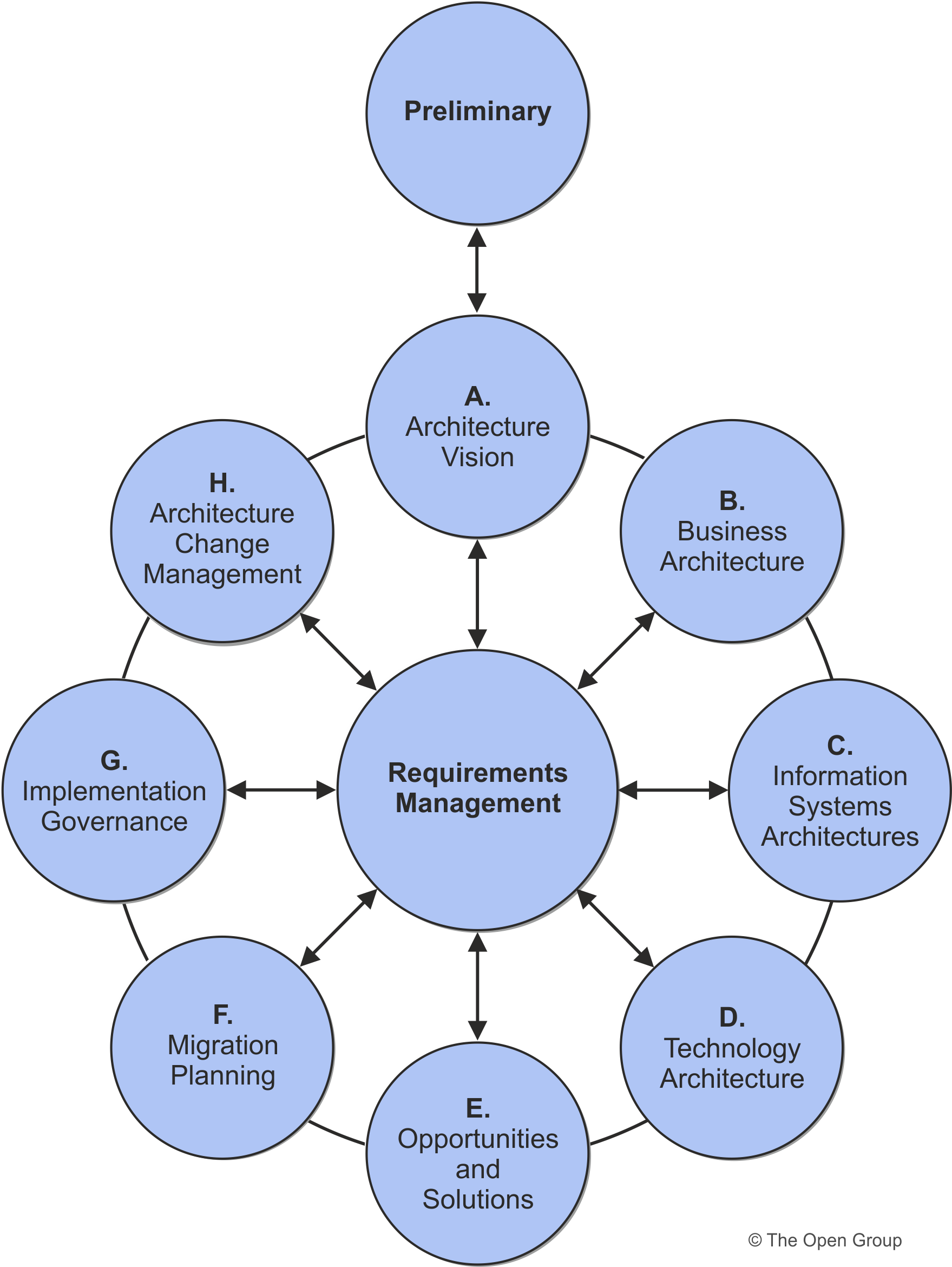

The TOGAF Standard was initially established in 1995. It prides itself on being an approach that satisfies business needs as the central concern of all design activities, but, at its core, it is fundamentally a collection of structured[1] and systems thinking practices [110] laid out to achieve systematic progress across the planning, design, build, and maintenance lifecycles of any collective group of enterprise IT systems. It does this by splitting Enterprise Architecture across four domains: Business, Application, Data, and Technical, and advising an approximate roster of work across an eight-phase method, known as the Architecture Development Method (ADM).

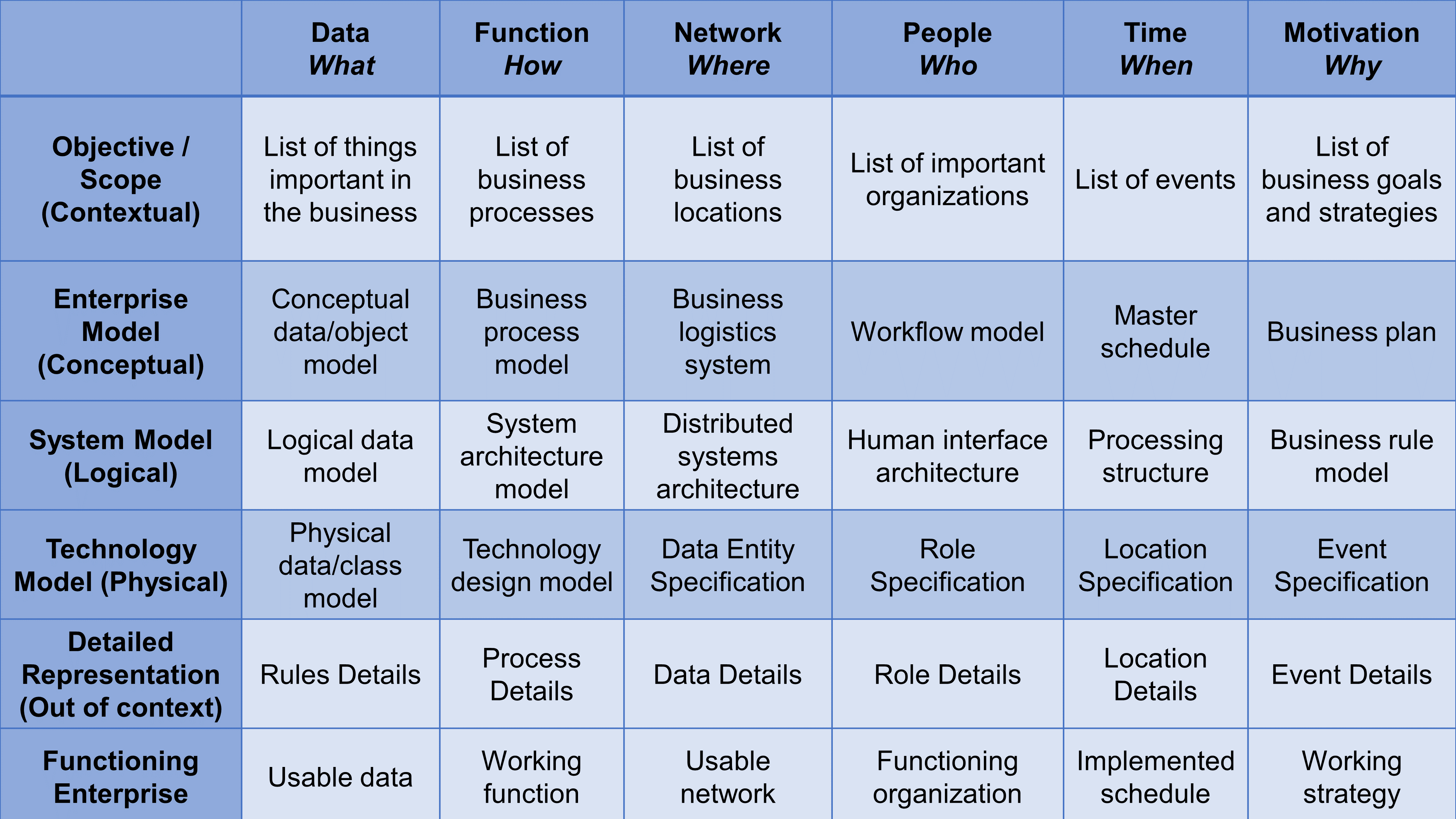

The Zachman Framework, shown in Figure 20,[2] by contrast, is slightly older, having first been published in 1987, and is somewhat more lenient in its doctrine. As such, it concentrates on describing the relationships between different Enterprise Architecture artifacts and perspectives and traditionally takes the form of a 36-cell matrix of ideas. Zachman, therefore, does not provide any guidelines for creating architectural artifacts, but instead prioritizes enterprise awareness by providing a holistic overview of perspectives and relationships across an enterprise. In that regard, Zachman is not necessarily a methodology per se, but rather a template for mapping out the fundamental structure of Enterprise Architecture.

Both the TOGAF Standard and the Zachman Framework act as guidelines for applying a variety of architectural tools, many of which are graphical and/or graph-based. Both are also based on earlier architectural frameworks, usually focused on the systematic, top-down structured development of singular IT systems and/or the various architectural artifacts involved. This lineage includes several frameworks and toolsets worthy of mention, such as the UML [111], the Structured Systems Analysis and Design Method (SSADM) [112], Object-Oriented Design (OOD) [113], and Jackson System Development (JSD) [114].

All, however, are merely examples of structured thinking approaches which help make sense of a complex world by looking at it in terms of wholes and relationships. All also try to emphasize sequenced pathways combining processes that incrementally contribute toward some form of key delivery event; be that completion of a system’s, or systems’, design, a go-live date, or whatever.

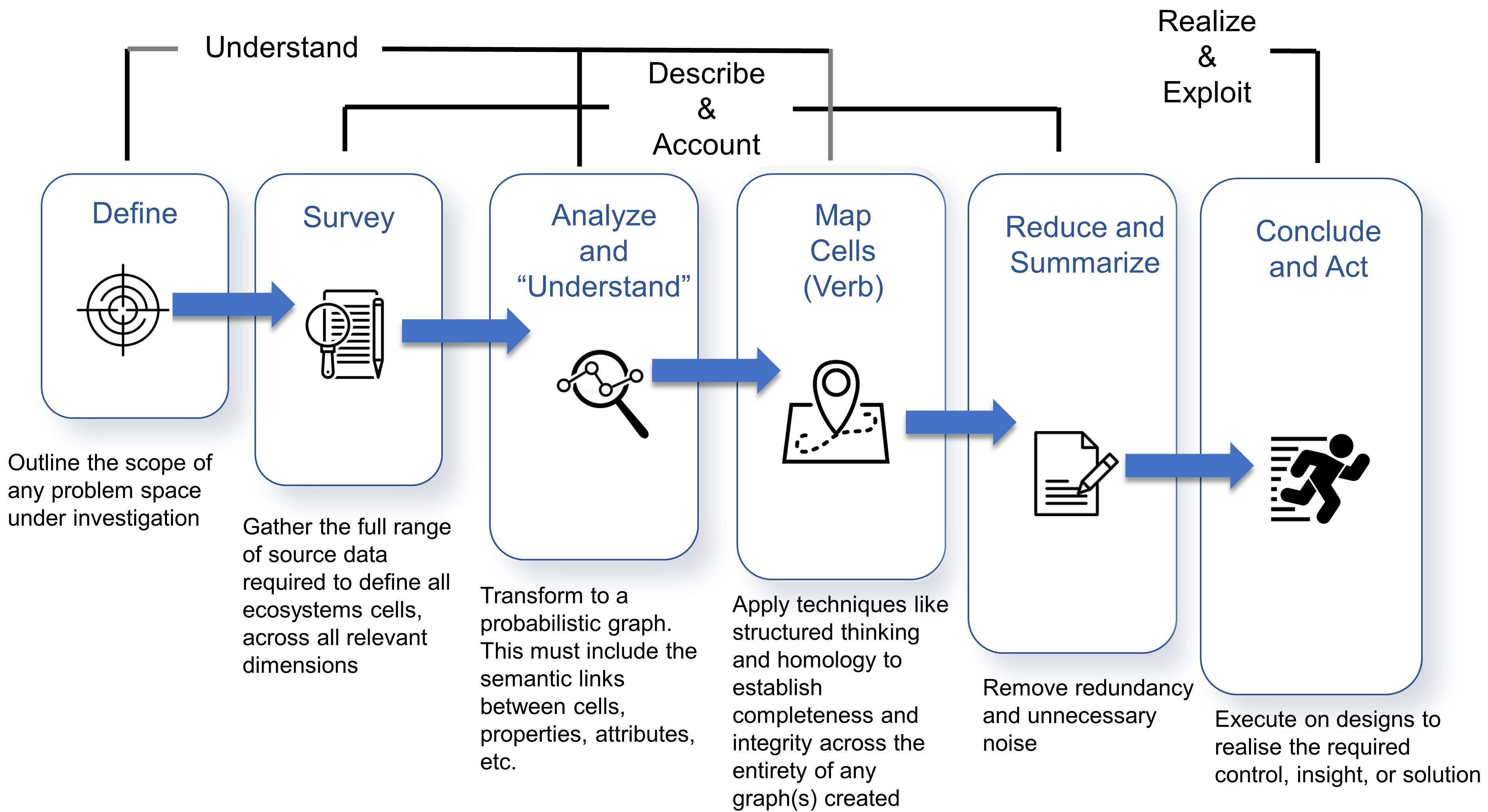

Boiled down to their essentials, all such frameworks can be summarized as a sequenced set of steps, or stages, aimed at:

- Understanding a target problem space
- Accounting for that space and describing a suitable (IT-based) solution aimed at alleviating related business challenges or releasing new business benefit
- Exploring, explaining, realizing, and exploiting any resultant IT solution, once in place

4.2. Software Lifecycle Approaches

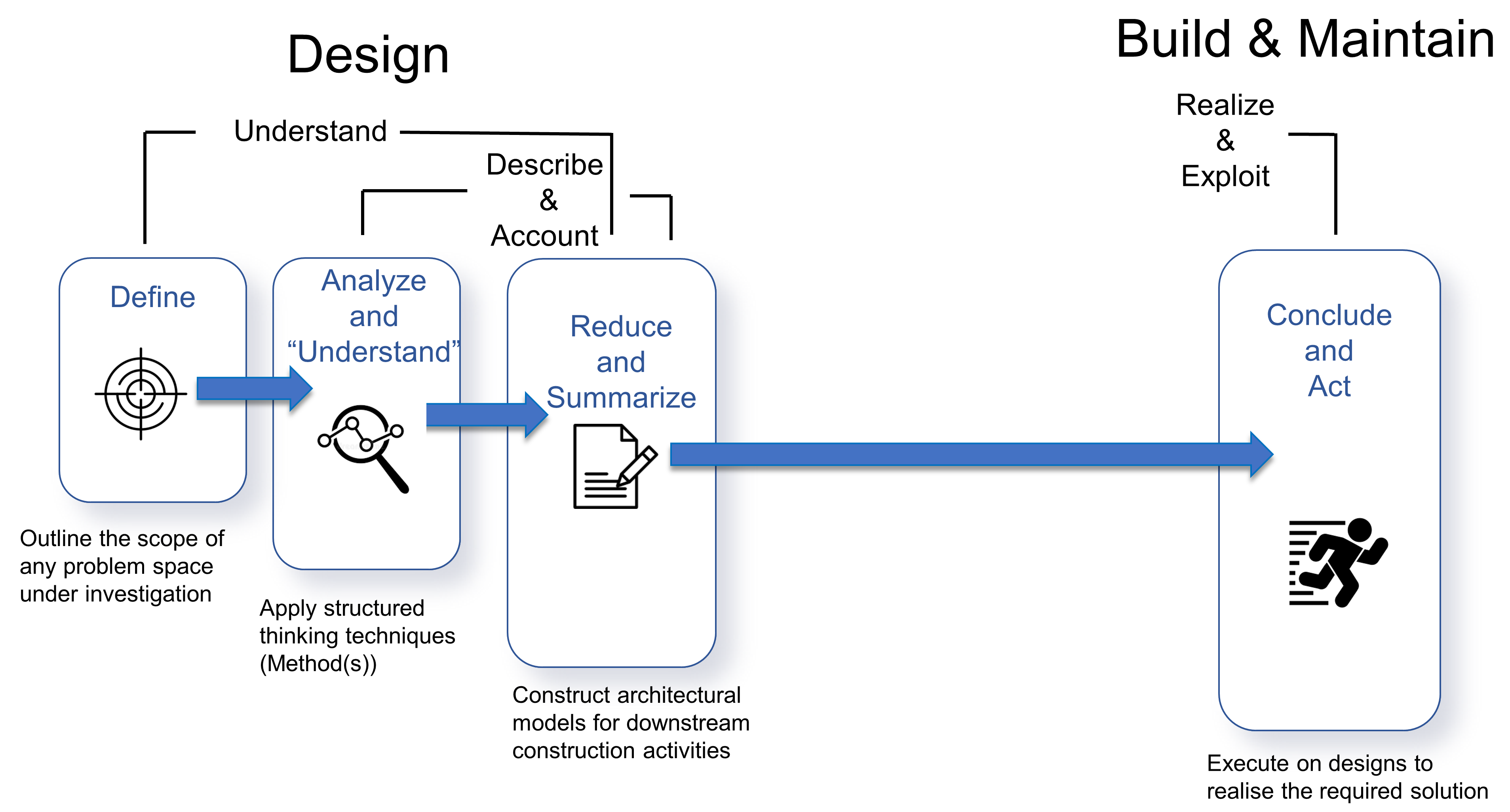

This leads to a broad-brush depiction of the overall IT systems lifecycle within any given enterprise, as shown in Figure 21.

Here we see the same basic structure as listed at the end of the previous section. As such, the first two and a bit stages map onto the process of understanding a target problem space, the latter part of the second and all of the third stages focus on accounting and describing what needs to be done to implement any IT solution required — to make it “real”, as it were — while the fourth and final stage is all about actually making real and then ensuring that tangible benefit can result from delivery outputs.

Various systems and software lifecycle approaches have been developed over the years to help IT delivery across these stages, and range from the more traditional, as in Waterfall [115] or V-style [116], through to more contemporary modes of work, like Agile [117] delivery. All equip the IT architect with a structured set of coping mechanisms, and all are aimed at helping understand the viability of any work they are being asked to assist and/or undertake. And in so doing, they hopefully step up in support when asking questions like:

- What am I, as an IT architect, uniquely responsible for?
- How might I justify and explain my actions?
- Am I doing things at the right time and in the right order?
- What will not get done if I do not act professionally?
- What are the value propositions associated with my work?
- How am I contributing to the overall vitality of a particular business function or enterprise?
- What are the consequences of not following some form of architectural method?

4.3. The VIE Framework

All of these ideas, tools, and approaches complement foundational systems thinking, like that found in the Viable Systems Model (VSM) [118] [119], which seeks to understand the organizational structure of any autonomous system capable of producing itself. VSM was developed over a period of 30 years and is based on observations across various different businesses and institutions. It therefore sees a viable system as any arrangement organized in such a way as to meet the demands of surviving in a changing environment. Viable systems must be adaptable and, to some extent at least, autonomous, in that their housing organizations should be able to maximize the freedom of their participants (individuals and internal systems), within all practical constraints of the requirements of them to fulfill their purpose.

As Paul Homan reminds us, this leads to an overarching set of characteristics common to all practically evolving sociotechnical systems at, or below, enterprise level:

- VIABILITY

  Participants (humans and systems) must be able to instantiate, exist, and co-exist within the bounds of all relevant local and global constraints/rule systems.

  In simple terms: Things should work and actually do what they are expected to do when working with, or alongside, other things.

- INTEGRITY

  Participants (humans and systems) must contribute meaningfully across local and global schemes and/or communities and in ways that are mutually beneficial to either the overriding majority or critical lead actors. They must not unintentionally damage the existing cohesion and content of the systems (as opposed to intentional damage that promotes adaptive growth in light of environmental change).

  In simple terms: Do not fight unless absolutely necessary. Progressive harmony normally points the way forward.

- EXTENSIBILITY

  Functional and non-functional characteristics (at both individual and group levels) must be open to progressive change/enhancement over time, and not intentionally close off any options without commensurate benefit.

  In simple terms: Change is good, but generally only good change.

4.4. The Seeds of a Hyper-Enterprise New Method

The VIE framework nicely summarizes many decades of thinking focused on evolving professional practice, but it still leaves several gaps when the need to work at ecosystems level arises. For instance, most, if not all, established methods provide little in the way of thinking when it comes to the probabilistic nature of change or degree of functional and non-functional fit. Nor do they adequately address the challenges of ever-increasing abstraction levels, as networks scale out above enterprise level.

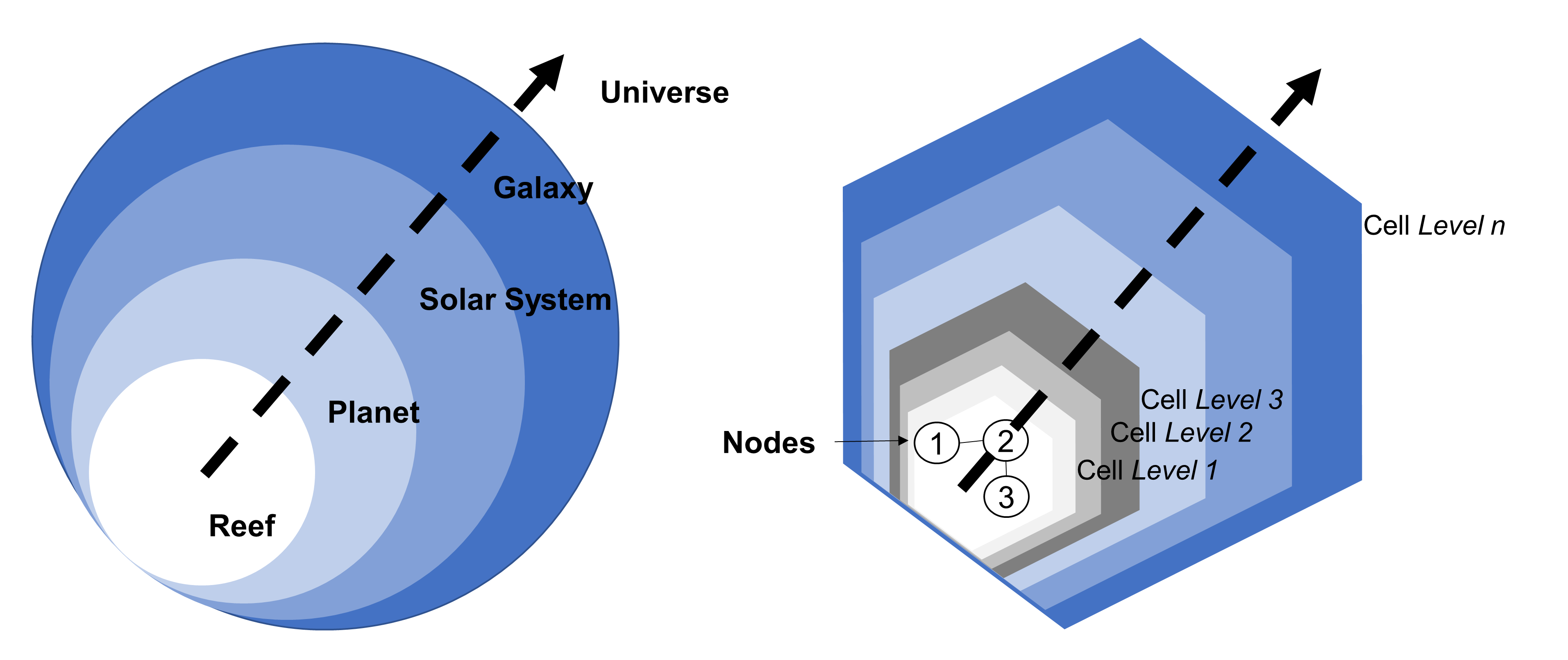

All of this consequently signals a need for methodology extension or modification at the very least. In doing that, you will recall a preference for the method outlined in the previous chapter, which presents the ideas of nodes and graphs as a ubiquitous way to model all the architectural detail required at both hypo and hyper-enterprise levels. You will also recall from Chapter 1 that the structure of the systems involved can be recursive, thereby creating a need to work across multiple levels of abstraction.

For illustrative or convenience purposes, then, such abstraction levels can be labeled with unique names like Systems-Level, Enterprise-Level, Reef-Level, Planet-Level, and so on, but so far no generic unit for grouping or abstraction has been provided. So, let us do that now by introducing the idea of cells.

4.5. Cells

A cell represents a generic collection of graph nodes, in the form of a complete and coherent graph or sub-graph at a specific level of abstraction, and which carries both viable architectural meaning and business and/or technical relevance.

Because of their recursive nature, cells provide a scheme for describing the Matryoshka-doll-like nature of systems, enterprises, and ecosystems, and become interchangeable with the idea of nodes as abstraction levels rise. As such, it is perfectly legitimate for a cell to consist of a graph composed of nodes or a graph of cells containing other cells, containing cells or nodes, and so on. Likewise, this implies that architectural graphs can accept cells as individual nodes which mask lower levels of abstraction.

This means that Ecosystems Architecture remains graph-based, as abstraction (and scale) increases, but it allows the overlaying of classification schemes to hide lower levels of detail — not unlike the idea of information hiding found in object orientation [120] [121] [122]. This follows the principles of concern, segregation, and separation, common to many structured thinking approaches.

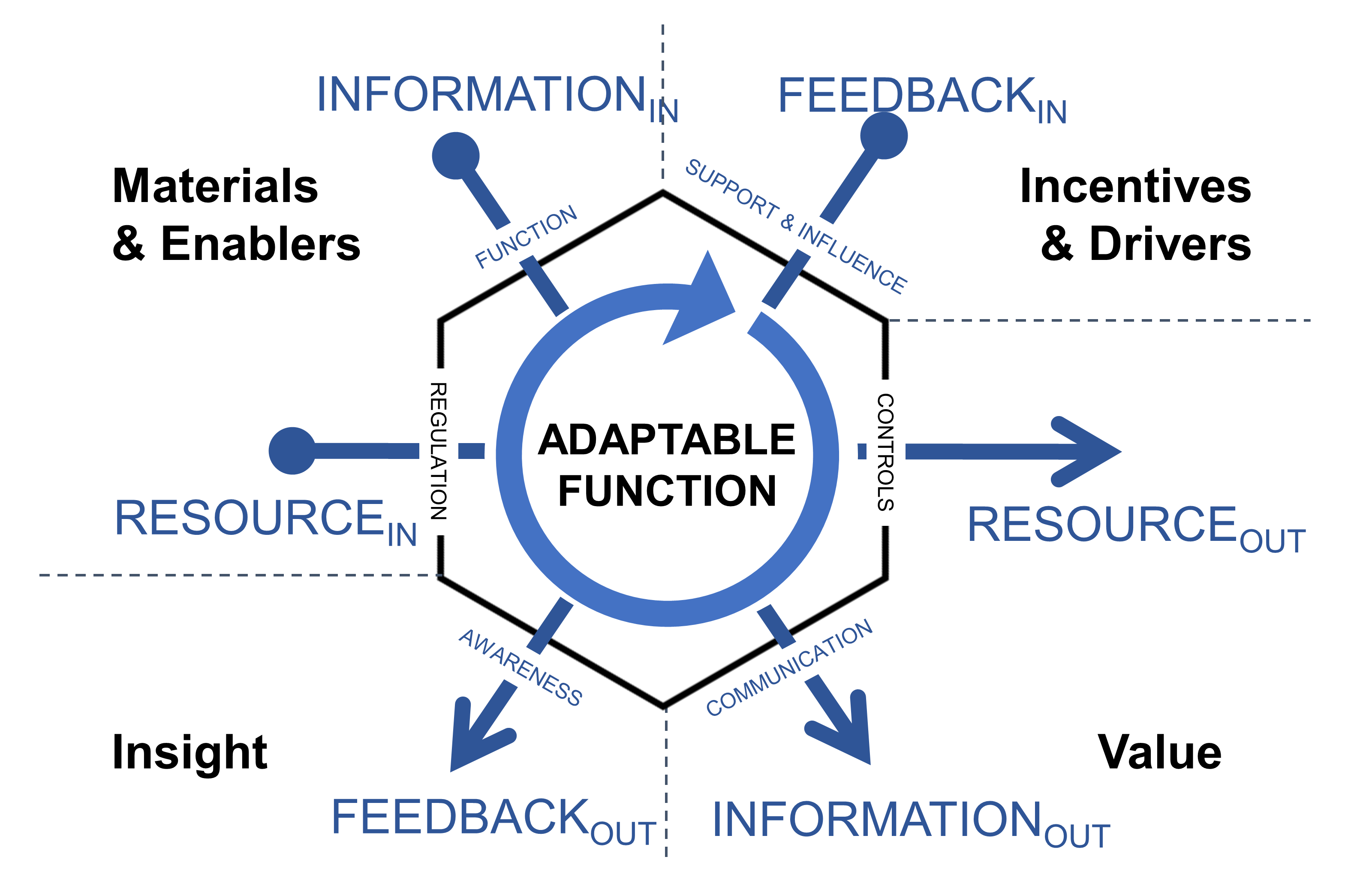

Cells also fit naturally with the ideas of viable systems thinking, in that they promote a pattern based on adaptable function. This sees cells as fitting into a self-organizing hierarchy of arrangement and also being dependent on a collection of both technical and business-focused inputs and outputs. These cover both information (technical) and resources (business) requiring transformation, and include the idea of self-regulation via specialized inputs classified as feedback.

All cells consequently act as both aggregating classifiers and participating nodal elements within an Ecosystems Architecture — regardless of their specific abstraction level and with any outermost cell acting as the embodiment of the containing ecosystem itself. Innermost, or lowest level cells, therefore, act as labels or indexes to the finest-grain graph content of relevance within an ecosystem, likely at, or below the systems-level. Lastly, everything outside an ecosystem’s outermost cell can be considered as its environment, even though that will itself have a recursive cell structure. This creates a continuum of interaction and co-evolution across adjacent domains/landscapes/universes, etc.
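The recursive nature of cells can be made a little more concrete with a small sketch. The following is only an illustration under stated assumptions, not a prescribed implementation: the `Node` and `Cell` classes, their fields, and the example names ("Acme", "Retail Reef", and so on) are all hypothetical and chosen purely for demonstration. It shows a cell holding a sub-graph whose members may be plain nodes or other cells, so that a cell can stand in as a single node one abstraction level up.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Union


@dataclass
class Node:
    """A finest-grain graph node, e.g. a single system or component (hypothetical)."""
    name: str


@dataclass
class Cell:
    """A named sub-graph at one abstraction level (hypothetical sketch).

    Members may be plain nodes or other cells, so a cell can act as a
    single node in the graph of the cell that contains it.
    """
    name: str
    level: str  # illustrative label, e.g. "Systems-Level" or "Enterprise-Level"
    members: list[Union[Node, Cell]] = field(default_factory=list)
    edges: set[tuple[str, str]] = field(default_factory=set)

    def connect(self, source: str, target: str) -> None:
        """Link two direct members; lower levels of detail stay hidden."""
        names = {member.name for member in self.members}
        if source not in names or target not in names:
            raise ValueError("both ends must be direct members of this cell")
        self.edges.add((source, target))

    def depth(self) -> int:
        """Number of nested cell levels, counting this cell as one."""
        inner = [m.depth() for m in self.members if isinstance(m, Cell)]
        return 1 + max(inner, default=0)

    def leaf_nodes(self) -> list[str]:
        """Names of the finest-grain nodes reachable through nested cells."""
        found: list[str] = []
        for member in self.members:
            if isinstance(member, Cell):
                found.extend(member.leaf_nodes())
            else:
                found.append(member.name)
        return found


# Two systems-level cells, wrapped by an enterprise, wrapped by an ecosystem.
billing = Cell("Billing", "Systems-Level", [Node("Invoicing"), Node("Ledger")])
billing.connect("Invoicing", "Ledger")
crm = Cell("CRM", "Systems-Level", [Node("Contacts")])

enterprise = Cell("Acme", "Enterprise-Level", [billing, crm])
enterprise.connect("CRM", "Billing")

# The outermost cell embodies the ecosystem itself.
ecosystem = Cell("Retail Reef", "Reef-Level", [enterprise, Node("Payment Gateway")])
ecosystem.connect("Acme", "Payment Gateway")

print(ecosystem.depth())
print(ecosystem.leaf_nodes())
```

In this sketch, edges are only permitted between direct members of a cell. That is one possible way to express the masking of lower abstraction levels: at Reef-Level the enterprise appears as a single node, and its internal systems are reachable only by descending into it.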

4.6. From VIE to VIPER

Expanding out into the ecosystems arena also implies extension to the list of essential characteristics for a viable system:

- VIABILITY

  Participants (humans and systems) must be able to instantiate, exist, and co-exist within the bounds of all relevant local and global constraints/rule systems.

  In simple terms: Things should work and actually do what they are expected to do when working with, or alongside, other things.

- INTEGRITY

  Participants (humans and systems) must contribute meaningfully across local and global schemes and/or communities and in ways that are mutually beneficial to either the overriding majority or critical lead actors. They must not unintentionally damage the existing cohesion and content of the systems (as opposed to intentional damage that promotes adaptive growth in light of environmental change).

  In simple terms: Do not fight unless absolutely necessary. Progressive harmony normally points the way forward.

- PROBABILITY

  Participants (humans and systems) may or may not exist and/or participate depending upon context. Participation may be beyond the control of the individual, the group, or both.

  In simple terms: Certainty may well take a back seat at times. Prepare for that.

- EXTENSIBILITY

  Functional and non-functional characteristics (at both individual and group levels) must be open to progressive change/enhancement over time, and not intentionally close off any options without commensurate benefit.

  In simple terms: Change is good, but generally only good change.

- RESPONSIVENESS

  Participants (humans and systems) must be open to monitoring and participation in feedback loops.

  In simple terms: Help yourself and others at all times.

The inclusion of probability and responsiveness, therefore, captures the essential evolutionary self-organizing nature inherent to every ecosystem. They also highlight the need for any associated methods, methodologies, tools, and so on to be deeply rooted in mathematical techniques focused on chance, approximation, and connectivity. This does not mean, however, that mathematical formality needs to be at the forefront of any such approach, and certainly not to the point where advanced mathematical wherewithal becomes a barrier to entry. No, it simply implies that some appreciation of probability is favorable and, at the very least, that probability should be considered as an essential (but not mandatory) property of any graph nodes and connections being worked with.
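As a rough illustration of probability treated as a property of nodes and connections, consider the sketch below. Everything in it is an assumption made for demonstration: the `Participant` class, the `path_likelihood` function, the example figures, and in particular the simplification that participants and links are available independently of one another, which real ecosystems will often violate.

```python
from dataclasses import dataclass
from math import prod


@dataclass(frozen=True)
class Participant:
    """A graph node with a chance of being present (hypothetical sketch)."""
    name: str
    p_present: float = 1.0  # probability the participant exists/participates


def path_likelihood(
    path: list[str],
    participants: dict[str, Participant],
    links: dict[tuple[str, str], float],
) -> float:
    """Chance that every participant and link along `path` is available.

    ASSUMPTION: all availabilities are statistically independent, so the
    overall likelihood is a simple product. A link missing from `links`
    is treated as impossible (probability 0.0).
    """
    node_p = prod(participants[name].p_present for name in path)
    edge_p = prod(links.get((a, b), 0.0) for a, b in zip(path, path[1:]))
    return node_p * edge_p


# Illustrative figures only; none of these values come from a real ecosystem.
participants = {
    "A": Participant("A", 1.0),
    "B": Participant("B", 0.9),
    "C": Participant("C", 0.5),
}
links = {("A", "B"): 0.8, ("B", "C"): 0.5}

# Roughly 0.18: (1.0 * 0.9 * 0.5) for the nodes times (0.8 * 0.5) for the links.
print(path_likelihood(["A", "B", "C"], participants, links))
```

Even a crude estimate of this kind makes the point that an interaction spanning several uncertain participants can be far less dependable than any single element in it, which is the kind of appreciation the PROBABILITY characteristic asks for.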

Probability and responsiveness also extend any overarching lifecycle framework in play, by highlighting the need for the unbiased gathering of detail before thinking about architectural patterns, abstraction, or hierarchy of structure. This pushes reductionist thinking toward the back end of the lifecycle and promotes Taoist-like neutrality and open-mindedness upfront.

4.7. The 7+1 Keys of Viable Enterprise Ecosystems

The VIPER framework also leads to a more fine-grained list of essential characteristics, as outlined by the IBM® Academy of Technology [123]. This provides insights into areas of operational importance, such as orchestration, functional and non-functional restriction, interoperability, and governance.

4.7.1. Principles of Vitality and Evolution

- Function and Mutuality

  Participants (humans and systems) must be able to do something that contributes to all, or a meaningful part, of the ecosystem.

- Communication and Orchestration

  Participants (humans and systems) must be able to communicate and co-ordinate resources with other elements, either within their immediate context (the ecosystem) or outside of it (the environment). They must also possess amplification and attenuation capabilities to suit.

- Controls

  It must be possible to control the ways in which participants (humans and systems) group (structure), communicate, and consume resources in a common manner.

- Awareness

  An ecosystem must support instrumentation and observation at both participant (humans and systems) and group levels. This includes implicit awareness of any environment, given that this can be considered as both a unit and group participant in the ecosystem itself.

- Regulation

  An ecosystem must support constraints at the group level that guide it as a whole to set policy and direction.

- Adaptability (including Tolerance)

  Participants (humans and systems) and groups must be able to adapt to variance within the ecosystem and its environment.

- Support and Influence

  Participating elements must be able to use resources supplied from other participating elements, groups, and/or the environment itself.

4.7.2. Principles of Self

- Scaling

  Structures must allow self-similarity (recursion/fractal) at all scales and abstractions.

4.8. End Thoughts

Hopefully, these and the other ideas presented will provide a starting point for structured thinking aimed at the hyper-enterprise level. They are by no means complete or definitive, but they have at least now been debated and (partially) field tested over a number of years and across a number of cross-industry use cases. What is badly needed now is review from the wider IT architecture community and the coming together of consensus over applicability, value, and overall direction.