Investment and Portfolio

Area Description

The decision to break an organization into multiple teams is in part an investment decision. The organization is going to devote some resources to one team, and some to another team. Furthermore, there will be additional spending still managed at a corporate level. If the results meet expectations, the organization will then likely proceed with further investments managed by the same or similar organization structure. How are these separate streams of investment decided on and managed? What is the approach for structuring them? How does an organization ensure that they are returning the desired results?

People are competitive. Multiple teams will start to contend for investment. This is unavoidable. They will want their activities adequately supported, and their understandings of “adequate” may differ. They will be watching that the other teams don’t get “more than their share” and that those teams are using their share effectively. The leader starts to see that the teams need to be constantly reminded of the big picture, in order to keep their discussions and occasional disagreements constructive.

There is now a dedicated, full-time Chief Financial Officer and the organization is increasingly subject to standard accounting and budgeting rules. But annual budgeting seems to be opposed to how the digital startup has run to date. What alternatives are there? The organization’s approach to financial management affects every aspect of the company, including product team effectiveness.

The organization also begins to understand vendor relationships (e.g., your cloud providers) as a form of investment. As the use of their products deepens, it becomes more difficult to switch from them, and so the organization spends more time evaluating before committing. The organization establishes a more formalized approach. Open source changes the software vendor relationship to some degree, but it’s still a portfolio of commitments and relationships requiring management.

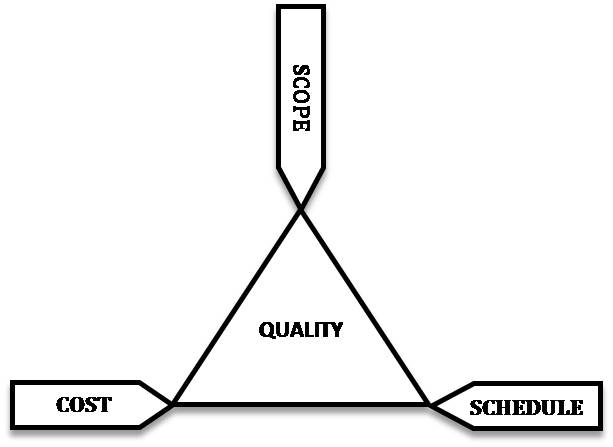

Project management is often seen as necessary for financial planning, especially regarding the efforts most critical to the business. It is seen as essential because of the desire to coordinate, manage, and plan. Having a vision isn’t worth much without some ability to forecast how long it will take and what it will cost, and to monitor progress against the forecast in an ongoing way. Project management is often defined as the execution of a given scope of work within constraints of time and budget. But questions arise. The organization has long been executing work without this concept of “project”. This document discussed Scrum, Kanban, and various organizational levels and delivery models in the introduction to Context III. This Competency Category will examine this idea of “scope” in more detail. How can it be known in advance, so that the “constraints of time and budget” are reasonable?

As seen in this document’s discussions of product management, when implementing truly new products (including digital products), estimating time and budget is challenging because the necessary information is not available. In fact, creating information, which (per Lean Product Development) requires tight feedback loops, is the actual work of the “project”. Therefore, in the new Agile world, there is some uncertainty as to the role of and even need for traditional project management. This Competency Category will examine some of the reasons for project management’s persistence and how it is adapting to the new product-centric reality.

In the project management literature and tradition, much focus is given to the execution aspect of project management — its ability to manage complex, interdependent work across resource limitations. We discussed project management and execution in Project Management as Coordination. In this section, we are interested in the structural role of project management as a way of managing investments. Project management may be institutionalized through establishing an organizational function known as the Project Management Office (PMO), which may use a concept of project portfolio as a means of constraining and managing the organization’s multiple priorities. What is the relationship of the traditional PMO to the new, product-centric, digital world?

Financial Management of Digital and IT

Description

Financial health is an essential dimension of business health. And digital technology has been one of the fastest-growing budget items in modern enterprises. Its relationship to enterprise financial management has been a concern since the first computers were acquired for business purposes.

| Financial management is a broad, complex, and evolving topic and its relationship to IT and digital management is even more so. This brief section can only cover a few basics. However, it is important for you to have an understanding of the intersection of Agile and Lean IT with finance, as your organization’s financial management approach can determine the effectiveness of your digital strategy. |

The objectives of IT finance include:

- Providing a framework for tracking and accounting for digital income and expenses
- Supporting financial analysis of digital strategies (business models and operating models, including sourcing)
- Supporting the digital and IT-related aspects of the corporate budgetary and reporting processes, including internal and external policy compliance
- Supporting (where appropriate) internal cost recovery from business units consuming digital services
- Supporting accurate and transparent comparison of IT financial performance to peers and market offerings (benchmarking)

A company scaling up today would often make different decisions from a company that scaled up 40 years ago. This is especially apparent in the matter of how to finance digital/IT systems development and operations. The intent of this section is to explore both the traditional approaches to IT financial management and the emerging Agile/Lean responses.

This section has the following outline:

- Historical IT financial practices
  - Annual budgeting and project funding
  - Cost accounting and chargeback
- Next-generation IT finance
  - Lean Accounting & Beyond Budgeting
  - Lean Product Development
  - Internal “venture” funding
  - Value stream orientation
  - Internal market economics
  - Service brokerage

Historic IT Financial Practices

Historically, IT financial management has been defined by two major practices:

- An annual budgeting cycle, in which project funding is decided
- Cost accounting, sometimes with associated internal transfers (chargebacks) as a primary tool for understanding and controlling IT expenses

Both of these practices are being challenged by Agile and Lean IT thinking.

Annual Budgeting and Project Funding

IT organizations typically adhere to annual budgeting and planning cycles, which can involve painful rebalancing exercises across an entire portfolio of technology initiatives, as well as a sizeable amount of rework and waste. This approach is anathema to companies that are seeking to deploy Agile at scale. Some businesses in our research base are taking a different approach. Overall budgeting is still done yearly, but roadmaps and plans are revisited quarterly or monthly, and projects are reprioritized continually [69].

An Operating Model for Company-Wide Agile Development

In the common practice of the annual budget cycle, companies once a year embark on a detailed planning effort to forecast revenues and how they will be spent. Much emphasis is placed on the accuracy of such forecasts, despite its near-impossibility. (If estimating one large software project is challenging, how much more challenging to estimate an entire enterprise’s income and expenditures?)

The annual budget has two primary components: capital expenditures and operating expenditures, commonly called CAPEX and OPEX. The rules about what must go where are fairly rigid and determined by accounting standards with some leeway for the organization’s preferences.

Software development can be capitalized, as it is an investment from which the company hopes to benefit in the future. Operations is typically expensed. Capitalized activities may be accounted for over multiple years (therefore becoming a reasonable candidate for financing and multi-year amortization). Expensed activities must be accounted for in-year.
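The difference in timing can be made concrete with a small sketch. The figures, the three-year period, and the straight-line method are all assumptions chosen for illustration; the actual treatment depends on the applicable accounting standards and the organization's policies.

```python
def straight_line_schedule(capitalized_cost, years):
    """Spread a capitalized cost evenly across the given number of years."""
    if years <= 0:
        raise ValueError("years must be positive")
    return [capitalized_cost / years] * years


# Hypothetical figures, in dollars (not taken from the text).
development_cost = 900_000  # capitalized software development
operations_cost = 300_000   # operations, expensed in the year incurred

amortization = straight_line_schedule(development_cost, years=3)

# In year one, the budget shows one slice of the capitalized cost
# plus the whole of the expensed operations cost.
year_one_charge = amortization[0] + operations_cost

print("Amortization per year:", amortization)
print("Year-one charge:", year_one_charge)
```

Under these assumptions the development spend is recognized in equal parts over three years, while the operations spend is recognized entirely in year one.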

One can only “go to the well” once a year. As such, extensive planning and negotiation traditionally take place around the IT project portfolio. Business units and their IT partners debate the priorities for the capital budget, assess resources, and finalize a list of investments. Project managers are identified and tasked with marshaling the needed resources for execution.

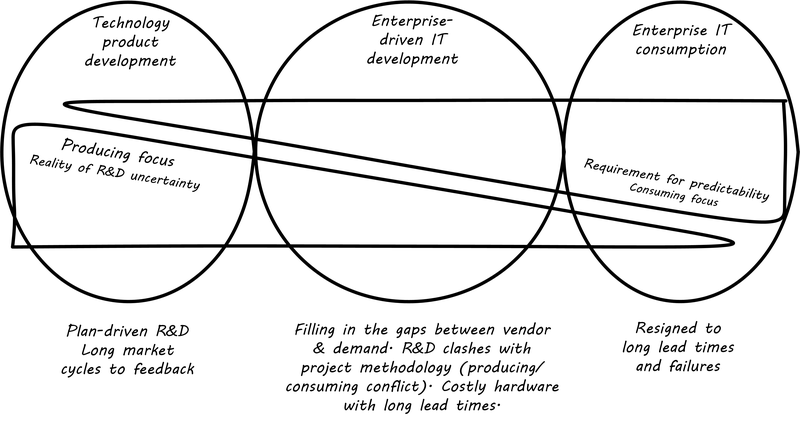

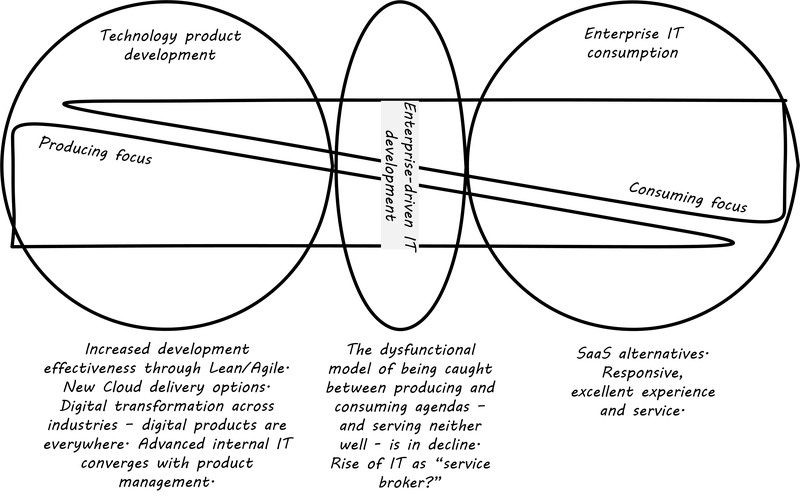

This annual cycle receives much criticism in the Agile and Lean communities. From a Lean perspective, projects can be equated to large “batches” of work. Using annual projects as a basis for investment can result in misguided attempts to plan large batches of complex work in great detail so that resource requirements can be known well in advance. The history of the Agile movement is in many ways a challenge and correction of this thinking, as we have discussed throughout this document.

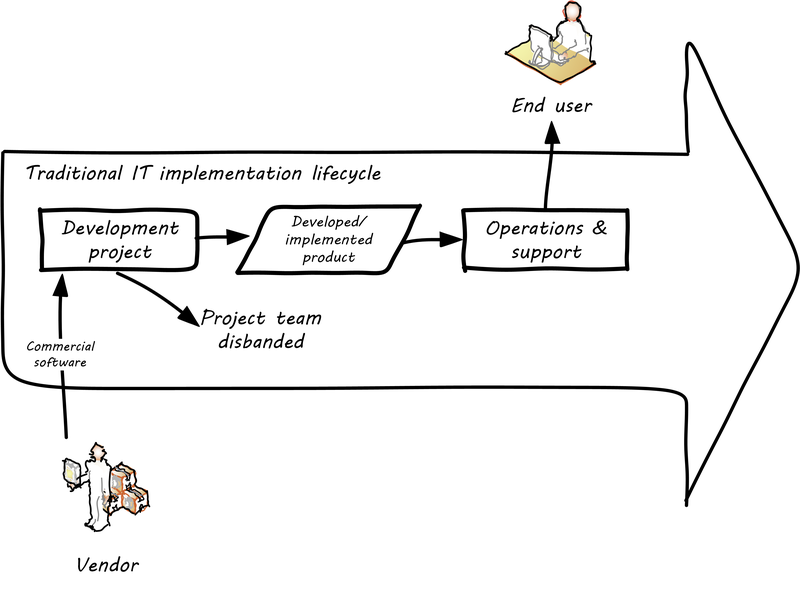

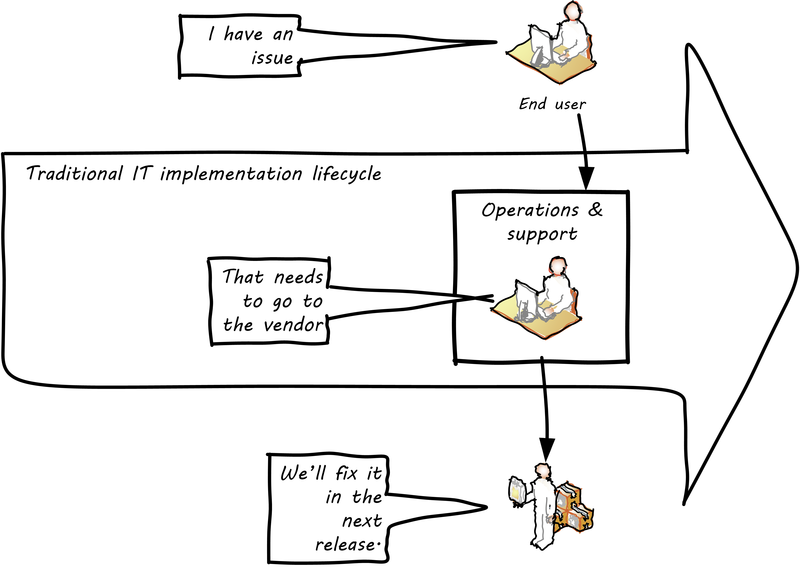

The execution model for digital/IT acquisition adds further complexity. Traditionally, project management has been the major funding vehicle for capital investments, distinct from the operational expense. But with the rise of cloud computing and product-centric management, companies are moving away from traditional capital projects. New products are created with greater agility, in response to market need, and without the large capital investments of the past in physical hardware.

This does not mean that traditional accounting distinctions between CAPEX and OPEX go away. Even with expensed cloud infrastructure services, software development may still be capitalized, as may software licenses.

Cost Accounting and Chargeback

| The term “cost accounting” is not the same as just “accounting for costs”, which is always a concern for any organization. Cost accounting is defined as “the techniques for determining the costs of products, processes, projects, etc. in order to report the correct amounts on the financial statements, and assisting management in making decisions and in the planning and control of an organization … For example, cost accounting is used to compute the unit cost of a manufacturer’s products in order to report the cost of inventory on its balance sheet and the cost of goods sold on its income statement. This is achieved with techniques such as the allocation of manufacturing overhead costs and through the use of process costing, operations costing, and job-order costing systems.” [6] |

IT is often consumed as a “shared service”, which requires internal financial transfers. What does this mean?

Here is a traditional example. An IT group purchases a mainframe computer for $1,000,000. This mainframe is made available to various departments who are charged for it. Because the mainframe is a shared resource, it can run multiple workloads simultaneously. For the year, we see the following usage:

- 30% Accounting
- 40% Sales Operations
- 30% Supply Chain

In the simplest direct allocation model, the departments would pay for the portion of the mainframe that they used. But things are always more complex than that.

- What if the mainframe has excess capacity? Who pays for that?
- What if Sales Operations stops using the mainframe? Do Accounting and Supply Chain have to make up the loss? What if Accounting decides to stop using it because of the price increase?
  - In public utilities, this is known as a "death spiral" and the problem was noted as early as 1974 by Richard Nolan [210 p. 179].
- The mainframe requires power, floor space, and cooling - how are these incorporated into the departmental charges?
- Ultimately, the Accounting organization (and perhaps Supply Chain) are back-office cost centers as well
  - Does it make sense for them to be allocated revenues from company income, only to have those revenues then re-directed to the IT department?
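The direct allocation model, and the way it can tip into a "death spiral", can be sketched in a few lines. The cost and usage figures come from the mainframe example; the assumptions that the full $1,000,000 must be recovered each year and that the remaining departments keep their usage unchanged are illustrative.

```python
MAINFRAME_COST = 1_000_000  # dollars to recover, from the example above


def allocate(total_cost, usage):
    """Split total_cost across departments in proportion to their usage."""
    total_usage = sum(usage.values())
    return {dept: total_cost * share / total_usage
            for dept, share in usage.items()}


usage = {"Accounting": 30, "Sales Operations": 40, "Supply Chain": 30}
charges = allocate(MAINFRAME_COST, usage)

# Sales Operations stops using the mainframe. With full cost recovery,
# the same cost is now spread over the two remaining departments.
remaining = {dept: share for dept, share in usage.items()
             if dept != "Sales Operations"}
charges_after_exit = allocate(MAINFRAME_COST, remaining)

for dept in remaining:
    print(dept, charges[dept], "->", charges_after_exit[dept])
```

Each remaining department's charge rises even though its own usage has not changed, which is the incentive that can prompt the next department to leave.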

Historically, cost accounting has been the basis for much IT financial management (see, for example, ITIL Service Strategy, [283], p.202; [225]). Such approaches traditionally seek full absorption of unit costs; that is, each “unit” of inventory ideally represents the total cost of all its inputs: materials, labor, and overhead such as machinery and buildings.

In IT/digital service organizations, there are three basic sources of cost: “cells, atoms, and bits”. That is:

- People (i.e., their time)
- Hardware
- Software

However, these are “direct” costs — costs that, for example, a product or project manager can see in their full amount.

Another class of cost is “indirect”. The IT service might be charged $300 per square foot for data center space. This provides access to rack space, power, cooling, security, and so forth. This charge represents the bills the Facilities organization receives from power companies, mechanicals vendors, security services, and so forth — not to mention the mortgage!

Finally, the service may depend on other services. Perhaps instead of a dedicated database server, the service subscribes to a database service that gives them a high-performance relational database, but where they do not pay directly for the hardware, software, and support services on which the database is based. Just to make things more complicated, the services might be external (public cloud) or internal (private cloud or other offerings).

Those are the major classes of cost. But how do we understand the “unit of inventory” in an IT services context? A variety of concepts can be used, depending on the service in question:

- Transactions
- Users
- Network ports
- Storage (e.g., gigabytes of disk)

In internal IT organizations (see "Defining consumer, customer, and sponsor") this cost accounting is then used to transfer funds from the budgets of consuming organizations to the IT budget. Sometimes this is done via simple allocations (Marketing pays 30%, Sales pays 25%, etc.) and sometimes through more sophisticated approaches, such as defining unit costs for services.

For example, the fully absorbed unit cost for a customer account transaction might be determined to be $0.25; this ideally represents the total cost of the hardware, software, and staff divided by the expected transactions. Establishing the models for such costs, and then tracking them, can be a complex undertaking, requiring correspondingly complex systems.
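As a minimal sketch of the arithmetic, the fully absorbed unit cost is the total of the cost inputs divided by the expected volume. The individual cost figures and the transaction forecast here are hypothetical, chosen only so that the result matches the $0.25 in the example.

```python
def fully_absorbed_unit_cost(costs, expected_units):
    """Total of all cost inputs divided by the expected number of units."""
    if expected_units <= 0:
        raise ValueError("expected_units must be positive")
    return sum(costs.values()) / expected_units


# Hypothetical annual costs in dollars (not taken from the text).
annual_costs = {
    "hardware": 200_000,
    "software": 100_000,
    "staff": 200_000,
}
expected_transactions = 2_000_000  # hypothetical forecast

unit_cost = fully_absorbed_unit_cost(annual_costs, expected_transactions)
print(f"Unit cost per transaction: ${unit_cost:.2f}")
```

A real model would also need to trace indirect costs and dependent services into `annual_costs`, which is where most of the complexity arises.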

IT managers have known for years that overly detailed cost accounting can consume a large fraction of IT resources. As AT&T financial manager John McAdam noted:

“Utilizing an excessive amount of resources to capture data takes away resources that could be used more productively to meet other customer needs. Internal processing for IT is typically 30-40% of the workload. Excessive data capturing only adds to this overhead cost.” [191]

There is also the problem that unit costing of this nature creates false expectations. Establishing an internal service pricing scheme implies that if the utilization of the service declines, so should the related billings. But if:

- The hardware, software, and staff costs are already sunk, or relatively inflexible
- The IT organization is seeking to recover costs fully

the per-transaction cost will simply have to increase if the number of transactions goes down. James R. Huntzinger discusses the problem of excess capacity distorting unit costs, and states “it is an absolutely necessary element of accurate representation of the facts of production that some provisions be made for keeping the cost of wasted time and resources separate from normal costs” [139]. Approaches for doing this will be discussed below.
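The distortion, and one possible way of keeping the cost of unused capacity separate, can be sketched as follows. The fixed cost, the capacity figure, and the capacity-based rate are illustrative assumptions, not a method prescribed by Huntzinger.

```python
def full_recovery_rate(fixed_cost, actual_units):
    """Rate per unit when all fixed cost is spread over actual volume."""
    return fixed_cost / actual_units


def capacity_based_charges(fixed_cost, capacity_units, actual_units):
    """Charge at a rate based on capacity; report the remainder separately."""
    rate = fixed_cost / capacity_units
    charged = rate * actual_units
    return {
        "rate": rate,
        "charged": charged,
        "excess_capacity_cost": fixed_cost - charged,
    }


FIXED_COST = 500_000   # hypothetical sunk hardware, software, and staff cost
CAPACITY = 2_000_000   # hypothetical transactions the service can handle

for volume in (2_000_000, 1_500_000, 1_000_000):
    spread = full_recovery_rate(FIXED_COST, volume)
    separated = capacity_based_charges(FIXED_COST, CAPACITY, volume)
    print(volume, round(spread, 3), separated)
```

Under full recovery the rate climbs as volume falls. Under the capacity-based rate the price per transaction stays constant, and the unrecovered amount appears as its own line item rather than being hidden in the unit cost.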

Next-Generation IT Finance

What accounting should do is produce an unadulterated mirror of the business — an uncompromisable truth on which everyone can rely … Only an informed team, after all, is truly capable of making intelligent decisions.

as quoted by James Huntzinger

Criticisms of traditional approaches to IT finance have increased as Digital Transformation accelerates and companies turn to Agile operating models. Rami Sirkia and Maarit Laanti (in a paper used as the basis for SAFe's financial module) describe the following shortcomings:

- Long planning horizons, detailed cost estimates that must frequently be updated
- Emphasis on planning accuracy and variance analysis
- Context-free concern over budget overruns (even if a product is succeeding in the market, variances are viewed unfavorably)
- Bureaucratic re-approval processes for project changes
- Inflexible and slow resource re-allocation [261]

What do critics of cost accounting, allocated chargebacks, and large batch project funding suggest as alternatives to the historical approaches? There are some limitations evident in many discussions of Lean Accounting, notably an emphasis on manufactured goods. However, a variety of themes and approaches relevant to IT services have emerged, which we will discuss below:

- Beyond Budgeting
- Internal “venture” funding
- Value stream orientation
- Lean Accounting
- Lean Product Development
- Internal market economics
- Service brokerage

Beyond Budgeting

Setting a numerical target and controlling performance against it is the foundation stone of the budget contract in today’s organization. But, as a concept, it is fundamentally flawed. It is like telling a motor racing driver to achieve an exact time for each lap … it cannot predict and control extraneous factors, any one of which could render the target totally meaningless. Nor does it help to build the capability to respond quickly to new situations. But, above all, it doesn’t teach people how to win.

Beyond Budgeting Questions and Answers

Beyond Budgeting is the name of a 2003 book by Jeremy Hope and Robin Fraser. It is written in part as an outcome of meetings and discussions between a number of large, mostly European firms dissatisfied with traditional budgeting approaches. Beyond Budgeting’s goals are described as:

releasing capable people from the chains of the top-down performance contract and enabling them to use the knowledge resources of the organization to satisfy customers profitably and consistently beat the competition

In particular, Beyond Budgeting critiques the concept of the “budget contract”. A simple “budget” is merely a “financial view of the future … [a] 'most likely outcome' given known information at the time …”. A “budget contract” by comparison is intended to “delegate the accountability for achieving agreed outcomes to divisional, functional, and departmental managers”. It includes concerns and mechanisms such as:

- Targets
- Rewards
- Plans
- Resources
- Coordination
- Reporting

and is intended to “commit a subordinate or team to achieving an agreed outcome”.

Beyond Budgeting identifies various fallacies with this approach, including:

- The idea that fixed financial targets maximize profit potential
- Financial incentives build motivation and commitment (see discussion on motivation)
- Annual plans direct actions that maximize market opportunities
- Central resource allocation optimizes efficiency
- Central coordination brings coherence
- Financial reports provide relevant information for strategic decision-making

Beyond the poor outcomes that these assumptions generate, as much as 20% to 30% of senior executives' time is spent on the annual budget contract. Overall, the Beyond Budgeting view is that the budget contract is:

a relic from an earlier age. It is expensive, absorbs far too much time, adds little value, and should be replaced by a more appropriate performance management model [131 p. 4], emphasis added.

Readers of this textbook should at this point notice that many of the Beyond Budgeting concerns reflect an Agile/Lean perspective. The fallacies of efficiency and central coordination have been discussed throughout this document. However, if an organization’s financial authorities remain committed to these as operating principles, the Digital Transformation will be difficult at best.

Beyond Budgeting proposes a number of principles for understanding and enabling organizational financial performance. These include:

- Event-driven over annual planning
- On-demand resources over centrally allocated resources
- Relative targets (“beating the competition”) over fixed annual targets
- Team-based rewards over individual rewards
- Multi-faceted, multi-level, forward-looking analysis over analyzing historical variances

Internal “Venture” Funding

A handful of companies are even exploring a venture-capital-style budgeting model. Initial funding is provided for MVPs, which can be released quickly, refined according to customer feedback, and relaunched in the marketplace … And subsequent funding is based on how those MVPs perform in the market. Under this model, companies can reduce the risk that a project will fail, since MVPs are continually monitored and development tasks reprioritized … [69].

An Operating Model for Company-Wide Agile Development

As we have discussed previously, product and project management are distinct. Product management, in particular, has more concern for overall business outcomes. If we take this to a logical conclusion, the product portfolio becomes a form of investment portfolio, managed not in terms of schedule and resources, but rather in terms of business results.

This implies the need for some form of internal venture funding model, to cover the initial investment in an MVP. If and when this internal investment bears fruit, it may become the basis for a value stream organization, which can then serve as a vehicle for direct costs and an internal services market (see below). McKinsey reports the following case:

With a rolling backlog and stable funding that decouples annual allocation from ongoing execution, the venture-funded product paradigm is likely to continue growing. A product management mindset activates a variety of useful practices, as we will discuss in the next section.

Options as a Portfolio Strategy

In governing for effectiveness and innovation, one technique is that of options. Related to the idea of options is parallel development. In investing terms, purchasing an option gives the right, but not the obligation, to purchase a stock (or other value) for a given price at a given time. Options are an important tool for investors to develop strategies to compensate for market uncertainty.

What does this have to do with developing digital products?

Product development is so uncertain that sometimes it makes sense to try several approaches at once. This, in fact, was how the program to develop the first atomic bomb was successfully managed. Parallel development is analogous to an options strategy. Small, sustained investments in different development “options” can optimize development payoff in certain situations (see [230], Product Management). When taken to a logical conclusion, such an options strategy starts to resemble the portfolio management approaches of venture capitalists. Venture capitalists invest in a broad variety of opportunities, knowing that most, in fact, will not pan out. See discussion of internal venture funding as a business model.
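The intuition behind parallel development can be illustrated with basic probability. This sketch assumes that each approach succeeds independently with the same probability, which is a simplification; the 30% figure is hypothetical.

```python
def p_at_least_one_success(p_single, n_options):
    """Probability that at least one of n independent attempts succeeds."""
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    if n_options < 0:
        raise ValueError("n_options must not be negative")
    return 1.0 - (1.0 - p_single) ** n_options


P_SINGLE = 0.3  # hypothetical chance that any one approach pans out

for n in (1, 2, 3, 5):
    print(n, "parallel options:", round(p_at_least_one_success(P_SINGLE, n), 3))
```

Each additional option raises the chance that something works, but by a smaller amount than the one before, so the number of options worth funding depends on what each one costs to keep alive.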

It is arguable that the venture-funded model has created different attitudes and expectations towards governance in West Coast “unicorn” culture. However, it would be dangerous to assume that this model is universally applicable. A firm is more than a collection of independent sub-organizations; this is an important line of thought in management theory, starting with Coase’s “The Nature of the Firm” [63].

The idea that “Real Options” were a basis for Agile practices was proposed by Chris Matts [190]. Investment banker turned Agile coach Franz Ivancsich strongly cautions against taking options theory too far, noting that to price them correctly you have to determine the complete range of potential values for the underlying asset [159].

Lean Product Development

Because we never show it on our balance sheet, we do not think of [design-in-process] as an asset to be managed, and we do not manage it.

Managing the Design Factory

The Lean Product Development thought of Don Reinertsen was discussed extensively in Work Management. His emphasis on employing an economic framework for decision-making is relevant to this discussion as well. In particular, his concept of cost of delay is poorly addressed in much IT financial planning, with its concerns for full utilization, efficiency, and variance analysis. Other Lean Accounting thinkers share this concern; for example:

the cost-management system in a Lean environment must be more reflective of the physical operation. It must not be confined to monetary measures but must also include non-financial measures, such as quality and throughput times. [139]

Another useful Reinertsen concept is that of design-in-process. This is an explicit analog to the well-known Lean concept of work-in-process. Reinertsen makes the following points [229 p. 13]:

- Design-in-process is larger and more expensive to hold than work-in-process
- It has much lower turn rates
- It has much higher holding costs (e.g., due to obsolescence)
- The liabilities of design-in-process are ignored due to weaknesses in accounting standards

These concerns give powerful economic incentives for managing throughput and flow, continuously re-prioritizing for the maximum economic benefit and driving towards the Lean ideal of single-piece flow.
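One way to see the economic effect of re-prioritization is to total the cost of delay across a backlog under different orderings. This sketch orders work by cost of delay divided by duration, a heuristic sometimes called weighted shortest job first; the work items and their figures are hypothetical, and the model assumes one item is worked on at a time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkItem:
    name: str
    cost_of_delay: int  # hypothetical: thousands of dollars lost per week
    duration: int       # weeks of effort


def total_delay_cost(sequence):
    """Sum each item's cost of delay times the week in which it finishes."""
    elapsed = 0
    total = 0
    for item in sequence:
        elapsed += item.duration
        total += item.cost_of_delay * elapsed
    return total


def by_cost_of_delay_per_week(items):
    """Order items by cost of delay per week of duration, highest first."""
    return sorted(items,
                  key=lambda item: item.cost_of_delay / item.duration,
                  reverse=True)


backlog = [WorkItem("A", 10, 5), WorkItem("B", 3, 1), WorkItem("C", 8, 2)]
prioritized = by_cost_of_delay_per_week(backlog)

print("Original order:", total_delay_cost(backlog))
print("Prioritized order:", [item.name for item in prioritized],
      total_delay_cost(prioritized))
```

With these figures, doing the short, urgent items first lowers the total delay cost, even though the largest item finishes in the same week either way.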

Lean Accounting

It was not enough to chase out the cost accountants from the plants; the problem was to chase cost accounting from my people’s minds.

There are several often-cited motivations for cost accounting [139 p. 13]:

- Inventory valuation (not applicable for intangible services)
- Informing pricing strategy
- Management of production costs

IT service costing has long presented additional challenges to traditional cost accounting. As IT Financial Management Association president Terry Quinlan notes, “Many factors have contributed to the difficulty of planning Electronic Data Processing (EDP) expenditures at both application and overall levels. A major factor is the difficulty of measuring fixed and variable cost.” [225 p. 6]

This raises the broader question: should traditional cost accounting be the primary accounting technique used at all? Cost accounting in Lean and Agile organizations is often controversial. Lean pioneer Taiichi Ohno of Toyota thought it was a flawed approach. Huntzinger [139] identifies a variety of issues:

- Complexity
- Un-maintainability
- Supplies information “too late” (i.e., does not support fast feedback)
- “Overhead” allocations result in distortions

Shingo Prize winner Steve Bell observes:

The trend in Lean Accounting has been to simplify. A guiding ideal is seen in the Wikipedia article on Lean Accounting:

The “ideal” for a manufacturing company is to have only two types of transactions within the production processes; the receipt of raw materials and the shipment of finished product.

Concepts such as value stream orientation, internal market economics, and service brokering all can contribute towards achieving this ideal.

Value Stream Orientation

Collecting costs into traditional financial accounting categories, like labor, material, overhead, selling, distribution, and administrative, will conceal the underlying cost structure of products … The alternative to traditional methods … is the creation of an environment that moves indirect costs and allocation into direct costs. [139]

Lean Cost Management

As discussed above, Lean thinking discourages the use of any concept of overhead, sometimes disparaged as “peanut butter” costing. Rather, direct assignment of all costs to the maximum degree is encouraged, in the interest of accounting simplicity.

We discussed a venture-funded product model above, as an alternative to project-centric approaches. Once a product has proven its viability and becomes an operational concern, it becomes the primary vehicle for those concerns previously handled through cost accounting and chargeback. The term for a product that has reached this stage is “value stream”. As Huntzinger notes, “Lean principles demand that companies align their operations around products and not processes” [139 p. 19].

By combining a value stream orientation with organizational principles such as frugality, internal market economics, and decentralized decision-making (see, for example, [131 p. 12]), both Lean and Beyond Budgeting argue that more customer-satisfying and profitable results will ensue. The fact that the product, in this case, is digital (not manufactured), and the value stream centers around product development (not production) does not change the argument.

Internal Market Economics

value stream and product line managers, like so much in the Lean world, are “fractal”.

Lean Thinking

Coordinate cross-company interactions through “market-like” forces.

Beyond Budgeting Questions and Answers

IT has long been viewed as a “business within a business”. In the internal market model, services consume other services ad infinitum [195]. Sometimes the relationship is hierarchical (an application team consuming infrastructure services) and sometimes it is peer-to-peer (an application team consuming another’s services, or a network support team consuming email services, which in turn require network services).

The growing range of sourcing options, including various cloud options, makes it more and more important that internal digital services be comparable to external markets. This, in turn, puts constraints on traditional IT cost recovery approaches, which often result in charges with no seeming relationship to reasonable market prices.

There are several reasons for this. One commonly cited reason is that internal IT costs include support services, and therefore cannot fairly be compared to simple retail prices (e.g., for a computer as a good).

Another, more insidious reason is the rolling in of unrelated IT overhead to product prices. We have quoted James Huntzinger’s work above in various places on this topic. Dean Meyer has elaborated this topic in greater depth from an IT financial management perspective, calling for some organizational “goods” to be funded as either ventures (similar to the discussion above) or “subsidies” (for enterprise-wide benefits such as technical standardization) [195 p. 92].

As discussed above, a particularly challenging form of IT overhead is excess capacity. The saying “the first person on the bus has to buy the bus” is often used in IT shared services, but is problematic. A new, venture-funded startup cannot operate this way — expecting the first few customers to fund the investment fully! Nor can this work in an internal market, unless heavy-handed political pressure is brought to bear. This is where internal venture funding is required.

Meyer presents a sophisticated framework for understanding and managing an internal market of digital services. This is not a simple undertaking; for example, correctly setting service prices can be surprisingly complex.

Service Brokerage

Finally, there is the idea that digital or IT services should be aggregated and “brokered” by some party (perhaps the descendant of a traditional IT organization). In particular, this helps with capacity management, which can be a troublesome source of internal pricing distortions. This has been seen not only in IT services, but in Lean attention to manufacturing; when unused capacity is figured into product cost accounting, distortions occur (see [139], Chapter 7, “Church and Excess Capacity”).

Applying Meyer’s principles, excess capacity would be identified as a distinct line item, funded as either a subsidy or a venture.

But cloud services can assist even further. Excess capacity often results from the quantities available in the market; e.g., one purchases hardware in large-grained capital units. When more flexibly priced, expensed, on-demand compute services are available, it is feasible to allocate and de-allocate capacity on demand, eliminating the need to account for excess capacity.
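To make the point about large-grained capital units concrete, here is a minimal Python sketch. It assumes hardware must be bought in whole units sized for the peak period, and it reports the unused capacity in every other period. The function names, the unit size of 64, and the monthly demand figures are illustrative assumptions, not data from any real organization.

```python
import math
from typing import List


def owned_capacity(peak_demand: int, unit_size: int) -> int:
    """Capacity that must be owned when hardware is bought in whole units."""
    if unit_size <= 0:
        raise ValueError("unit_size must be positive")
    return math.ceil(peak_demand / unit_size) * unit_size


def excess_by_period(demand: List[int], unit_size: int) -> List[int]:
    """Unused owned capacity in each period, when sized for the peak period."""
    capacity = owned_capacity(max(demand), unit_size)
    return [capacity - d for d in demand]


# Hypothetical monthly demand, in arbitrary compute units.
monthly_demand = [30, 45, 70, 40]

print(owned_capacity(max(monthly_demand), 64))  # 128
print(excess_by_period(monthly_demand, 64))     # [98, 83, 58, 88]
```

With on-demand services, allocated capacity can track demand period by period, so the excess in this simplified model would be zero. In practice, minimum billing increments or reserved commitments may reintroduce some unused capacity.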

Evidence of Notability

Financial management in IT and digital systems has a long history of focused discussion; e.g., [225] and the work of the IT Financial Management Association and the TBM Council.

Limitations

IT financial management is a focused subset of financial management in general.

Related Topics

Digital Sourcing and Contracts

Description

Digital sourcing is the set of concerns related to identifying suppliers (sources) of the necessary inputs to deliver digital value. Contract management is a critical, subsidiary concern, where digital value intersects with law.

The basic classes of inputs include:

- People (with certain skills and knowledge)
- Hardware
- Software
- Services (which themselves are composed of people, hardware, and/or software)

Practically speaking, these inputs are handled by two different functions:

- People (in particular, full-time employees) are handled by a human resources function
- Hardware, software, and services are handled by a procurement function
  - Other terms associated with this are Vendor Management, Contract Management, and Supplier Management; we will not attempt to clarify or rationalize these areas in this section

We discussed hiring and managing digital specialists in the Competency Category of IT Human Resources Management.

Basic Concerns

A small company may establish binding agreements with vendors relatively casually. For example, when the founder first chose a cloud platform on which to build their product, they clicked on a button that said “I accept”, at the bottom of a lengthy legal document they didn’t necessarily read closely. This “clickwrap” agreement is a legally binding contract, which means that the terms and conditions it specifies are enforceable by the courts.

A startup may be inattentive to the full implications of its contracts for various reasons:

- The founder does not understand the importance and consequences of legally binding agreements
- The founder understands but feels they have little to lose (for example, they have incorporated as a limited liability company, meaning the founder’s personal assets are not at risk)
- The service is perceived to be so broadly used that an agreement with it must be safe (if 50 other startups are using a well-known cloud provider and prospering, why would a startup founder spend precious time and money on overly detailed scrutiny of its agreements?)

All of these assumptions bear some risk — and many startups have been burned on such matters — but there are many other, perhaps more pressing risks for the founder and startup.

However, by the time the company has scaled to the team of teams level, contract management is almost certainly a concern of the Chief Financial Officer. The company has more resources (“deeper pockets”), and so lawsuits start to become a concern. The complexity of the company’s services may require more specialized terms and conditions. Standard “boilerplate” contracts thus are replaced by individualized agreements. Concerns over intellectual property, the ability to terminate agreements, liability for damages, and other topics require increased negotiation and counterproposing contractual language. See the case study on the 9 figure true-up for a grim scenario.

At this point, the company may have hired its own legal professional; certainly, legal fees are going up, whether as services from external law firms or internal staff.

Contract and vendor management is more than just establishing the initial agreement. The ongoing relationship must be monitored for its consistency with the contract. If the vendor agrees that its service will be available 99.999% of the time, the availability of that service should be measured, and if it falls below that threshold, contractual penalties may need to be applied.
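The availability check described above can be sketched in a few lines of Python. This is a minimal illustration, assuming availability is measured as the fraction of a reporting period during which the service was up. The function names, the 30-day period, and the five minutes of downtime are hypothetical, not terms from any real contract.

```python
def measured_availability(period_minutes: float, downtime_minutes: float) -> float:
    """Fraction of the reporting period during which the service was available."""
    if period_minutes <= 0:
        raise ValueError("period_minutes must be positive")
    if not 0 <= downtime_minutes <= period_minutes:
        raise ValueError("downtime_minutes must lie within the period")
    return (period_minutes - downtime_minutes) / period_minutes


def allowed_downtime_minutes(period_minutes: float, target: float) -> float:
    """Downtime budget implied by a contracted availability target."""
    return period_minutes * (1 - target)


def sla_breached(period_minutes: float, downtime_minutes: float, target: float) -> bool:
    """True when measured availability falls below the contracted target."""
    return measured_availability(period_minutes, downtime_minutes) < target


PERIOD = 30 * 24 * 60   # a hypothetical 30-day reporting period, in minutes
TARGET = 0.99999        # the 99.999% figure used in the text

# The downtime budget works out to roughly 0.43 minutes (about 26 seconds).
print(round(allowed_downtime_minutes(PERIOD, TARGET), 3))
# Five minutes of recorded downtime would breach the target.
print(sla_breached(PERIOD, 5, TARGET))
```

Whether a breach actually triggers a penalty depends on the contract's own definitions (measurement method, exclusions for planned maintenance, and so on), which this sketch does not model.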

In larger organizations, where a vendor might hold multiple contracts, a concept of “vendor management” emerges. Vendors may be provided with “scorecards” that aggregate metrics which describe their performance and the overall impression of the customer. Perhaps key stakeholders are internally surveyed as to their impression of the vendor’s performance and whether they would be likely to recommend them again. Low scores may penalize a vendor’s chances in future Request for Information (RFI)/Request for Proposal (RFP) processes; high scores may enhance them. Finally, advising on sourcing is one of the main services an Enterprise Architecture group may provide.
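One way to picture the scorecard idea above is as a weighted average of per-metric scores. The Python sketch below assumes each metric is already normalized to a 0-100 scale. The metric names, weights, and scores are invented for illustration, and real scorecards may aggregate quite differently.

```python
from typing import Dict


def scorecard(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of metric scores (each assumed to be on a 0-100 scale)."""
    missing = set(scores) - set(weights)
    if missing:
        raise ValueError(f"no weight defined for: {sorted(missing)}")
    total_weight = sum(weights[name] for name in scores)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(scores[name] * weights[name] for name in scores) / total_weight


# Hypothetical metrics for one vendor; names and values are assumptions.
vendor_scores = {
    "availability": 90,
    "support_responsiveness": 70,
    "stakeholder_survey": 80,
}
metric_weights = {
    "availability": 0.5,
    "support_responsiveness": 0.25,
    "stakeholder_survey": 0.25,
}

print(scorecard(vendor_scores, metric_weights))  # 82.5
```

The weights encode a judgment about what matters most in the relationship, so they are worth agreeing on with stakeholders before the scores are used in a sourcing decision.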

Outsourcing and Cloud Sourcing

The first significant vendor relationship the startup may engage with is for cloud services. The decision whether, and how much, to leverage cloud computing remains a topic of much industry discussion. The following pros and cons are typically considered [202]:

| Pro | Con |
|---|---|
| Operational costs | Data gravity (scale of data too voluminous to easily migrate the apps and data to the cloud) |
| Public cloud workforce availability (as opposed to private cloud skills) | Security (perception cloud is not as secure) |
| Better capital management (i.e., through expensed cloud services) | Private clouds are improving |
| Ease in providing elastic scalability | Lack of equivalent SaaS offerings for applications being run in-house |
| Agility (faster provisioning of commercial cloud instances) | Significant integration requirements between in-house apps and new ones deployed to cloud |
| Reduce capex (facilities, hardware, software) | Lack of ability to support migration to cloud |
| Promoting innovation (e.g., web-scale applications may require current cloud infrastructure) | Vendor licensing (see 9 figure true-up) |
| Public clouds are rich and mature, with extensive platform capabilities | Network latency (slow response of cloud-deployed apps) |
| Flexible capacity management/resource utilization | Poor transparency of cloud infrastructure |
| | Risk of cloud platform lock-in |

Cloud can reduce costs when deployed in a sophisticated way; if a company has purchased more capacity than it needs, cloud can be more economical (review the section on virtualization economics). However, ultimately, as Abbott and Fisher point out:

Large, rapidly growing companies can usually realize greater margins by using wholly owned equipment than they can by operating in the cloud. This difference arises because IaaS operators, while being able to purchase and manage their equipment cost-effectively, are still looking to make a profit on their services [4 p. 474].

Minimally, cloud services need to be controlled for consumption. Cloud providers will happily allow virtual machines to run indefinitely, and charge for them. An ecosystem of cloud brokers and value-add resellers is emerging to assist organizations with optimizing their cloud dollars.

Software Licensing

As software and digital services are increasingly used by firms large and small, the contractual rights of usage become more and more critical. We mentioned a “clickwrap” licensing agreement above. Software licensing, in general, is a large and detailed topic, one presenting a substantial financial risk to the unwary firm, especially when cloud and virtualization are concerned.

Software licensing is a subset of software asset management, which is itself a subset of IT asset management, discussed in more depth in the material on process management and IT lifecycles. Software asset management in many cases relies on the integrity of a digital organization’s package management; the package manager should represent a definitive inventory of all the software in use in the organization.

Free and open-source software (sometimes abbreviated FOSS) has become an increasingly prevalent and critical component of digital products. While technically “free”, the licensing of such software can present risks. In some cases, open-source products have unclear licensing that puts them at risk of legal conflicts which may impact users of the technology [182]. In other cases, the open-source license may discourage commercial or proprietary use; for example, the GNU General Public License (GPL) requirement for disclosing derivative works causes concern among enterprises [310].

The Role of Industry Analysts

When a company is faced by a sourcing question of any kind, one initial reaction is to research the market alternatives. But research is time-consuming, and markets are large and complex. Therefore, the role of industry or market analyst has developed.

In the financial media, we often hear from “industry analysts” who are concerned with whether a company is a good investment in the stock market. While there is some overlap, the industry analysts we are concerned with here are more focused on advising prospective customers of a given market’s offerings.

Because sourcing and contracting are infrequent activities, especially for smaller companies, it is valuable to have such services. Because they are researching a market and talking to vendors and customers on a regular basis, analysts can be helpful to companies in the purchasing process.

However, analysts take money from the vendors they are covering as well, leading to occasional charges of conflict of interest. How does this work? There are a couple of ways.

First, the analyst firm engages in objective research of a given market segment. They do this by developing a common set of criteria for who gets included, and a detailed set of questions to assess their capabilities.

For example, an analyst firm might define a market segment of “Cloud IaaS” vendors. Only vendors supporting the basic NIST guidelines for IaaS are invited. Then, the analyst might ask: “Do you support SDNs; e.g., Network Function Virtualization?” as a question. Companies that answer “yes” will be given a higher score than companies that answer “no”. The number of questions on a major research report might be as high as 300 or even higher.

Once the report is completed, and the vendors are ranked (analyst firms typically use a two-dimensional ranking, such as the Gartner Magic Quadrant or Forrester Wave), it is made available to end users for a fee. Fees for such research might range from $500 to $5,000 or more, depending on how specialized the topic, how difficult the research, and the ability of prospective customers to pay.

| Large companies (e.g., those in the Fortune 500) typically would purchase an “enterprise agreement”, often defined as a named “seat” for an individual, who can then access entire categories of research. |

Customers may have further questions for the analyst who wrote the research. They may be entitled to some portion of analyst time as part of their license, or they may pay extra for this privilege.

Beyond selling the research to potential customers of a market, the analyst firm has a complex relationship with the vendors they are covering. In our example of a major market research report, the analyst firm’s sales team also reaches out to the vendors who were covered. The conversation goes something like this:

“Greetings. You did very well in our recent research report. Would you like to be able to give it away to prospective customers, with your success highlighted? If so, you can sponsor the report for $50,000.”

Because the analyst report is seen as having some independence, it can be an attractive marketing tool for the vendor, who will often pay (after some negotiating) for the sponsorship. In fact, vendors have so many opportunities along these lines they often find it necessary to establish a function known as “Analyst Relations” to manage all of them.

Software Development and Contracts

Software is often developed and delivered per contractual terms. Contracts are legally binding agreements, typically developed with the assistance of lawyers. As noted in [21 p. 5]: “Legal professionals are trained to act, under legal duty, to advance their client’s interests and protect them against all pitfalls, seen or unseen.” The idea of “customer collaboration over contract negotiation” may strike them as the height of naïveté.

However, Agile and Lean influences have made substantial inroads in contracting approaches.

Arbogast et al. describe the general areas of contract concern:

- Risk, exposure, and liability
- Flexibility
- Clarity of obligations, expectations, and deliverables

They argue that: “An Agile project contract may articulate the same limitations of liability (and related terms) as a traditional project contract, but the Agile contract will better support avoiding the very problems that a lawyer is worried about” [21 p. 12].

So, what is an "Agile" contract?

There are two major classes of contracts:

- Time and materials
- Fixed-price

In a time and materials contract, the contracting firm simply pays the provider until the work is done. This means that the risk of the project overrunning its schedule or budget resides primarily with the firm hiring out the work. While this can work, there is often a desire on the part of the firm to reduce this risk. If you are hiring someone because they claim they are experts and can do the work better, cheaper, and/or quicker than your own staff, it seems reasonable that they should be willing to shoulder some of the risks.

In a fixed-price contract, the vendor providing the service will make a binding commitment that (for example): “we will get the software completely written in nine months for $3 million”. Penalties may be enforced if the software is late, and it is up to the vendor to control development costs. If the vendor does not understand the work correctly, they may lose money on the deal.

Reconciling Agile with fixed-price contracting approaches has been a challenging topic [214]. The desire for control over a contractual relationship is historically one of the major drivers of waterfall approaches. However, since requirements cannot be fully known in advance, this is problematic.

When a contract is signed based on waterfall assumptions, the project management process of change control is typically used to govern any alterations to the scope of the effort. Each change order typically implies some increase in cost to the customer. Because of this, the perceived risk mitigation of a fixed-price contract may become a false premise.

This problem has been understood for some time. Scott Ambler argued in 2005 that: “It’s time to abandon the idea that fixed bids reduce risk. Clients have far more control over a project with a variable, gated approach to funding in which working software is delivered on a regular basis” [16]. Andreas Opelt states: “For Agile IT projects it is, therefore, necessary to find an agreement that supports the balance between a fixed budget (maximum price range) and Agile development (scope not yet defined in detail).”

How is this done? Opelt and his co-authors further argue that the essential question revolves around the project “Iron Triangle”:

- Scope
- Cost
- Deadline

The approach they recommend is determining which of these elements is the “fixed point” and which is estimated. In traditional waterfall projects, the scope is fixed, while costs and deadline must be estimated (a problematic approach when product development is required).

In Opelt’s view, in Agile contracting, costs and deadlines are fixed, while the scope is “estimated”; that is, understood to have some inevitable variability. “… you are never exactly aware of what details will be needed at the start of a project. On the other hand, you do not always need everything that had originally been considered to be important” [214].

Their recommended approach supports the following benefits:

- Simplified adaptation to change
- Non-punitive changes in scope
- Reduced knowledge decay (large “batches” of requirements degrade in value over time)

This is achieved through:

- Defining the contract at the level of product or project vision (epics or high-level stories; see discussion of Scrum), not detailed specification
- Developing high-level estimation
- Establishing agreement for sharing the risk of product development variability

This last point, which Opelt et al. term “riskshare”, is key. If the schedule or cost expands beyond the initial estimate, both the supplier and the customer pay, according to an agreed percentage split, which they recommend be between 30% and 70%. If the supplier proportion is too low, the contract essentially becomes time and materials. If the customer proportion is too low, the contract starts to resemble traditional fixed-price.
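The riskshare arithmetic can be illustrated with a short Python sketch. It assumes `supplier_share` is the supplier's fraction of any cost overrun, and that when there is no overrun the customer simply pays the actual cost. That second rule is an assumption made here for completeness, not something the cited authors specify. The function names and dollar figures are hypothetical.

```python
from typing import Tuple


def split_overrun(estimate: float, actual: float, supplier_share: float) -> Tuple[float, float]:
    """Return (customer_pays, supplier_absorbs) under a riskshare agreement."""
    if not 0.0 <= supplier_share <= 1.0:
        raise ValueError("supplier_share must be between 0 and 1")
    overrun = max(actual - estimate, 0.0)
    supplier_absorbs = overrun * supplier_share
    # Assumption: with no overrun, the customer pays the actual cost.
    customer_pays = min(actual, estimate) + (overrun - supplier_absorbs)
    return customer_pays, supplier_absorbs


def within_recommended_range(supplier_share: float) -> bool:
    """Check the share against the 30% to 70% range mentioned in the text."""
    return 0.30 <= supplier_share <= 0.70


# Hypothetical engagement: $3.0M estimate, $3.6M actual, 50/50 riskshare.
customer, supplier = split_overrun(3_000_000, 3_600_000, 0.5)
print(customer, supplier)              # 3300000.0 300000.0
print(within_recommended_range(0.5))   # True
```

Setting `supplier_share` to 0 reproduces time and materials (the customer pays the full actual cost), while setting it to 1 reproduces a traditional fixed price (the customer never pays more than the estimate), which is why the recommended range sits between those extremes.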

Incremental checkpoints are also essential; for example, the supplier/customer interactions should be high bandwidth for the first few sprints, while culture and expectations are being established and the project is developing a rhythm.

Finally, the ability for either party to exit gracefully and with a minimum penalty is needed. If the initiative is testing market response (aka Lean Startup) and the product hypothesis is falsified, there is little point in continuing the work from the customer’s point of view. And, if the product vision turns out to be far more work than either party estimated, the supplier should be able to walk away (or at least insist on comprehensive re-negotiation).

These ideas are a departure from traditional contract management. As Opelt asks: “How can you sign a contract from which one party can exit at any time?” Recall, however, that (if Agile principles are applied) the customer is receiving working software continuously through the engagement (e.g., after every sprint).

In conclusion, as Arbogast et al. argue: “Contracts that promote or mandate sequential lifecycle development increase project risk - an Agile approach … reduces risk because it limits both the scope of the deliverable and extent of the payment [and] allows for inevitable change” [21 p. 13].

Evidence of Notability

Sourcing, and outsourcing, have long been key topics in digital and IT management. The option to allocate some level of responsibility to external parties in exchange for compensation has been an aspect of digital management since the first computers became available.

Limitations

The decision to outsource some or all of an IT organization to a third party is often cast in terms of "it’s not our core competency". However, Digital Transformation is resulting in some companies changing their minds and bringing digital systems development and operation back in-house, as a key business competency.

Related Topics

Portfolio Management

Description

Now that we understand the coordination problem better, and have discussed finance and sourcing, we are prepared to make longer-term commitments to a more complicated organizational structure. As we stated in the Competency Area introduction, one way of looking at these longer-term commitments is as investments. We start them, we decide to continue them, or we decide to halt (exit) them. In fact, we could use the term “portfolio” to describe these various investments; this is not a new concept in IT management.

| The first comparison of IT investments to a portfolio was in 1974, by Richard Nolan in Managing the Data Resource Function [210]. |

Whatever the context for your digital products (external or internal), they are intended to provide value to your organization and ultimately your end customer. Each of them in a sense is a “bet” on how to realize this value (review the Spotify DIBB model), and represents in some sense a form of product discovery. As you deepen your abilities to understand investments, you may find yourself applying business case analysis techniques in more rigorous ways, but as always retaining a Lean Startup experimental mindset is advisable.

As you strengthen a hypothesis in a given product or feature structure, you increasingly formalize it: a clear product vision supported by dedicated resources. We will discuss the IT portfolio concept further in [portfolio-management]. In your earliest stages of differentiating your portfolio, you may first think about features versus components.

Features versus Components

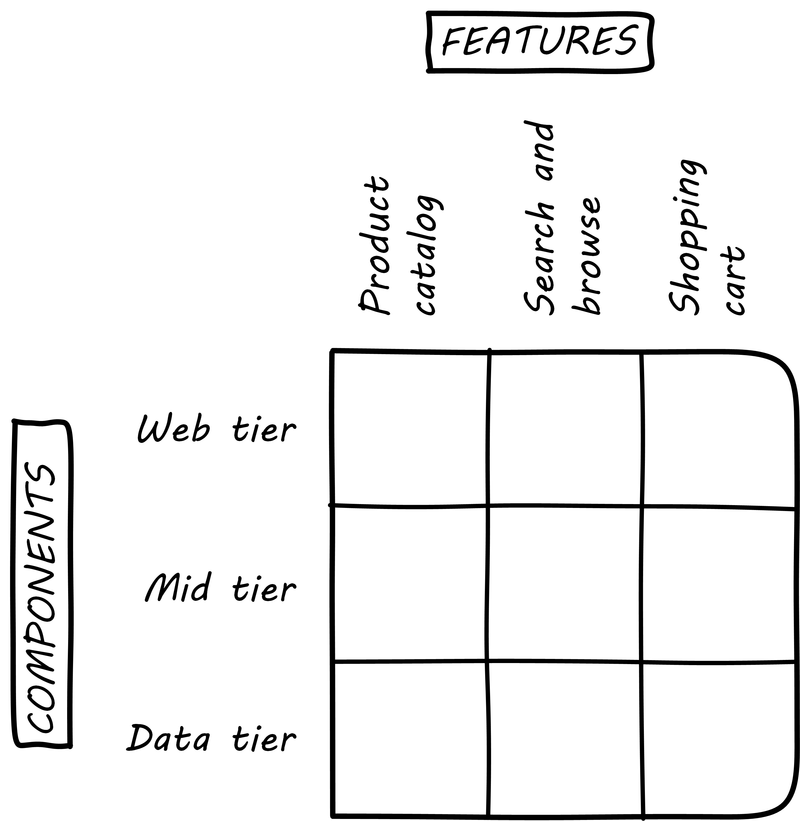

As you consider your options for partitioning your product, in terms of the AKF scaling cube, a useful and widely adopted distinction is that between “features” and “components” (see Features versus Components).

Features are what your product does. They are what the customers perceive as valuable. Mark Kennaley describes them as “scope as viewed by the customer” [164 p. 169]. They may be “flowers”, defined by the value they provide externally and encouraged to evolve with some freedom. You may be investing in new features using Lean Startup, the Spotify DIBB model, or some other hypothesis-driven approach.

Components are how your product is built, such as database and web components. In other words, they are a form of infrastructure (but infrastructure you may need to build yourself, rather than just spin up in the cloud). They are more likely to be “cogs” — more constrained and engineered to specifications. Mike Cohn defines a component team as “a team that develops software to be delivered to another team on the project rather than directly to users” [68 p. 183].

Feature teams are dedicated to a clearly defined functional scope (such as “item search” or “customer account lookup”), while component teams are defined by their technology platform (such as “database” or “rich client”). Component teams may become shared services, which need to be carefully understood and managed (more on this to come). A component’s failure may affect multiple feature teams, which makes components riskier.

It may be easy to say that features are more important than components, but this can be carried too far. Do you want each feature team choosing its own database product? This might not be the best idea; you will have to hire specialists for each database product chosen. Allowing feature teams to define their own technical direction can result in brittle, fragmented architectures, technical debt, and rework. Software product management needs to be a careful balance between these two perspectives. SAFe suggests that components are relatively:

- More technically focused
- More generally re-usable

than features. SAFe also recommends a ratio of roughly 20%-25% component teams to 75%-80% feature teams [246].

Mike Cohn suggests the following advantages for feature teams [68 pp. 183-184]:

- They are better able to evaluate the impact of design decisions
- They reduce hand-off waste (a coordination problem)
- They present less schedule risk
- They maintain focus on delivering outcomes

He also suggests [68 pp. 186-187] that component teams are justified when:

- Their work will be used by multiple teams
- They reduce the sharing of specialists across teams
- The risk of multiple approaches outweighs the disadvantages of a component team

Ultimately, the distinction between “feature versus component” is similar to the distinction between “application” and “infrastructure”. Features deliver outcomes to people whose primary interests are not defined by digital or IT. Components deliver outcomes to people whose primary interests are defined by digital or IT.

Epics and New Products

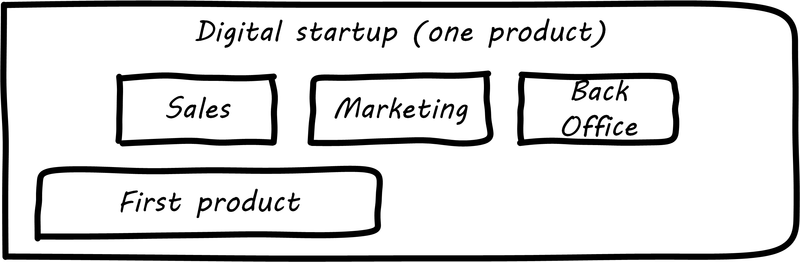

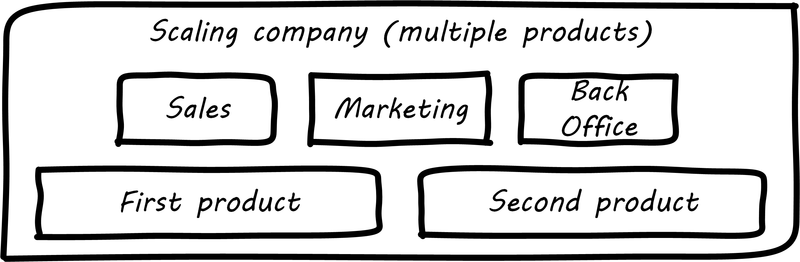

In the coordination Competency Category, we talked of one product with multiple feature and/or component teams (see One Company, One Product). Features and components as we are discussing them here are large enough to require separate teams (with new coordination requirements). At an even larger scale, we have new product ideas, perhaps first seen as epics in a product backlog.

Eventually, larger and more ambitious initiatives lead to a key organizational state transition: from one product to multiple products. Consider our hypothetical startup company. At first, everyone on the team is supporting one product and dedicated to its success. There is little sense of contention with “others” in the organization. This changes with the addition of a second product team with different incentives (see One Company, Multiple Products). Concerns for fair allocation and a sense of internal competition naturally arise out of this diversification. Fairness is deeply wired into human (and animal) brains, and the creation of a new product with an associated team provokes new dynamics in the growing company.

Because resources are always limited, it is critical that the demands of each product be managed using objective criteria, requiring formalization. This was a different problem when you were a tight-knit startup; you were constrained, but everyone knew they were “in it together”. Now you need some ground rules to support your increasingly diverse activities. This leads to new concerns:

- Managing scope and preventing unintended creep or drift from the product’s original charter
- Managing contention for enterprise or shared resources
- Execution to timeframes (e.g., the critical trade show)
- Coordinating dependencies (e.g., achieving larger, cross-product goals)
- Maintaining good relationships when a team’s success depends on another team’s commitment
- Accountability for results

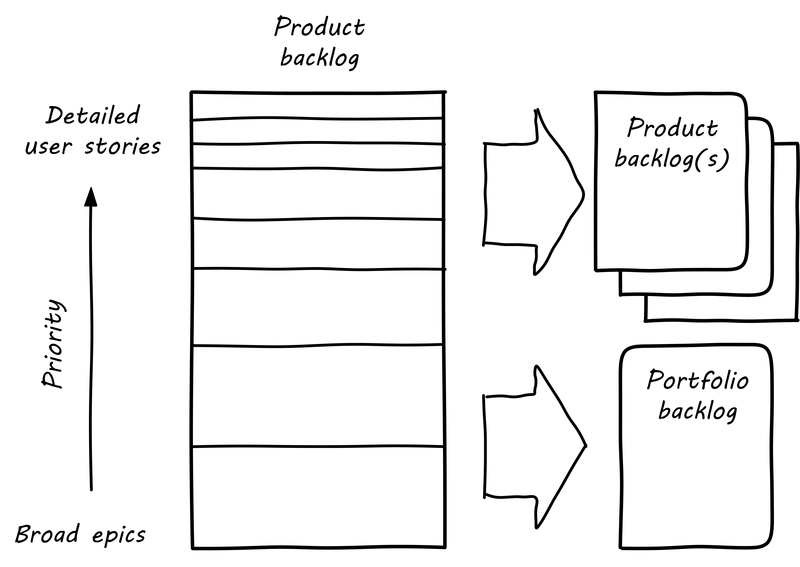

Structurally, we might decide to separate a portfolio backlog from the product backlog. What does this mean?

- The portfolio backlog is the list of potential new products in which the organization might invest
- Each product team still has its own backlog of stories (or other representations of their work)

The DEEP backlog we discussed in Work Management gets split accordingly (see Portfolio versus Product Backlog).

The decision to invest in a new product should not be taken lightly. When the decision is made, the actual process is as we covered in Product Management: ideally, a closed-loop, iterative process of discovering a product that is valuable, usable, and feasible.

There is one crucial difference: the investment decision is formal and internal. While we started our company with an understanding of our investment context, we looked primarily to market feedback and grew incrementally from a small scale. (Perhaps there was venture funding involved, but this document doesn’t cover that.)

Now, we may have a set of competing ideas on which we are thinking about placing bets. In order to make a rational decision, we need to understand the costs and benefits of the proposed initiatives. This is difficult to do precisely, but how can we rationally choose otherwise? We have to make some assumptions and estimate the likely benefits and the effort it might take to realize them.

Larger-Scale Planning and Estimating

Fundamentally, we plan for two reasons:

- To decide whether to make an investment
- To ensure the investment’s effort progresses effectively and efficiently

We have discussed investment decision-making in terms of the overall business context, in terms of the product roadmap, the product backlog, and in terms of Lean Product Development and cost of delay. As we think about making larger-scale, multi-team digital investments, all of these practices come together to support our decision-making process. Estimating the likely time and cost of one or more larger-scale digital product investments is not rocket science; doing so is based on the same techniques we have used at the single-team, single-product level.

Increasing scope of work and a longer time horizon tend to bring increasing uncertainty. We know that we will use fast feedback and ongoing hypothesis-driven development to control for this uncertainty. But at some point, we either make a decision to invest in a given feature or product and start the hypothesis testing cycle, or we don’t.

Once we have made this decision, there are various techniques we can use to prioritize the work so that the most significant risks and hypotheses are addressed soonest. But in any case, when large blocks of funding are at issue, there will be some expectation of monitoring and communication. In order to monitor, we have to have some kind of baseline expectation to monitor against. Longer-horizon artifacts such as the product roadmap and release plan are usually the basis for monitoring and reporting on product or initiative progress.

In planning and execution, we seek to optimize the following contradictory goals:

- Delivering maximum value (outcomes)
- Minimizing the waste of un-utilized resources (people, time, equipment, software)

Obviously, we want outcomes — digital value — but we want it within constraints. It has to be within a timeframe that makes economic sense. If we pay 40 people to do work that a competitor or supplier can do with three, we have not produced a valuable outcome relative to the market. If we take 12 months to do something that someone else can do in five, again, our value is suspect. If we purchase software or hardware we don’t need (or before we need it) and, as a result, our initiative’s total costs go up relative to alternatives, we again may not be creating value. Many of the techniques suggested here are familiar to formal project management. Project management has the deepest tools, and whether or not you use a formal project structure, you will find yourself facing similar thought processes as you scale.

To meet these value goals, we need to:

- Estimate so that expected benefits can be compared to expected costs, ultimately to inform the investment decision (start, continue, stop)
- Plan so that we understand dependencies (e.g., when one team must complete a task before another team can start theirs)

Estimation sometimes causes controversy. When a team is asked for a projected delivery date, the temptation for management is to “hold them accountable” for that date and penalize them for not delivering value by then. But product discovery is inherently uncertain, and therefore such penalties can seem arbitrary. Experiments show that when animals are penalized unpredictably, they fall into a condition known as “learned helplessness”, in which they stop trying to avoid the penalties [304].

We discussed various coordination tools and techniques previously. Developing plans for understanding dependencies is one of the best known such techniques. An example of such a planning dependency would be that the database product should be chosen and configured before any schema development takes place (this might be a component team working with a feature team).

Planning Larger Efforts

… many large projects need to announce and commit to deadlines many months in advance, and many large projects do have inter-team dependencies …

Agile Estimating

Agile and adaptive techniques can be used to plan larger, multi-team programs. Again, we have covered many fundamentals of product vision, estimation, and work management in earlier material. Here, we are interested in the concerns that emerge at a larger scale, which we can generally class into:

- Accountability
- Coordination
- Risk management

Accountability

With larger blocks of funding comes higher visibility and inquiries as to progress. At a program level, differentiating between estimates and commitments becomes even more essential.

Coordination

Mike Cohn suggests that larger efforts specifically can benefit from the following coordination techniques [67]:

- Estimation baseline (velocity)
- Key details sooner
- Lookahead planning
- Feeding buffers

Estimating across multiple teams is difficult without a common scale, and Cohn proposes an approach for determining this in terms of team velocity. He also suggests that, in larger projects, some details will require more advance planning (it is easy to see APIs as being one example), and some team members' time should be devoted to planning for the next release. Finally, where dependencies exist, buffers should be used; that is, if Team A needs something from Team B by May 1, Team B should plan on delivering it by April 15.
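The feeding-buffer example above amounts to simple date arithmetic, sketched here in Python. The function name and the year are assumptions for illustration. The 16-day buffer is simply the gap between April 15 and May 1 in the example, not a recommended buffer size.

```python
from datetime import date, timedelta


def buffered_delivery_date(needed_by: date, buffer_days: int) -> date:
    """Date the supplying team should plan to deliver, given a feeding buffer."""
    if buffer_days < 0:
        raise ValueError("buffer_days must not be negative")
    return needed_by - timedelta(days=buffer_days)


# Team A needs the deliverable by May 1 (the year is arbitrary).
team_a_needs_by = date(2025, 5, 1)

# A 16-day buffer gives Team B a planned delivery date of April 15.
print(buffered_delivery_date(team_a_needs_by, 16))  # 2025-04-15
```

How large the buffer should be is a judgment call that depends on how uncertain the supplying team's estimate is and how costly a delay would be for the dependent team.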

Risk Management

Finally, risk and contingency planning is essential. In developing any plan, Abbott and Fisher recommend the “5-95 rule”: 5% of the time on building a good plan, and 95% of the time planning for contingencies [4 p. 105]. We will discuss risk management in detail in Governance, Risk, Security, and Compliance.

Evidence of Notability

Portfolio management in the IT and digital context has been a topic since at least 1974 [210].

Limitations

IT portfolios (whether conceived as service, project, application, or product portfolios) are significantly different from classic financial portfolios and the usefulness of the metaphor is periodically questioned.

Related Topics

The Digital Product or Service Catalog

Description

A Digital Product or Service Catalog is an organized and curated collection of any and all business and IT-related services that can be performed by, for, or within an enterprise [306].

(Though the above serves as a good general definition of a Service Catalog, this section will explain some complexities around this naming and related concepts.)

When an organization reaches a team of teams level, teams need some way to start exposing their digital products and services to other teams and individuals in the organization.

If your organization is a SaaS provider or some other external digital product or service provider, at this level, you may also have enough differentiation in your digital product or service offerings that your public-facing digital Product or Service Catalog requires more formalized management.

| Industry terminology is still unsettled: some organizations and software vendors are staying with the “Service Catalog” name, while others now use “Digital Product Catalog” to reflect and promote the growing influence of DPM practices among Digital Practitioners; more on this below. |

“While Service Catalogs are not new, they are becoming increasingly critical to enterprises seeking to optimize IT efficiencies, service delivery, and business outcomes. They are also a way of supporting both business and IT services, as well as optimizing IT for cost and value with critical metrics and insights.”

Digital Product/Service Catalogs can cover various needs and requests, from professional service requests to end-user IT support requests, to user access to internally delivered software and applications, to support for third-party cloud services across the board, to support for business (non-IT) services which are managed through an integrated service desk [88].

Digitally savvy organizations pursue commoditization and enhanced self-service automation for many service request types.

The most recent trends reflect an increasing number of organizations using Artificial Intelligence (AI) to automate service requests and using Service Catalogs to manage cloud services.

| Digital Product/Service Examples by Type and Delivery Option | DevOps | Low/No-Code | Externally Sourced (SIAM) |
|---|---|---|---|
| High touch | Requests to change internally developed services | Access to internal professional services (e.g., SRE/security consultations) | External professional services |
| Commodity | Rare | Internal workflows (ESM) - can include provisioning of both internally and externally sourced operational services (e.g., IaaS) | BPO |
| Operational/Application | DevOps-driven strategic digital services | More complex low/no code-based development | SaaS |
| Operational/Infrastructure | Infrastructure platforms; e.g., the DevOps pipeline itself | N/A | PaaS, IaaS, and SIAM |

Service Catalog Management Approaches: APM versus SPM versus DPM

Though Service Catalogs have been around for a long time, the management concepts underpinning them are evolving.

Traditionally, Service Catalogs have been developed in the service of the larger management approaches of Application Portfolio Management (APM) or Service Portfolio Management (SPM), but are increasingly being driven by Digital Product Management (DPM) concepts; below is a short summary of this evolution.

Application Portfolio Management (APM)

For larger organizations, one approach to keeping tabs on every IT product is APM [185]. It is commonly associated with Enterprise Architecture practices, where the application architecture is needed to manage and make broad-sweeping technology or data changes. These would include efforts such as understanding the impact of choosing a different database vendor, deciding to port internally managed applications into the cloud, or addressing regulatory requirements like Sarbanes-Oxley (SOX), Health Insurance Portability and Accountability Act (HIPAA), or Payment Card Industry (PCI), which impact many different applications, even if they don’t have an active team assigned.

APM practices, however, often lack operational context: they typically summarize the detail from CMDBs and support metrics to give a rough idea of how well the applications are performing, but without great fidelity.

Service Portfolio Management (SPM)

From an operational context, some organizations use SPM practices to manage the services being consumed, focusing on ticket generation, consumption measurement, and user satisfaction of the IT products after they have been put into operational status. The SPM practices have strong operational management roots, pursuing operational concerns such as reliability, stability, and other support-oriented metrics.

However, SPM doesn’t often address architectural, planning, and compliance needs well. ITIL V3 introduced Service Strategy [211]; however, in reality few organizations implemented this, and few vendors provide tools to manage services in a strategic/planning context even today.

Common Need to Own and Manage at Every Scale

Although distinct operational and planning needs give rise to the two portfolios, we find there is a common owner who is accountable for both. What APM practices call a “Business Owner” is often the same person SPM practices call a “Service Manager”. Often this common stakeholder is responsible for the business context of the IT product, whether it’s a product used internally or externally.

External versus Internally-Consumed Product Catalog

Another line being blurred is between the consumers of the IT product: some are internal and, increasingly, many are external. For example, human resources systems that traditionally provided only employee-facing functionality now also offer job applicant functionality for external use. The user experience of consuming an IT product therefore needs to be considered in a similar fashion, whether it is an employee experience or a customer-facing one. Employees become frustrated when employers provide a poor employee experience. Worse, a poor customer experience with an IT product can spell disaster, resulting in loss of revenue and, in extreme cases, damage to the company’s brand.

Digital Product Management

Newer concepts have emerged to better manage all aspects of every IT product through the full value stream. DPM bridges the classic APM and SPM worlds into a full lifecycle approach. This portfolio management practice is often used to maintain the planning, delivery, and operational details of the IT product, effectively collapsing two highly separated worlds into one simpler portfolio. It addresses both the portfolio-level challenges introduced at larger scale and the gaps between planning and building an IT product on the one hand and delivering and running it on the other.

To summarize, digital products and services increasingly are understood as the front end of value streams. Digital products and services provide desired outcomes consumers of the product want, and there is a value stream in the execution of a digital product or service.

However, there are also much longer lifecycle management concerns of the digital product or service itself. For Digital Practitioners, it is important to keep in mind that the immediate end-to-end transactional gratification of the digital product or service consumer is a different concern from the management and governance of the full lifecycle of the multiple things that deliver that transactional gratification.

The former is oriented towards the consumer of the digital product or service, while the latter is oriented to the long-term stakeholder (the business owner) of the digital product or service.

Service Catalog versus Request Catalog

Service Catalogs and Request Catalogs are not the same. To clarify the difference, Rob England provides a good analogy:

"A service is a pipe. Customers sign up for a pipe. They select from a Service Catalog. Transactions come out of the pipe. Users request a transaction. They select from a Request Catalog."

England explains the analogy: "The customer is the business entity which is engaging to consume a service. A service provider agrees with another entity as the service customer. The individual people have delegated roles and responsibilities within that relationship including possibly the approval of transactions. If you as an individual buy a service from a service provider you are not personally buying it, you are buying on behalf of your organization.

The person who approves the expenditure on an individual transaction (a request) is not the customer. That is merely part of the internal process for request fulfilment. The person who agrees the initial engagement of the service is the customer.

The customer creates the pipe, the user consumes a transaction out of the pipe." [95]
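England's pipe analogy can be read as a small data model. The sketch below is one possible interpretation, not a schema from England or from any catalog product: the class names `Service` and `Subscription`, their fields, and the sample values are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Service:
    """A Service Catalog entry: the 'pipe' a customer signs up for."""
    name: str
    # Request Catalog for this service: transactions users may request.
    request_catalog: list[str] = field(default_factory=list)


@dataclass
class Subscription:
    """A customer (an organization, not an individual) engaging a service."""
    customer_org: str
    service: Service
    # Transactions consumed out of the pipe, as (user, transaction) pairs.
    requests: list[tuple[str, str]] = field(default_factory=list)

    def request(self, user: str, transaction: str) -> None:
        """A user requests a transaction listed in the Request Catalog."""
        if transaction not in self.service.request_catalog:
            raise ValueError(f"{transaction!r} is not in the Request Catalog")
        self.requests.append((user, transaction))


# The customer creates the pipe by selecting from the Service Catalog.
email = Service("Email", ["Provision mailbox", "Increase quota"])
sub = Subscription("Example Org", email)

# A user consumes a transaction by selecting from the Request Catalog.
sub.request("alice", "Provision mailbox")
print(sub.requests)
```

Keeping the subscription and the individual requests as separate records mirrors the distinction between the customer who agrees the engagement and the users who consume transactions.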

Confusion around the distinction between these two catalogs has also caused confusion for some service-level managers. Charles Betz writes: "Service-level metrics take two forms: First, there are those that measure the fulfill service request process (in this case, how long it took to provision someone’s email). Second, there are those that measure the aggregate performance of the system as a whole, as a "service" to multiple users and to those who paid for it.

This can be confusing, as a list of SLAs may or may not appear consistent with the Service Catalog. The orderable services provide user access to the human resources system, but the SLA may not be about how quickly such access is provided – instead, it may be about the aggregate experience of all the users who have access to the human resources system." [32]
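The two forms of service-level metric described above can be contrasted with a short calculation. This is an illustrative sketch only: the sample figures, the function names, and the choice of availability as the aggregate measure are assumptions, not data or definitions from the source.

```python
from statistics import mean

# Hypothetical hours taken to fulfil each "provision email" request.
fulfilment_hours = [4.0, 6.0, 2.0]

# Hypothetical monthly figures for the email service as a whole.
total_minutes = 30 * 24 * 60   # minutes in a 30-day month
downtime_minutes = 43.2        # assumed total outage time


def mean_fulfilment_hours(samples: list[float]) -> float:
    """Request-level metric: average time to fulfil a service request."""
    return mean(samples)


def availability_pct(total: float, downtime: float) -> float:
    """Aggregate metric: share of time the service was up for all users."""
    return 100.0 * (total - downtime) / total


print(f"Mean fulfilment time: {mean_fulfilment_hours(fulfilment_hours):.1f} h")
print(f"Availability: {availability_pct(total_minutes, downtime_minutes):.1f} %")
```

An SLA written against the second figure says nothing about the first, which is why a list of SLAs may not line up with the orderable items in the catalog.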

Service Requests can Trigger Activities Against an Application Service or a Function

There are also ambiguities around the concept of the service lifecycle. "Some services (e.g., particular application services) have a lifecycle, yet other services (e.g., project management) would in theory always be required by the enterprise.

Some would solve this by saying that the actual "service" is not the application system, but that approach results in pushing important lifecycle activities down to a "system" level.

Another approach is simply to see the Service Catalog as containing distinct transactional/IT-based services and professional services; this is a pragmatic approach. The data implications might be that there is no one conceptual entity containing all "services" – the physical Service Catalog is an amalgamation of the two distinct concepts."

The semantics here are important and can be confusing when not fully understood [32].

Service Catalog Support for Hybrid Cloud, Multi-Cloud, and Service Integration and Management (SIAM)

Multi-cloud is the use of multiple cloud computing and storage services in a single heterogeneous architecture. This also refers to the distribution of cloud assets, software, applications, etc. across several cloud-hosting environments. With a typical multi-cloud architecture utilizing two or more public clouds as well as multiple private clouds, a multi-cloud environment aims to eliminate the reliance on any single cloud provider [306].

Hybrid cloud is a composition of two or more clouds (private, community, or public) that remain distinct entities but are bound together, offering the benefits of multiple deployment models. Hybrid cloud can also mean the ability to connect collocation, managed, and/or dedicated services with cloud resources. A hybrid cloud service crosses isolation and provider boundaries so that it can’t be simply put in one category of private, public, or community cloud service. It allows extension of either the capacity or the capability of a cloud service, by aggregation, integration, or customization with another cloud service [194].