Special section: Systems thinking and feedback

So, what is a system? A system is a set of things—people, cells, molecules, or whatever—interconnected in such a way that they produce their own pattern of behavior over time. The system may be buffeted, constricted, triggered, or driven by outside forces. But the system’s response to these forces is characteristic of itself, and that response is seldom simple in the real world.

Thinking in Systems

Systems thinking, and systems theory, are broad topics extending far beyond IT and the digital profession. Donella Meadows defined a system as “an interconnected set of elements that is coherently organized in a way that achieves something” [184]. Systems are more than the sum of their parts; each part contributes something to the greater whole, and often the behavior of the greater whole is not obvious from examining the parts of the system.

Systems thinking is an important influence on digital management. Digital systems are complex, and when the computers and software are considered in combination with the people using them, we have a sociotechnical system. Digital systems management seeks to create, improve, and sustain these systems.

A digital management capability is itself a complex system. While the term “Information Systems (IS)” was widely replaced by “Information Technology (IT)” in the 1990s, do not be fooled. Enterprise IT is a complex sociotechnical system that delivers the digital services supporting a myriad of other complex sociotechnical systems.

The Merriam-Webster dictionary defines a system as “a regularly interacting or interdependent group of items forming a unified whole.” These interactions and relationships quickly take center stage as you move from individual work to team efforts. Consider that while a two-member team has only one relationship to worry about, a ten-member team has 45, and a 100-person team has 4,950!
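The relationship counts quoted above follow from counting distinct pairs: a team of n people has n(n - 1)/2 pairwise relationships. A minimal Python sketch reproduces the figures; the function name `relationship_count` is an assumption made for illustration, not something defined elsewhere in this book.

```python
def relationship_count(team_size: int) -> int:
    """Return the number of distinct pairwise relationships in a team."""
    if team_size < 0:
        raise ValueError("team_size must be non-negative")
    # Each of the n members can pair with n - 1 others; dividing by 2
    # avoids counting the pair (A, B) again as (B, A).
    return team_size * (team_size - 1) // 2


for size in (2, 10, 100):
    print(f"{size:>3} people -> {relationship_count(size):>5} relationships")
```

The count grows roughly with the square of team size, which is why coordination overhead rises much faster than headcount.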

A thorough discussion of systems theory is beyond the scope of this book. However, many of the ideas that follow are informed by it. Obtaining a working knowledge of systems theory will not only enhance your understanding of this book, but it can also be an essential tool for managing uncertainty in your future career, teams, and organizations. If you are interested in this topic, you might start with Thinking in Systems: A Primer by Donella Meadows [184] and then read An Introduction to General Systems Thinking by Gerald Weinberg [279].

A brief introduction to feedback

The harder you push, the harder the system pushes back.

The Fifth Discipline

As the Senge quote implies, brute force does not scale well within the context of a system. One of the reasons for system stability is feedback. Within the bounds of the system, actions lead to outcomes, which in turn affect future actions. This is a positive thing, as it is required to keep a complex operation on course.

Feedback is a loaded term. We hear terms like positive feedback and negative feedback and quickly associate them with performance coaching and management discipline. That is not the sense of feedback in this book. The definition of feedback used in this book is based on engineering. There is considerable related theory in general engineering, and especially in control theory, and the reader is encouraged to investigate some of these foundations if unfamiliar.

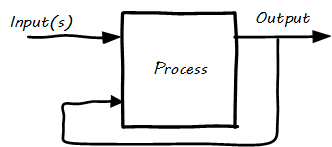

In Reinforcing feedback loop we see the classic illustration of a reinforcing feedback loop:

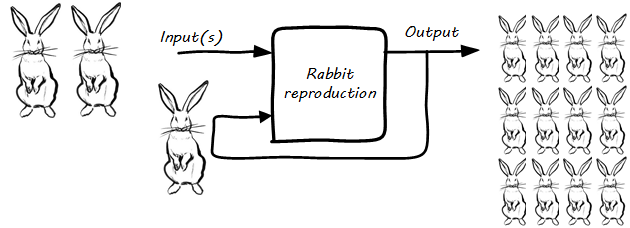

For example (as in Reinforcing (positive?) feedback, with rabbits), we can consider “rabbit reproduction” as a process with a reinforcing feedback loop.

The more rabbits, the faster they reproduce, and the more rabbits. This is sometimes called a “positive” feedback loop, although Mr. McGregor the local gardener may not agree, given that they are eating all his cabbages! This is why feedback experts (e.g., [257]) prefer to call this “reinforcing” feedback: there is not necessarily anything “positive” about it.
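The rabbit loop can be sketched in a few lines of Python. This is an illustrative toy model rather than a biological one: the function name, the starting population of 10, and the 50% growth per period are all assumptions made for the example.

```python
def simulate_reinforcing(initial: float, growth_rate: float, periods: int) -> list[float]:
    """Grow a population where each period's increase is proportional to its size."""
    history = [initial]
    for _ in range(periods):
        current = history[-1]
        # The feedback: the output (population) drives the next input (births).
        history.append(current + growth_rate * current)
    return history


rabbits = simulate_reinforcing(initial=10, growth_rate=0.5, periods=5)
increases = [later - earlier for earlier, later in zip(rabbits, rabbits[1:])]
print(rabbits)    # population per period
print(increases)  # each increase is larger than the one before
```

The point to notice is not the population itself but the increases: because each one is computed from the current population, the growth accelerates for as long as nothing pushes back.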

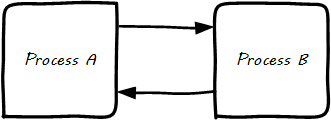

We can also consider feedback as the relationship between TWO processes (see Feedback between two processes).

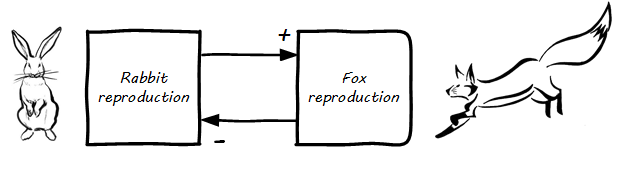

In our rabbit example, what if Process B is fox reproduction; that is, the birth rate of foxes (who eat rabbits) (Balancing (negative?) feedback, with rabbits and foxes)?

More rabbits mean more foxes (notice the “+” symbol on the line) because there are more rabbits to eat! But what does this do to the rabbits? It means FEWER rabbits (the “-” on the line). Which, ultimately, means fewer foxes, and at some point, the populations balance. This is classic negative feedback. However, the local foxes don’t see it as negative (nor do the local gardeners)! That is why feedback experts prefer to call this “balancing” feedback. Balancing feedback can be an important part of a system’s overall stability.
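The two links in the rabbit and fox loop can be made concrete with a one-period update rule. The sketch below is a deliberately simple predator-prey model; the function name `step` and every rate in it (birth rate, predation, conversion of eaten rabbits into new foxes, fox death rate) are assumptions chosen so the arithmetic is easy to follow.

```python
def step(rabbits: float, foxes: float,
         birth_rate: float = 0.1, predation: float = 0.01,
         conversion: float = 0.5, fox_death_rate: float = 0.1) -> tuple[float, float]:
    """Advance both populations by one period."""
    births = birth_rate * rabbits
    eaten = predation * rabbits * foxes   # more foxes -> more rabbits eaten ("-" link)
    fox_births = conversion * eaten       # more rabbits eaten -> more foxes ("+" link)
    fox_deaths = fox_death_rate * foxes
    new_rabbits = max(0.0, rabbits + births - eaten)
    new_foxes = max(0.0, foxes + fox_births - fox_deaths)
    return new_rabbits, new_foxes


baseline = step(rabbits=20, foxes=10)
more_rabbits = step(rabbits=40, foxes=10)
more_foxes = step(rabbits=20, foxes=20)

print("baseline:    ", baseline)
print("more rabbits:", more_rabbits)  # fox count ends higher than baseline
print("more foxes:  ", more_foxes)    # rabbit count ends lower than baseline
```

With these assumed rates, 20 rabbits and 10 foxes is a balance point: births offset predation and fox births offset fox deaths, so one step leaves both counts essentially unchanged. Doubling the rabbits raises the fox count, and doubling the foxes lowers the rabbit count, which is the pair of opposing links that makes the loop balancing.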

Wikipedia has good articles on Causal Loop Diagramming and System Dynamics (with cool dynamic visuals). [257] is the definitive text with applications.

Note: Still confused? Think about the last time you saw a “reply-all” email storm. The first accidental mass send generates feedback (emails saying “take me off this list”), which generate more emails (“stop emailing the list”), and so on. This does not continue indefinitely; management intervention, common sense, and fatigue eventually damp the storm down.

What does systems thinking have to do with IT?

In an engineering sense, positive feedback is often dangerous and a topic of concern. A recent example of bad positive feedback in engineering is the London Millennium Bridge (see London Millennium Bridge [1]). On opening, the Millennium Bridge started to sway alarmingly, due to resonance and feedback which caused pedestrians to walk in cadence, increasing the resonance issues. The bridge had to be shut down immediately and retrofitted with $9 million worth of tuned dampers [72].

As with bridges, at a technical level, reinforcing feedback can be a very bad thing in IT systems. In general, any process that amplifies itself without any balancing feedback will eventually consume all available resources, just as rabbits will eat all the food available to them. So, if you create a process (e.g., write and run a computer program) that recursively spawns itself, it will sooner or later crash the computer as it devours memory and CPU. See Runaway processes.
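A self-spawning process is easy to model safely without actually spawning anything. The sketch below only counts simulated processes; the function names, the one-child-per-tick spawning rule, and the capacity figures are assumptions for illustration.

```python
def ticks_until_exhaustion(capacity: int, children_per_process: int = 1) -> int:
    """Count scheduling ticks until self-spawning processes exceed capacity."""
    if children_per_process < 1:
        raise ValueError("children_per_process must be at least 1")
    running = 1
    ticks = 0
    while running <= capacity:
        # Reinforcing loop: every running process spawns new ones each tick.
        running += running * children_per_process
        ticks += 1
    return ticks


def processes_with_limit(ticks: int, max_processes: int) -> int:
    """Same spawning rule, but a cap acts as balancing feedback."""
    running = 1
    for _ in range(ticks):
        headroom = max_processes - running
        running += min(running, headroom)  # spawn only while under the cap
    return running


print(ticks_until_exhaustion(capacity=1_000))            # 10
print(ticks_until_exhaustion(capacity=1_000_000))        # 20
print(processes_with_limit(ticks=50, max_processes=64))  # 64
```

Making the machine a thousand times larger buys only ten more ticks, so adding capacity is not a defense against a reinforcing loop. The second function shows the alternative: a limit that feeds the current process count back into the spawning decision holds the system at a stable level.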

Balancing feedback, on the other hand, is critical to making sure you are “staying on track.” Engineers use concepts of control theory, for example, damping, to keep bridges from falling down.

Remember in Chapter 1 we talked of the user’s value experience, and also how services evolve over time in a lifecycle? In terms of the dual-axis value chain, there are two primary digital value experiences:

-

The value the user derives from the service (e.g., account lookups, or a flawless navigational experience)

-

The value the investor derives from monetizing the product, or comparable incentives (e.g., non-profit missions)

Additionally, the product team derives career value. This becomes more of a factor later in the game. We will discuss this further in Chapter 7, on organization, and Part IV, on architecture lifecycles and technical debt.

The product team receives feedback from both value experiences. The day-to-day interactions with the service (e.g., help desk and operations) are one source, and (typically on a more intermittent basis) the portfolio investor also feeds information back to the product team (the boss’s boss comes for a visit).

Balancing feedback in a business and IT context takes a wide variety of forms:

-

The results of a product test in the marketplace; for example, users' preference for a drop-down box versus check boxes on a form

-

The product owner clarifying for developers their user experience vision for the product, based on a demonstration of developer work in process

-

The end users calling to tell you the “system is slow” (or down)

-

The product owner or portfolio sponsor calling to tell you they are not satisfied with the system’s value

In short, we see these two basic kinds of feedback:

-

Positive/Reinforcing, “do more of that”

-

Negative/Balancing, “stop doing that,” “fix that”

You should consider:

-

How you are accepting and executing on feedback signals

-

How the feedback relationship with your investors is evolving, in terms of your product direction

-

How the feedback relationship with your users is evolving, in terms of both operational criteria and product direction

One of the most important concepts related to feedback, one we will keep returning to, is that product value is based on feedback. We’ve discussed Lean Startup, which represents a feedback loop intended to discover product value. Don Reinertsen, whose work we will discuss in this chapter, has written extensively on the importance of fast feedback to the product discovery process.

Reinforcing feedback: the special case investors want

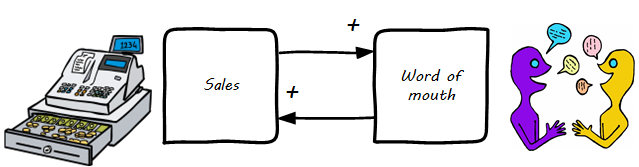

At a business level, there is a special kind of reinforcing feedback that defines the successful business (see The reinforcing feedback businesses want).

This is reinforcing feedback and positive for most people involved: investors, customers, employees. At some point, if the cycle continues, it will run into balancing feedback:

-

Competition

-

Market saturation

-

Negative externalities (regulation, pollution, etc.).

Open versus closed-loop systems

Finally, we should talk briefly about open-loop versus closed-loop systems.

-

Open-loop systems have no regulation, no balancing feedback

-

Closed-loop systems have some form of balancing feedback

In navigation terminology, the open-loop attempt to stick to a course without external information (e.g., navigating in the fog, without radar or communications) is known as "dead reckoning," in part because it can easily get you dead!

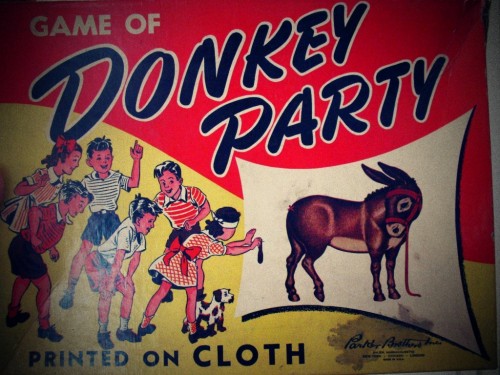

A good example of an open-loop system is the children’s game “pin the tail on the donkey” (see Pin the tail on the donkey [2]). In “pin the tail on the donkey,” a person has to execute a process (pinning a paper or cloth “tail” onto a poster of a donkey — no live donkeys are involved!) while blindfolded, based on their memory of their location (and perhaps after being deliberately disoriented by spinning in circles). Since they are blindfolded, they have to move across the room and pin the tail without the ongoing corrective feedback of their eyes. (Perhaps they are getting feedback from their friends, but perhaps their friends are not reliable).

Without the blindfold, it would be a closed-loop system. The person would rise from their chair and, through the ongoing feedback of their eyes to their central nervous system, would move towards the donkey and pin the tail in the correct location. In the context of a children’s game, the challenges of open-loop may seem obvious, but an important aspect of IT management over the past decades has been the struggle to overcome open-loop practices. Reliance on open-loop practices is arguably an indication of a dysfunctional culture. An IT team that is designing and delivering without sufficient corrective feedback from its stakeholders is an ineffective, open-loop system. Mark Kennaley [151] applies these principles to software development in much greater depth, and is recommended.
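The difference between the blindfolded and sighted player can be sketched as two ways of walking toward a target while something pushes you off course. The model below is an assumption-laden illustration: the function names, the constant `drift` added at every step, and the proportional correction `gain` are all invented for the example, and the closed-loop version is a simple proportional controller rather than anything specific to IT management.

```python
def open_loop(target: float, steps: int, drift: float) -> float:
    """Follow a fixed plan and never look at the actual position."""
    planned_step = target / steps
    position = 0.0
    for _ in range(steps):
        position += planned_step + drift  # the drift goes unnoticed
    return position


def closed_loop(target: float, steps: int, drift: float, gain: float = 0.5) -> float:
    """Observe the remaining error each step and correct a fraction of it."""
    position = 0.0
    for _ in range(steps):
        error = target - position         # observation: the feedback signal
        position += gain * error + drift  # correction plus the same drift
    return position


target = 10.0
blindfolded = open_loop(target, steps=20, drift=0.2)
sighted = closed_loop(target, steps=20, drift=0.2)

print(f"open-loop miss:   {abs(blindfolded - target):.2f}")
print(f"closed-loop miss: {abs(sighted - target):.2f}")
```

In the open-loop walk the unnoticed drift accumulates with every step, so the miss grows with the length of the journey. In the closed-loop walk the same drift is still present, but each observation corrects for it, so the miss stays small and bounded. It does not reach zero here, which is a known property of purely proportional correction.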

Note: No system can ever be fully “open-loop” indefinitely. Sooner or later, you take off the blindfold or wind up on the rocks.

Engineers of complex systems use feedback techniques extensively. Complex systems do not work without them.

OODA

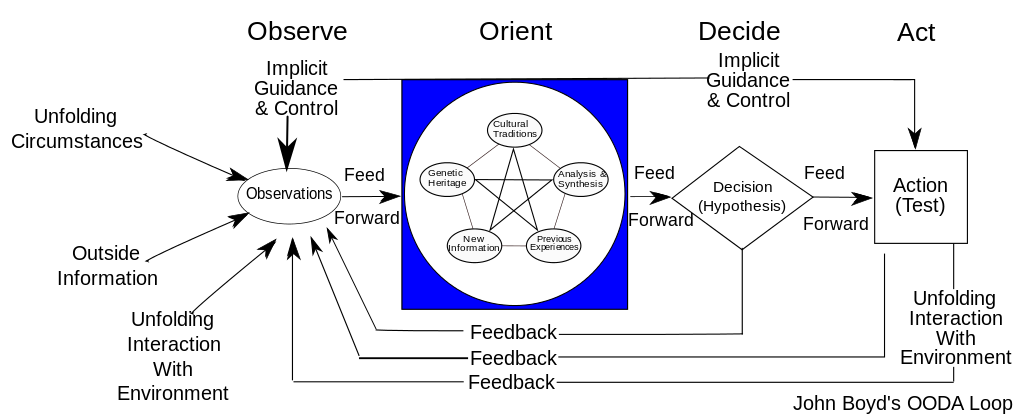

After the Korean War, the U.S. Air Force wished to clarify why its pilots had outperformed opposing pilots who were flying aircraft viewed as more capable. A colonel named John Boyd was tasked with researching the problem. His conclusions are based on the concept of feedback cycles, and how fast humans can execute them. Boyd determined that humans go through a defined process in building their mental model of complex and dynamic situations. This has been formalized in the concept of the OODA loop (see OODA loop [3]).

OODA stands for:

-

Observe

-

Orient

-

Decide

-

Act

Because the U.S. fighters were lighter, more maneuverable, and had better visibility, their pilots were able to execute the OODA loop more quickly than their opponents, leading to victory. Boyd and others have extended this concept into various other domains including business strategy. The concept of the OODA feedback loop is frequently mentioned in presentations on Agile methods. Tightening the OODA loop accelerates the discovery of product value and is highly desirable.

The DevOps consensus as systems thinking

We covered continuous delivery and introduced DevOps in the previous chapter. Systems theory provides us with powerful tools to understand these topics more deeply.

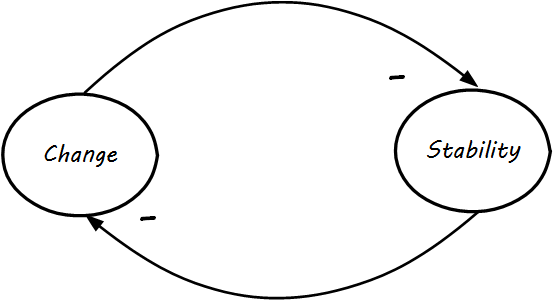

One of the assumptions we encounter throughout digital management is the idea that change and stability are opposing forces. In systems terms, we might use a diagram like Change versus stability (see [26] for the original exploration). As a Causal Loop Diagram (CLD), it says that change and stability are opposed: the more we have of one, the less we have of the other. This is true, as far as it goes: most systems issues occur as a consequence of change, and systems that are not changed generally do not crash as much.

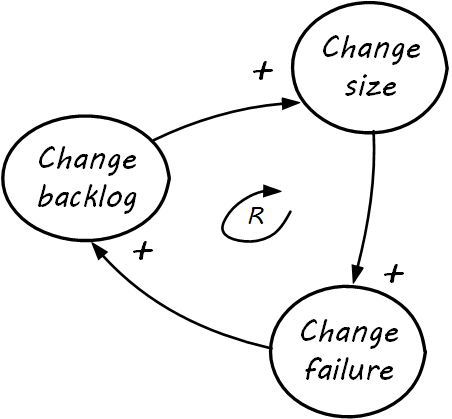

The trouble with viewing change and stability as diametrically opposed is that change is inevitable. If simple delaying tactics are put in place, these can have a negative impact on stability, as in Change vicious cycle. What is this diagram telling us? If the owner of the system tries to prevent change, a larger and larger backlog will accumulate. This usually results in larger and larger attempts to clear the backlog (e.g., large releases or major version updates). These are riskier activities which increase the likelihood of change failure. When changes fail, the backlog is not cleared and continues to increase, leading to further temptation for even larger changes.
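The vicious cycle can be reproduced with a very small simulation. Everything specific in the sketch below is an assumption made for illustration: the function name, the steady arrival of five changes per period, the rule that failure risk rises linearly with release size, and the use of expected values (the backlog shrinks by the success probability times its size) in place of random all-or-nothing outcomes.

```python
def simulate_backlog(periods: int, arrivals_per_period: float,
                     release_interval: int, risk_per_change: float) -> float:
    """Return the backlog left after releasing everything pending at fixed intervals."""
    backlog = 0.0
    for period in range(1, periods + 1):
        backlog += arrivals_per_period  # change requests keep arriving
        if period % release_interval == 0:
            # Assumed rule: bigger releases are proportionally riskier.
            success_probability = max(0.0, 1.0 - risk_per_change * backlog)
            # Expected-value simplification: clear that fraction of the backlog.
            backlog -= success_probability * backlog
    return backlog


frequent = simulate_backlog(periods=100, arrivals_per_period=5,
                            release_interval=1, risk_per_change=0.01)
infrequent = simulate_backlog(periods=100, arrivals_per_period=5,
                              release_interval=10, risk_per_change=0.01)

print(f"release every period:     backlog {frequent:.2f}")
print(f"release every 10 periods: backlog {infrequent:.2f}")
```

Under these assumptions, releasing every period keeps the backlog well under one change, while releasing every tenth period ends with a backlog of more than 450. The infrequent releaser's first release is a coin flip, the second is worse, and from the third onward the release is so large that it is modeled as certain to fail, so the backlog only grows.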

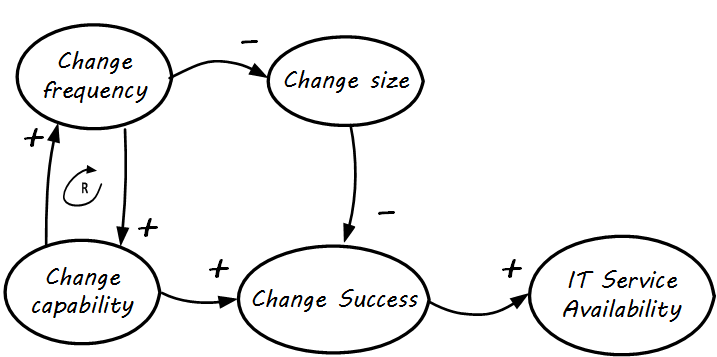

How do we solve this? Decades of thought and experimentation have resulted in continuous delivery and DevOps, which can be shown in terms of systems thinking in The DevOps consensus.

To summarize a complex set of relationships:

-

As change occurs more frequently, it enables smaller change sizes.

-

Smaller change sizes are more likely to succeed (as change size goes up, change success likelihood goes down; hence, it is a balancing relationship).

-

As change occurs more frequently, organizational learning happens (change capability). This enables more frequent change to occur, as the organization learns. This has been summarized as, “if it hurts, do it more” (Martin Fowler in [86]).

-

The improved change capability, coupled with the smaller perturbations of smaller changes, together result in improved change success rates.

-

Improved change success, in turn, results in improved system stability and availability, even with frequent changes. Evidence supporting this de facto theory is emerging across the industry and can be seen in cases presented at the DevOps Enterprise Summit and discussed in The DevOps Handbook [154].

Notice the reinforcing feedback loop (the “R” in the looped arrow) between change frequency and change capability. Like all diagrams, this one is incomplete. Just making changes more frequently will not necessarily improve the change capability; a commitment to improving practices such as monitoring, automation, and so on is required, as the organization seeking to release more quickly will discover.
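The relationships summarized above can be written down as a single function, which makes the caveat in the previous paragraph easier to see. This is a hypothetical model, not a measured one: the function name, the linear risk rule, and the `base_risk` and `learning_rate` parameters are assumptions, with `learning_rate` standing in for deliberate investment in practices such as monitoring and automation.

```python
def change_success_rate(change_size: float, releases_done: int,
                        base_risk: float = 0.01, learning_rate: float = 0.1) -> float:
    """Estimate the chance that a change of the given size succeeds."""
    # Capability grows with practice, but only if learning_rate is above zero.
    capability = 1.0 + learning_rate * releases_done
    failure_risk = min(1.0, base_risk * change_size / capability)
    return 1.0 - failure_risk


print(change_success_rate(change_size=50, releases_done=0))   # large change, no practice
print(change_success_rate(change_size=5, releases_done=0))    # small change, no practice
print(change_success_rate(change_size=5, releases_done=10))   # small change, practiced team
print(change_success_rate(change_size=5, releases_done=10,
                          learning_rate=0.0))                 # frequent change, no learning
```

Shrinking the change raises the estimated success rate from about 0.5 to about 0.95, and ten releases of practice raise it further to about 0.975. Setting `learning_rate` to zero removes that second gain entirely: frequent change without investment in capability gets the benefit of small batches and nothing more.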